Qlik Enterprise Manager Setup and User Guide


May 2023

Last updated: September 02, 2024

Copyright © 1993-2024 QlikTech International AB. All rights reserved.

HELP.QLIK.COM

© 2024 QlikTech International AB. All rights reserved. All company and/or product names may be trade names, trademarks and/or registered trademarks of the respective owners with which they are associated.

Setup and User Guide - Enterprise Manager, May 2023 3

1 What's new? 9

1.1 Support for Personal Access Tokens as a log in method via the Enterprise Manager API 9

2 Introduction 10

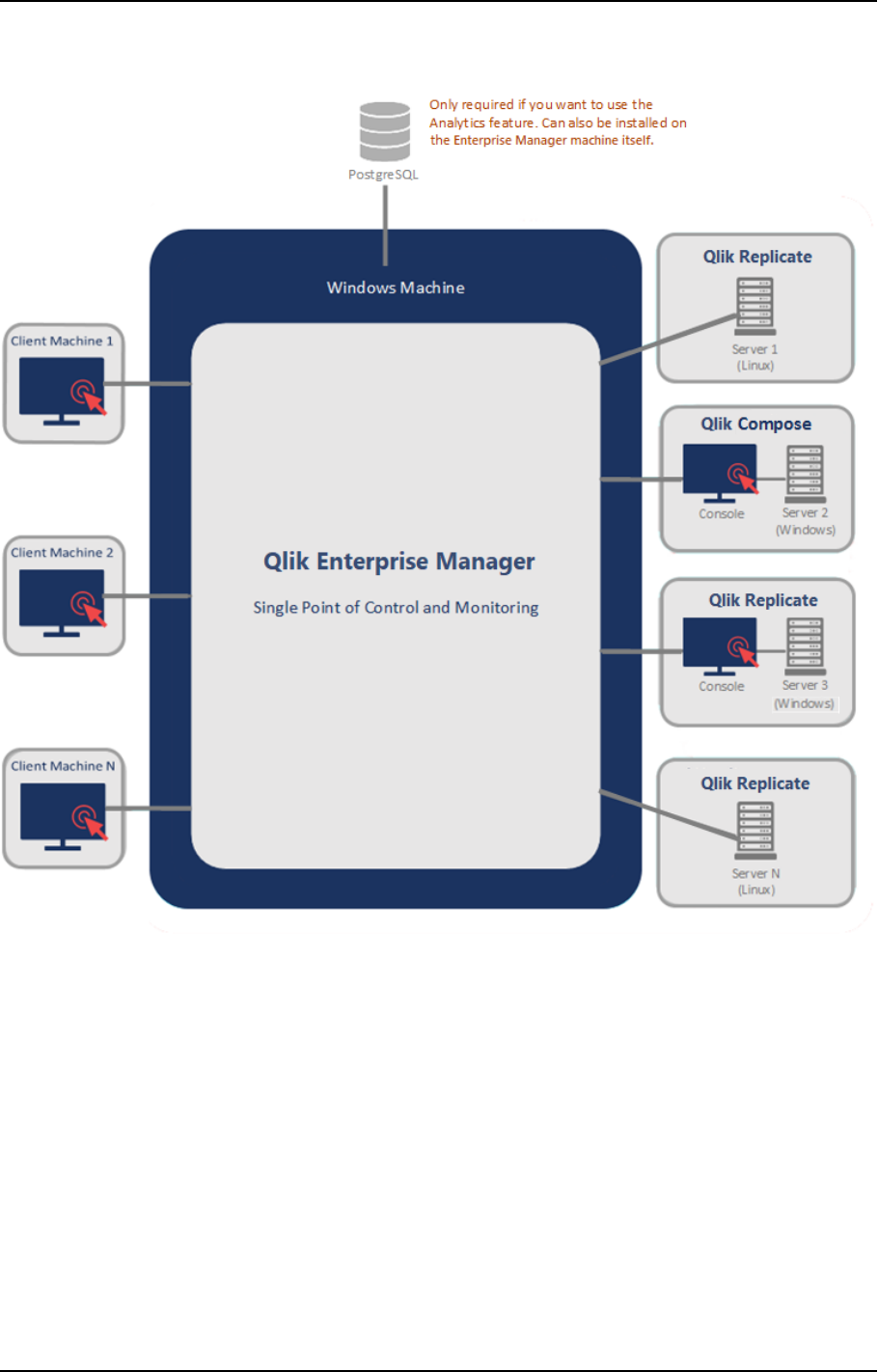

2.1 Example Enterprise Manager environment 11

2.2 Enterprise Manager architecture 12

3 Terminology 13

3.1 Change Data Capture (CDC) 13

3.2 Full load 13

3.3 Apply latency 13

Latency when applying large transactions 13

Latency when no transactions are being applied 13

3.4 Source latency 13

3.5 Target latency 13

3.6 Overall latency 14

3.7 Source endpoint 14

3.8 Target endpoint 14

3.9 Net Changes table 14

4 Installation and setup 15

4.1 Preparing your system for Enterprise Manager 15

Hardware configuration guidelines 15

Sizing guidelines 16

Software requirements 16

Compatibility with Related Qlik Products 17

Replication Management license 17

4.2 Installing Enterprise Manager 18

Installing Qlik Enterprise Manager using the Setup Wizard 19

Upgrading Enterprise Manager 19

Migrating the Enterprise Manager Repository 20

Silently installing Enterprise Manager 21

Silently upgrading Enterprise Manager 22

Silently uninstalling Enterprise Manager 23

4.3 Changing the Enterprise Manager service account 24

4.4 Setting the login authentication method 25

Setting Single Sign-on authentication 25

Setting Single Sign-on authentication with Kerberos 25

Setting form authentication 26

Setting up SAML authentication 27

Setting up Personal Access Token authentication for the API 30

4.5 Starting to work with the Qlik Enterprise Manager Console 33

Registering Licenses 34

5 Security considerations 35

5.1 Setting up HTTPS for the Enterprise Manager console 35

Checking if an SSL Certificate is installed 35

Using the self-signed certificate 35

5.2 Setting up HSTS on Enterprise Manager 38

Enabling HSTS 38

Contents


Disabling HSTS 38

5.3 Replacing the self-signed certificate on Windows 39

5.4 Setting the hostname and changing the SSL port 40

5.5 Replacing the Master User Password 41

The Master Key 41

High Availability mode 42

5.6 Encrypting the user permissions file 42

5.7 Controlling execution of user-defined commands 43

Executing operating system commands as a different user 44

6 Managing servers 45

6.1 Server requirements 45

Qlik Replicate Server requirements 45

Qlik Compose Server requirements 46

6.2 Adding Servers 46

6.3 Monitoring servers 48

Customizing server columns 49

Searching for servers 49

6.4 Server settings 50

Global error handling 50

Resource Control 51

File Transfer Service 52

External utilities 54

Logging 54

More options 58

Server management permissions 58

6.5 Additional server management options 60

6.6 Registering a license for a monitored server 62

6.7 Viewing server messages 63

7 Defining and managing tasks 64

7.1 Adding tasks 64

Bidirectional replication 66

7.2 Editing and viewing a task description 69

7.3 Defining and managing endpoints 69

Defining an endpoint 70

Editing endpoint configuration information 70

Viewing endpoint configuration information 71

Testing an endpoint connection 71

Duplicating endpoints 71

Searching for endpoints 71

Deleting endpoints 72

7.4 Adding a source and target endpoint to a task 72

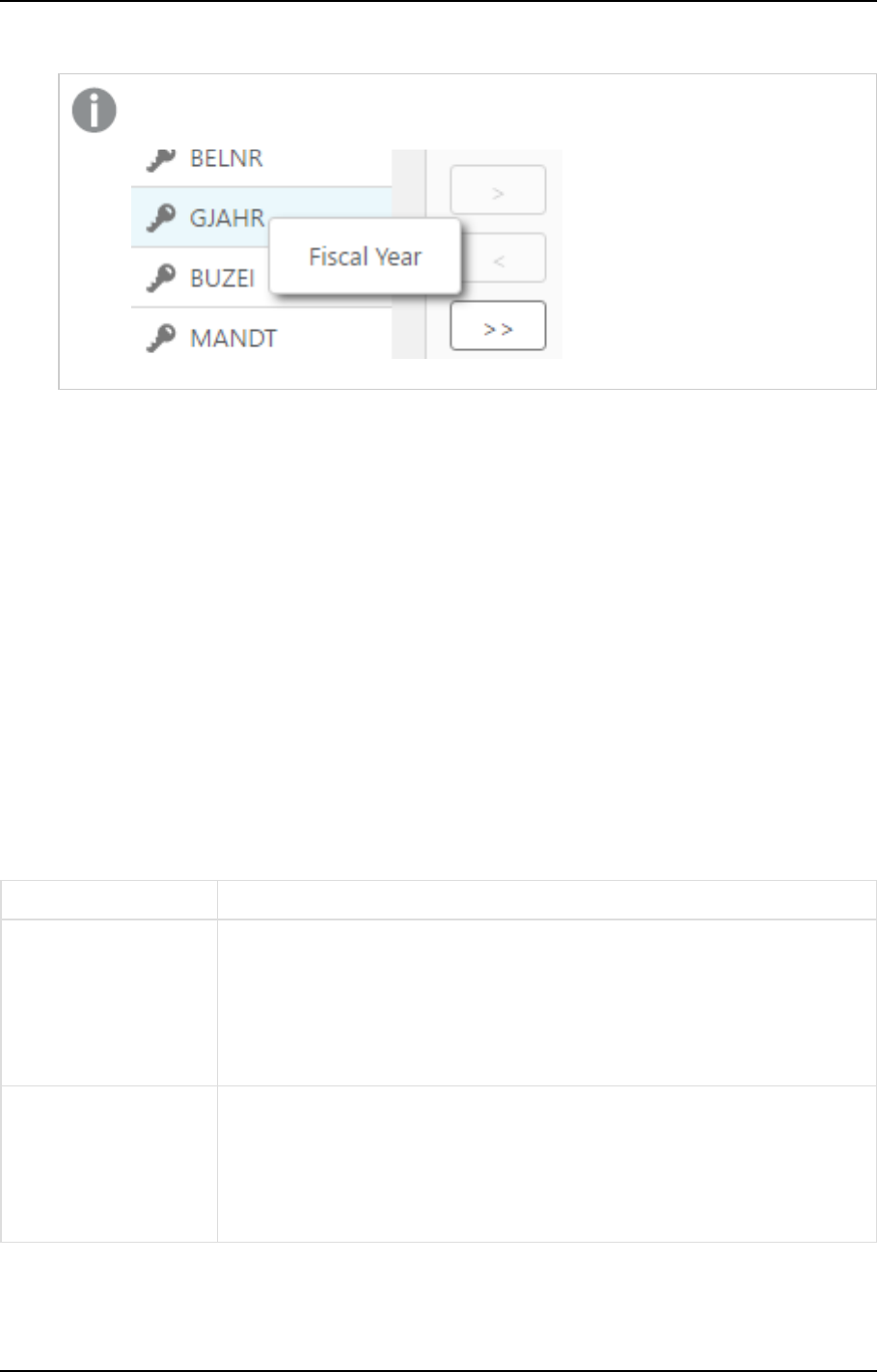

7.5 Selecting tables and/or views for replication 73

Searching for tables/views to use in a replication task 75

Selecting specific tables/views for replication 76

Setting load order 77

Removing specific tables/views from a replication task 77


Creating table/view selection patterns 78

7.6 Editing a replication task 79

7.7 Searching for tasks 80

7.8 Deleting a replication task 80

7.9 Exporting and importing tasks 80

8 Customizing tasks 82

8.1 Table Settings 82

Performing General tasks for a single table/view 83

Defining transformations for a single table/view 84

Using filters 92

Parallel Load 98

Handling LOB columns 103

Message format 106

Full Load 106

8.2 Defining global rules 107

Starting the Global Transformation Rules wizard 107


Starting the Global Filter Rules wizard 131

Managing global rules 136

8.3 Using the Expression Builder 137

Overview of the Expression Builder 137

Build an expression 139

Parse an expression 140

Test an expression 140

Using elements in the Expression Builder 142

8.4 Task Settings 165

Metadata 166

Bidirectional 170

Full Load 171

Change Processing 174

Error handling 186

Logging 192

Character substitution 194

File uploads 195

Message Format 196

Transformations and Filters 201

More options 202

9 Monitoring and controlling tasks 203

9.1 Monitoring Replicate tasks 203

Task progress summary 203

Viewing specific tasks 207

Monitoring Full Load replication 209

Monitoring Change Processing replication 215

9.2 Monitoring Compose tasks and workflows 222

Task progress summary 222

Monitoring Data Lake tasks 225


Monitoring Data Warehouse tasks 226

Monitoring workflows 227

9.3 Searching for tasks 230

9.4 Customizing task columns 231

9.5 Grouping tasks 232

9.6 Running a task 234

How to run a task 235

Using the Run button options 235

Start Processing 236

Reload target 236

Advanced Run Options 236

Recovering from data folder loss or corruption 241

9.7 Error handling 243

Task error handling 243

Data error handling 243

9.8 Using the monitor tools 244

Logging 244

Downloading a memory report 246

Downloading a diagnostics package 246

9.9 Scheduling jobs 247

10 Messages and notifications 250

10.1 Message Center overview 250

10.2 Customizing the Message Center 252

Searching for messages 253

10.3 Viewing additional information 253

10.4 Notifications 253

Setting a task notification 254

Setting a server notification 261

Managing notifications 264

Required permissions 265

Event IDs in Windows Event Log 265

10.5 Viewing and downloading log files 267

11 Administration 269

11.1 Enterprise Manager settings 269

Enterprise Manager logging settings 269

Message Center purge settings 271

Repository connection settings 272

Qlik Catalog Server connection 273

Analytics - Data collection and purge settings 273

Configuring mail server settings 275

Registering and managing licenses 276

11.2 User permissions 278

Encrypting the User Permissions File 279

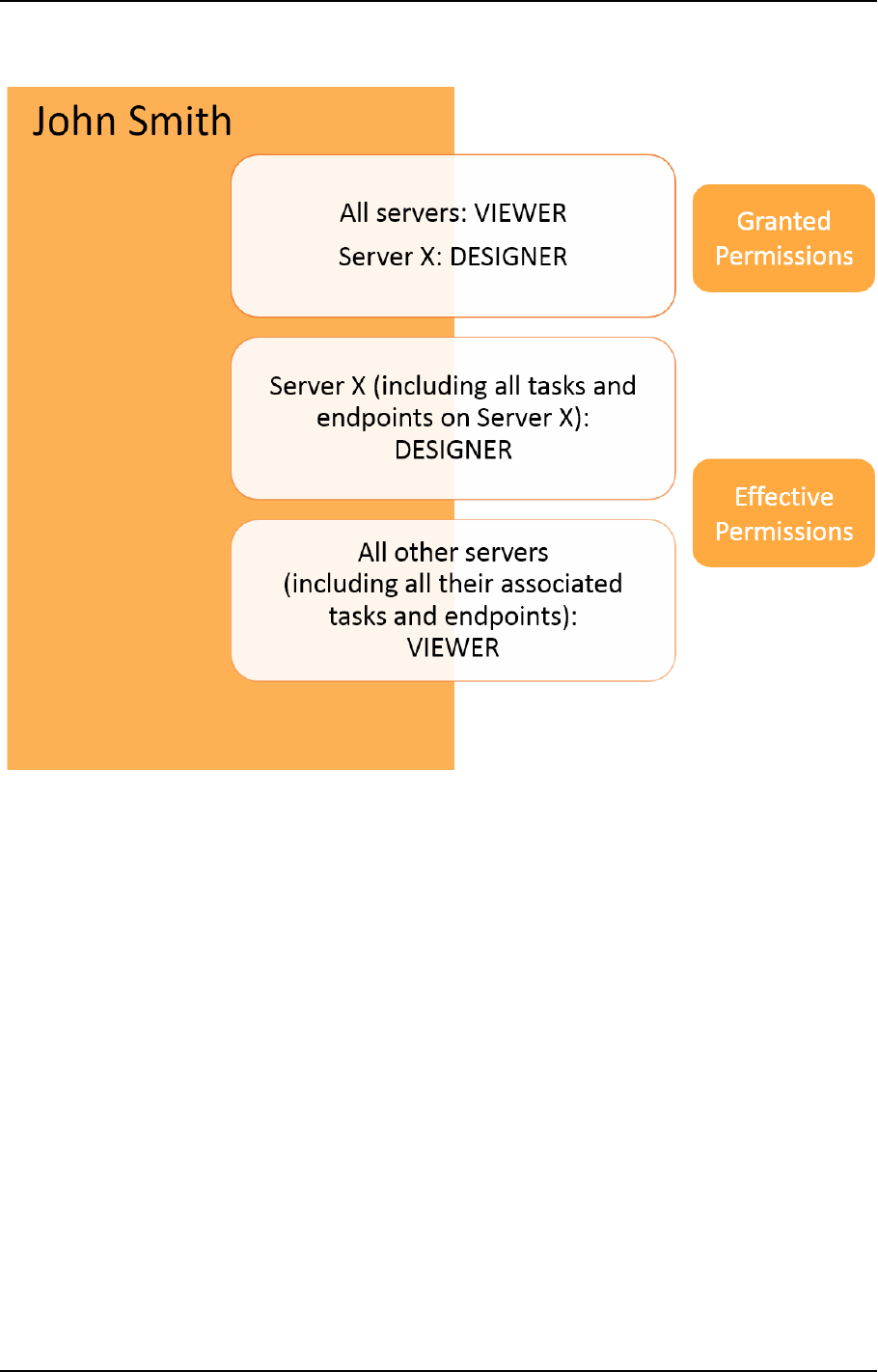

Granular access control 279

Roles and permissions 284

Working with local groups 286


Managing user permissions 286

Managing Personal Access Tokens 289

11.3 Creating an audit trail 289

Decoding an encoded stream payload 291

12 Configuring Enterprise Manager using the CLI 293

12.1 Setting the Enterprise Manager host address 293

12.2 Setting the Enterprise Manager HTTP port 293

12.3 Setting the Enterprise Manager HTTPS port 294

12.4 Setting the Enterprise Manager root URL 294

12.5 Showing the Enterprise Manager version 294

12.6 Showing the Enterprise Manager CLI Help 294

12.7 Service Commands 294

12.8 Cleaning the self-signed certificate 295

12.9 Setting the audit trail retention size and age 295

12.10 Master User Password commands 295

Generating a random Master User Password 295

Setting or changing the MUK (Master User Key) 295

Setting or changing the Java MUK (Master User Key) 296

12.11 Showing the connectivity and login settings 297

Connectivity settings 297

SAML settings 297

12.12 Fine tuning performance 297

Turning off the Analytics Server 298

Changing the update intervals 298

13 Cataloging tasks in Qlik Catalog 300

13.1 Terminology 300

13.2 Prerequisites 301

13.3 Setting up connectivity to Qlik Catalog 301

Catalog columns 301

13.4 Limitations and considerations 301

13.5 Catalog operations 302

Cataloging tasks 302

Uncataloging tasks 304

Recataloging tasks 304

14 Analytics 305

14.1 Prerequisites 305

Install PostgreSQL 305

Create a dedicated database and assign the required privileges 306

Configure connectivity to PostgreSQL 306

Set up data collection and purging from PostgreSQL 306

Register a license 306

Obtaining a license 306

Port 307

Hardware 307

14.2 Permissions 307

14.3 Analytics dashboards 307


Trends 308

Trends by server 309

Trends by tasks 310

Top servers 311

Top tasks 312

Capacity planning 313

14.4 Exporting to TSV 315

14.5 Creating filters 315

14.6 Using the Pivot Menu 317

14.7 Analytics repository schema 317

aem_endpoint_type 317

aem_meta_source_database 318

aem_meta_target_database 318

aem_server 318

aem_source_database 318

aem_target_database 319

aem_target_processes 319

aem_task 319

aem_task_name 322

aem_task_previous_metrics 323

aem_task_profile 323

aem_task_state 323

aem_task_stop_reason 323

Sample Queries 323

A Setting up High Availability 327

A.1 Installing Qlik Enterprise Manager in a Windows cluster 327

A.2 Upgrading Qlik Enterprise Manager in a Windows cluster 330

A.3 Uninstalling Qlik Enterprise Manager from a Windows cluster 332

B Impact of DST change on Qlik Replicate 335


1 What's new?

This section describes the new and enhanced features in Enterprise Manager May 2023.

In addition to these release notes, customers who are not upgrading from the latest GA version (Enterprise Manager November 2022 SR1) are advised to review the release notes for all versions released since their current version.

Customers should also review the Enterprise Manager release notes in Qlik Community for information about the following:

• Migration and upgrade
• End of life/support features
• Newly supported versions and third-party software
• Resolved issues
• Known issues

1.1 Support for Personal Access Tokens as a log in method via the Enterprise Manager API

From Enterprise Manager May 2023, customers can use Personal Access Tokens to log in to Enterprise Manager via the API. Using Personal Access Tokens is the recommended best practice, as it offers significant security benefits.

The high-level flow involves the following steps:

• Step 1: In Okta, create an app integration that uses OpenID Connect and assign users to it.
• Step 2: In Enterprise Manager, enable Enterprise Manager to communicate with Okta (using the CLI).
• Step 3: In Enterprise Manager, generate a Personal Access Token.
• Step 4: Configure the Enterprise Manager API to log in with a Personal Access Token.

Tokens can also be revoked by an Enterprise Manager admin. To facilitate this, a new Personal Access Tokens tab has been added to the Enterprise Manager settings.

For details, see Setting up Personal Access Token authentication for the API (page 30).


2 Introduction

Qlik Enterprise Manager, also referred to as Enterprise Manager, provides a single point of control for designing, executing, and monitoring Qlik Replicate and Compose tasks throughout your organization. If your site has multiple Qlik servers with tens, if not hundreds, of tasks, Enterprise Manager greatly eases the design, management, and monitoring of these tasks. Whether your site deploys a single Qlik server or multiple servers, Enterprise Manager is your single go-to interface to create data endpoints, design tasks, execute them, and monitor the replication process in near real-time. In addition, Enterprise Manager lets you view all tasks in a tabular format that offers advanced grouping and filtering capabilities.

The following figures show a high-level view of a possible Enterprise Manager installation environment and a more detailed architecture diagram.

Note that components labeled as "Qlik Server" can either be Qlik Replicate or Qlik Compose.


2.1 Example Enterprise Manager environment


2.2 Enterprise Manager architecture


3 Terminology

The following section describes some key terms used throughout this Help.

3.1 Change Data Capture (CDC)

Captures changes in the source data or metadata as they occur and applies them to the target endpoint as soon as possible, in near real-time. The changes are captured and applied as units of single committed transactions, and several different target tables may be updated as the result of a single source commit. This guarantees transactional integrity in the target endpoint. The CDC process for any file or table starts as soon as the data loading operation for the file or table begins.

3.2 Full load

Creates all defined files or tables on the target endpoint, automatically defines the metadata that is required at the target, and populates the tables with data from the source.

3.3 Apply latency

The gap in seconds between capturing a change in one of the source tables and applying that change to the target endpoint.

Latency when applying large transactions

This is best explained by way of example. Suppose the most recent Apply Latency value was 10 seconds and a transaction of one million rows is then committed at the source endpoint. Replicate starts to apply that transaction to the selected target, and it takes some time to write all the changes to the target (for example, 60 seconds). During those 60 seconds, the latency value gradually grows to 70 seconds for the last change in the transaction. Once the transaction is committed, the latency drops back to the 'regular' latency (10 seconds in this case).
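The arithmetic in the example above can be sketched in a few lines of Python. This is only an illustration that assumes the latency grows linearly while the transaction is being applied; the function name and its parameters are hypothetical and are not part of Replicate or Enterprise Manager.

```python
def apply_latency(baseline_s: float, apply_duration_s: float, elapsed_s: float) -> float:
    """Illustrative apply latency (in seconds) while one large transaction is applied.

    Assumption: latency grows by one second per second of apply time and
    drops back to the baseline once the transaction is committed on the target.
    """
    if elapsed_s < 0:
        raise ValueError("elapsed_s must not be negative")
    if elapsed_s > apply_duration_s:
        # The transaction has been committed: back to the 'regular' latency.
        return baseline_s
    return baseline_s + elapsed_s


# Values from the example: 10 s regular latency, 60 s to write the transaction.
for t in (0, 30, 60, 61):
    print(t, apply_latency(10, 60, t))
```

With the values from the example, the reported latency peaks at 70 seconds for the last change and then returns to 10 seconds.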

Latency when no transactions are being applied

When a time period passes with no changes applied to the target, the latency calculation is based on the time difference between the current time and the timestamp of the last change event read from the transaction log. This could happen, for example, if there is a high volume of activity on tables that were not selected for replication in the current task.
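As a rough sketch of the calculation described above, the latency in this situation is simply a time difference. The function and variable names below are hypothetical, and the timestamps are made-up sample values.

```python
from datetime import datetime, timezone


def idle_apply_latency(last_event_time: datetime, now: datetime) -> float:
    """Seconds between the current time and the last change event read from the log."""
    return (now - last_event_time).total_seconds()


# Made-up timestamps: the last change event was read 45 seconds ago.
last_event = datetime(2023, 5, 1, 12, 0, 0, tzinfo=timezone.utc)
now = datetime(2023, 5, 1, 12, 0, 45, tzinfo=timezone.utc)
print(idle_apply_latency(last_event, now))
```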

3.4 Source latency

The gap in seconds between when the source database wrote an event to its transaction log and when Replicate captured that change.

3.5 Target latency

The gap between when a commit is seen by Replicate (reading the source transaction log) and when the changes of that commit are seen in the target.


3.6 Overall latency

The overall latency is defined as the time gap between when a change is committed in the source database and when it is visible in the target database.

3.7 Source endpoint

A collection of files or tables managed by an endpoint management system (such as Oracle or SQL Server) that is part of the main computing service of the IT organization of an enterprise. This source is continuously updated, may need to provide a high throughput rate, may have strict 24/7 up-time requirements, and may reference or update a number of tables in the course of a single logical transaction while providing transactional consistency and integrity for the data.

3.8 Target endpoint

A collection of files or tables managed by an Endpoint Management System (DBMS), which may be different from the DBMS managing the source endpoint. It contains data that is derived from the source. It may contain only a subset of the tables, columns, or rows that appear in the source. Its tables may contain columns that do not appear in the source but are transformations or computations based on the source data.

3.9 Net Changes table

Replicate performs data replication based on changes that appear in the source database's transaction log. A single update operation on the source, such as "UPDATE MyTable SET f1=..., f2=...", could potentially update many rows in the source database and create a large number of change records that Replicate will need to apply to the target. Replicate offers two Change Processing modes: Transactional apply and Batch optimized apply. In Transactional apply Change Processing mode, Replicate essentially applies each change to the target, which may take much longer than the original UPDATE took on the source.

Batch optimized apply mode, on the other hand, is designed to handle efficient replication of a large number of changes. In this mode, Replicate accumulates changes for multiple tables in a memory cache. Repeated changes to the same row are updated in the memory cache. When the maximum memory cache size defined for the task is reached (or when the configured time has elapsed), Replicate does the following:

a. Writes the cached (net) changes to a special table on the target (the Net Changes table).
b. Bulk uploads the changes to the target table.
c. Uses SQL statements to update the target tables based on the data in the Net Changes table.

Note that for Oracle, Replicate uses a separate Net Changes table for each source table with changes, while for other source endpoints a single Net Changes table is used.
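The idea of accumulating net changes can be illustrated with a small Python sketch. It is a simplified model written for this guide, not Replicate's actual implementation: the class, the merge rules, and the sample table are all assumptions, and the `flush` method merely stands in for steps a to c.

```python
from typing import Dict, Optional, Tuple

RowKey = Tuple[str, int]      # (table name, primary key value)
NetChange = Tuple[str, dict]  # (operation, column values)


class NetChangeCache:
    """Toy in-memory cache that keeps one net change per row (illustration only)."""

    def __init__(self) -> None:
        self._rows: Dict[RowKey, NetChange] = {}

    def record(self, table: str, pk: int, op: str, values: Optional[dict] = None) -> None:
        key = (table, pk)
        new_values = dict(values or {})
        if key not in self._rows:
            self._rows[key] = (op, new_values)
            return

        prev_op, prev_values = self._rows[key]
        if op == "UPDATE" and prev_op in ("INSERT", "UPDATE"):
            # Repeated changes to the same row are merged; an update to a row
            # inserted in this batch is still a net INSERT.
            self._rows[key] = (prev_op, {**prev_values, **new_values})
        elif op == "DELETE" and prev_op == "INSERT":
            # Inserted and deleted within the same batch: nothing left to apply.
            del self._rows[key]
        elif op == "DELETE":
            self._rows[key] = ("DELETE", {})
        else:
            raise ValueError(f"unsupported sequence {prev_op} -> {op} in this sketch")

    def flush(self) -> Dict[RowKey, NetChange]:
        """Return the accumulated net changes and empty the cache."""
        net, self._rows = self._rows, {}
        return net


cache = NetChangeCache()
cache.record("orders", 1, "UPDATE", {"status": "packed"})
cache.record("orders", 1, "UPDATE", {"status": "shipped", "carrier": "DHL"})
cache.record("orders", 2, "INSERT", {"status": "new"})
cache.record("orders", 2, "DELETE")
print(cache.flush())
```

In this sketch, four change records collapse into a single net change for row 1, which is why applying net changes in bulk can be cheaper than applying every change individually.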


4 Installation and setup

This section describes how to install and set up Qlik Enterprise Manager (Enterprise Manager).

For instructions on installing Enterprise Manager in a Windows Cluster, see Installing Qlik Enterprise Manager in a Windows cluster (page 327).

Enterprise Manager collects information from Qlik Replicate and/or Qlik Compose Servers to allow a central point of monitoring for all Replicate and/or Compose Servers in your organization. Therefore, you also need to install Qlik Replicate and/or Qlik Compose in your organization. For a description of the respective installation procedures, refer to the Qlik Replicate and/or Qlik Compose product Help.

In this section:

• Preparing your system for Enterprise Manager (page 15)
• Installing Enterprise Manager (page 18)
• Changing the Enterprise Manager service account (page 24)
• Setting the login authentication method (page 25)
• Starting to work with the Qlik Enterprise Manager Console (page 33)

4.1 Preparing your system for Enterprise Manager

This section describes the hardware and software requirements for Qlik Enterprise Manager and the monitored Replicate Servers.

In this section:

• Hardware configuration guidelines (page 15)
• Software requirements (page 16)
• Sizing guidelines (page 16)
• Compatibility with Related Qlik Products (page 17)
• Replication Management license (page 17)

Hardware configuration guidelines

It is recommended that the machine hosting Qlik Enterprise Manager meets or exceeds the hardware configuration shown in the following table:

| | Medium System | Large System |
|---|---|---|
| Processor | 4-core base | 16-core base |
| Memory | 8 GB | 32 GB |
| Disk requirements | 128 GB | 256 GB |
| Network | 1 Gbps | 1 Gbps |

Hardware requirements


Sizing guidelines

Depending on the number of Replicate tasks and concurrent users, you might need to balance the system load between multiple Enterprise Manager machines. For example, if your hardware configuration is set up for a large system (40 Replicate servers), it is recommended to monitor no more than 4000 Replicate tasks on a single Enterprise Manager machine. When you approach this threshold, it is recommended to split the tasks between at least two Enterprise Manager machines by dividing the monitored Replicate servers between them. Similarly, if your hardware configuration is set up for a medium system and the number of Replicate tasks and users concurrently accessing the system is approaching the maximum threshold (see the table below), best practice is to split the monitored Replicate servers between at least two Enterprise Manager machines.

For information on hardware configuration guidelines, see Hardware configuration guidelines (page 15).

| | Medium System | Large System |
|---|---|---|
| Replicate servers | 8 | 40 |
| Tasks | 800 | 4000 |
| Concurrent users (rate of public API calls may affect these numbers) | 10 | 80 |

Sizing guidelines

To some extent, how you set up granular access control might also impact performance. This is usually only a factor when many users are assigned different roles and permissions.
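The sizing guidelines can be turned into a quick check, as in the following Python sketch. The maximum values come from the sizing table; everything else (the names, and in particular the `warn_ratio` of 0.9 used to decide when a threshold is being "approached") is an assumption made for illustration and is not a Qlik recommendation.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class SizingLimits:
    replicate_servers: int
    tasks: int
    concurrent_users: int


# Maximum values from the sizing guidelines table.
MEDIUM = SizingLimits(replicate_servers=8, tasks=800, concurrent_users=10)
LARGE = SizingLimits(replicate_servers=40, tasks=4000, concurrent_users=80)


def limits_approached(limits: SizingLimits, servers: int, tasks: int, users: int,
                      warn_ratio: float = 0.9) -> List[str]:
    """Return the names of the limits that the given load has reached or is nearing.

    warn_ratio is a hypothetical safety margin: 0.9 flags a value once it
    reaches 90% of the documented maximum.
    """
    current = {"replicate_servers": servers, "tasks": tasks, "concurrent_users": users}
    return [name for name, value in current.items()
            if value >= warn_ratio * getattr(limits, name)]


# A medium system monitoring 5 servers and 790 tasks with 3 concurrent users.
print(limits_approached(MEDIUM, servers=5, tasks=790, users=3))
```

A non-empty result suggests that it may be time to consider splitting the monitored Replicate servers between two or more Enterprise Manager machines.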

Software requirements

Parts of the software use Java components utilizing OpenJDK JRE, which is included in the Enterprise Manager installation.

Supported Windows platforms

It is strongly recommended to install Qlik Enterprise Manager on a dedicated Windows server, separate from the Replicate and/or Compose Servers that it manages.

Enterprise Manager can be installed on any of the following Windows platforms:

• Windows Server 2012 R2 (64-bit)
• Windows Server 2016 (64-bit)
• Windows Server 2019 (64-bit)
• Windows Server 2022 (64-bit)


Windows Server 2022 is supported from Qlik Enterprise Manager May 2022 Service Release 02 only.

Supported browsers

The following browsers can be used to access the Enterprise Manager Console:

• Microsoft Edge (with automatic updates turned on)
• Mozilla Firefox (with automatic updates turned on)
• Google Chrome (with automatic updates turned on)

Port

Port 443 must be opened for inbound connections on the Enterprise Manager machine.

Additional software requirements

Qlik Enterprise Manager requires the following software:

• Microsoft Visual Studio C++ 2010 X64 Redistributable and 2015 X64 Redistributable
• .NET Framework 4.8 or later
• To use the Analytics feature, PostgreSQL 12.16 or later should be installed either on the Enterprise Manager machine or on a machine that is accessible from Enterprise Manager.
• TLS 1.2 or later must be supported in the underlying OS.

On Windows Server 2012 R2, TLS 1.2 should be turned on by default. If it is not, refer to the Microsoft online help for instructions on how to turn it on.

See also: Compatibility with Related Qlik Products (page 17).

Compatibility with Related Qlik Products

Enterprise Manager May 2023 Initial Release

• Qlik Replicate - Enterprise Manager May 2023 is compatible with Replicate May 2023, Replicate November 2022, Replicate May 2022, and Replicate November 2021.
• Qlik Compose - Enterprise Manager May 2023 is compatible with Qlik Compose May 2022 (and its Service Releases) only.
• Qlik Catalog - Enterprise Manager May 2023 is compatible with Qlik Catalog November 2022 only.

Replication Management license

This section explains how to obtain a Replication Management license and lists the processes that continue even when the license expires or is invalid.


Obtaining a Replication Management license

A Replication Management license is required in order to use Qlik Enterprise Manager. If no license is detected, a Register License message will be displayed when you open the Qlik Enterprise Manager Console.

The procedure below does not apply when installing Enterprise Manager in a High Availability Cluster. To obtain a Replication Management license for Enterprise Manager in a High Availability Cluster, you must provide your Qlik Sales Representative with the following information, depending on which Windows version the cluster is running:

• Windows Server 2016: The official cluster FQDN.
• Windows Server 2012 R2: The FQDN of each of the cluster nodes and the official cluster FQDN.

To obtain a license:

1. Open the Qlik Enterprise Manager Console and copy the Enterprise Manager machine name from either of the following locations:
   • The Register License message that is displayed when you open the Qlik Enterprise Manager Console.
   • The bottom of the Licenses tab in the Settings window.
2. Request a license from your Qlik Sales Representative, making sure to include the Enterprise Manager machine name in your request.

Processes that continue if the license expires or is invalid

The following processes will continue, even if the Replication Management license expires or is invalid:

• Notifications will continue to be sent.
• Task monitoring information and messages will continue to be collected from Replicate. However, they will not be visible until a valid Replication Management license is installed.

In such a situation, do one of the following:

• Register a valid Replication Management license.
• Stop the Enterprise Manager service or uninstall the product if you do not intend to use it anymore.

4.2 Installing Enterprise Manager

Enterprise Manager must be installed under an Administrator account.

In this section:


• Installing Qlik Enterprise Manager using the Setup Wizard (page 19)
• Silently installing Enterprise Manager (page 21)
• Silently upgrading Enterprise Manager (page 22)
• Silently uninstalling Enterprise Manager (page 23)

Installing Qlik Enterprise Manager using the Setup Wizard

The following section describes how to install Enterprise Manager.

To install Enterprise Manager:

1. Run the Enterprise Manager setup file (QlikEnterpriseManager_<version.build>_<systemtype>.exe, such as QlikEnterpriseManager_7.0.0.105_X64.exe).

The Enterprise Manager setup wizard opens.

2. Optionally, change the installation directory; then click Next.

3. Optionally, change the data directory; then click Next.

All of the data that is created when you use Enterprise Manager is stored in a directory called data. By default, this directory is located in the installation directory where you install Enterprise Manager.

If you change the data directory location, you must prefix all command line actions with:

-d path_to_the_data_directory

Example:

<product_dir>\bin\AemCtl.exe -d F:\data configuration set -a 123.123.12.1

4. Click Next again to start the installation.

5. When the installation completes, click Finish.

As part of the installation, a new Windows Service called Enterprise Manager is created.

The Enterprise Manager analytics module requires a PostgreSQL database. If you plan on using this module, you will need to install PostgreSQL either on the Enterprise Manager machine or on a machine that is accessible from Enterprise Manager.

For your convenience, PostgreSQL is included with Enterprise Manager and you will be prompted to install it after clicking Finish.

6. Click Yes to install PostgreSQL on the Enterprise Manager server or No to exit without installing PostgreSQL. You can always install PostgreSQL at a later time by running the PostgreSQL installer from the following location:

<Enterprise_Manager_INSTALLATION_FOLDER>\postgresqlkit

For instructions on installing and maintaining PostgreSQL, refer to the PostgreSQL Help.

7. Open the Enterprise Manager console as described in Starting to work with the Qlik Enterprise Manager Console (page 33).
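If you script command line actions, the `-d` prefix described in step 3 is easy to forget. The following Python sketch shows one way to build the command consistently. The helper name and the `C:\EM` product directory are hypothetical; only the `-d` prefix and the example action are taken from this guide. The helper builds the command but does not run it.

```python
from pathlib import PureWindowsPath
from typing import List, Optional, Sequence


def aemctl_command(product_dir: str, action: Sequence[str],
                   data_dir: Optional[str] = None) -> List[str]:
    """Build an AemCtl.exe command line, adding '-d <data_dir>' when needed."""
    exe = str(PureWindowsPath(product_dir) / "bin" / "AemCtl.exe")
    # The prefix is only required when the data directory was moved.
    prefix = ["-d", data_dir] if data_dir else []
    return [exe, *prefix, *action]


# Hypothetical product directory; the action matches the example in step 3.
cmd = aemctl_command(r"C:\EM", ["configuration", "set", "-a", "123.123.12.1"],
                     data_dir=r"F:\data")
print(" ".join(cmd))
```

The returned list can be passed to `subprocess.run` on the Enterprise Manager machine.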

Upgrading Enterprise Manager

The upgrade procedure below also describes how to back up the Enterprise Manager "data" folder, which will allow you to restore your settings if you encounter any issues with the upgrade.

To upgrade Enterprise Manager:


1. Back up your currently installed version by copying the Enterprise Manager data folder to a location outside the product folder. The default data folder location is C:\Program Files\Attunity\Enterprise Manager\data.

2. Run the Enterprise Manager setup wizard to install the new version.

3. If you notice an issue with the upgrade, you can revert to the previous version as described below or do the following:

a. Stop all the Enterprise Manager services.

b. Overwrite the data folder with the data folder that you backed up earlier.

c. Start all the Enterprise Manager services.

Reverting to a Previous Version

To revert to a previous version:

1. Back up the Enterprise Manager data directory to a location outside the product folder.

2. Uninstall the product and then reinstall to the same directory.

3. Once the installation is complete, stop all Enterprise Manager services.

4. Overwrite the data directory with the data directory that you backed up earlier.

5. Start all Enterprise Manager services.
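The backup step used in both procedures above can be scripted. The following Python sketch copies the data folder to a timestamped folder outside the product folder. It assumes that the data folder sits directly under the product folder (the default layout); the function name and the backup folder naming scheme are hypothetical.

```python
import shutil
from datetime import datetime
from pathlib import Path


def backup_data_folder(data_dir: Path, backup_root: Path) -> Path:
    """Copy the Enterprise Manager data folder to a timestamped backup folder.

    Raises ValueError if the backup location is inside the product folder,
    because the backup would then be lost when the product is uninstalled.
    """
    data_dir = data_dir.resolve()
    backup_root = backup_root.resolve()
    product_dir = data_dir.parent  # assumption: data folder is directly under the product folder
    if backup_root == product_dir or product_dir in backup_root.parents:
        raise ValueError("backup location must be outside the product folder")
    target = backup_root / f"data_backup_{datetime.now():%Y%m%d_%H%M%S}"
    shutil.copytree(data_dir, target)  # creates missing parent folders as needed
    return target
```

For example, `backup_data_folder(Path(r"C:\Program Files\Attunity\Enterprise Manager\data"), Path(r"D:\Backups"))` would back up the default data folder; `D:\Backups` is a made-up destination.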

Migrating the Enterprise Manager Repository

In certain situations, you may need to migrate Replicate or Compose Servers and settings from one Enterprise Manager Server to another. This may be required, for example, if you need to move from a test environment to a production environment or if you need to decommission the Enterprise Manager Server machine. In the migration procedure, which is described below, Server A is the Enterprise Manager Server configured with Replicate or Compose Servers, while Server B is a clean installation of Enterprise Manager Server.

All commands should be run as administrator.

1. On Server A:

a. Run the following commands:

Command 1 - Sets the Master User Key:

<INSTALL_DIR>\bin\aemctl.exe [-d data_folder_path] masterukey set -p password

where -d data_folder_path is only required if the <INSTALL_DIR>\data folder is in a non-default location.

Command 2 - Sets the Java Master User Key:

<INSTALL_DIR>\java\bin\atajs.bat [-d java_data_folder_path] masterukey set password

where -d java_data_folder_path is only required if the <INSTALL_DIR>\data\java folder is in a non-default location.

b. Restart the Qlik Enterprise Manager service.


2. On Server B:

a. Stop the Qlik Enterprise Manager service.

b. Delete the <INSTALL_DIR>\data folder.

c. Copy the data folder from Server A to Server B.

d. Run the following commands:

Command 1:

<INSTALL_DIR>\bin\aemctl.exe [-d data_folder_path] masterukey set -p password

where -d data_folder_path is only required if the <INSTALL_DIR>\data folder is in a non-default location.

Command 2:

<INSTALL_DIR>\java\bin\atajs.bat [-d java_data_folder_path] masterukey set password

e. Start the Qlik Enterprise Manager service.

f. Log in to Enterprise Manager and verify that the Replicate and Compose Servers have been migrated from Server A to Server B, and that all of Server A's configuration settings have been migrated as well.
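Because the same two commands must be run with the same password on both servers, it can help to generate them from one place. The following Python sketch only builds the command lines shown in the procedure above and does not run them. The function name and the sample install directory and password are hypothetical.

```python
from typing import List, Optional


def master_key_commands(install_dir: str, password: str,
                        data_dir: Optional[str] = None,
                        java_data_dir: Optional[str] = None) -> List[List[str]]:
    """Build the two 'masterukey set' commands used on Server A and Server B."""
    aemctl = [install_dir + r"\bin\aemctl.exe"]
    if data_dir:  # only needed for a non-default data folder
        aemctl += ["-d", data_dir]
    aemctl += ["masterukey", "set", "-p", password]

    atajs = [install_dir + r"\java\bin\atajs.bat"]
    if java_data_dir:  # only needed for a non-default Java data folder
        atajs += ["-d", java_data_dir]
    # Note: unlike aemctl.exe, the Java command takes the password without '-p'.
    atajs += ["masterukey", "set", password]
    return [aemctl, atajs]


# Made-up values for illustration; avoid hard-coding real passwords in scripts.
for command in master_key_commands(r"C:\EM", "ExamplePassw0rd"):
    print(" ".join(command))
```

Remember that all commands in this procedure should be run as administrator.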

Silently installing Enterprise Manager

Enterprise Manager can be installed silently (i.e. without requiring user interaction). This option is useful, for example, if you need to install Enterprise Manager on several machines throughout your organization.

Before commencing the installation, make sure that the prerequisites have been met.

The installation process consists of two stages:

1. Creating a response file (page 21)

2. Running the silent install (page 22)

Creating a response file

Before starting the installation, you need to create a response file.

To create the response file:

1. From the directory containing the Qlik Enterprise Manager setup file, run the following command (note that this will also install Enterprise Manager):

QlikEnterpriseManager_version_X64.exe /r /f1my_response_file

where:

my_response_file is the full path to the response file that will be generated.

Example:

QlikEnterpriseManager_6.1.0.536_X64.exe /r /f1C:\Enterprise Manager_install.iss


At the end of the Enterprise Manager installation, when you are prompted to run the PostgreSQL installer, click No.

2. To change the default installation directory, open the response file in a text editor and edit the first

szDir value as necessary.

3. To change the default data directory, edit the third szDir value as necessary.

4. Save the file as <name>.iss, e.g. silent_inst_64.iss.
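
Steps 2 and 3 above can also be scripted when the same response file has to be adapted for several machines. The following is a minimal Python sketch, assuming the recorded response file contains plain szDir=<path> lines; the helper name set_nth_szdir and the section names in the sample text are hypothetical and are not part of Enterprise Manager.

```python
def set_nth_szdir(iss_text: str, occurrence: int, new_dir: str) -> str:
    """Return iss_text with the Nth (1-based) szDir value replaced by new_dir."""
    seen = 0
    out = []
    for line in iss_text.splitlines():
        # Match the key regardless of case or leading whitespace.
        if line.strip().lower().startswith("szdir="):
            seen += 1
            if seen == occurrence:
                line = "szDir=" + new_dir
        out.append(line)
    if seen < occurrence:
        raise ValueError(f"only {seen} szDir entries found")
    return "\n".join(out)


# Hypothetical response file fragment (section names are placeholders).
sample = (
    "[Sec-A]\nszDir=C:\\One\n"
    "[Sec-B]\nszDir=C:\\Two\n"
    "[Sec-C]\nszDir=C:\\Three\n"
)

# First szDir = installation directory, third szDir = data directory.
updated = set_nth_szdir(sample, 1, r"D:\EM")
updated = set_nth_szdir(updated, 3, r"D:\EMData")
print(updated)
```

Review the edited file in a text editor before using it, since the layout of a recorded response file may differ between versions.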

Running the silent install

To silently install Qlik Enterprise Manager, open a command prompt and change the working directory to the

directory containing the Enterprise Manager setup file. Then issue the following command:

Syntax:

QlikEnterpriseManager_version_X64.exe /s /f1my_response_file [/f2log_file]

where:

my_response_file is the full path to the response file you created earlier and log_file is the path to the

optional log file.

Example:

C:\>QlikEnterpriseManager_6.1.0.536_X64.exe /s /f1"C:\temp\1\Enterprise Manager_install.iss" /f2C:\temp\1\silent_x64_install.log

If the installation was successful, the log file should contain the following rows:

[ResponseResult]

ResultCode=0
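
When installing on several machines, the log check described above can be automated. The following is a minimal Python sketch, assuming the log file is INI-style text as shown; the function name silent_result_code is hypothetical, and the file encoding on your system may need to be specified when reading the log from disk.

```python
import configparser


def silent_result_code(log_text: str) -> int:
    """Return the ResultCode value from the [ResponseResult] section of a silent-install log."""
    parser = configparser.ConfigParser(interpolation=None, strict=False)
    parser.read_string(log_text)
    return parser.getint("ResponseResult", "ResultCode")


# Log content as shown in this guide for a successful run.
sample_log = "[ResponseResult]\nResultCode=0\n"

if silent_result_code(sample_log) == 0:
    print("Silent operation reported success")
else:
    print("Silent operation reported a failure; inspect the log file")
```

The same check applies to the log files written by a silent upgrade or a silent uninstall, which use the same [ResponseResult] section.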

Silently upgrading Enterprise Manager

Silently upgrading Enterprise Manager consists of two stages:

1. Creating a response file (page 22)

2. Running a silent upgrade (page 22)

Creating a response file

Before starting the upgrade, you need to create a response file. You may also want to back up your current

installation as described in Upgrading Enterprise Manager (page 19).

For an explanation of how to create a response file, see Step 1 of Creating a response file (page 21).

Running a silent upgrade

To silently upgrade Enterprise Manager, open a command prompt and change the working directory to the

directory containing the Enterprise Manager setup file.

Then issue the following command:

Syntax:

QlikEnterpriseManager_version_X64.exe /s /f1my_response_file [/f2log_file]

where:

my_response_file is the full path to the response file you created earlier and log_file is the path to the

optional log file.

Example:

C:\>QlikEnterpriseManager_6.1.0.536_X64.exe /s /f1"C:\temp\1\Enterprise Manager_upgrade.iss" /f2C:\temp\1\silent_x64_up.log

If the upgrade was successful, the log file should contain the following rows:

[ResponseResult]

ResultCode=0

Silently uninstalling Enterprise Manager

Silently uninstalling Enterprise Manager consists of two stages:

1. Creating a response file (page 23)

2. Running a silent uninstall (page 23)

Creating a response file

Before starting the uninstall, you need to create a response file.

To create the response file:

1. Copy the following (response file) text into a text editor:

[{999A7077-16C9-4B3B-AFD2-CBBA9FA72C15}-DlgOrder]

Dlg0={999A7077-16C9-4B3B-AFD2-CBBA9FA72C15}-SdWelcomeMaint-0

Count=3

Dlg1={999A7077-16C9-4B3B-AFD2-CBBA9FA72C15}-MessageBox-0

Dlg2={999A7077-16C9-4B3B-AFD2-CBBA9FA72C15}-SdFinish-0

[{999A7077-16C9-4B3B-AFD2-CBBA9FA72C15}-SdWelcomeMaint-0]

Result=303

[{999A7077-16C9-4B3B-AFD2-CBBA9FA72C15}-MessageBox-0]

Result=6

[{999A7077-16C9-4B3B-AFD2-CBBA9FA72C15}-SdFinish-0]

Result=1

bOpt1=0

bOpt2=0

2. Save the file as <name>.iss, e.g. silent_uninst_64.iss.

Running a silent uninstall

To silently uninstall Enterprise Manager, open a command prompt and issue the following command:

Syntax:

"C:\Program Files (x86)\InstallShield Installation Information\<directory_containing_setup_file>\setup.exe" /s /f1my_response_file /f2log_file

where:

my_response_file is the full path to the response file you created earlier and log_file is the path to the

optional log file.

The directory containing the Enterprise Manager setup file always ends with the following string:

CBBA9FA72C15

Example:

C:\>"C:\Program Files (x86)\InstallShield Installation Information\{999A7077-16C9-4B3B-AFD2-CBBA9FA72C15}\setup.exe" /s /f1C:\temp\response.iss /f2C:\temp\1\silent_uninstall.log

If the uninstall was successful, the log file should contain the following rows:

[ResponseResult]

ResultCode=0
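
Because the directory containing the setup file can be recognized by its ending, it can be located programmatically. The following is a minimal Python sketch, assuming the directory names have already been listed (for example with os.listdir on the InstallShield Installation Information folder); the function name find_em_setup_dir and the second directory name in the sample are hypothetical.

```python
def find_em_setup_dir(dir_names):
    """Return the first directory name ending with the Enterprise Manager suffix, or None."""
    suffix = "CBBA9FA72C15"
    for name in dir_names:
        # The directory name is a GUID in braces, so ignore the closing brace.
        if name.rstrip("}").upper().endswith(suffix):
            return name
    return None


candidates = [
    "{11111111-2222-3333-4444-555555555555}",   # placeholder for another product
    "{999A7077-16C9-4B3B-AFD2-CBBA9FA72C15}",   # directory shown in the example above
]
print(find_em_setup_dir(candidates))
```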

4.3 Changing the Enterprise Manager service account

By default, Enterprise Manager is installed with Administrator privileges. For security reasons, you may want

Enterprise Manager to run under a user account that does not have Administrator privileges.

To do this:

1. Install Enterprise Manager.

2. Create a local user without administrative privileges.

3. Reserve the URL for the user you just created by running the following commands:

netsh http add urlacl url=https://+:443/AttunityEnterpriseManager user=DOMAIN\youruser

netsh http add urlacl url=http://+:80/AttunityEnterpriseManager user=DOMAIN\youruser

4. Grant the new user the Full control permission for the Enterprise Manager data folder (<EM_INSTALL_DIR>\data).

5. Open the Local Security Policy window and select Local Policies>User Rights Assignment. Then

grant the Log on as a service policy to the new user.

6. Stop the Enterprise Manager service.

7. In the Log On tab of the Enterprise Manager service properties, select This account and specify the

new user name in the following format:

.\NewUser

8. Save your changes.

9. Start the Enterprise Manager service.
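
If the same procedure is repeated on several servers, the two URL reservation commands in step 3 can be generated for each account. The following is a minimal Python sketch that only builds the command strings shown above; the function name urlacl_commands and the account name are hypothetical, and the commands must still be run from an elevated command prompt.

```python
def urlacl_commands(user: str) -> list[str]:
    """Build the two 'netsh http add urlacl' commands from step 3 for the given account."""
    urls = [
        "https://+:443/AttunityEnterpriseManager",
        "http://+:80/AttunityEnterpriseManager",
    ]
    return [f"netsh http add urlacl url={url} user={user}" for url in urls]


# 'DOMAIN\youruser' is the placeholder used in this guide.
for command in urlacl_commands(r"DOMAIN\youruser"):
    print(command)
```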

4.4 Setting the login authentication method

By default, Enterprise Manager uses Single Sign-on through Windows Authentication to authenticate users.

This allows users to open the Enterprise Manager Console without providing additional authentication. To

require users to authenticate themselves at login, you can change the authentication method to Form or

SAML. Setting the authentication method is done using the Enterprise Manager CLI, as described below.

To see the current authentication settings, run the command described in Showing the connectivity and login

settings (page 297).

• All commands in this section should be "Run as administrator" from the Enterprise Manager bin directory. The default location is C:\Program Files\Attunity\Enterprise Manager\bin.

• When the Enterprise Manager data folder is in a non-default location (such as in a cluster installation), make sure to include the --d data_folder parameter in all commands, where data_folder is the location of the data folder. The parameter should immediately follow the name of the Enterprise Manager executable file (e.g. aemctl --d f:\mydatafolder {command} {parameters}).

• Changes to the authentication method will take effect only after you restart the Enterprise Manager service.

• If Form authentication is used, all Login/Logout operations are reported to the Audit Trail.

Setting Single Sign-on authentication

This is the default authentication method, which uses Windows authentication.

To set the authentication method to single sign-on, run:

aemctl.exe configuration set --authentication_method sso

Abbreviated form of --sso: -w

Setting Single Sign-on authentication with Kerberos

Kerberos is an enterprise authentication protocol that uses the concept of tickets and three-way

authentication to enable users and computers to identify themselves and secure access to resources.

Using Kerberos SSO, users can seamlessly log into Enterprise Manager and administrators can completely

externalize and centrally manage users or group memberships using their existing Kerberos infrastructure.

To set the authentication method to single sign-on with Kerberos, run:

aemctl.exe configuration set --authentication_method sso-kerberos

If the Kerberos protocol fails, Enterprise Manager will try to log in using NTLM authentication. If

NTLM authentication is not enabled in the system, an error will be returned.

Setting form authentication

As opposed to Single Sign-on through Windows Authentication, this method requires users to provide a user

name and password at login.

To set the authentication method to Form, run:

aemctl.exe configuration set --authentication_method form

Abbreviated parameter: -f

Setting a user timeout

Parameter: --user_timeout

Abbreviated form: -u

When setting --form authentication, you can use this parameter to override the default user idle timeout

period (5 minutes) or to disable user idle timeout entirely.

When a user idle timeout value is specified, Enterprise Manager will automatically log out users that have

been inactive for the specified time period (or longer).

To set a user timeout when using Form authentication, run:

aemctl.exe configuration set --authentication_method form --user_timeout timeout

Where timeout is the length of time in minutes after which users will be logged out. The minimum permitted

value is 1 minute.

For example, to set a user-idle timeout period of two minutes, run:

aemctl.exe configuration set --authentication_method form --user_timeout 2

To disable the user-idle timeout entirely, run:

aemctl.exe configuration set --authentication_method form --user_timeout -1
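
The timeout rules above (a minimum of 1 minute, or -1 to disable the timeout) can be checked before the command is issued. The following is a minimal Python sketch that builds the argument list for the command shown above; the function name form_auth_args is hypothetical, and the validation reflects only the rules stated in this section.

```python
def form_auth_args(user_timeout=None):
    """Build the aemctl argument list for Form authentication with an optional idle timeout."""
    args = ["aemctl.exe", "configuration", "set", "--authentication_method", "form"]
    if user_timeout is not None:
        # -1 disables the idle timeout; otherwise the minimum is 1 minute.
        if user_timeout != -1 and user_timeout < 1:
            raise ValueError("timeout must be at least 1 minute, or -1 to disable it")
        args += ["--user_timeout", str(user_timeout)]
    return args


print(" ".join(form_auth_args(2)))    # two-minute idle timeout
print(" ".join(form_auth_args(-1)))   # idle timeout disabled
```

The resulting list can be passed to subprocess.run from an elevated session in the Enterprise Manager bin directory.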

Specifying an Active Directory domain

Parameter: --domain

Abbreviated form: -m

When setting --form authentication, you can use this parameter to specify an Active Directory domain name

that will be used when a user logs in with a user name only (i.e. without a domain name).

To specify a domain when using Form authentication, run:

aemctl.exe configuration set --authentication_method form --domain DomainName

Where DomainName is the name of the domain.

For example, to set the domain to ad2_acme, run:

aemctl.exe configuration set --authentication_method form --domain ad2_acme

By default, when only a user name is provided in the login form, the domain of the server is used. If the server

does not belong to any domain the server machine name will be used instead.

A user who logs in as "doe" will be assumed to identify as "ad2_acme\doe". If a user specifies a fully qualified

domain name when logging in, this parameter is ignored.
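
The effect of the --domain parameter on login names can be modeled as follows. This is an illustrative Python sketch of the behavior described above, not Enterprise Manager's actual implementation; the function name effective_login is hypothetical, and treating a name that contains a backslash or an at sign as already domain-qualified is an assumption.

```python
def effective_login(user_name: str, default_domain: str) -> str:
    """Return the identity assumed for a login name, given the configured default domain."""
    # Assumption: a name containing '\' or '@' already carries domain information.
    if "\\" in user_name or "@" in user_name:
        return user_name
    return f"{default_domain}\\{user_name}"


print(effective_login("doe", "ad2_acme"))
print(effective_login("corp\\doe", "ad2_acme"))
```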

Setting up SAML authentication

This method requires you to log in via your organization's SAML Identity Provider. The command parameters

for setting SAML authentication are the same regardless of your SAML provider, although the parameter values

are slightly different.

The setup procedure consists of the following steps:

• Step 1: Set up SAML on Enterprise Manager (page 28)

• Step 2: Set Up an Enterprise Manager Superuser (page 29)

• Step 3: Log in to Enterprise Manager and create SAML users (page 29)

Before running the commands, you must have already configured Enterprise Manager as an

application in your SAML Identity Provider.

When Enterprise Manager is not installed in a Cluster, the Enterprise Manager Assertion

Consumer Service (ACS) URL is:

https://{host_name}/attunityenterprisemanager/rest/?action=login_saml

When Enterprise Manager is installed in a Cluster, make sure to fulfill the following

prerequisites:

• Finish the cluster install on all nodes before configuring SAML.

• In order to propagate the configuration changes, make sure to include the -d data_folder parameter in the SAML configuration commands described below, where data_folder is the location of the cluster's shared data folder.

• The Assertion Consumer Service (ACS) URL which the IDP should call when redirecting SAML calls should be the cluster wide DNS name (as opposed to a specific machine name).
  This is how it should look:
  https://{em-cluster-fqdn}/attunityenterprisemanager/?action=login_saml

For more information about setting up Enterprise Manager in a cluster, see Installing Qlik Enterprise

Manager in a Windows cluster (page 327).

Step 1: Set up SAML on Enterprise Manager

To set the Enterprise Manager authentication method to SAML, run:

Syntax:

aemctl [--d data_folder] configuration set --authentication_method SAML --idp_url SsoUrl --idp_issuer issuer_name --idp_certificate_file CertificateFile [--idp_user_displayname_attribute displayname] [--idp_username_attribute username] [--idp_user_groups_attribute groups]

Example: Using Microsoft Azure as the SAML IdP

aemctl configuration set --authentication_method SAML --idp_url https://login.microsoftonline.com/12854727-3c42-4866-ab29-0c418b8310a1/saml2 --idp_issuer aemdevtest --idp_certificate_file AEMDevTest.pem

Where:

• SsoUrl is the URL of the SAML IdP (Identity Provider) that handles sign-in requests.
  When using Okta, this is the Okta Single Sign-On URL.
  When using Microsoft Azure, this is the AzureAD SAML single sign-on URL.
  Enterprise Manager will direct users to this URL to complete the SAML login operation.

• issuer_name is a unique name that the identity provider uses for SAML 2.0.
  When using Okta, this should be a URL.
  When using Azure, this should be a string.

• CertificateFile - The certificate is used by the IdP to sign the SAML assertions sent to Enterprise Manager. The certificate file can be anywhere on the Enterprise Manager machine, but only needs to be specified with a path when it does not reside in the Enterprise Manager bin directory.
  When using Okta, the certificate must be in .cert format.
  When using Microsoft Azure, the certificate must be in .pem format.

• data_folder - The location of the data folder when it is not the default location such as when Enterprise Manager is installed in a Windows cluster.

Optional Parameters:

The following parameters are optional and should only be used if required by your SAML IdP:

• --idp_user_displayname_attribute - The user display name attribute.

• --idp_username_attribute - By default, with SSO, the SAML Assertion’s “Subject” attribute is used to define the username. Using the subject is the right solution in most situations, but in extreme cases (such as the subject being a transient ID) it may be necessary to provide the username in some other form.

• --idp_user_groups_attribute - The user group attribute.

Once you have set up SAML, you need to restart the Qlik Enterprise Manager service for the settings

to take effect.

Step 2: Set Up an Enterprise Manager Superuser

The first time you log in to Enterprise Manager using SAML, you must log in as an Enterprise Manager

superuser. This is because none of the existing (or default) Enterprise Manager users are authorized SAML

users.

The instructions below assume that you have already changed the default Master User key. For instructions on how to do this, see Configuring Enterprise Manager using the CLI (page 293).

To set up a superuser, run the following command:

aemctl authorization setsuperuser -s username -e ExpirationTimeoutInMinutes -m MasterUserKey

Where:

• username is the superuser user name. The user must be an existing SAML user, and the name can contain any Unicode characters, up to a maximum of 255 characters.

• ExpirationTimeoutInMinutes is the expiration time for the specified user. The maximum is 60 minutes.

• MasterUserKey is your Master User Key.

Step 3: Log in to Enterprise Manager and create SAML users

After setting up SAML authentication, you will be presented with the following page when you try to open the

Qlik Enterprise Manager console:

1. Click Log In with SAML.

You will be redirected to Okta or Microsoft Azure to provide your SAML login credentials.

2. Authenticate yourself with SAML.

After successful authentication, you will be redirected back to the Enterprise Manager Console.

3. Add authorized SAML users and groups, as described in Managing user permissions (page 286).

For information about other CLI options, see Configuring Enterprise Manager using the CLI (page 293).

Switching between SAML and SSO/Form authentication

If you wish to switch from SAML to SSO/Form authentication (or vice versa), you may need to clear the existing

users from Enterprise Manager. When switching from SAML to SSO/Form authentication, you will not be able

to change user permissions or add users unless you clear the existing SAML users. However, when switching

from SSO/Form authentication to SAML, you do not need to clear the existing users in order to add/delete

users or edit user permissions. You may still want to do this however if you find the presence of non-SAML

users distracts you from managing the SAML users.

Before clearing the users, it is strongly recommended to export them as this will save time (by

importing them) should you later need to revert the authentication type.

To export all users to a JSON file, run the following command:

aemctl repository export_acl -f [fullpath\]filename

where filename is the name of the file to which the users will be exported. By default, the file will be exported

to the Enterprise Manager bin directory. If you wish the file to be exported to a custom path, include

[fullpath\] in the command.

Example:

aemctl repository export_acl -f "C:\temp\Enterprise ManagerUsers"

To clear all users, run the following command:

aemctl repository clear_acl

To import users from the exported JSON file, run the following command:

aemctl repository import_acl -f [fullpath\]filename

where filename is the name of the file to import. Unless you specified a custom export path, the file will be

located in the Enterprise Manager bin directory. If you wish the file to be imported from a custom path,

include [fullpath\] in the command.

Example:

aemctl repository import_acl -f "C:\temp\Enterprise ManagerUsers"

Setting up Personal Access Token authentication for the API

Personal Access Token authentication is only supported with the Enterprise Manager API.

Azure Active Domain is not supported.

The high-level flow consists of the following steps:

• Step 1: In Okta, create an app integration that uses OpenID Connect

• Step 2: In Enterprise Manager, enable Enterprise Manager to communicate with Okta

• Step 3: In Enterprise Manager, generate a Personal Access Token

• Step 4: Configure the Enterprise Manager API to log in using the Personal Access Token

Step 1: In Okta, create an app integration that uses OpenID Connect

Enterprise Manager uses OpenID Connect to log in with the API. Therefore, before you can use OpenID

Connect with Enterprise Manager, you must create a web integration in Okta.

To create a web integration in Okta for use with Enterprise Manager:

1. Log in to your Okta account.

2. Navigate to Applications > Applications and click Create App Integration.

3. In the Create a new app integration dialog, select OIDC - OpenID Connect.

4. Select Web Application as the Application type and click Next.

5. In the New Web Integration page, configure the following fields:

• App Integration Name: The name of your App integration. For example, QEM OpenID Connect.

• Select Refresh Token.

• In the Sign-in redirect URIs field, enter the following:
  https://EnterpriseManagerMachine/attunityenterprisemanager/rest/login_openid
  Where EnterpriseManagerMachine is the host name or IP address of your Enterprise Manager machine.

6. Clear the Enable immediate access with Federation Broker Mode option, and then click Save.

7. Copy your Client ID and Client secret. You will need to provide these parameters in the next stage.

8. Assign the app integration to the users or groups that you want to allow to use the Personal Access

Token.

For details, see Assign app integrations in the Okta documentation.

Step 2: In Enterprise Manager, enable Enterprise Manager to communicate with Okta

To enable Enterprise Manager to communicate with Okta, open a command prompt as administrator and change the working directory to <Enterprise Manager-INSTALL-DIR>\bin.

Then run the following command:

Syntax

aemctl.exe configuration set --open_id_authority your-openid-connect-authority --open_id_client_id your-client-id --open_id_client_secret your-secret

Example using Okta

aemctl.exe configuration set --open_id_authority "https://dev-13465054.okta.com" --open_id_client_id "0oa8ohkl5ftweZNWTT5d7" --open_id_client_secret "FJxXqWOpJsROGrthsaVzfUIcNthG6JLA1-nAJH0"

Where:

• --open_id_authority is your Okta URL. For example, https://dev-13465054.okta.com

• --open_id_client_id is the client ID generated in Step 1: In Okta, create an app integration that uses OpenID Connect above.

• --open_id_client_secret is the client secret generated in Step 1: In Okta, create an app integration that uses OpenID Connect above.

Optional Parameters:

The following parameters are optional and should only be used if required by Okta:

• --open_id_additional_scopes - Additional scopes that are added to the scope list when an OpenID Connect login occurs. The default is "groups".

• --api_token_daily_maintenance_time - Determines when the API token maintenance background process runs each day. This should be formatted as HH:mm. The default is "00:30".

• --api_token_lifetime - The number of days a Personal Access Token is valid. The default is "180".

• --open_id_refresh_token_lifetime - The number of days a refresh token is valid. The default is "0", meaning it is valid forever.

• --open_id_user_name_field - The field name for the OpenID Connect user name. The default is "preferred_username".

• --open_id_display_name_field - The field name for the OpenID Connect user display name. The default is "name".

• --open_id_group_field - The field name for an OpenID Connect group. The default is "groups".

After you have run the OpenID Connect command, you need to restart the Qlik Enterprise Manager

service for the settings to take effect.
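
Two of the optional parameters above take values that are easy to get wrong: the HH:mm maintenance time and the token lifetime in days. The following is a minimal Python sketch for checking them before running the command. The function names are hypothetical, and the expiry calculation (generation date plus lifetime in days) is an assumption about how the lifetime is applied, so treat the result as an estimate.

```python
import re
from datetime import date, timedelta


def is_valid_maintenance_time(value: str) -> bool:
    """Check that value is a 24-hour time in strict HH:mm form, e.g. '00:30'."""
    return re.fullmatch(r"([01]\d|2[0-3]):[0-5]\d", value) is not None


def estimated_token_expiry(generated_on: date, lifetime_days: int = 180) -> date:
    """Estimate when a token generated on the given date stops being valid."""
    return generated_on + timedelta(days=lifetime_days)


print(is_valid_maintenance_time("00:30"))
print(is_valid_maintenance_time("7:5"))
print(estimated_token_expiry(date(2024, 1, 1)))
```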

Step 3: In Enterprise Manager, generate a Personal Access Token

1. Log in to Enterprise Manager as a SAML user. This must be one of the users/groups that was assigned

to the app integration in Step 1: In Okta, create an app integration that uses OpenID Connect

above.

For information on setting up SAML, see Setting up SAML authentication above.

2. In the top right of Enterprise Manager, click the inverted triangle to the right of the user name and

select Generate Personal Access Token.

The Generate Personal Access Token dialog opens.

In the Generate Personal Access Token dialog, you will see one of the following:

• You do not have a Personal Access Token. This is shown if you have not previously generated a Personal Access Token.

• Your Personal Access Token expired on <Date>. This is shown if your Personal Access Token has expired.

• Your Personal Access Token expires on <Date>. This is shown if you already have a Personal Access Token.
  When regenerating a token, you will need to confirm that you want to regenerate the token. This is because API login with the original token will stop working as soon as you generate a new token.

3. Click Generate token.

The Copy Personal Access Token dialog is displayed.

4. Copy your personal access token. You will need this to log in with the Enterprise Manager API.

See also: Managing Personal Access Tokens (page 289)

Step 4: Configure the Enterprise Manager API to log in using the Personal Access

Token

For instructions, see:

• REST API: Login

• .NET API: Getting started - Login

• Python API: Getting started - Login

4.5 Starting to work with the Qlik Enterprise Manager Console

To start working with Enterprise Manager, you need to open the Qlik Enterprise Manager Console and register

a Replication Management license.

You can use a Web browser to access the Console from any computer in your network. For information on

supported browsers, see Preparing your system for Enterprise Manager (page 15).

The user logged into Enterprise Manager must be an authorized Qlik Enterprise Manager user.

To access the Qlik Enterprise Manager Console:

• From the machine on which it is installed, select All Programs > Qlik Enterprise Manager > Qlik Enterprise Manager Console from the Windows Start menu.
  OR
  Type the following address in the address bar of your Web browser:
  https://<computer name>/attunityenterprisemanager
  On a machine running Microsoft Windows 10 or Windows Server 2012, you need to run the Console as Administrator.

• From a remote browser, type the following address in the address bar of your Web browser:
  https://<computer name>/attunityenterprisemanager
  where <computer name> is the name or IP address of the computer where Qlik Enterprise Manager is installed.

If no server certificate is installed on the Enterprise Manager machine, a page stating that the connection is

untrusted opens. This is because when Enterprise Manager detects that no server certificate is installed, it

installs a self-signed certificate. Because the browser has no way of knowing whether the certificate is safe, it

displays this page.

For more information, see Setting up HTTPS for the Enterprise Manager console (page 35).

If prompted, enter your user name and password.

The user name may need to include domain information in the following format:

<domain name>@<user name>

For more information, see Setting the login authentication method (page 25).

Registering Licenses

If this is the first time you are using Enterprise Manager, you will be prompted to register a Replication

Management license when the console opens. You may also need to register a Replication Analytics license

(required for the Analytics (page 305) module), depending on whether you have obtained such a license from

your Qlik Sales Representative.

For information on registering licenses, see Registering and managing licenses (page 276).

5 Security considerations

This section provides a detailed rundown of the various security-related procedures that need to be

performed to ensure that your data is secure.

In this section:

• Setting up HTTPS for the Enterprise Manager console (page 35)

• Setting up HSTS on Enterprise Manager (page 38)

• Replacing the self-signed certificate on Windows (page 39)

• Setting the hostname and changing the SSL port (page 40)

• Replacing the Master User Password (page 41)

• Encrypting the user permissions file (page 42)

• Controlling execution of user-defined commands (page 43)

5.1 Setting up HTTPS for the Enterprise Manager console

Industry-standard security practices dictate that the web user interface for enterprise products must use secure

HTTP (HTTPS). Qlik Enterprise Manager enforces the use of HTTPS and will not work if HTTPS is configured

incorrectly.

As Enterprise Manager uses the built-in HTTPS support in Windows, it relies on the proper setup of the

Windows machine it runs on to offer HTTPS access. In most organizations, the IT security group is responsible

for generating and installing the SSL server certificates required to offer HTTPS. It is strongly recommended

that the machine on which Enterprise Manager is installed already has a valid SSL server certificate installed

and bound to the default HTTPS port (443).

Checking if an SSL Certificate is installed

To check whether an SSL certificate is installed, you can use the following command:

netsh http show sslcert | findstr /c:":443"

If an SSL certificate is installed, the output should look like this:

netsh http show sslcert | findstr /c:":443 "

IP:port : 192.168.1.13:443

IP:port : 192.168.1.11:443

IP:port : [fe80::285d:599c:4a55:1092%11]:443

IP:port : [fe80::3d0e:fb1c:f6c3:bc52%23]:443

With a valid SSL certificate installed, the Enterprise Manager web user interface will automatically be available

for secure access from a web browser using the following URL:

https://<machine-name>/attunityenterprisemanager
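
The certificate check described above can be scripted, for example as part of a pre-installation checklist. The following is a minimal Python sketch that extracts the bound addresses from text in the format shown above; the function name bindings_on_port is hypothetical, and it assumes that each binding line is labelled "IP:port" (the label may differ on localized Windows systems).

```python
def bindings_on_port(netsh_output: str, port: int = 443):
    """Collect the IP:port values bound to the given port from 'netsh http show sslcert' output."""
    found = []
    for line in netsh_output.splitlines():
        # Split the label from the value at the first ' : ' separator.
        label, separator, value = line.partition(" : ")
        if not separator:
            continue
        if label.strip().lower() == "ip:port" and value.strip().endswith(f":{port}"):
            found.append(value.strip())
    return found


# Sample output taken from this section.
sample = """IP:port : 192.168.1.13:443
IP:port : 192.168.1.11:443
IP:port : [fe80::285d:599c:4a55:1092%11]:443
IP:port : [fe80::3d0e:fb1c:f6c3:bc52%23]:443"""

bindings = bindings_on_port(sample)
print(f"{len(bindings)} binding(s) found on port 443")
```

An empty result suggests that no SSL certificate is bound to the port, in which case Enterprise Manager falls back to the self-signed certificate described in the next section.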

Using the self-signed certificate

Due to the way the HTTPS protocol works, there is no way for Enterprise Manager to automatically provide

and install a valid SSL server certificate. Still, in the event that no SSL server certificate is installed, Enterprise

Manager automatically generates and installs a self-signed SSL server certificate (as a temporary measure).

This certificate is generated on the Enterprise Manager machine and cannot be exported or used elsewhere.

It should be noted that browsers do not consider the certificate to be valid because it was not signed by a

trusted certificate authority (CA). When connecting with a browser to a server that uses a self-signed

certificate, a warning page is shown such as this one in Chrome:

Or this one in Firefox:

The warning page informs you that the certificate was signed by an unknown certificate authority. All

browsers display a similar page when presented with a self-signed certificate. If you know that the self-signed

certificate is from a trusted organization, then you can instruct the browser to trust the certificate and allow

the connection. Instructions on how to trust the certificate vary between browsers and even between different

versions of the same browser. If necessary, refer to the help for your specific browser.

Some corporate security policies prohibit the use of self-signed certificates. In such cases, it is

incumbent upon the IT Security department to provide and install the appropriate SSL server

certificate (as is the practice with other Windows products such as IIS and SharePoint). If a self-

signed certificate was installed and needs to be removed, then the following command can be used:

<product_dir>\bin\AemCtl.exe certificate clean

Note that after the self-signed certificate is deleted, connections to the Enterprise Manager machine

will not be possible until a valid server certificate is installed. Should you want to generate a new

self-signed certificate (to replace the deleted certificate), simply restart the Enterprise Manager

service.

5.2 Setting up HSTS on Enterprise Manager

HSTS is a web security policy mechanism that helps to protect websites against man-in-the-middle attacks

such as protocol downgrade attacks and cookie hijacking. It allows web servers to declare that web browsers

(or other complying user agents) should automatically interact with it using only HTTPS connections, which

provide Transport Layer Security (TLS/SSL).

You can force the Enterprise Manager Web UI and/or the Enterprise Manager REST API connections to use

HSTS (HTTP Strict Transport Security). To do this, run the commands described below.

All commands should be run as administrator from the product bin folder.

Enabling HSTS

Command syntax

aemctl.exe configuration set --static_http_headers header_list --rest_http_headers header_list

Parameters

Parameter                 Description

--static_http_headers     The headers required to connect to the Enterprise Manager Web UI.

--rest_http_headers       The headers required to connect using the API.

Headers should be specified using the following format:

aemctl.exe configuration set --static_http_headers "header1:value1" "header2:value2" --rest_http_headers "header1:value1" "header2:value2"

Example

aemctl.exe configuration set --static_http_headers "Strict-Transport-Security:max-age=31536000; includeSubDomains;" --rest_http_headers "Strict-Transport-Security:max-age=31536000; includeSubDomains;"
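
The max-age value in the example above is expressed in seconds, and 31536000 corresponds to 365 days. The following is a minimal Python sketch that derives the header string in the "header:value" format used by the command; the function name hsts_header is hypothetical, and the trailing semicolon simply mirrors the example above.

```python
def hsts_header(max_age_days: int = 365, include_subdomains: bool = True) -> str:
    """Build a 'Strict-Transport-Security:<value>' string for use with aemctl."""
    max_age_seconds = max_age_days * 24 * 60 * 60
    value = f"max-age={max_age_seconds}"
    if include_subdomains:
        value += "; includeSubDomains;"
    return f"Strict-Transport-Security:{value}"


header = hsts_header()
print(header)

# Both parameters usually receive the same header, each enclosed in double quotes.
print(f'aemctl.exe configuration set --static_http_headers "{header}" --rest_http_headers "{header}"')
```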

Disabling HSTS

You can also revert to regular HTTPS connections.

Command syntax

aemctl.exe configuration set --static_http_headers ""|--rest_http_headers ""

Parameters

Parameter Description

--static_http_headers Use this parameter to revert the headers required to connect to the Enterprise Manager Web UI.

--rest_http_headers Use this parameter to revert the headers required to connect using the API.

Example

Disable static_http_headers

aemctl.exe configuration set --static_http_headers ""

Disable rest_http_headers

aemctl.exe configuration set --rest_http_headers ""

5.3 Replacing the self-signed certificate on Windows

The instructions below are intended for organizations who wish to replace the built-in self-signed certificate

automatically generated by the Enterprise Manager UI Server on Windows with their own certificate. This is

achieved by removing the self-signed certificate and then importing the new certificate.

See also Setting up HTTPS for the Enterprise Manager console (page 35).

Before starting, make sure that the following prerequisites have been met:

• The replacement certificate must be a correctly configured SSL PFX file containing both the private key and the certificate.

• The common name field in the certificate must match the name browsers will use to access the machine.

To remove the self-signed certificate created by Enterprise Manager:

1. Stop the Qlik Enterprise Manager service.

2. Open a command prompt (using the "Run as administrator" option) and change the path to the bin directory. The default path is:

C:\Program Files\Qlik\Enterprise Manager\bin

3. Run the following command:

AemCtl.exe certificate clean

To import your own certificate:

1. Run mmc.exe to open the Microsoft Management Console.

2. From the File menu, select Add/Remove Snap-in.

The Add or Remove Snap-ins dialog box opens.

3. In the left pane, double-click Certificates.

The Certificates snap-in wizard opens.

4. Select Computer account and then click Next.

5. In the Select Computer screen, make sure that Local computer is selected and then click Finish.

6. Click OK to close the Add or Remove Snap-ins dialog box.

7. In the left pane, expand the Certificates folder. Then, right-click the Personal folder and select All Tasks > Import.

8. In the File to Import screen, select your PFX certificate file. Note that by default the Open dialog box

displays CER files. In order to see your PFX files, you need to select Personal Information Exchange

from the drop-down list in the bottom right of the dialog box.

9. Click Next and enter the private key password.

10. Continue clicking Next until you reach the Completing the Certificate Import Wizard screen. Then

click Finish to exit the wizard.

11. In the Personal > Certificates folder, double-click the newly imported certificate.

The Certificate dialog box opens.

12. Scroll down the Details tab until you see the Thumbprint details and copy them to the clipboard.

13. Open a command prompt and run one of the following commands:

Syntax:

• netsh http add sslcert ipport=0.0.0.0:443 certhash=[YOUR_CERTIFICATE_THUMBPRINT_WITHOUT_SPACES] appid={4dc3e181-e14b-4a21-b022-59fc669b0914}

Example:

netsh http add sslcert ipport=0.0.0.0:443 certhash=5f6eccba751a75120cd0117389248ef3ca716e61 appid={4dc3e181-e14b-4a21-b022-59fc669b0914}

Syntax:

• netsh http add sslcert ipport=[::]:443 certhash=[YOUR_CERTIFICATE_THUMBPRINT_WITHOUT_SPACES] appid={4dc3e181-e14b-4a21-b022-59fc669b0914}

Example:

netsh http add sslcert ipport=[::]:443 certhash=5f6eccba751a75120cd0117389248ef3ca716e61 appid={4dc3e181-e14b-4a21-b022-59fc669b0914}

14. Close the command prompt and Microsoft Management Console.

15. Start the Qlik Enterprise Manager service.
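The thumbprint copied in step 12 typically contains spaces, and may include non-printing characters picked up from the dialog box, whereas the netsh command in step 13 expects it without spaces. The following Python sketch shows one way to clean the value and assemble the command arguments. The function names are hypothetical, and the sketch assumes a SHA-1 thumbprint of 40 hexadecimal digits, as in the example above.

```python
import re

# appid value taken from the netsh commands in step 13
APP_ID = "{4dc3e181-e14b-4a21-b022-59fc669b0914}"


def normalize_thumbprint(raw: str) -> str:
    """Strip whitespace and non-ASCII characters, then validate the result.

    Assumes a SHA-1 thumbprint, i.e. exactly 40 hexadecimal digits.
    """
    cleaned = "".join(ch for ch in raw if ch.isascii() and not ch.isspace())
    if not re.fullmatch(r"[0-9A-Fa-f]{40}", cleaned):
        raise ValueError(f"not a 40-digit hexadecimal thumbprint: {raw!r}")
    return cleaned.lower()


def build_netsh_command(thumbprint: str, ipport: str = "0.0.0.0:443") -> list:
    """Return the argument list for 'netsh http add sslcert' (nothing is executed)."""
    return ["netsh", "http", "add", "sslcert",
            f"ipport={ipport}",
            f"certhash={normalize_thumbprint(thumbprint)}",
            f"appid={APP_ID}"]


if __name__ == "__main__":
    copied = "5f 6e cc ba 75 1a 75 12 0c d0 11 73 89 24 8e f3 ca 71 6e 61"
    print(" ".join(build_netsh_command(copied)))
    print(" ".join(build_netsh_command(copied, ipport="[::]:443")))
```

Validating the length before calling netsh makes a truncated or partially copied thumbprint fail early with a clear message.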

5.4 Setting the hostname and changing the SSL port

After installing Qlik Enterprise Manager, you can use the Enterprise Manager CLI to set the hostname and SSL

port for accessing the Enterprise Manager server machine.

Under normal circumstances, you should not need to set the hostname. However, on some systems,

connecting using HTTPS redirects to localhost. If this occurs, set the hostname of the Enterprise Manager

machine by running the command shown below.

To set the hostname:

Run the following command:

<product_dir>\bin\AemCtl.exe configuration set -a hostAddress

where hostAddress is the address of the Enterprise Manager server machine.

When using a Load Balancer, hostAddress should be the Load Balancer host address.

To change the SSL port:

Run the following command:

<product_dir>\bin\AemCtl.exe configuration set -s httpsPort

where httpsPort is the SSL port number of the Enterprise Manager server machine.
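When these settings are applied from a deployment script, the two commands can be generated with basic input checks. The following Python sketch is illustrative only: the function name build_config_commands and the example host name are hypothetical. It issues one command per setting, as in the instructions above, because this section does not state whether -a and -s can be combined in a single call.

```python
import ntpath  # Windows path rules, regardless of where the script is written


def build_config_commands(product_dir: str,
                          host_address: str = None,
                          https_port: int = None) -> list:
    """Return one AemCtl.exe argument list per requested setting.

    Hypothetical helper: nothing is executed here.
    """
    exe = ntpath.join(product_dir, "bin", "AemCtl.exe")
    commands = []
    if host_address is not None:
        if not host_address.strip():
            raise ValueError("hostAddress must not be empty")
        commands.append([exe, "configuration", "set", "-a", host_address])
    if https_port is not None:
        # Valid TCP port numbers range from 1 to 65535.
        if not 1 <= https_port <= 65535:
            raise ValueError(f"invalid port: {https_port}")
        commands.append([exe, "configuration", "set", "-s", str(https_port)])
    return commands


if __name__ == "__main__":
    for cmd in build_config_commands(r"C:\Program Files\Qlik\Enterprise Manager",
                                     host_address="em.example.com",
                                     https_port=8443):
        print(cmd)
```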

5.5 Replacing the Master User Password

Qlik goes to great lengths to ensure that sensitive information is protected from unauthorized parties, which is

why all passwords and other sensitive information are encrypted (and decrypted) using the Enterprise

Manager Master Key. Enterprise Manager uses only FIPS 140-2 compliant algorithms for hashing and

encryption: SHA256 for hashing and AES256 for encryption.

This topic provides an overview of how the Enterprise Manager Master Key is generated and applied as well as

explaining how to encrypt the User Permissions file.

The Master Key

The following section describes the role the master key and the master user key play in keeping your data

secure.

• Using the Enterprise Manager CLI, the user provides the system with an alphanumeric password (Master User Password), which must be at least 32 characters.

By default (i.e. after first-time installation), the Master User Password is randomly generated by Enterprise Manager. It is strongly recommended to change the Master User Password as soon as possible (especially in a production environment) as this will allow recovery of backed up data in the event of disk failure. The password should of course be kept in a secure location for future use.

You can either use your own password or run the genpassword utility to generate a password for you.

• Enterprise Manager uses a one-way hash function to generate a key (Master User Key) from the Master User Password.

The one-way hash function guarantees that, given the same Master User Password as input, the same Master User Key will be generated. This is what happens in high availability scenarios - the same Master User Password is entered on two different machines.

• Enterprise Manager encrypts the Master User Key using the local machine’s key (Windows) and stores it in a file named muk.dat. The muk.dat file can contain several entries (and indeed does in a high availability environment), with each entry uniquely identifying the machine on which Enterprise Manager is running.

• Enterprise Manager generates a random Master Key, encrypts it with the Master User Key and stores it in a common location (e.g. the root repository).

• On software startup, the following occurs:

    • The Master User Key is read and decrypted (using the local machine’s key)

    • The Master Key is read and decrypted using the Master User Key

Once this process is complete, Enterprise Manager is able to encrypt and decrypt all sensitive information.
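The deterministic relationship between the Master User Password and the Master User Key can be illustrated with a short Python sketch. This is a simplified model, not the product's implementation: it assumes a plain SHA-256 digest of the password (the exact construction Enterprise Manager uses, such as any salting or iteration, is not described here), all function names are hypothetical, and the encryption steps (machine key, AES256) are left out.

```python
import hashlib
import secrets
import string

MIN_PASSWORD_LENGTH = 32  # minimum length stated for the Master User Password


def random_alphanumeric_password(length: int = MIN_PASSWORD_LENGTH) -> str:
    """Hypothetical stand-in for a password generator; not the genpassword utility."""
    alphabet = string.ascii_letters + string.digits
    return "".join(secrets.choice(alphabet) for _ in range(length))


def derive_master_user_key(master_user_password: str) -> bytes:
    """Derive a 32-byte key from the password with a one-way hash.

    Assumption: a bare SHA-256 digest stands in for the real derivation.
    """
    pw = master_user_password
    if len(pw) < MIN_PASSWORD_LENGTH or not (pw.isascii() and pw.isalnum()):
        raise ValueError("password must be alphanumeric and at least 32 characters")
    return hashlib.sha256(pw.encode("utf-8")).digest()


def generate_master_key() -> bytes:
    """Generate a random 256-bit key, analogous to the random Master Key."""
    return secrets.token_bytes(32)


if __name__ == "__main__":
    password = random_alphanumeric_password()
    key_on_machine_a = derive_master_user_key(password)
    key_on_machine_b = derive_master_user_key(password)
    # The same password yields the same key on both machines.
    print(key_on_machine_a == key_on_machine_b)
```

Because the key is derived rather than stored in clear text, entering the same Master User Password on a second machine is sufficient to reproduce the Master User Key there.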

For more information on commands related to the master user password, see Commands Related to the

Master User Password.

High Availability mode

In a High Availability environment, Enterprise Manager runs on at least two machines that share the same

data folder. As there are two machines, there will also be two different keys - one for each machine.

As mentioned earlier, the Master User Key file (muk.dat) is constructed of entries, with each entry

corresponding to one machine. Both machines scan the entries searching for an entry they can decrypt.

If no matching entry can be found, an error will be returned. In this case, simply use the Enterprise Manager

CLI to enter the Master User Password again, and create an entry for the new machine.

See also Installing Qlik Enterprise Manager in a Windows cluster (page 327).

5.6 Encrypting the user permissions file

User permissions are stored in the following repository file:

<product_dir>\Data\cfgrepo.sqlite