QLIK.COM

WHITE PAPER

Delivering SAP data to Snowflake with Qlik

Exploring the comprehensive options to deliver and transform SAP data in Snowflake using Qlik | Talend.

Dave Freriks – Technology Evangelist, Partner Engineering, Qlik

David Richert – Principal Data Platform Architect, Field CTO, Snowflake

Delivering SAP Data to Snowflake with Qlik


INTRODUCTION

SAP Source Connectivity

ECC on DB, ECC on HANA, S/4 HANA

SAP BW and S/4 HANA BW

SAP HANA (db)

SAP Extraction Methodology

Change Data Capture (CDC)

Batch processing

Streaming

SAP Protocol Connectivity

SAP Operational Data Provisioning (ODP)

SAP Business Application Programming Interfaces (BAPIs)

REST APIs (Representational State Transfer Application Programming Interfaces)

Qlik | Talend Delivery Technology for SAP

Qlik Cloud Data Integration

Qlik Replicate

Talend Studio

Make SAP Data More Reliable

Reverse ETL and Writeback

Qlik SAP Solution Accelerators: Prebuilt SAP Content for Snowflake

Summary


SUMMARY

• Discuss options and scenarios around SAP data integration into Snowflake.

• Cover Qlik | Talend methods and capabilities for SAP technical integration in detail.

• Recommend approaches based on the use cases and personas involved in data integration scenarios with SAP data.

INTRODUCTION

Qlik is a Snowflake Elite partner with Snowflake Ready Validation for data integration and analytics solutions. Qlik holds Snowflake Industry Competencies for Manufacturing and Industrial, Retail and Consumer Goods, Financial Services, Healthcare and Life Sciences, and Technology.

Qlik provides an end-to-end solution for analytics in the Snowflake Data Cloud. It extracts relevant data from SAP, ingests it into Snowflake in real time, and transforms it into analytics-ready data. The solution automates the design, implementation, and updating of data models while minimizing the manual, error-prone processes of data modeling, ETL coding, and scripting. With our 2023 acquisition of Talend, we are adding Talend's leading data transformation, quality, and governance capabilities. Qlik's capabilities complement SAP systems by providing a user-friendly interface for data integration and data movement.

Snowflake Data Cloud offers a highly scalable, elastic architecture for storing and analyzing large volumes of data. It is designed to handle structured and semi-structured data and supports SQL-, Python-, Scala-, and Java-based transformation and querying for data analysis. Snowflake provides features like data sharing, data security, zero-copy cloning, Time Travel, recursive common table expressions, and near-real-time data processing. Once the data is in Snowflake Data Cloud, organizations can enrich it with data from other sources (for example sensors, social feeds, shop floor devices, service documents, or more than one thousand data providers in the Snowflake Marketplace) and perform complex queries and analyses on their SAP data, leveraging Snowflake's processing power and scalability. Large language models (LLMs) can run safely and securely within the Snowflake Data Cloud and can significantly augment the end-user experience while protecting company data. Snowflake's time series forecasting is also worth trying. Many companies publish data applications on Snowflake that can be made available to all other Snowflake customers worldwide.

In short, using Qlik to integrate SAP and Snowflake can bring significant benefits to organizations by enabling better data integration, transformation, and management. The combined use of Qlik and Snowflake can help businesses unlock insights from their SAP data and drive more informed decision-making processes.

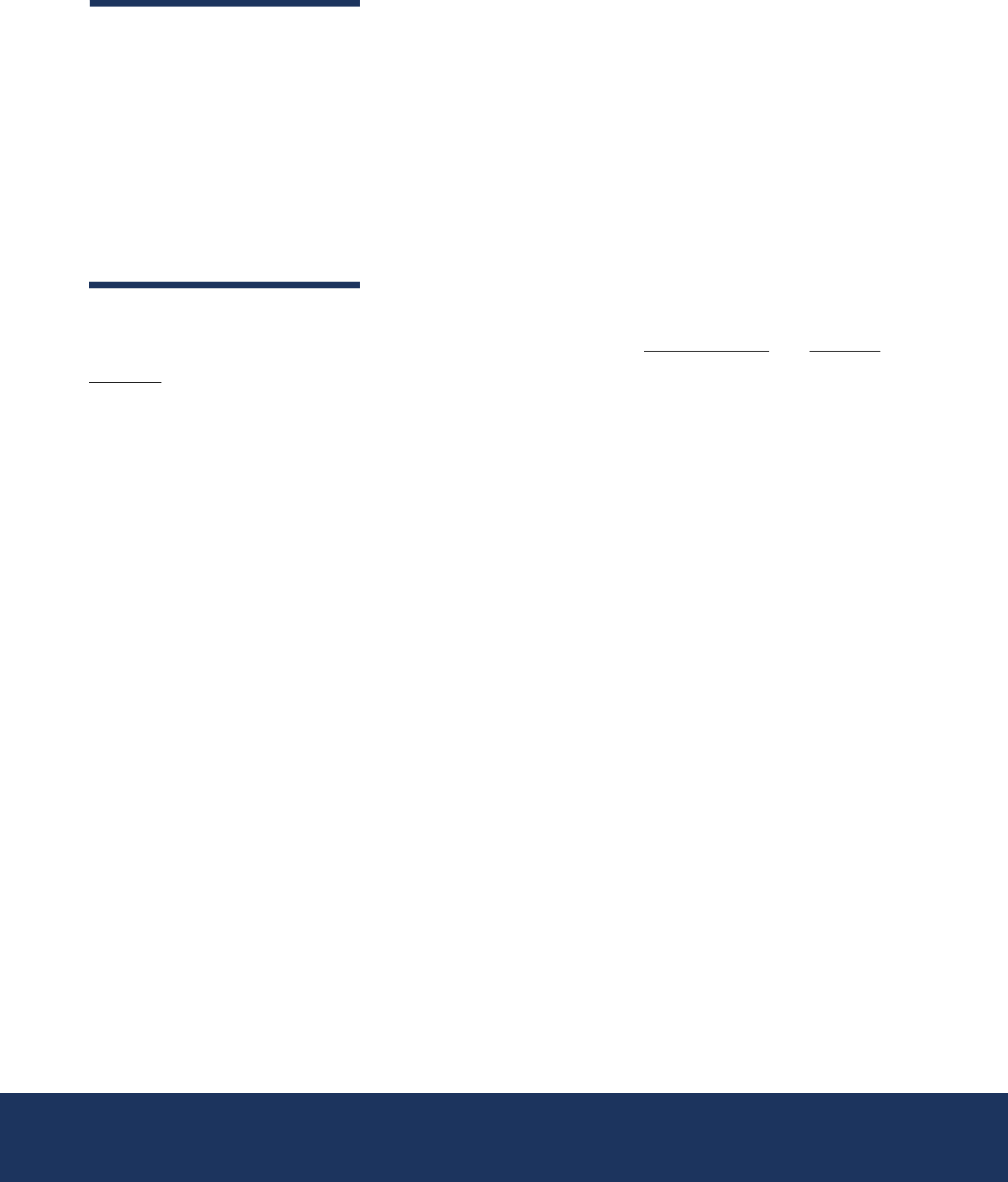

SAP Source Connectivity

Figure 1 - SAP Connectivity Options

First, let's discuss the section boxed in red on the left side of the diagram.

This diagram illustrates a combination of SAP backend systems, data integration methods, SAP connection methods, and Qlik | Talend outputs to Snowflake. Each of these will be discussed in much more detail. As an example, one path could be ECC on HANA -> using CDC -> via ODP for Extractors -> into Snowflake via Qlik Cloud Data Integration (Figure 2).

Figure 2 – Example Integration

ECC on DB, ECC on HANA, S/4 HANA

Whether the customer has ECC on DB, ECC on HANA, S/4HANA, or S/4HANA Cloud, there are capabilities available for extracting data from any of these systems. The choice of option may come down to configuration or to the licensing situation with SAP (e.g., runtime vs. enterprise licensing), and not all options may be available. Let's look at the major SAP components used for data extraction. Qlik | Talend now supports a wide range of SAP backend components and methods that encompass the major data sources inside SAP.

SAP BW and S/4 HANA BW

Although SAP BW and S/4 HANA BW are used less frequently as time goes on, some customers still have data they would like to move out of them. Qlik | Talend now provides a wide array of options for moving data out of these complex systems. Qlik Analytics also provides dashboards and visualizations using connectors like BICS for smaller datasets like Infoqueries. For larger data objects like DSOs or InfoCubes, however, Qlik | Talend can extract the data from those objects directly and load it into Snowflake.

SAP HANA (db)

A final scenario is when SAP HANA is used as a standalone database. While uncommon, it can still be used as a data source via ODBC drivers for Qlik and JDBC drivers for Talend.

SAP Extraction Methodology

Having covered the objects we can connect to inside SAP, we now need to cover the methods for moving data from SAP into Snowflake: Change Data Capture (CDC), batch processing, and streaming.

Figure 3 - Extraction Methods

Change Data Capture (CDC) is a technique used in Qlik to identify and capture changes made to data in a source system. The purpose of CDC is to track modifications, additions, and deletions so that these changes can be propagated efficiently and accurately to target systems or data consumers. CDC systems monitor and capture changes at the database level, often by reading the transaction logs or replication mechanisms of the source database. This approach allows CDC to capture changes in near-real time without putting excessive strain on the source system's performance.

CDC is valuable in scenarios where timely and accurate data propagation is crucial, such as data warehousing, data synchronization between systems, real-time analytics, and keeping data consistent across applications. It helps organizations avoid resource-intensive full data loads by focusing only on the changed data. This can improve efficiency, lower latency, and reduce network bandwidth requirements.
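The target side of CDC can be made concrete with a small sketch. Here an ordered stream of insert, update, and delete events is applied to a table keyed by primary key, so only the changed rows are touched. This is a minimal Python sketch under stated assumptions. The `Change` record, the `apply_changes` helper, and the I/U/D operation codes are hypothetical illustrations, not part of any Qlik product. Real CDC tools read these events from the source database's transaction log.

```python
from dataclasses import dataclass, field
from typing import Any


@dataclass
class Change:
    op: str                     # hypothetical codes: "I" = insert, "U" = update, "D" = delete
    key: tuple                  # primary-key values, e.g. (MANDT, KUNNR)
    row: dict[str, Any] = field(default_factory=dict)  # after-image; empty for deletes


def apply_changes(target: dict[tuple, dict[str, Any]], changes: list[Change]) -> None:
    """Apply captured changes, in commit order, to a target keyed by primary key."""
    for change in changes:
        if change.op in ("I", "U"):
            # Upsert: an update for a key the target has not seen is stored like an insert.
            target[change.key] = dict(change.row)
        elif change.op == "D":
            # Deleting a key that is already absent is a harmless no-op.
            target.pop(change.key, None)
        else:
            raise ValueError(f"unknown operation {change.op!r}")


# Hypothetical KNA1-style rows keyed by (client, customer number).
target = {("100", "0000001000"): {"NAME1": "Acme"}}
apply_changes(target, [
    Change("U", ("100", "0000001000"), {"NAME1": "Acme GmbH"}),
    Change("I", ("100", "0000001001"), {"NAME1": "Globex"}),
    Change("D", ("100", "0000001000")),
])
print(target)  # {('100', '0000001001'): {'NAME1': 'Globex'}}
```

Only three rows move in this example, however large the table is. This is the efficiency argument for CDC over full reloads.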

Batch processing moves and processes data in discrete chunks, or batches, as opposed to processing it in real time or on a continuous basis. In this approach, data is collected, processed, and transferred at predetermined intervals or time windows rather than immediately as it is generated or modified. Batch processing is often used when real-time updates are not critical and data can be aggregated and processed in larger chunks to improve efficiency.

While batch processing doesn't provide real-time insights, it can be more efficient and scalable for use cases that don't require immediate data updates.
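The following minimal Python sketch illustrates the batch pattern. A single scheduled run pulls all rows from a source and hands them to a loader in fixed-size chunks, for example one bulk insert per chunk. The `extract` and `load` callables and the default chunk size are assumptions for illustration. They do not represent a Qlik or Talend API.

```python
from collections.abc import Callable, Iterable, Iterator
from itertools import islice


def batches(rows: Iterable[dict], size: int) -> Iterator[list[dict]]:
    """Yield rows in fixed-size chunks; the final chunk may be smaller."""
    if size < 1:
        raise ValueError("size must be at least 1")
    it = iter(rows)
    while chunk := list(islice(it, size)):
        yield chunk


def run_batch_job(extract: Callable[[], Iterable[dict]],
                  load: Callable[[list[dict]], None], size: int = 10_000) -> int:
    """One scheduled run: pull everything from `extract`, hand it to `load` chunk by chunk."""
    loaded = 0
    for chunk in batches(extract(), size):
        load(chunk)              # e.g. one bulk insert or file upload per chunk
        loaded += len(chunk)
    return loaded


received: list[list[dict]] = []
count = run_batch_job(lambda: ({"id": i} for i in range(25)), received.append, size=10)
print(count, [len(c) for c in received])  # 25 [10, 10, 5]
```

Larger chunks mean fewer round trips to the target, at the cost of more memory per run. That trade-off is why batch suits workloads without tight latency needs.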

Streaming is the process of continuously moving, processing, and analyzing data in real time as it is generated or updated. Unlike batch processing, which handles data in discrete chunks at scheduled intervals, streaming data movement processes and acts on data as it flows in a continuous stream.

Streaming data movement is especially valuable in use cases where prompt action or response is necessary, and where analyzing data as it is generated can provide a competitive advantage, improve operational efficiency, or enhance customer experiences.
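The contrast with batch can be shown with a minimal Python sketch. Each event is handled the moment it arrives, and an action fires as soon as a condition is met rather than at the next scheduled window. The order events, the `amount` field, and the threshold rule are hypothetical. A real stream would come from a message queue or CDC feed rather than a generator.

```python
from collections.abc import Callable, Iterable


def process_stream(events: Iterable[dict], threshold: float,
                   on_alert: Callable[[dict, float], None]) -> float:
    """Handle each event as it arrives, keeping a running total and
    alerting once, at the moment the total first crosses the threshold."""
    total = 0.0
    alerted = False
    for event in events:                 # events may be an unbounded generator
        total += event["amount"]
        if not alerted and total >= threshold:
            on_alert(event, total)       # act immediately, not at the next batch window
            alerted = True
    return total


alerts: list[tuple[int, float]] = []
final = process_stream(
    ({"order": n, "amount": 400.0} for n in range(1, 6)),
    threshold=1000.0,
    on_alert=lambda e, t: alerts.append((e["order"], t)),
)
print(alerts, final)  # [(3, 1200.0)] 2000.0
```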

When we compare these options across Qlik | Talend, we see that all of these data movement methods, or a mix of them, are supported. For example, our SAP Accelerators for Qlik SaaS (covered later) use a mix of CDC and batch options in the same process.


SAP Protocol Connectivity

Now let's review the protocols used to extract data from SAP backend components: Operational Data Provisioning (ODP), BAPI connections, and REST.

Figure 4 - SAP Protocol Options

SAP Operational Data Provisioning (ODP)

ODP is a framework and technology provided by SAP to enable efficient and reliable extraction, transformation, and loading (ETL) of data from various source systems into SAP's data warehousing and analytics solutions. ODP focuses on integrating data from both SAP and non-SAP source systems into SAP's data warehousing platforms, such as SAP BW (Business Warehouse) and SAP HANA.

Key features and concepts associated with SAP ODP include:

1. Data Extraction and Replication: ODP provides a standardized way to extract data from source systems, whether they are SAP applications or third-party systems. It supports different extraction types, such as full extraction for initial loads, delta extraction for incremental updates, and change data capture (CDC) for real-time updates.

2. Extraction Modes: ODP supports various extraction modes, such as ODP-BW (for integration with SAP BW) and ODP-SLT (for integration with SAP Landscape Transformation Replication Server). SAP Extractors, HANA InfoViews, and CDS Views are also supported. These modes offer different ways to replicate data, each tailored to specific scenarios and requirements.

3. Delta Handling: One of ODP's strengths is its ability to handle incremental data updates efficiently. It tracks changes in source systems and extracts only the records that are new or modified since the last extraction, reducing data transfer volume and improving overall performance.

4. Metadata Management: ODP maintains metadata about the extracted data, such as source system details, extraction methods, and data transformations. This metadata helps track data lineage and understand the data's context.

5. Real-time and Batch Processing: ODP supports both real-time and batch data replication scenarios. Real-time replication ensures that changes made in source systems are quickly reflected in the target data warehouse, enabling near-real-time reporting and analytics.

6. Extended SAP HANA Integration: ODP plays a key role in integrating data into SAP HANA, SAP's in-memory database platform. This integration is important for high-speed analytics and for processing large volumes of data.

Overall, SAP ODP simplifies and standardizes the process of moving and integrating data from various source systems into SAP's data warehousing solutions, enabling organizations to have accurate, timely, and comprehensive insights for making informed business decisions. It promotes data consistency, reduces data transfer overhead, and enhances data quality through various transformation capabilities.
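The delta handling described in point 3 can be illustrated with a toy model. Each subscriber (for example, a Snowflake load) has a confirmed pointer into a queue of changes. A fetch returns everything after that pointer, and the pointer advances only when the subscriber confirms a successful load. An unconfirmed request is therefore delivered again from the same starting point. This minimal Python sketch is only loosely modeled on the ODP delta queue concept. The `DeltaQueue` class and its methods are hypothetical and are not SAP's API.

```python
from dataclasses import dataclass, field


@dataclass
class DeltaQueue:
    """Toy delta queue with per-subscriber pointers (hypothetical, not SAP's API)."""
    records: list[dict] = field(default_factory=list)
    confirmed: dict[str, int] = field(default_factory=dict)  # subscriber -> records safely loaded
    pending: dict[str, int] = field(default_factory=dict)    # subscriber -> end of open request

    def publish(self, record: dict) -> None:
        self.records.append(record)

    def fetch(self, subscriber: str) -> list[dict]:
        # The first fetch acts as the initial full load. Later fetches start at the
        # last *confirmed* position, so an unconfirmed request is repeated
        # (plus anything published since).
        start = self.confirmed.get(subscriber, 0)
        end = len(self.records)
        self.pending[subscriber] = end
        return self.records[start:end]

    def confirm(self, subscriber: str) -> None:
        # Call only after the target has durably loaded the fetched records;
        # raises KeyError if there is no open request to confirm.
        self.confirmed[subscriber] = self.pending.pop(subscriber)


q = DeltaQueue()
for n in (1, 2, 3):
    q.publish({"doc": n})
first = q.fetch("snowflake")   # initial load: docs 1-3
q.confirm("snowflake")
q.publish({"doc": 4})
delta = q.fetch("snowflake")   # delta: doc 4 only
again = q.fetch("snowflake")   # previous load not confirmed: doc 4 is delivered again
q.confirm("snowflake")
```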

SAP Business Application Programming Interfaces (BAPIs)

BAPIs provide a standardized and efficient way to connect external applications with SAP systems. They enable seamless integration between SAP systems and other software components, allowing for data exchange, process automation, and interaction with SAP's business logic.

Here's how SAP BAPI connectivity works:

1. Standardized Interface: BAPIs are predefined methods or functions provided by SAP that offer a consistent interface for communication. They encapsulate specific business processes or operations within the SAP system, such as creating a sales order, posting a goods receipt, or retrieving vendor information.

2. Remote Function Calls (RFC): BAPIs are implemented as remote-enabled function modules in the SAP system. External applications can call them remotely using communication protocols such as Remote Function Call (RFC) or SOAP (Simple Object Access Protocol).

3. Communication: To connect to an SAP system, external applications use communication mechanisms such as RFC or web services. RFC is SAP's set of communication protocols for exchanging data between systems.

4. Extensibility: Organizations sometimes need to enhance or customize existing BAPIs to meet unique business requirements. SAP allows custom BAPIs to be created and standard BAPIs to be enhanced using the appropriate tools. Qlik and Talend each have their own BAPI transports that are installed inside SAP to manage data extraction.

Overall, SAP BAPI connectivity is a robust way to integrate external applications with SAP systems. It enables businesses to streamline processes, reduce manual data entry, and exchange data seamlessly between disparate systems. BAPIs are fully supported by Qlik | Talend technologies.
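Qlik and Talend extract data through their own installed transports, so the following is not necessarily how those products work. It is a minimal Python sketch of the underlying RFC call pattern, built around SAP's generic remote-enabled function module RFC_READ_TABLE (strictly a function module rather than a BAPI). The sketch passes a table name, a field list, and a WHERE clause, then parses the delimited rows that come back. The RFC call is injected as a parameter so the example runs without an SAP system. `read_table` and `fake_call` are hypothetical helpers.

```python
from collections.abc import Callable


def read_table(call: Callable[..., dict], table: str, fields: list[str],
               where: str = "", max_rows: int = 0, delimiter: str = "|") -> list[dict]:
    """Read rows from an SAP table via the RFC_READ_TABLE function module.

    `call` must have the shape call(function_name, **parameters) -> dict,
    which is the shape of pyrfc's Connection.call.
    """
    result = call(
        "RFC_READ_TABLE",
        QUERY_TABLE=table,
        DELIMITER=delimiter,
        ROWCOUNT=max_rows,                               # 0 means no row limit
        FIELDS=[{"FIELDNAME": f} for f in fields],
        # WHERE clause; each OPTIONS line is length-limited, so long clauses need several lines.
        OPTIONS=[{"TEXT": where}] if where else [],
    )
    names = [f["FIELDNAME"] for f in result["FIELDS"]]
    # Each DATA row arrives as one fixed-width string (WA) with fields separated by the delimiter.
    return [dict(zip(names, (v.strip() for v in row["WA"].split(delimiter))))
            for row in result["DATA"]]


calls: list[dict] = []


def fake_call(name: str, **params) -> dict:
    """Stand-in for an RFC connection so the sketch runs without SAP."""
    assert name == "RFC_READ_TABLE"
    calls.append(params)
    return {"FIELDS": [{"FIELDNAME": "KUNNR"}, {"FIELDNAME": "LAND1"}],
            "DATA": [{"WA": "0000001000|DE "}, {"WA": "0000001001|US "}]}


rows = read_table(fake_call, "KNA1", ["KUNNR", "LAND1"], where="LAND1 IN ('DE', 'US')")
print(rows)
```

Against a real system, the bound method of a PyRFC `Connection` (created with connection parameters such as `ashost`, `sysnr`, `client`, `user`, and `passwd`) could be passed as `call`. This assumes the SAP NW RFC SDK and PyRFC are installed.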

REST APIs (Representational State Transfer Application Programming Interfaces)

REST APIs allow external applications to communicate with and retrieve data from SAP systems. Qlik | Talend solutions support three options for SAP REST connectivity.

1. SAP REST API: The Representational State Transfer (REST) API is an architectural style that uses HTTP methods and standard web protocols to interact with resources. In the context of SAP, REST APIs provide a way to access and manipulate data stored in SAP systems. SAP offers REST APIs for various modules such as SAP Business Suite, SAP S/4HANA, SAP SuccessFactors, etc. These APIs allow third-party applications to perform CRUD (Create, Read, Update, Delete) operations on SAP data. The data is usually exchanged in JSON or XML formats, making it easily consumable by a wide range of programming languages and platforms.

2. OData (Open Data Protocol): OData is a standardized protocol that builds on the principles of REST. It provides a set of conventions for building and consuming REST APIs, and it enables querying and manipulating data using a URL-based syntax. SAP supports OData services, which expose a consistent way to interact with SAP data entities. OData services offer features like filtering, sorting, and paging, which makes OData a popular choice for third parties looking to extract data from SAP systems. OData services typically deliver data in JSON or XML formats.

3. SAP SOAP Web Services: Simple Object Access Protocol (SOAP) is a protocol that uses XML for communication between applications over a network. SAP supports SOAP-based web services, which allow third-party applications to communicate with SAP systems. These web services can be used for various purposes, including data extraction. SOAP web services provide a standardized way to define operations, inputs, outputs, and data types using Web Services Description Language (WSDL). SOAP is considered more heavyweight than REST and OData, but it offers strong security features and well-defined communication patterns.
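OData's URL-based querying and paging can be shown in a minimal Python sketch. The function builds `$select`, `$filter`, `$top`, and `$skip` query options and walks an entity set page by page until a short page signals the end. It assumes an OData V2 service returning JSON, where rows are wrapped in `d.results`. The service URL `ZCUSTOMER_SRV`, the entity set `CustomerSet`, and its fields are hypothetical. The HTTP call is injected so the example runs offline.

```python
from collections.abc import Callable, Iterator
from urllib.parse import quote, urlencode


def odata_rows(fetch: Callable[[str], dict], service_url: str, entity_set: str,
               select: list[str], filter_expr: str = "", page_size: int = 1000) -> Iterator[dict]:
    """Page through an OData V2 entity set with $top/$skip and yield each row."""
    skip = 0
    while True:
        params = {"$select": ",".join(select), "$top": page_size,
                  "$skip": skip, "$format": "json"}
        if filter_expr:
            params["$filter"] = filter_expr
        # Keep '$', ',' and quotes readable; spaces become %20.
        url = f"{service_url}/{entity_set}?" + urlencode(params, quote_via=quote, safe="$,'")
        page = fetch(url)["d"]["results"]    # OData V2 JSON wraps rows in d.results
        yield from page
        if len(page) < page_size:            # a short page means the end was reached
            return
        skip += page_size


CUSTOMERS = [{"Customer": str(n), "Country": "DE"} for n in range(5)]
requested: list[str] = []


def fake_fetch(url: str) -> dict:
    """Stand-in for an HTTP GET returning parsed JSON (hypothetical data)."""
    requested.append(url)
    skip = int(url.split("$skip=")[1].split("&")[0])
    top = int(url.split("$top=")[1].split("&")[0])
    return {"d": {"results": CUSTOMERS[skip:skip + top]}}


rows = list(odata_rows(fake_fetch, "https://sap.example.com/sap/opu/odata/sap/ZCUSTOMER_SRV",
                       "CustomerSet", ["Customer", "Country"], "Country eq 'DE'", page_size=2))
```

Each page is a separate full request, with no change tracking between them. This illustrates why REST-style extraction is batch only and slower than log-based methods.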

It is worth noting that REST connections are the slowest and least effective methods for extracting data from SAP. However, they will work on any version of SAP (ECC, HANA, etc.). REST-based protocols DO NOT support change data capture and are batch only.

Qlik | Talend Delivery Technology for SAP

Now that we have mapped the SAP backend sources, data movement methods, and SAP protocols, it's time to discuss how we use the various components of the Qlik | Talend portfolio to perform the data integrations. We will cover three solutions: Qlik Cloud Data Integration, Qlik Replicate, and Talend Studio.

Figure 5 – Qlik | Talend solutions for SAP


Qlik Cloud Data Integration

Qlik Cloud Data Integration is a cloud-based data integration platform focused on efficient, real-time data integration and replication. When integrating with SAP systems, Qlik Cloud Data Integration offers the following capabilities:

• Real-time Data Replication: Qlik Cloud Data Integration can replicate data from SAP systems in real time to target systems like data warehouses, data lakes, or cloud storage platforms. This gives organizations up-to-date, synchronized data across their landscape.

• Change Data Capture (CDC): The platform supports CDC mechanisms that capture and replicate only the changed data, reducing overhead on the source system and optimizing the replication process.

• Data Transformation: Qlik Cloud Data Integration provides tools to transform data during replication, ensuring that data is cleansed, enriched, and properly formatted as it moves from SAP to other systems.

• Automated Workflows: The platform offers automation features for creating end-to-end data integration workflows, enabling organizations to schedule, monitor, and manage data replication processes efficiently.

• Data Warehouse Automation: Beyond data extraction, the platform extends to data warehouse management and to data mart creation and management on a data platform such as Snowflake.

Qlik Replicate

Qlik Replicate is an enterprise-level data replication and integration solution designed to enable real-time data delivery, migration, and synchronization across various data sources, including SAP systems. Its capabilities for integrating with SAP include:

• SAP-specific Adapters: Qlik Replicate offers specialized adapters for SAP applications, ensuring seamless connectivity and integration with SAP systems without the need for complex custom coding.

• High-Performance Data Movement: Qlik Replicate employs log-based CDC technology to capture and replicate changes in real time, minimizing the impact on source systems while delivering near-instantaneous updates to target systems.

• Automatic Schema Evolution: Qlik Replicate can handle schema changes in source systems, adapting to modifications in SAP data structures without disrupting the replication process.

• Security and Compliance: The platform supports data encryption, masking, and data lineage features to maintain data security and compliance while transferring sensitive data from SAP systems.
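One narrow slice of automatic schema evolution is adding new source columns to the target. The following minimal Python sketch compares a source column list with the target's and emits Snowflake `ALTER TABLE ... ADD COLUMN` statements for anything missing. It is an illustration only, not how Qlik Replicate implements schema evolution. The helper name, the column types, and the `ZZREGION` field (a hypothetical customer Z-field appended to KNA1) are assumptions.

```python
def schema_evolution_ddl(table: str, source_cols: dict[str, str],
                         target_cols: dict[str, str]) -> list[str]:
    """Return Snowflake ALTER TABLE statements for source columns the target lacks.

    Only added columns are handled; dropped, renamed or retyped columns are
    left for manual review in this sketch.
    """
    return [
        f"ALTER TABLE {table} ADD COLUMN {name} {sql_type}"
        for name, sql_type in source_cols.items()
        if name not in target_cols
    ]


source_schema = {"MANDT": "VARCHAR(3)", "KUNNR": "VARCHAR(10)", "NAME1": "VARCHAR(35)",
                 "ZZREGION": "VARCHAR(10)"}   # hypothetical Z-field added in SAP
target_schema = {"MANDT": "VARCHAR(3)", "KUNNR": "VARCHAR(10)", "NAME1": "VARCHAR(35)"}
for stmt in schema_evolution_ddl("KNA1", source_schema, target_schema):
    print(stmt)  # ALTER TABLE KNA1 ADD COLUMN ZZREGION VARCHAR(10)
```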

Talend Studio

Talend Studio is an industry-leading data integration and ETL (Extract, Transform, Load) solution. When integrating with SAP systems, Talend Studio provides the following capabilities:

• SAP Connectors: Talend Studio offers pre-built connectors for SAP systems, enabling users to easily establish connections, extract data, and load it into various target systems.

• Data Transformation and Enrichment: Talend Studio provides a wide range of data transformation and enrichment options, allowing users to cleanse, transform, and enhance SAP data before loading it into other systems.

• Code Generation: Talend generates optimized code for data integration jobs, which can be executed on various platforms to move data efficiently from SAP to other destinations.

• Job Orchestration: The platform enables the creation of complex data integration workflows, including conditional logic and error handling, to ensure reliable data transfers from SAP systems.


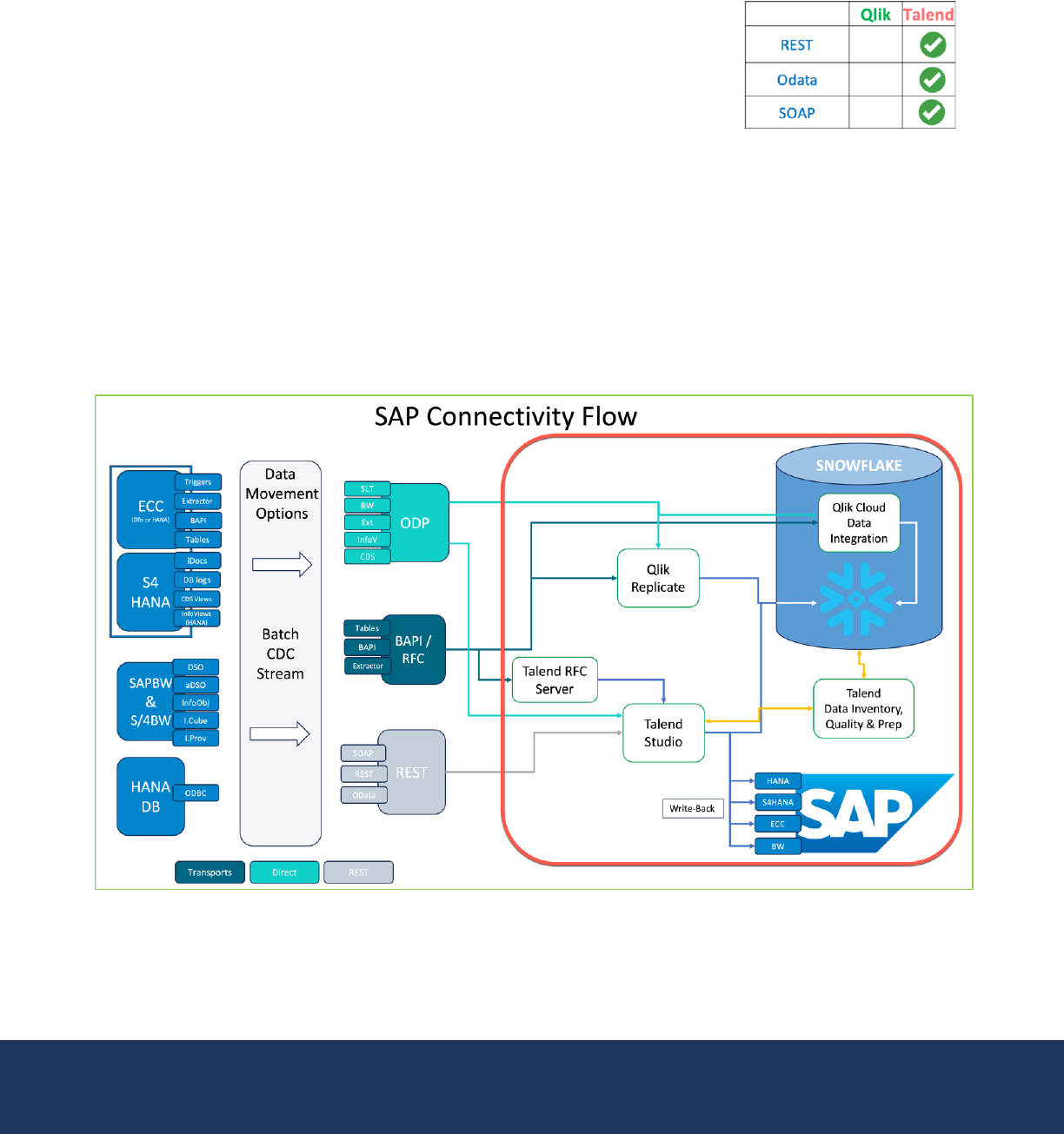

Note that there are some considerations for the different combinations of options.

READ FROM SAP: capability matrix by data movement method (CDC, Batch, Stream) for Talend, QCDI (Qlik Cloud Data Integration), and Qlik Replicate, with some QCDI capabilities marked "coming soon". Capabilities covered: read ECC (DB), ECC (HANA), and S/4HANA via ODP or BAPI; read BW and S/4 HANA BW via BAPI or ODP; read BW DSO/aDSO via BAPI or ODP; read BW InfoObjects, Providers, etc. (BAPI); read SAP BAPI objects; read SAP IDocs; read HANA DB (ODBC); read SAP tables (cluster, pool, transparent); read SAP Extractors (BAPI); read CDS Views (ODP); read SAP InfoViews (ODP); SLT support (ODP); leverage DB logs for CDC; REST support (SOAP, OData); RFC server required (BAPI); transports required (BAPI); can be automated by APIs (Application Automation).


Details for Talend's SAP connectivity can be found here: https://help.talend.com/r/en-US/8.0/sap/sap

Details for Qlik's SAP connectivity can be found here: https://help.qlik.com/en-US/replicate/May2023/Content/Replicate/Main/SAP/AR_SAP.htm

This matrix brings together the possible combinations from the previous three sections in a single chart. We can now approach any customer scenario with this guide, and it will help us choose the path(s) to accomplish the required tasks. Let's work through some examples:

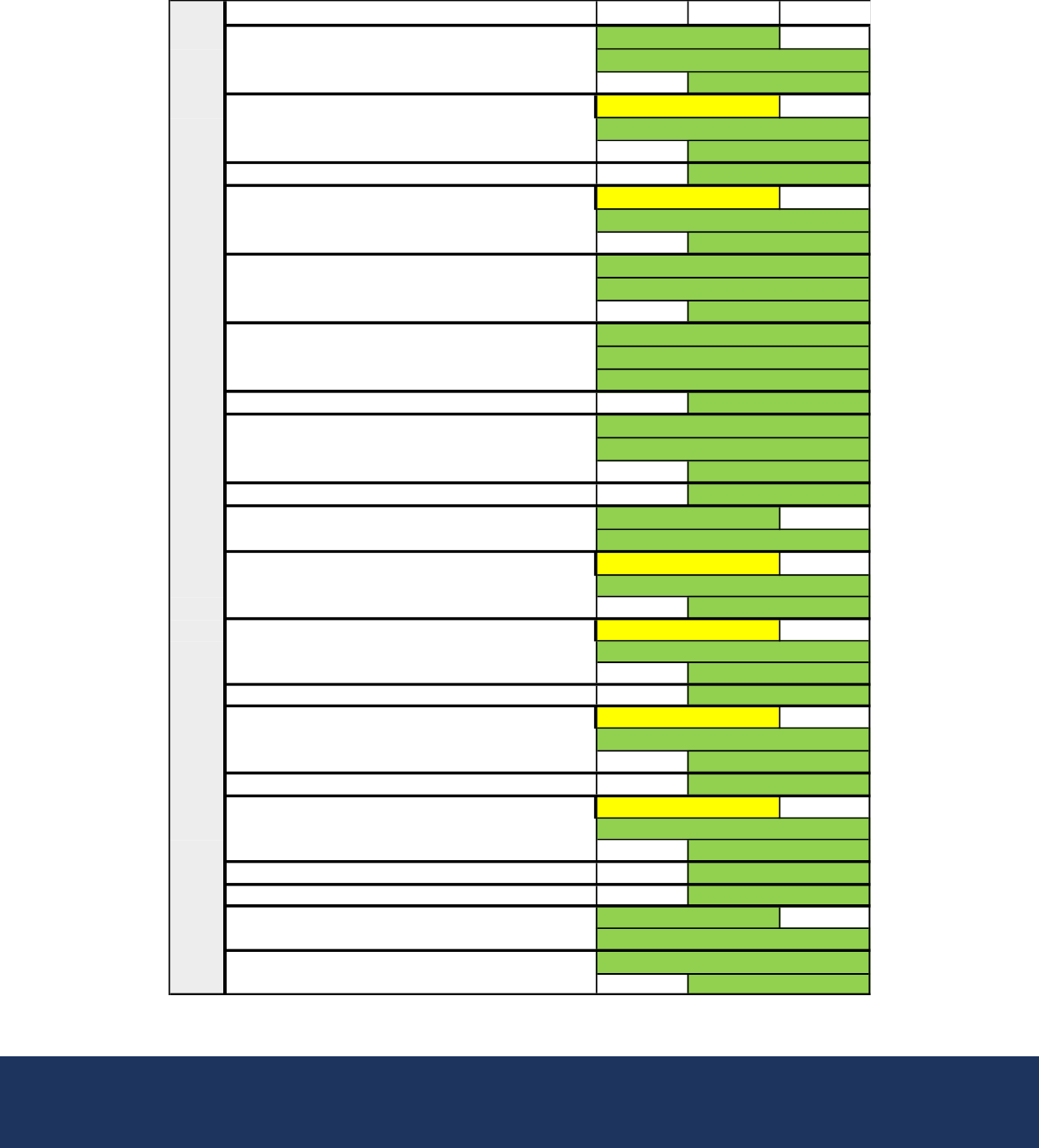

Scenario 1: A company wants to offload its on-premises SAP ECC (on DB2) table data (KNA1, MARA, VBAP, VBAK, etc.) into Snowflake and keep it synchronized.

Figure 6 - CDC Scenario Base Tables

Using Qlik Replicate, we would connect to SAP over a BAPI/RFC connection with the SAP transports installed, using DB log-based replication and change data capture to load data into Snowflake and keep it synchronized. This combination offers the highest throughput and provides real-time updates in Snowflake.
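Qlik Replicate applies changes to Snowflake itself, and the SQL it generates is not shown here. As an illustration of how a staged set of captured changes can be applied to a table such as KNA1 in one set-based statement, this minimal Python sketch builds a Snowflake `MERGE`. It assumes a hypothetical staging table holding at most one row per key (the latest change) and an `OP` column containing 'I', 'U', or 'D'. The table and column names are assumptions.

```python
def merge_sql(target: str, staging: str, keys: list[str], columns: list[str],
              op_column: str = "OP") -> str:
    """Build a Snowflake MERGE that applies one staged batch of CDC rows.

    Assumes the staging table holds at most one row per key (the latest
    change) plus an operation column containing 'I', 'U' or 'D'.
    """
    on = " AND ".join(f"t.{k} = s.{k}" for k in keys)
    updates = ", ".join(f"t.{c} = s.{c}" for c in columns if c not in keys)
    all_cols = ", ".join(columns)
    values = ", ".join(f"s.{c}" for c in columns)
    return (
        f"MERGE INTO {target} t USING {staging} s ON {on}\n"
        # Deletes must be checked before the unconditional update clause.
        f"WHEN MATCHED AND s.{op_column} = 'D' THEN DELETE\n"
        f"WHEN MATCHED THEN UPDATE SET {updates}\n"
        f"WHEN NOT MATCHED AND s.{op_column} <> 'D' THEN INSERT ({all_cols}) VALUES ({values})"
    )


# KNA1 is keyed by client (MANDT) and customer number (KUNNR).
sql = merge_sql("KNA1", "KNA1_CHANGES", ["MANDT", "KUNNR"],
                ["MANDT", "KUNNR", "NAME1", "LAND1"])
print(sql)
```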

WRITE TO SAP: capability matrix by data movement method (CDC, Batch, Stream); Talend is the only product listed. Capabilities covered: write HANA DB (ODBC); write SAP tables (cluster, pool, transparent); write SAP BAPI objects; write SAP IDocs; write BW DSO/aDSO (BAPI); write BW InfoObjects, Providers, etc. (ODP); reverse ETL support; RFC server required (BAPI); can be automated by APIs (Application Automation).


Scenario 2: An organization wants to automate processes related to internal SAP functions in S/4HANA. It would like to take the output from SAP, write it to Snowflake, and then monitor for certain changes. When such a change is detected, an automated email is sent to the appropriate people and other updates are triggered.

Figure 7 - SAP iDocs and Automation

The best way to address this scenario would be to use Talend Studio to read the IDocs from inside SAP via BAPI/RFC connections and write that information into Snowflake. Qlik Application Automation would then monitor the output and act on it, for example by sending an email triggered by the data.
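In this scenario, Qlik Application Automation performs the monitoring and sending. The following minimal Python sketch only shows the detect-and-notify logic on IDoc control rows landed in Snowflake. It builds one email per IDoc in error status 51 ("application document not posted") and routes it by message type. The choice of status 51 as the trigger, the recipient addresses, and the `build_alerts` helper are assumptions for illustration. Actually sending the messages (for example with `smtplib.SMTP.send_message`) is left out.

```python
from email.message import EmailMessage


def build_alerts(idoc_rows: list[dict], recipients: dict[str, str],
                 sender: str = "sap-monitor@example.com") -> list[EmailMessage]:
    """Turn IDoc status rows that need attention into notification emails."""
    messages = []
    for row in idoc_rows:
        if row["STATUS"] != "51":            # hypothetical rule: act only on status 51
            continue
        msg = EmailMessage()
        msg["From"] = sender
        # Route by message type, falling back to a default mailbox.
        msg["To"] = recipients.get(row["MESTYP"], recipients["default"])
        msg["Subject"] = f"IDoc {row['DOCNUM']} ({row['MESTYP']}) failed"
        msg.set_content(f"IDoc {row['DOCNUM']} reported status {row['STATUS']}. Please review.")
        messages.append(msg)
    return messages


alerts = build_alerts(
    [{"DOCNUM": "0000000000123456", "MESTYP": "ORDERS", "STATUS": "51"},
     {"DOCNUM": "0000000000123457", "MESTYP": "ORDERS", "STATUS": "53"}],
    {"ORDERS": "sales-ops@example.com", "default": "it-support@example.com"},
)
```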

Scenario 3: A large enterprise wants to extract more value from its SAP data in S/4HANA by moving it to a more flexible and cost-effective platform like Snowflake. It also wants to transform the data and shape it into analytics-ready content for users. In addition, the IT team would like to use SAP extractors: the company is abandoning SAP BW but wants to keep the logic it has already built. As an added complication, the organization has a runtime license for SAP S/4HANA.


Figure 8 - SAP Modernization for Analytics

The best option in this scenario would be to use Qlik Cloud Data Integration. The data would be fully lifted from the SAP system using SAP Extractors via ODP (which works with a runtime SAP license) and landed in Snowflake. Qlik then manages the data lake, data warehouse, and data marts automatically as the data is transformed into analytics-ready content. We will discuss an example of how this can be implemented later, in the SAP Accelerators section. Qlik Cloud Data Integration can also manage delta (CDC) enabled content mixed with batch (Trigger) content in the same project, providing a single point of management for all data required for analytics.

The combination of Qlik | Talend solutions can cover most, if not all, data scenarios related to SAP content. However, there are some interesting EXTENDED use cases that are unique to the Qlik | Talend portfolio, which we'll explore below.

Make SAP Data More Reliable

One of the biggest problems with any ERP like SAP is data reliability! There are often layers of data integrity issues, bad data, duplicates, and errors within areas like Customer Master, Material Master, etc.

Talend Cloud Data Fabric (https://www.talend.com/products/data-fabric/) is a managed cloud integration platform that makes it easy for developers and data constituents to collect, transform, and clean data. Components include:

• Talend Data Inventory, which provides automated tools for dataset documentation, quality proofing, and promotion. It identifies data silos across data sources and targets to provide visibility into reusable and shareable data assets. Talend Data Inventory uses Snowflake Snowpark to create the Talend Trust Score (https://www.talend.com/products/trust-score/).

• Talend Data Preparation (TDP), which allows customers to simplify and speed up the process of preparing data for analysis and other tasks. TDP allows customers to create, update, remove, and share datasets, then build preparations on top of those datasets that can be incorporated into Talend Jobs with Talend Studio.

• Talend Data Stewardship (TDS), which allows customers to collaboratively curate and validate data, resolve data conflicts, and address potential data integrity issues.

With these capabilities you can fix, monitor, and govern SAP data sourced directly through any of the methods above. Data can be fixed inline or after it has been landed in Snowflake.
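Data quality checks on a customer master can be illustrated with a minimal Python sketch. It scores customer rows on three simple dimensions (completeness, validity, and uniqueness) as fractions between 0 and 1. This is not the Talend Trust Score algorithm. The chosen KNA1 fields, the two-letter country-key rule, and the name-plus-country duplicate heuristic are all assumptions for illustration.

```python
import re
from collections import Counter

REQUIRED = ("KUNNR", "NAME1", "LAND1")   # assumed mandatory customer fields


def normalize_name(name: str) -> str:
    """Lower-case and collapse whitespace so 'ACME  gmbh' matches 'Acme GmbH'."""
    return " ".join(name.lower().split())


def customer_quality(rows: list[dict]) -> dict[str, float]:
    """Score customer-master rows on completeness, validity and uniqueness (0 to 1)."""
    n = len(rows) or 1
    complete = sum(all(r.get(f) for f in REQUIRED) for r in rows)
    # Validity here only means a two-letter upper-case country key such as "DE".
    valid = sum(bool(re.fullmatch(r"[A-Z]{2}", r.get("LAND1") or "")) for r in rows)
    # Rows sharing a normalized name and country are treated as duplicate candidates.
    keys = [(normalize_name(r.get("NAME1") or ""), r.get("LAND1")) for r in rows]
    counts = Counter(keys)
    unique = sum(1 for k in keys if counts[k] == 1)
    return {"completeness": complete / n, "validity": valid / n, "uniqueness": unique / n}


rows = [
    {"KUNNR": "1000", "NAME1": "Acme GmbH", "LAND1": "DE"},
    {"KUNNR": "1001", "NAME1": "ACME  gmbh", "LAND1": "DE"},   # likely duplicate
    {"KUNNR": "1002", "NAME1": "", "LAND1": "Germany"},        # incomplete and invalid
    {"KUNNR": "1003", "NAME1": "Globex", "LAND1": "US"},
]
print(customer_quality(rows))  # {'completeness': 0.75, 'validity': 0.75, 'uniqueness': 0.5}
```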

More details about how Data Fabric can benefit Snowflake customers can be found here: https://www.talend.com/partners/snowflake/

Snowflake customers can test the Talend Trust Score directly on their Snowflake instance via the Snowflake Partner Portal here: https://trial.snowflake.com/?owner=SPN-PID-149016


Reverse ETL and Writeback

Qlik products are very effective at moving data from SAP into Snowflake, but what about the reverse? Talend Studio allows for pushing (writing) data back into SAP or executing internal SAP jobs via IDocs. For example, SAP master data (like Customer Master) could be cleansed using the Talend Data Preparation engine, and the cleansed data could then be pushed back into SAP using Talend Studio.

| SAP Writeback Task | Description |
|---|---|
| tSAPADSOOutput | Writes data to an active Advanced Data Store Object (ADSO). |
| tSAPBapi | Extracts data from or loads data to an SAP server using multiple input/output parameters or the document type parameter. |
| tSAPCommit | Commits a global transaction in one go, using a unique connection, instead of committing on every row or every batch, which improves performance. |
| tSAPDataSourceOutput | Writes Data Source objects into an SAP BW Data Source system. |
| tSAPDSOOutput | Creates or updates DSO data in an SAP BW table. |
| tSAPHanaBulkExec | Improves performance when carrying out Insert operations on an SAP HANA database. |
| tSAPIDocOutput | Uploads an IDoc data set in XML format to an SAP system. |
| tSAPInfoObjectOutput | Writes InfoObject data into an SAP BW system. |
| tSAPRollback | Cancels the transaction commit in the connected SAP system. |
| tSAPHanaCommit | Commits a global transaction in one go, using a unique connection, instead of committing on every row or every batch, which improves performance. |
| tSAPHanaOutput | Executes the action defined on the table and/or on the data contained in the table, based on the flow coming from the preceding component in the Job. |
| tSAPHanaRollback | Prevents part of a transaction from being committed unintentionally. |
| tSAPHanaRow | Acts on the actual database structure or on the data (although without handling data). |

These capabilities make Qlik | Talend a unique offering in the SAP market, as we can push Snowflake data back into SAP!


Qlik SAP Solution Accelerators: Prebuilt SAP Content for Snowflake

The Qlik SAP Solution Accelerators are quick-start templates designed against a STANDARD SAP IDES install to demonstrate the art of the possible for extraction, transformation, and analytics using the Qlik Cloud SaaS solutions, which include both Qlik Cloud Data Integration and Qlik Cloud Analytics.

Figure 9 – Qlik SAP Solution Accelerators for Snowflake

The SAP Accelerators take raw SAP data from ECC or HANA and turn it into analytics-ready data that is presented in Qlik Cloud Analytics through prebuilt dashboards.

The process extracts the data required for three initial SAP use cases:

• Orders to Cash

• Inventory Management

• Financial Analytics

The extracted data is then transformed by the Qlik Cloud Data Integration engine into analytics-ready data marts. The process also translates SAP's unique shorthand codes, which are based on German and hard to read, into readable business terms. It also consolidates the dimensional data into reusable, shared assets for the various SAP fact data. The result is then cataloged and fed into the Qlik SaaS Analytics layer for consumption by business users.
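The code-to-business-term translation can be pictured as a glossary lookup applied to every column name. The following minimal Python sketch renames known SAP technical field names to readable labels and leaves unknown fields unchanged. The short glossary is a hypothetical excerpt, not the mapping shipped with the Accelerators. The field meanings themselves are standard SAP ones.

```python
# Hypothetical glossary excerpt; SAP field names abbreviate German terms.
FIELD_LABELS = {
    "KUNNR": "Customer Number",   # Kundennummer
    "MATNR": "Material Number",   # Materialnummer
    "VBELN": "Sales Document",    # Vertriebsbelegnummer
    "WERKS": "Plant",             # Werk
    "LAND1": "Country Key",       # Land
}


def to_business_terms(row: dict) -> dict:
    """Rename known SAP technical fields; keep unknown fields unchanged."""
    return {FIELD_LABELS.get(name, name): value for name, value in row.items()}


print(to_business_terms({"VBELN": "0000012345", "KUNNR": "0000001000", "ZZFLAG": "X"}))
# {'Sales Document': '0000012345', 'Customer Number': '0000001000', 'ZZFLAG': 'X'}
```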

Full details and a Snowflake version of the Qlik SAP Solution Accelerators can be found here:

https://github.com/Qlik-PE/Qlik_SaaS_SAP_Accelerators

A recent video of Snowflake, SAP and the Qlik SAP Solution Accelerators can be found here:

https://www.youtube.com/watch?v=NYJp1VsWNFQ

Summary

Qlik | Talend offer robust capabilities for integrating SAP data with Snowflake, enabling organizations to streamline data extraction, transformation, and visualization processes.

Qlik, historically known for its powerful data visualization and analytics platform, provides SAP users with the ability to connect seamlessly to SAP systems and extract data for analysis. Through Qlik's connectors and data modeling capabilities, organizations can efficiently transform SAP data into meaningful insights within Snowflake. Qlik's unique associative analytics engine further allows users to explore data relationships and uncover hidden patterns, facilitating informed decision-making. Additionally, Qlik's data integration with Snowflake keeps the data up to date and accessible for real-time analytics, enhancing the overall data-driven decision-making process.

Talend, a leading data integration and transformation solution, simplifies the process of extracting, transforming, and loading (ETL) SAP data into Snowflake. Talend's extensive library of connectors and pre-built transformations streamlines the data integration process, ensuring that SAP data is efficiently prepared and loaded into Snowflake's cloud data warehouse. Moreover, Talend offers data quality and governance features to maintain data accuracy and compliance, which is vital for SAP data in Snowflake.

Combined, the Qlik | Talend portfolio provides a comprehensive solution for SAP data integration and analytics within Snowflake, empowering organizations to unlock the full potential of all their data for informed decision-making and strategic insights.


© 2023 QlikTech International AB. All rights reserved. All company and/or product names may be trade names, trademarks and/or registered trademarks of the respective owners with which they are associated.

About Qlik

Qlik is the global leader in data integration, data quality, and analytics solutions. Its comprehensive cloud platform unifies data across cloud and hybrid environments, automates information pipelines and data-driven workflows, and augments insights with AI. Qlik enables users to make data more available and actionable for better, faster business outcomes. With more than 40,000 active customers in over 100 countries, Qlik is committed to providing powerful data solutions to meet the evolving needs of organizations worldwide.

qlik.com