KRISTOPHER GERARDI
Federal Reserve Bank of Atlanta

ANDREAS LEHNERT
Board of Governors of the Federal Reserve System

SHANE M. SHERLUND
Board of Governors of the Federal Reserve System

PAUL WILLEN
Federal Reserve Bank of Boston

Making Sense of the Subprime Crisis

ABSTRACT Should market participants have anticipated the large increase in home foreclosures in 2007 and 2008? Most of these foreclosures stemmed from mortgage loans originated in 2005 and 2006, raising suspicions that lenders originated many extremely risky loans during this period. We show that although these loans did carry extra risk factors, particularly increased leverage, reduced underwriting standards alone cannot explain the dramatic rise in foreclosures. We also investigate whether market participants underestimated the likelihood of a fall in home prices or the sensitivity of foreclosures to falling prices. We show that given available data, they should have understood that a significant price drop would raise foreclosures sharply, although loan-level (as opposed to ownership-level) models would have predicted a smaller rise than occurred. Analyst reports and other contemporary discussions reveal that analysts generally understood that falling prices would have disastrous consequences but assigned that outcome a low probability.

Had market participants anticipated the increase in defaults on subprime mortgages originated in 2005 and 2006, the nature and extent of the current financial market disruptions would be very different. Ex ante, investors in subprime mortgage-backed securities (MBSs) would have demanded higher returns and greater capital cushions. As a result, borrowers would not have found credit as cheap or as easy to obtain as it became during the subprime credit boom of those years. Rating agencies would have reacted similarly, rating a much smaller fraction of each deal investment grade. As a result, the subsequent increase in foreclosures would have been significantly smaller, with fewer attendant disruptions in the housing market, and investors would not have suffered such outsized, and unexpected, losses. To make sense of the subprime crisis, one needs to understand why, when accepting significant exposure to the creditworthiness of subprime borrowers, so many smart analysts, armed with advanced degrees, data on the past performance of subprime borrowers, and state-of-the-art modeling technology, did not anticipate that so many of the loans they were buying, either directly or indirectly, would go bad.

Our bottom line is that the problem largely had to do with expectations about home prices. Had investors known the future trajectory of home prices, they would have predicted large increases in delinquencies and defaults, and losses on subprime MBSs roughly consistent with what has occurred. We show this by using two different methods to travel back to 2005, when the subprime market was still thriving, and look forward from there. The first method is to forecast performance using only data available in 2005, and the second is to look at what market participants wrote at the time. The latter, “narrative” analysis provides strong evidence against the claim that investors lost money because they purchased loans that, because they were originated by others, could not be evaluated properly.

Our first order of business, however, is to address the more basic question of whether the subprime mortgages that defaulted were themselves unreasonable ex ante (an explanation commonly offered for the crisis). We show that the problem loans, most of which were originated in 2005 and 2006, were not that different from loans made earlier, which had performed well despite carrying a variety of serious risk factors. That said, we document that loans in the 2005–06 cohort were riskier, and we describe in detail the dimensions along which risk increased. In particular, we find that borrower leverage increased and, further, did so in a way that was relatively opaque to investors. However, we also find that the change in the mix of mortgages originated is too slight to explain the huge increase in defaults. Put simply, the average default rate on loans originated in 2006 exceeds the default rate on the riskiest category of loans originated in 2004.

We then turn to the role of the collapse in home price appreciation (HPA) that started in the spring of 2006.¹ To have invested large sums in subprime mortgages in 2005 and 2006, lenders must have expected either that HPA would remain high (or at least not collapse) or that subprime defaults would be insensitive to a big drop in HPA. More formally, letting f represent foreclosures, p prices, and t time, we can decompose the growth in foreclosures over time, df/dt, into a part corresponding to the sensitivity of foreclosures to price changes and a part reflecting the change in prices over time:

$$\frac{df}{dt} = \frac{df}{dp} \times \frac{dp}{dt}.$$

70 Brookings Papers on Economic Activity, Fall 2008

1. The relationship between foreclosures and HPA in the subprime crisis is well documented. See Gerardi, Shapiro, and Willen (2007), Mayer, Pence, and Sherlund (forthcoming), Demyanyk and van Hemert (2007), Doms, Furlong, and Krainer (2007), and Danis and Pennington-Cross (2005).

Our goal is to determine whether market participants underestimated df/dp, the sensitivity of foreclosures to price changes, or whether dp/dt, the trajectory of home prices, came out much worse than they expected.

Our first time-travel exercise, as mentioned, uses data on mortgage performance that were available to investors ex ante to determine whether it was possible at the time to estimate df/dp on subprime mortgages accurately. Because severe home price declines are relatively rare and the subprime market is relatively new, one plausible theory is that the data lacked sufficient variation to allow df/dp to be estimated in scenarios in which dp/dt is negative and large in magnitude. We put ourselves in the place of analysts in 2005, using data through 2004 to estimate the type of hazard models commonly used in the industry to predict mortgage defaults. We use two datasets. The first is a loan-level dataset from First American LoanPerformance that is used extensively in the industry to track the performance of mortgages packaged in MBSs; it has sparse information on loans originated before 1999. The second is a dataset from the Warren Group, which has tracked the fates of homebuyers in Massachusetts since the late 1980s. These data are not loan-level but rather ownership-level data; that is, the unit of observation is a homeowner’s tenure in a property, which may encompass more than one mortgage loan. The Warren Group data were not (so far as we can tell) widely used by the industry but were, at least in theory, available and, unlike the loan-level data, do contain information on the behavior of homeowners in an environment of falling prices.

We find that it was possible, although not necessarily easy, to measure df/dp with some degree of accuracy. Essentially, a researcher with perfect foresight about the trajectory of prices from 2005 forward would have forecast a large increase in foreclosures starting in 2007. Perhaps the most interesting result is that despite the absence of negative HPA in 1998–2004, when almost all subprime loans were originated, we could still determine, albeit not exactly, the likely behavior of subprime borrowers in an environment of falling home prices. In effect, the out-of-sample (and out-of-support) performance of default models was sufficiently good to have predicted large losses in such an environment.
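The decomposition df/dt = df/dp × dp/dt is the chain rule, and it can be checked numerically. The sketch below is illustrative only: `foreclosure_rate` and `price_path` are hypothetical stand-ins (a logistic curve in the price index and a steady decline of 0.10 per year), not estimates from the paper's data. It compares a direct finite-difference estimate of df/dt with the product of the two factors.

```python
import math


def foreclosure_rate(price: float) -> float:
    """Hypothetical foreclosure rate that rises as the price index falls (logistic in price)."""
    return 1.0 / (1.0 + math.exp(5.0 * (price - 0.9)))


def price_path(t: float) -> float:
    """Hypothetical price index: 1.0 at t = 0, falling by 0.10 per year."""
    return 1.0 - 0.10 * t


def derivative(func, x: float, h: float = 1e-6) -> float:
    """Central finite-difference approximation of func'(x)."""
    return (func(x + h) - func(x - h)) / (2.0 * h)


def decompose(t: float) -> tuple[float, float, float]:
    """Return (df/dt, df/dp, dp/dt) evaluated at time t."""
    df_dt = derivative(lambda s: foreclosure_rate(price_path(s)), t)
    df_dp = derivative(foreclosure_rate, price_path(t))
    dp_dt = derivative(price_path, t)
    return df_dt, df_dp, dp_dt


for t in (0.0, 1.0, 2.0):
    df_dt, df_dp, dp_dt = decompose(t)
    print(f"t={t:.0f}: direct df/dt={df_dt:.4f}, df/dp x dp/dt={df_dp * dp_dt:.4f}")
```

The two columns agree up to finite-difference error. Holding the price level fixed, doubling the pace of decline doubles dp/dt and therefore df/dt, which is why misjudging the sensitivity (df/dp) and misjudging the price path (dp/dt) can be examined separately.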

GERARDI, LEHNERT, SHERLUND, and WILLEN 71


Although it was thus possible to estimate df/dp, we also find that the relationship was less exact when using the data on loans rather than the data on ownerships. A given borrower might refinance his or her original loan several times before defaulting. Each of these successive loans except the final one would have been seen by lenders as successful. An ownership, in contrast, terminates only when the homeowner sells and moves, or is foreclosed upon and evicted. Thus, although the same foreclosure would appear as a default in both loan-level and ownership-level data, the intermediate refinancings between purchase and foreclosure (the “happy endings”) would count as successful terminations in loan-level data but would not appear at all in an ownership-level database.
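A toy example makes the loan-level versus ownership-level distinction concrete. The records and outcome labels below are hypothetical: one owner refinances twice and is then foreclosed upon, and a second owner sells. The two refinanced loans count as successes in the loan-level tally, which dilutes the measured default rate even though the same single foreclosure appears in both tallies.

```python
from dataclasses import dataclass


@dataclass
class Loan:
    owner_id: int  # identifies the homeowner's tenure (the ownership)
    outcome: str   # hypothetical labels: "refinanced", "sold", "foreclosed", "active"


def loan_level_default_rate(loans: list[Loan]) -> float:
    """Share of loans ending in foreclosure; every refinanced loan counts as a success."""
    return sum(loan.outcome == "foreclosed" for loan in loans) / len(loans)


def ownership_level_default_rate(loans: list[Loan]) -> float:
    """Share of ownerships ending in foreclosure, whatever happened to earlier loans."""
    foreclosed_by_owner: dict[int, bool] = {}
    for loan in loans:
        previously = foreclosed_by_owner.get(loan.owner_id, False)
        foreclosed_by_owner[loan.owner_id] = previously or loan.outcome == "foreclosed"
    return sum(foreclosed_by_owner.values()) / len(foreclosed_by_owner)


loans = [
    Loan(owner_id=1, outcome="refinanced"),
    Loan(owner_id=1, outcome="refinanced"),
    Loan(owner_id=1, outcome="foreclosed"),
    Loan(owner_id=2, outcome="sold"),
]
print(loan_level_default_rate(loans))       # 0.25
print(ownership_level_default_rate(loans))  # 0.5
```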

Our second time-travel exercise explores what analysts of the mortgage market said in 2004, 2005, and 2006 about the loans that eventually got into trouble. Our conclusion is that investment analysts had a good sense of df/dp and understood, with remarkable accuracy, how falling home prices (a negative dp/dt) would affect the performance of subprime mortgages and the securities backed by them. As an illustrative example, consider a 2005 analyst report published by a large investment bank:² analyzing a representative deal composed of 2005 vintage loans, the report argued that the deal would face 17 percent cumulative losses in a “meltdown” scenario in which house prices fell 5 percent over the life of the deal. That analysis was prescient: the ABX index, a widely used price index of asset-backed securities, currently implies that such a deal will actually face losses of 18.3 percent over its life. The problem was that the report assigned only a 5 percent probability to the meltdown scenario, whereas it assigned probabilities of 15 percent and 50 percent to scenarios in which home prices rose 11 percent and 5 percent, respectively, over the life of the deal.
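The report's logic can be sketched as a probability-weighted loss. Only the meltdown figures (a 5 percent probability and 17 percent cumulative losses) come from the report as described here; the losses it attached to the other scenarios are not given, so the sketch computes the meltdown scenario's contribution rather than the full expected loss. The function names are hypothetical.

```python
def scenario_contribution(probability: float, cumulative_loss_pct: float) -> float:
    """Contribution of one scenario to expected cumulative loss, in percentage points."""
    if not 0.0 <= probability <= 1.0:
        raise ValueError("probability must lie in [0, 1]")
    return probability * cumulative_loss_pct


def expected_loss(scenarios: dict[str, tuple[float, float]]) -> float:
    """Expected cumulative loss over a complete set of (probability, loss) scenarios."""
    total_probability = sum(p for p, _ in scenarios.values())
    if abs(total_probability - 1.0) > 1e-9:
        raise ValueError(f"probabilities sum to {total_probability}, not 1")
    return sum(scenario_contribution(p, loss) for p, loss in scenarios.values())


# Meltdown scenario as described in the report: 5 percent probability, 17 percent losses.
meltdown = scenario_contribution(0.05, 17.0)
# The same loss had the scenario been assigned a 50 percent probability (hypothetical).
meltdown_if_likely = scenario_contribution(0.50, 17.0)
print(f"{meltdown:.2f} vs {meltdown_if_likely:.2f} percentage points")
```

Under the report's own probability, an accurately forecast 17 percent loss adds less than 1 percentage point to expected losses, one way a deal could look safe despite a correct view of the downside.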

We argue that the fall in home prices outweighs other changes in driving up foreclosures in the recent period. However, we do not take a position on why prices rose so rapidly, why they fell so fast, or why they peaked in mid-2006. Other researchers have examined whether factors such as lending standards can affect home prices.³ Broadly speaking, we maintain the assumption that although, in the aggregate, lending standards may indeed have affected home price dynamics (we are agnostic on this point), no individual market participant felt that his or her actions could affect prices. Nor do we analyze whether housing was overvalued in 2005 and 2006, such that a fall in prices was to some extent predictable. There was a lively debate during that period, with some arguing that housing was reasonably valued and others that it was overvalued.⁴

2. This is the bank designated Bank B in our discussion of analyst reports below, in a report dated August 15, 2005.

3. Examples include Pavlov and Wachter (2006), Coleman, LaCour-Little, and Vandell (2008), Wheaton and Lee (2008), Wheaton and Nechayev (2008), and Sanders and others (2008).

Our results suggest that some borrowers were more sensitive to a single macro risk factor, namely, home prices. This comports well with the findings of David Musto and Nicholas Souleles, who argue that average default rates are only half the story: correlations across borrowers, perhaps driven by macroeconomic forces, are also an important factor in valuing portfolios of consumer loans.⁵

In this paper we focus almost exclusively on subprime mortgages. However, many of the same arguments might also apply to prime mortgages. Deborah Lucas and Robert McDonald compute the price volatility of the assets underlying securities issued by the housing-related government-sponsored enterprises (GSEs).⁶ Concentrating mainly on prime and near-prime mortgages and using information on the firms’ leverage and their stock prices, these authors find that risk was quite high (and, as a result, that the value of the implicit government guarantee on GSE debt was quite high).

Many have argued that a major driver of the subprime crisis was the increased use of securitization.⁷ In this view, the “originate to distribute” business model of many mortgage finance companies separated the underwriter making the credit extension decision from exposure to the ultimate credit quality of the borrower, and thus created an incentive to maximize lending volume without concern for default rates. At the same time, information asymmetries, unfamiliarity with the market, or other factors prevented investors, who were accepting the credit risk, from putting in place effective controls on these incentives. Although this argument is intuitively persuasive, our results are not consistent with such an explanation. One of our key findings is that most of the uncertainty about losses stemmed from uncertainty about the future direction of home prices, not from uncertainty about the quality of the underwriting. All that said, our models do not perfectly predict the defaults that occurred, and they often underestimate the number of defaults. One possible explanation is that there was an unobservable deterioration of underwriting standards in 2005 and 2006.⁸ But another is that our model of the highly nonlinear relationship between prices and foreclosures is wanting. No existing research has successfully distinguished between these two explanations.

4. Among the first group were Himmelberg, Mayer, and Sinai (2005) and McCarthy and Peach (2004); the pessimists included Gallin (2006, 2008) and Davis, Lehnert, and Martin (2008).

5. Musto and Souleles (2006).

6. Lucas and McDonald (2006).

7. See, for example, Keys and others (2008) and Calomiris (2008).

The endogeneity of prices does present a problem for our estimation. One common theory is that foreclosures drive price declines by increasing the supply of homes for sale, in effect introducing a new term into the decomposition of df/dt, namely, dp/df. However, our estimation techniques are to a large extent robust to this issue. As discussed by Gerardi, Adam Shapiro, and Willen,⁹ most of the variation in the key explanatory variable, homeowner’s equity, is within-town (or, more precisely, within-metropolitan-statistical-area), within-quarter variation and thus could not be driven by differences in foreclosures over time or across towns. In fact, as we will show, one can estimate the effect of home prices on foreclosures even in periods when there were very few foreclosures, and in periods in which foreclosed properties sold quickly.
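Within-MSA, within-quarter variation can be illustrated by demeaning equity inside each (MSA, quarter) cell. The sketch below uses made-up observations and a hypothetical `(msa, quarter, equity)` tuple layout. It reports what share of the total variation in equity survives once cell means, which could reflect town-level or time-level foreclosure conditions, are removed.

```python
from collections import defaultdict


def within_cell_deviations(rows):
    """Subtract each (msa, quarter) cell's mean equity from its observations.

    rows: list of (msa, quarter, equity) tuples. Returns deviations in input order.
    """
    totals = defaultdict(float)
    counts = defaultdict(int)
    for msa, quarter, equity in rows:
        totals[(msa, quarter)] += equity
        counts[(msa, quarter)] += 1
    return [equity - totals[(msa, quarter)] / counts[(msa, quarter)]
            for msa, quarter, equity in rows]


def within_share_of_variation(rows):
    """Within-cell sum of squares divided by the total sum of squares."""
    equities = [equity for _, _, equity in rows]
    grand_mean = sum(equities) / len(equities)
    total_ss = sum((e - grand_mean) ** 2 for e in equities)
    within_ss = sum(d ** 2 for d in within_cell_deviations(rows))
    return within_ss / total_ss


rows = [  # hypothetical equity shares for four ownerships in two cells
    ("MSA A", "2006Q1", 0.30),
    ("MSA A", "2006Q1", 0.10),
    ("MSA B", "2006Q1", -0.05),
    ("MSA B", "2006Q1", 0.05),
]
print(round(within_share_of_variation(rows), 3))
```

The larger this within-cell share, the less the identifying variation in equity can be attributed to differences in foreclosure activity across towns or over time.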

No discussion of the subprime crisis is complete without mention of the interest rate resets built into many subprime mortgages, which virtually guaranteed large increases in monthly payments. Many commentators have attributed the crisis to the payment shock associated with the first reset of subprime 2/28 adjustable-rate mortgages (ARMs; these are 30-year ARMs with 2-year teaser rates). However, the evidence from loan-level data shows that resets cannot account for a significant portion of the increase in foreclosures. Christopher Mayer, Karen Pence, and Sherlund, as well as Christopher Foote and coauthors, show that the overwhelming majority of defaults on subprime ARMs occur long before the first reset.¹⁰ In effect, many lenders would have been lucky had borrowers waited until the first reset to default.

The rest of the paper is organized as follows. We begin in the next section by documenting changes in underwriting standards on mortgages. The following section explores what researchers could have learned with the data they had in 2005. In the penultimate section we review contemporary analyst reports. The final section presents some conclusions.


8. This explanation is favored by Demyanyk and van Hemert (2007).

9. Gerardi, Shapiro, and Willen (2007).

10. Mayer, Pence, and Sherlund (forthcoming); Foote and others (2008a).


Underwriting Standards in the Subprime Market

We begin with a brief background on subprime mortgages, including a discussion of the competing definitions of “subprime.” We then discuss changes in the apparent credit risk of subprime mortgages originated from 1999 to 2007, and we link those changes to the actual performance of those loans. We argue that the growing number of subprime loans originated with high loan-to-value (LTV) ratios was the most important change in observable risk factors over the period. Further, we argue that the increases in leverage were to some extent masked from investors in MBSs. Loans originated with less than complete documentation of income or assets, and particularly loans originated with both high leverage and incomplete documentation, exhibited sharper subsequent rises in default rates than other loans. A more formal decomposition exercise, however, confirms that the rise in defaults can only partly be explained by observed changes in underwriting standards.

Some Background on Subprime Mortgages

One of the first notable features encountered by researchers working on subprime mortgages is the dense thicket of jargon surrounding the field, particularly the multiple competing definitions of “subprime.” This hampers attempts to estimate the importance of subprime lending. There are, effectively, four useful ways to categorize a loan as subprime. First, mortgage servicers themselves recognize that certain borrowers require more frequent contact in order to ensure timely payment, and they charge higher fees to service these loans; thus, one definition of a subprime loan is one that is classified as subprime by the servicer. Second, some lenders specialize in loans to financially troubled borrowers, and the Department of Housing and Urban Development maintains a list of such lenders; loans originated by these “HUD list” lenders are often taken as a proxy for subprime loans. Third, “high-cost” loans are defined as loans that carry fees and interest rates significantly above those charged to typical borrowers. Fourth, a subprime loan is sometimes defined as any loan packaged into an MBS that is marketed as containing subprime loans.

Table 1 reports two measures of the importance of subprime lending in the United States. The first is the percent of loans in the Mortgage Bankers Association (MBA) delinquency survey that are classified as “subprime.” Because the MBA surveys mortgage servicers, this measure is based on the first definition above. As the table shows, over the past few years, subprime mortgages by this definition have accounted for about 12 to 14 percent of outstanding mortgages. The last two columns show the percent of loans tracked by the Federal Financial Institutions Examination Council under the Home Mortgage Disclosure Act (HMDA) that are classified as “high cost,” which corresponds to the third definition. In 2005 and 2006 roughly 25 percent of loan originations were subprime by this measure.¹¹

These two measures point to an important discrepancy between the stock and the flow of subprime mortgages (source data and definitions also account for some of the difference). Subprime mortgages were a growing part of the mortgage market during this period, and therefore their share of the flow of new mortgages naturally exceeded their share of the stock of outstanding mortgages. In addition, subprime mortgages, for a variety of reasons, tend not to last as long as prime mortgages, and for this reason, too, they form a larger fraction of the flow of new mortgages than of the stock of outstanding mortgages. Furthermore, until the mid-2000s most subprime mortgages were used to refinance an existing loan and, simultaneously, to increase the principal balance (thus allowing the homeowner to borrow against accumulated equity), rather than to finance the purchase of a home.
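The duration effect can be sketched with a stylized steady state. Assuming constant origination volumes and constant average loan lives, each segment's outstanding stock equals its origination flow times its average life (Little's law). The loan lives below are hypothetical, and the sketch deliberately ignores the growth effect described above, which would widen the gap between flow and stock shares further.

```python
def steady_state_stock_share(flow_share: float, life_subprime: float, life_prime: float) -> float:
    """Subprime share of outstanding loans when stock = inflow x average life in each segment.

    flow_share: subprime share of new originations (between 0 and 1).
    life_subprime, life_prime: average loan lives, in the same time unit.
    """
    subprime_stock = flow_share * life_subprime
    prime_stock = (1.0 - flow_share) * life_prime
    return subprime_stock / (subprime_stock + prime_stock)


# Hypothetical: a 25 percent flow share, with subprime loans lasting half as long as prime loans.
share = steady_state_stock_share(0.25, life_subprime=3.0, life_prime=6.0)
print(f"{share:.1%}")
```

With these made-up lives, a 25 percent share of originations corresponds to a stock share of about 14 percent. The numbers are not calibrated to table 1; they only show how shorter loan lives alone can push the flow share well above the stock share.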


11. The high-cost measure was introduced in the HMDA data only in 2004; for operational and technical reasons, the reported share of high-cost loans in 2004 may be depressed relative to later years.

Table 1. Subprime Share of the Mortgage Market, 2004–08ᵃ

Percent; subprime loans as a share of

| Period | Mortgage loans outstandingᵇ | New originations: home purchasesᶜ | New originations: refinancingsᶜ |
|---|---|---|---|
| 2004 | 12.3 | 11.5 | 15.5 |
| 2005 | 13.4 | 24.6 | 25.7 |
| 2006 | 13.7 | 25.3 | 31.0 |
| 2007 | 12.7 | 14.0 | 21.7 |
| 2008Q2 | 12.2 | n.a. | n.a. |

Sources: Mortgage Bankers Association; Avery, Canner, and Cook (2005); Avery, Brevoort, and Canner (2006, 2007, 2008).

a. Only first liens are counted; shares are not weighted by loan value.

b. From MBA national delinquency surveys; data are as of the end of the period (end of fourth quarter except for 2008).

c. Share of loans used for the indicated purpose that were classified as “high cost” (roughly speaking, those carrying annual percentage rates at least 3 percentage points above the yield on the 30-year Treasury bond).


In this section we will focus on changes in the kinds of loans made over the period 1999–2007. We will use loan-level data on mortgages sold into private-label MBSs marketed as subprime. These data (known as the TrueStandings Securities ABS data) are provided by First American LoanPerformance and were widely used in the financial services industry before and during the subprime boom. We further limit the set of loans analyzed to the three most popular products: those carrying fixed interest rates to maturity and the so-called 2/28s and 3/27s. As alluded to above, a 2/28 is a 30-year mortgage in which the contract rate is fixed at an initial, teaser rate for two years; after that it adjusts to the six-month LIBOR (London interbank offered rate) plus a predetermined margin (often around 6 percentage points). A 3/27 is defined analogously. Together these three loan categories account for more than 98 percent of loans in the original data.
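The payment shock at a 2/28's first reset can be sketched with the standard level-payment amortization formula. This is a simplification under stated assumptions: the post-reset rate is six-month LIBOR plus the margin with no periodic or lifetime caps, that rate is then held fixed, and the remaining balance is re-amortized over the remaining 28 years. The loan amount, teaser rate, and LIBOR level are hypothetical; only the 6-point margin echoes the text.

```python
def monthly_payment(principal: float, annual_rate: float, months: int) -> float:
    """Level monthly payment that fully amortizes principal over `months`."""
    r = annual_rate / 12.0
    if r == 0.0:
        return principal / months
    return principal * r / (1.0 - (1.0 + r) ** -months)


def remaining_balance(principal: float, annual_rate: float, months: int, paid: int) -> float:
    """Outstanding balance after `paid` level payments on a `months`-month loan."""
    r = annual_rate / 12.0
    payment = monthly_payment(principal, annual_rate, months)
    if r == 0.0:
        return principal - payment * paid
    growth = (1.0 + r) ** paid
    return principal * growth - payment * (growth - 1.0) / r


def payments_around_first_reset(principal, teaser_rate, libor_6m, margin,
                                term_months=360, teaser_months=24):
    """Monthly payment before and after the first reset of a stylized 2/28 ARM."""
    before = monthly_payment(principal, teaser_rate, term_months)
    balance = remaining_balance(principal, teaser_rate, term_months, teaser_months)
    # Assumption: reset rate = six-month LIBOR + margin, with no caps or floors.
    after = monthly_payment(balance, libor_6m + margin, term_months - teaser_months)
    return before, after


before, after = payments_around_first_reset(200_000, teaser_rate=0.08, libor_6m=0.05, margin=0.06)
print(f"before: {before:,.2f}  after: {after:,.2f}  increase: {after / before - 1:.0%}")
```

As a sanity check on the design, if the reset rate happens to equal the teaser rate, re-amortizing the remaining balance reproduces the original payment, so any payment shock comes entirely from the rate change.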

In this section the outcome variable of interest is whether a mortgage defaults within 12 months of its first payment due date. There are several competing definitions of “default”; here we define a mortgage as having defaulted by month 12 if, as of its 12th month of life, it had terminated following a foreclosure notice, or if the loan was listed as real estate owned by the servicer (indicating a transfer of title from the borrower), or if the loan was still active but foreclosure proceedings had been initiated, or if payments on the loan were 90 or more days past due. Note that some of the loans we count as defaults might subsequently have reverted to “current” status, if the borrower made up missed payments. In effect, any borrower who manages to make 10 of the first 12 mortgage payments, or who refinances or sells without a formal notice of default having been filed, is treated as not having defaulted.
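The 12-month default definition can be written as a simple predicate. The record layout below (`LoanSnapshot` and its fields) is hypothetical and does not reproduce the LoanPerformance schema; it only encodes the four conditions listed above, under the assumption that the 90-days-past-due condition applies to loans that are still active.

```python
from dataclasses import dataclass


@dataclass
class LoanSnapshot:
    """Hypothetical view of one loan as of its 12th month of life."""
    terminated: bool           # loan no longer active
    foreclosure_notice: bool   # a formal foreclosure notice had been filed
    real_estate_owned: bool    # servicer lists the property as REO (title transferred)
    foreclosure_started: bool  # foreclosure proceedings initiated
    days_past_due: int


def defaulted_by_month_12(s: LoanSnapshot) -> bool:
    """True if any of the four default conditions holds at month 12."""
    terminated_after_notice = s.terminated and s.foreclosure_notice
    active_in_foreclosure = not s.terminated and s.foreclosure_started
    # Assumption: the delinquency condition is applied to active loans only.
    seriously_delinquent = not s.terminated and s.days_past_due >= 90
    return (terminated_after_notice or s.real_estate_owned
            or active_in_foreclosure or seriously_delinquent)


# A refinance with no notice filed and a loan 60 days behind both count as non-defaults.
refinanced = LoanSnapshot(True, False, False, False, 0)
sixty_days_late = LoanSnapshot(False, False, False, False, 60)
ninety_days_late = LoanSnapshot(False, False, False, False, 90)
print(defaulted_by_month_12(refinanced), defaulted_by_month_12(sixty_days_late),
      defaulted_by_month_12(ninety_days_late))
```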

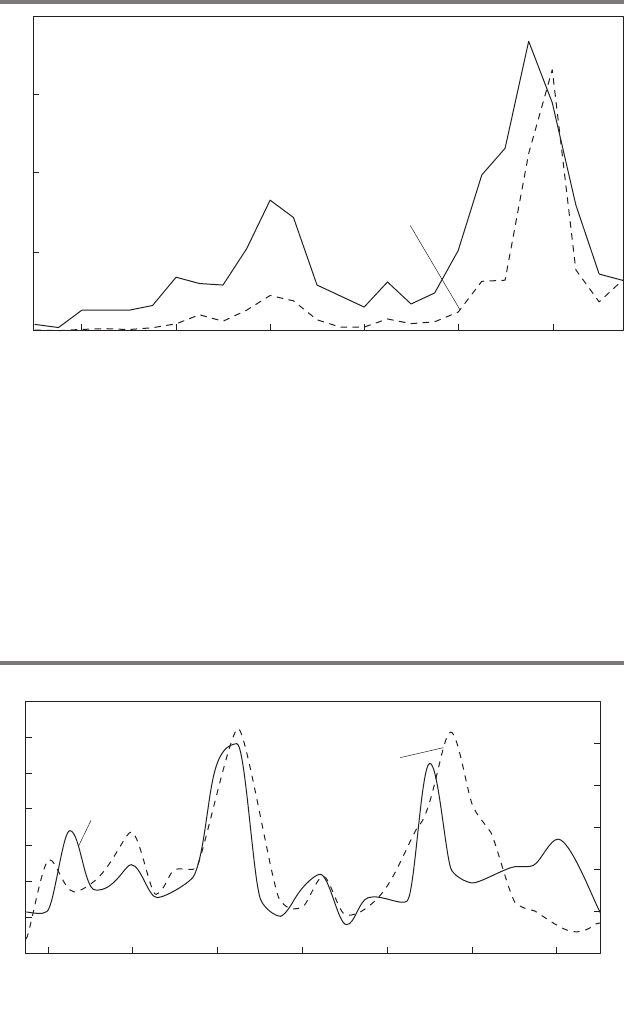

Figure 1 tracks the default rate in the ABS data under this definition from 1999 through 2006. Conceptually, default rates differ from delinquency rates in that they track the fate of mortgages originated in a given month by their 12th month of life; in effect, the default rate tracks the proportion of mortgages originated at a given point that are “dead” by month 12. Delinquency rates, by contrast, track the proportion of all active mortgages that are “sick” at a given point in calendar time. Further, because we close our dataset in December 2007, we can track the fate of only those mortgages originated through December 2006. The steep increase in mortgage distress that continued after 2007 is not reflected in these data, nor is the fate of mortgages originated in 2007, although we do track the underwriting characteristics of these mortgages.
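The contrast between a cohort default rate and a calendar-time delinquency rate can be sketched on a few hypothetical loans. Months are integers counted from an arbitrary origin, and the `Loan` fields are assumptions made for the illustration. The default rate follows one origination cohort to a fixed horizon, whereas the delinquency rate divides the loans that are sick in a given calendar month by all loans still active then, so terminated loans drop out of its denominator.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Loan:
    origination_month: int
    end_month: Optional[int] = None      # month the loan left the data (payoff or foreclosure)
    default_month: Optional[int] = None  # month the loan first met the default definition
    delinquent_months: frozenset = frozenset()  # calendar months with an overdue payment


def cohort_default_rate(loans, cohort_month, horizon=12):
    """Share of loans originated in cohort_month that default within `horizon` months."""
    cohort = [loan for loan in loans if loan.origination_month == cohort_month]
    dead = [loan for loan in cohort
            if loan.default_month is not None and loan.default_month - cohort_month <= horizon]
    return len(dead) / len(cohort)


def delinquency_rate(loans, month):
    """Share of loans active in `month` that are delinquent in that month."""
    active = [loan for loan in loans
              if loan.origination_month <= month
              and (loan.end_month is None or month < loan.end_month)]
    if not active:
        return 0.0
    return sum(month in loan.delinquent_months for loan in active) / len(active)


loans = [
    Loan(0, end_month=8, default_month=5, delinquent_months=frozenset({3, 4, 5, 6, 7})),
    Loan(0),               # performs throughout
    Loan(0, end_month=4),  # prepaid in month 4
]
print(cohort_default_rate(loans, 0))
print(delinquency_rate(loans, 4), delinquency_rate(loans, 10))
```

Here one loan in three is "dead" by month 12, while the delinquency rate is one half in month 4 (the prepaid loan has left the denominator) and zero in month 10 (the defaulted loan has left too).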

Note that this measure of default is designed to allow one to compare the ex ante credit risk of various underwriting terms. It is of limited usefulness as a predictor of defaults, because it considers only what happens by the 12th month of a mortgage, and it does not consider changes in home prices, interest rates, or the overall economic environment faced by households. Further, this measure does not consider the changing incentives to refinance. The competing-risks duration models we estimate in a later section are, for these reasons, far better suited to determining the credit and prepayment outlook for a group of mortgages.

Changes in Underwriting Standards

During the credit boom, lenders published daily “rate sheets” showing, for various combinations of loan risk characteristics, the interest rates they would charge to make such loans. A simple rate sheet, for example, might be a matrix of credit scores and LTV ratios; borrowers with lower credit scores or higher LTV ratios would be charged higher interest rates or be required to pay larger fees up front. Loans for certain cells of the matrix representing combinations of low credit scores and high LTV ratios might not be available at all.
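A rate sheet of this kind is essentially a two-way lookup table. The matrix below is hypothetical: the FICO and LTV band edges and every rate in it are invented for illustration and do not come from any lender's sheet. A `None` cell marks a combination the lender would not offer.

```python
from bisect import bisect_left, bisect_right
from typing import Optional

# Hypothetical band edges. FICO bands: < 620, 620-679, >= 680.
FICO_EDGES = [620, 680]
# LTV bands (percent): <= 80, above 80 up to 90, above 90.
LTV_EDGES = [80, 90]

# Hypothetical contract rates (percent); rows follow FICO bands, columns follow LTV bands.
RATE_SHEET: list[list[Optional[float]]] = [
    [9.50, 10.25, None],  # FICO < 620: no loans above 90 percent LTV
    [8.25, 8.75, 9.50],   # 620 <= FICO < 680
    [7.25, 7.50, 8.00],   # FICO >= 680
]


def quote(fico: int, ltv: float) -> Optional[float]:
    """Return the sheet's rate for a FICO/LTV pair, or None if the cell is unavailable."""
    row = bisect_right(FICO_EDGES, fico)  # a score of exactly 620 lands in the middle band
    col = bisect_left(LTV_EDGES, ltv)     # an LTV of exactly 80 stays in the lowest band
    return RATE_SHEET[row][col]


print(quote(700, 80), quote(650, 85), quote(600, 95))
```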

Unfortunately, we do not have access to information on changes in rate sheets over time, but underwriting standards can change in ways that are observable in the ABS data. Of course, underwriting standards can also change in ways observable to the loan originator but not reflected in the ABS data, or in ways largely unobservable even by the loan originator (for example, an increase in borrowers getting home equity lines of credit after origination). In this section we consider the evidence that more loans with ex ante observable risky characteristics were originated during the boom. Throughout we use loans from the ABS database described earlier.

Figure 1. Twelve-Month Default Rate on Subprime Mortgagesᵃ
[Chart: percent, 5 to 25, by origination date, 2000 to 2006]
Sources: First American LoanPerformance; authors’ calculations.
a. Share of all subprime mortgages originated in the indicated month that default within 12 months of origination.

We consider trends over time in borrower credit scores, loan documentation, leverage, and other factors associated with risk, such as the purpose of the loan, non-owner-occupancy, and amortization schedules. We find that from 1999 to 2007, borrower leverage and the shares of loans with incomplete documentation, loans used to purchase homes (as opposed to refinancing an existing loan), and loans with nontraditional amortization schedules all grew. Borrower credit scores increased, while the share of loans to non-owner-occupants remained essentially flat. Of these variables, the increase in borrower leverage appears to have contributed the most to the increase in defaults, and we find some evidence that leverage was, in the ABS data at least, opaque.

CREDIT SCORES. Credit scores, which essentially summarize a borrower’s history of missed debt payments, are the most obvious indicator of prime or subprime status. The most commonly used scalar credit score is the FICO score originally developed by Fair, Isaac & Co. It is the only score contained in the ABS data, although subprime lenders often used scores and other information from all three credit reporting bureaus.

Under widely accepted industry rules of thumb, borrowers with FICO scores of 680 or above are not usually considered subprime without some other accompanying risk factor, borrowers with credit scores between 620 and 680 may be considered subprime, and those with credit scores below 620 are rarely eligible for prime loans. Subprime pricing models typically used more information than just a borrower’s credit score; they also considered the nature of the missed payment that led a borrower to have a low credit score. For example, a pricing system might weight missed mortgage payments more than missed credit card payments.
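The rule-of-thumb cutoffs translate directly into the three bands used in figure 2. The sketch below assigns scores to bands and tabulates band shares by origination year; the `(year, fico)` input layout and the sample scores are hypothetical. As the text notes, real subprime pricing used much more than this single score, so the bands describe the distribution rather than classify loans.

```python
from collections import Counter, defaultdict

BANDS = ("FICO >= 680", "620 <= FICO < 680", "FICO < 620")


def fico_band(score: int) -> str:
    """Map a FICO score to one of the three rule-of-thumb bands."""
    if score >= 680:
        return BANDS[0]
    if score >= 620:
        return BANDS[1]
    return BANDS[2]


def band_shares_by_year(loans):
    """loans: iterable of (origination_year, fico) pairs. Returns {year: {band: share}}."""
    counts_by_year = defaultdict(Counter)
    for year, score in loans:
        counts_by_year[year][fico_band(score)] += 1
    shares = {}
    for year, counts in sorted(counts_by_year.items()):
        total = sum(counts.values())
        shares[year] = {band: counts[band] / total for band in BANDS}
    return shares


sample = [(2000, 600), (2000, 650), (2006, 700), (2006, 690), (2006, 610), (2006, 640)]
for year, shares in band_shares_by_year(sample).items():
    print(year, shares)
```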

Figure 2 shows the proportions of newly originated subprime loans falling into each of these three categories. The proportion of such loans to borrowers with FICO scores of 680 and above grew over the sample period, while loans to traditionally subprime borrowers (those with scores below 620) accounted for a shrinking share of originations.

LOAN DOCUMENTATION. Borrowers (or their mortgage brokers) submit a file with each mortgage application documenting the borrower’s income, liquid assets, and other debts, and the value of the property being used as collateral. Media attention has focused on the rise of so-called low-doc or no-doc loans, for which documentation of income or assets was incomplete. (These include the infamous “stated-income” loans.) The top left panel of figure 3 shows that the proportion of newly originated subprime loans carrying less than full documentation rose from around 20 percent in 1999 to a high of more than 35 percent by mid-2006. Thus, although reduced-documentation lending was a part of subprime lending, it was by no means the majority of the business, nor did it increase dramatically during the credit boom.

As we discuss in greater detail below, until about 2004, subprime loans were generally backed by substantial equity in the property. This was especially true for subprime loans with less than complete documentation. Thus, in some sense the lender accepted less complete documentation in exchange for a greater security interest in the underlying property.

Figure 2. Distribution of Subprime Mortgages by FICO Score at Origination
[Chart: percent of originations, 20 to 80, by origination date, 2000 to 2006; series: FICO ≥ 680, 620 ≤ FICO < 680, FICO < 620]
Sources: First American LoanPerformance; authors’ calculations.

LEVERAGE. The leverage of a property is, in principle, the total balance of all liens on the property divided by its value. This is often referred to as the property’s combined loan-to-value, or CLTV, ratio. Both the numerator and the denominator of the CLTV ratio will fluctuate over a borrower’s tenure in the property: the borrower may amortize the original loan, refinance, or take on junior liens, and the potential sale price of the home will change over time. However, the current values of all of these variables ought to be known at the time of a loan’s origination. The lender undertakes a title search to check for the presence of other liens and hires an appraiser to confirm either the price paid (when the loan is used to purchase a home) or the potential sale price of the property (when the loan is used to refinance an existing loan).
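The CLTV ratio is a simple sum over liens. The sketch below also encodes the high-CLTV flag from the note to figure 3 (a CLTV ratio of at least 90 percent, or any junior lien). The function names and dollar amounts are hypothetical; the usage lines show how a second lien taken out after origination raises combined leverage even though the first-lien balance is unchanged.

```python
def cltv(lien_balances: list[float], property_value: float) -> float:
    """Combined loan-to-value ratio, in percent: all lien balances over property value."""
    if property_value <= 0:
        raise ValueError("property value must be positive")
    return 100.0 * sum(lien_balances) / property_value


def is_high_cltv(lien_balances: list[float], property_value: float) -> bool:
    """High-CLTV flag following the figure 3 note: CLTV >= 90 or a junior lien present."""
    return cltv(lien_balances, property_value) >= 90.0 or len(lien_balances) > 1


value = 200_000
at_origination = [160_000]                  # a single first lien: 80 percent CLTV
with_later_second_lien = [160_000, 20_000]  # hypothetical second lien added afterward
print(cltv(at_origination, value), is_high_cltv(at_origination, value))
print(cltv(with_later_second_lien, value), is_high_cltv(with_later_second_lien, value))
```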

In practice, high leverage during the boom was also accompanied by

additional complications and opacity. Rather than originate a single loan

for the desired amount, originators often preferred to originate two loans:

one for 80 percent of the property’s value, and the other for the remaining

desired loan balance. In the event of a default, the holder of the first lien

would be paid first from the sale proceeds, with the junior lien holder

GERARDI, LEHNERT, SHERLUND, and WILLEN 81

Figure 3. Shares of Subprime Mortgages with Various Risk Factors
[Four panels, each plotting the percent of subprime originations against origination date, 2000–2006. Top left, Low documentation. Top right, Leverage: high CLTV ratioᵃ and with second lien. Bottom left, Other risk factors: nontraditional amortization, non-owner-occupied, and for home purchase. Bottom right, Risk layering: high CLTV ratio + low FICO score, high CLTV ratio + low or no documentation, and high CLTV ratio + home purchase.]
Sources: First American LoanPerformance; authors’ calculations.
a. CLTV ratio ≥ 90 percent or including a junior lien.

getting the remaining proceeds, if any. Lenders may have split loans in this

way for the same reason that asset-backed securities are tranched into an

AAA-rated piece and a below-investment-grade piece. Some investors

might specialize in credit risk evaluation and hence prefer the riskier piece,

while others might prefer to forgo credit analysis and purchase the less

risky loan.
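The priority rule for a split (for example, 80/20) loan can be sketched as a simple waterfall. This is a simplified illustration under stated assumptions: the function name, balances, and sale proceeds are hypothetical, and foreclosure and selling costs are ignored.

```python
def split_recoveries(sale_proceeds, first_balance, junior_balance):
    """Pay the first lien in full before the junior lien receives anything.

    Returns (first_lien_recovery, junior_lien_recovery). Foreclosure costs are
    ignored in this simplified sketch.
    """
    first = min(sale_proceeds, first_balance)
    junior = min(max(sale_proceeds - first, 0), junior_balance)
    return first, junior


# Hypothetical 80/20 split on a $250,000 home: $200,000 first, $50,000 junior.
for proceeds in (260_000, 225_000, 180_000):
    print(proceeds, split_recoveries(proceeds, 200_000, 50_000))
```

With proceeds of $180,000, the first-lien holder absorbs a $20,000 loss and the junior holder recovers nothing. This all-or-nothing exposure is why the junior piece resembles the below-investment-grade tranche.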

The reporting of these junior liens in the ABS data appears spotty. This

could be the case if, for example, the junior lien was originated by a different lender than the first lien, because the first-lien lender might not properly report the second lien, and the second-lien lender might not report the

loan at all. If the junior lien was an open-ended loan, such as a home equity

line of credit, it appears not to have been reported in the ABS data at all,

perhaps because the amount drawn was unknown at origination.

Further, there is no comprehensive national system for tracking liens on

any given property. Thus, homeowners could take out a second lien shortly

after purchasing or refinancing, raising their CLTV ratio. Although such

borrowing should not affect the original lender’s recovery, it does increase

the probability of a default and thus lowers the value of the original loan.

The top right panel of figure 3 shows the growth in the share of loans

originated with high CLTV ratios (defined as those with CLTV ratios of

90 percent or more or including a junior lien); the panel also shows the

proportion of loans originated for which a junior lien was recorded.¹² Both measures of leverage rose sharply over the past decade. High-CLTV-ratio

lending accounted for roughly 10 percent of originations in 2000, rising to

over 50 percent by 2006. The incidence of junior liens also rose.

The presence of a junior lien has a powerful effect on the CLTV ratio of

the first lien. As table 2 shows, loans without a second lien reported an

average CLTV ratio of 79.9 percent, whereas those with a second lien

reported an average CLTV ratio of 98.8 percent. Moreover, loans with

reported CLTV ratios of 90 percent or above were much likelier to have

associated junior liens, suggesting that lenders were leery of originating

single mortgages with LTV ratios greater than 90 percent. We will discuss

later the evidence that there was even more leverage than reported in the

ABS data.
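The distributions in table 2 make the point about junior liens concrete. The short calculation below is our own arithmetic on the published figures, not part of the authors' analysis. For each column, it sums the rows at or above a 90 percent CLTV ratio. The entries are rounded, so each column totals 99 rather than 100.

```python
# Percent distribution of originations by CLTV ratio, copied from table 2.
TABLE_2 = {
    "without_second_lien": {"<80": 35, "=80": 18, "80-90": 18, "=90": 15, "90-100": 8, ">=100": 5},
    "with_second_lien": {"<80": 1, "=80": 0, "80-90": 1, "=90": 1, "90-100": 16, ">=100": 80},
}
HIGH_CLTV_BINS = ("=90", "90-100", ">=100")  # reported CLTV ratio of 90 percent or more


def share_at_or_above_90(column):
    """Percent of a table 2 column with a reported CLTV ratio of at least 90."""
    return sum(column[b] for b in HIGH_CLTV_BINS)


for name, column in TABLE_2.items():
    print(name, share_at_or_above_90(column), "of", sum(column.values()))
```

Among loans without a recorded second lien, about 28 percent report a CLTV ratio of 90 percent or more. Among loans with one, about 97 percent do.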

OTHER RISK FACTORS. A variety of other loan and borrower character-

istics could have contributed to increased risk. The bottom left panel of


12. The figures shown here and elsewhere are based on first liens only; where there is an

associated junior lien, that information is used in computing the CLTV ratio and for other

purposes, but the junior loan itself is not counted.


figure 3 shows the proportions of subprime loans originated with a nontra-

ditional amortization schedule, to non-owner-occupiers, and to borrowers

who used the loan to purchase a property (as opposed to refinancing an

existing loan).

A standard or “traditional” U.S. mortgage self-amortizes; that is, a

portion of each month’s payment is used to reduce the principal. As the

bottom left panel of figure 3 shows, nontraditional amortization schedules

became increasingly popular among subprime loans. These were mainly

loans that did not require sufficient principal payments (at least in the early

years of the loan) to amortize the loan completely over its 30-year term.

For example, some loans had interest-only periods, and others were amortized

over 40 years, with a balloon payment due at the end of the 30-year term.

The effect of these terms was to slightly lower the monthly payment, espe-

cially in the early years of the loan.
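To see why these terms lowered the monthly payment only modestly, the sketch below applies the standard level-payment annuity formula to a hypothetical $200,000 loan at 8 percent a year. Both figures are illustrative assumptions, and taxes, insurance, and rate resets are ignored. The sketch compares three payment schedules:

* a traditional 30-year schedule;
* a 40-year amortization with a balloon due at month 360;
* an interest-only payment.

```python
def level_payment(principal, annual_rate, n_months):
    """Monthly payment that fully amortizes `principal` over `n_months`."""
    r = annual_rate / 12.0
    return principal * r / (1.0 - (1.0 + r) ** -n_months)


def balance_after(principal, annual_rate, payment, n_paid):
    """Outstanding balance after `n_paid` level monthly payments."""
    r = annual_rate / 12.0
    growth = (1.0 + r) ** n_paid
    return principal * growth - payment * (growth - 1.0) / r


P, RATE = 200_000.0, 0.08  # hypothetical loan amount and contract rate

pay_30 = level_payment(P, RATE, 360)           # traditional 30-year loan
pay_40 = level_payment(P, RATE, 480)           # amortized over 40 years ...
balloon = balance_after(P, RATE, pay_40, 360)  # ... with a balloon due at month 360
pay_io = P * RATE / 12.0                       # interest-only payment

print(f"30-year: {pay_30:,.2f}  40/30 balloon: {pay_40:,.2f}  interest-only: {pay_io:,.2f}")
print(f"balloon owed at month 360 on the 40/30 loan: {balloon:,.2f}")
```

With these inputs, the 40-year schedule trims the payment by roughly 5 percent relative to the 30-year loan. However, it leaves a balloon of more than half the original principal outstanding at month 360.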

Subprime loans had traditionally been used to refinance an existing

loan. As the bottom left panel of figure 3 also shows, the share of subprime loans used to purchase homes increased over the period, although not dramatically. Loans to non-owner-occupiers, which include loans backed by a

property held for investment purposes, are, all else equal, riskier than loans

to owner-occupiers because the borrower can default without facing evic-

tion from his or her primary residence. As the figure shows, such loans

never accounted for a large fraction of subprime originations, nor did they

grow over the period.

RISK LAYERING. As we discuss below, leverage is a key risk factor for subprime mortgages. An interesting question is the extent to which high leverage was combined with other risk factors in a single loan; this practice was sometimes known as “risk layering.” As the bottom right panel of figure 3 shows, risk layering grew over the sample period. Loans with incomplete documentation and high leverage had an especially notable rise, from essentially zero in 2001 to almost 20 percent of subprime originations by the end of 2006. Highly leveraged loans to borrowers purchasing homes also increased over the period.

Table 2. Distribution of New Originations by Combined Loan-to-Value Ratio, 2004–08
Percent

CLTV ratio                          Without second lien    With second lien
Less than 80 percent                        35                     1
Exactly 80 percent                          18                     0
Between 80 and 90 percent                   18                     1
Exactly 90 percent                          15                     1
Between 90 and 100 percent                   8                    16
100 percent or greater                       5                    80
Memorandum: average CLTV ratio           79.92                 98.84

Sources: First American LoanPerformance; authors’ calculations.

Effect on Default Rates

We now consider the performance of loans with the various risk factors

just outlined. We start with simple univariate descriptions before turning

to a more formal decomposition exercise. We continue here to focus on

12-month default rates as the outcome of interest. In the next section we

present results from dynamic models that consider the ability of borrowers

to refinance as well as default.

DOCUMENTATION LEVEL. The top left panel of figure 4 shows default

rates over time for loans with complete and those with incomplete docu-

mentation. The two loan types performed roughly in line with one another

until the current cycle, when default rates on loans with incomplete docu-

mentation rose far more rapidly than default rates on loans with complete

documentation.

LEVERAGE. The top right panel of figure 4 shows default rates on loans

with and without high CLTV ratios (defined, again, as those with a CLTV

ratio of at least 90 percent or with a junior lien present at origination).

Again, loans with high leverage performed approximately in line with

other loans until the most recent episode.

As we highlighted above, leverage is often opaque. To dig deeper into

the correlation between leverage at origination and subsequent perfor-

mance, we estimated a pair of simple regressions relating the CLTV ratio

at origination to the subsequent probability of default and to the initial con-

tract interest rate charged to the borrower. For all loans in the sample, we

estimated a probit model of default and an ordinary least squares (OLS)

model of the initial contract rate. Explanatory variables were various mea-

sures of leverage, including indicator (dummy) variables for various

ranges of the reported CLTV ratio (one of which is for a CLTV ratio of

exactly 80 percent) as well as for the presence of a second lien. We esti-

mated two versions of each model: version 1 contains only the CLTV ratio

measures, the second-lien indicator, and (in the default regressions) the

initial contract rate; version 2 adds state and origination date fixed effects.

These regressions are designed purely to highlight the correlation among

variables of interest and not as fully fledged risk models. Version 1 can

be thought of as the simple multivariate correlation across the entire sample, whereas version 2 compares loans originated in the same state at the same time. The results are shown in table 3; using the results from version 2, figure 5 plots the expected default probability against the CLTV ratio for loans originated in California in June 2005.

Figure 4. Twelve-Month Default Rates of Mortgages with Selected Characteristics
[Five panels, each plotting the 12-month default rate (percent) against origination date, 2000–2006: Documentation status (full vs. incomplete); Leverage (CLTV ratio ≥ 90% or second lien vs. CLTV ratio < 90% and no second lien); Owner-occupancy (owner-occupied vs. non-owner-occupied); Purpose of loan (refinancing vs. all other); Amortization schedule (traditional vs. nontraditional).]
Sources: First American LoanPerformance; authors’ calculations.

As the figure shows, default probabilities generally increase with leverage. Note, however, that loans with reported CLTV ratios of exactly 80 percent, which account for 15.7 percent of subprime loans, have a substantially higher default probability than loans with slightly higher or lower CLTV ratios. Indeed, under version 2 such loans are among the riskiest originated. As the bottom panel of figure 5 shows, however, there is no compensating increase in the initial contract rate charged to the borrower, although the lender may have charged points and fees up front (not measured in this dataset) to compensate for the increased risk. This evidence suggests that borrowers with apparently reasonable CLTV ratios were in fact using junior liens to increase their leverage in a way that was neither easily visible to investors nor, apparently, compensated by higher mortgage interest rates.

Table 3. Regressions Estimating the Effect of Leverage on Default Probability and Mortgage Interest Rates

Columns: (1) and (2), marginal effect on probability of default within 12 months of originationᵃ, versions 1 and 2; (3) and (4), marginal effect on initial contract interest rateᵇ, versions 1 and 2; (5), variable meanᶜ.

Independent variable                              (1)        (2)        (3)       (4)       (5)
Constant                                                                7.9825    10.4713
CLTV ratio (percent)                              0.00219    0.00223    0.0093    0.0083    82.6929
CLTV²/100                                        −0.00103   −0.00103   −0.0063   −0.0082    70.3912
Initial contract interest rate (percent a year)   0.01940    0.02355                          8.2037
Indicator variables
  CLTV ratio = 80 percent                         0.00961    0.01036   −0.0127   −0.0817    15.72
  CLTV ratio between 80 and 90 percent            0.00014   −0.00302    0.0430    0.1106    15.56
  CLTV ratio = 90 percent                         0.00724   −0.00041    0.1037    0.2266    12.86
  CLTV ratio between 90 and 100 percent           0.00368   −0.00734    0.0202    0.3258     9.68
  CLTV ratio 100 percent or greater               0.00901   −0.00740    0.0158    0.3777    16.20
  Second lien recorded                            0.05262    0.04500   −0.8522   −0.6491    14.52
Regression includes origination date effects      No         Yes        No        Yes
Regression includes state effects                 No         Yes        No        Yes
No. of observationsᵈ                              679,518    679,518    707,823   707,823
Memorandum: mean default rate (percent)           6.55

Source: Authors’ regressions.
a. Results are from a probit regression in which the dependent variable is an indicator equal to 1 when the mortgage has defaulted by its 12th month.
b. Results are from an ordinary least squares regression in which the dependent variable is the original contract interest rate on the mortgage.
c. Values for indicator variables are percent of the total sample for which the variable equals 1.
d. Sample is a 10 percent random sample of the ABS data.
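The leverage regressors in table 3 can be written out explicitly. The sketch below is a hypothetical helper, not the authors' code. It builds the regressors from a reported CLTV ratio and a second-lien flag. We infer the omitted category (a CLTV ratio below 80 percent) from the table's rows; the paper does not state it.

```python
BANDS = ("cltv_eq_80", "cltv_80_90", "cltv_eq_90", "cltv_90_100", "cltv_ge_100")


def leverage_regressors(cltv, second_lien):
    """Table 3-style leverage terms for one loan (hypothetical variable names).

    `cltv` is the reported CLTV ratio in percent; the omitted band is CLTV < 80.
    Exact comparisons are fine here because reported ratios cluster on round values.
    """
    return {
        "cltv": cltv,
        "cltv_sq_over_100": cltv ** 2 / 100.0,  # quadratic term, scaled as in table 3
        "cltv_eq_80": float(cltv == 80),
        "cltv_80_90": float(80 < cltv < 90),
        "cltv_eq_90": float(cltv == 90),
        "cltv_90_100": float(90 < cltv < 100),
        "cltv_ge_100": float(cltv >= 100),
        "second_lien": float(second_lien),
    }


# A loan reported at exactly 80 percent with a piggyback second lien.
print(leverage_regressors(80.0, True))
```

At most one band dummy equals 1 for any loan, and none does below 80 percent. The dummies therefore pick up jumps at the round-number thresholds on top of the smooth quadratic in the CLTV ratio.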

Figure 5. Effect of CLTV Ratio on Default Probability and Initial Interest Rateᵃ
[Two panels, each plotting model versions 1 and 2 against the CLTV ratio at origination (75 to 105 percent). Top, default probability (roughly 0.04 to 0.07); bottom, initial contract interest rate (roughly 7.0 to 8.0 percent a year).]
Sources: First American LoanPerformance; authors’ calculations.
a. Estimation results of model versions 1 and 2 are reported in table 3.

OTHER RISK FACTORS. The bottom three panels of figure 4 show the default

rates associated with the three other risk factors described earlier: non-

owner-occupancy, loan purpose, and nontraditional amortization sched-

ules. Loans to non-owner-occupiers were not (in this sample) markedly

riskier than loans to owner-occupiers. The 12-month default rates on loans

originated from 1999 to 2004 varied little between those originated for

home purchase and those originated for refinancing, and between those

carrying traditional and nontraditional amortization schedules. However,

among loans originated in 2005 and 2006, purchase loans and loans with

nontraditional amortization schedules defaulted at much higher rates than

did refinancings and traditionally amortizing loans, respectively.

RISK LAYERING. Figure 6 shows the default rates on loans carrying the

multiple risk factors discussed earlier. As the top panel shows, loans with

high CLTV ratios and low FICO scores have nearly always defaulted at

higher rates than other loans. High-CLTV-ratio loans that were used to

purchase homes also had a worse track record (middle panel). In both

cases, default rates for high-CLTV-ratio loans climbed sharply over the

last two years of the sample. Loans with high CLTV ratios and incomplete

documentation (bottom panel), however, showed the sharpest increase in

defaults relative to other loans. This suggests that within the group of high-

leverage loans, those with incomplete documentation were particularly

prone to default.

Decomposing the Increase in Defaults

As figure 1 showed, subprime loans originated in 2005 and 2006

defaulted at a much higher rate than those originated earlier in the sample.

The previous discussion suggests that observable underwriting factors alone cannot explain this increase. For example, high-CLTV-ratio loans originated

in 2002 defaulted at about the same rate as other loans originated that same

year. However, high-CLTV-ratio loans originated in 2006 defaulted at

much higher rates than other loans.

Decomposing the increase in defaults into a piece due to the mix of

types of loans originated and a piece due to changes in home prices

requires data on how all loan types behave under a wide range of price sce-

narios. If the loans originated in 2006 were truly novel, there would be no

unique decomposition between home prices and underwriting standards.

We showed above that at least some of the riskiest loan types were already being originated (albeit in low numbers) by 2004, so the early data contain some information on how such loans perform.

To test this idea more formally, we divide the sample into two groups: an “early” group of loans originated in 1999–2004, and a “late” group originated in 2005 and 2006. We estimate default models separately on each group, and we track changes in risk factors over the entire period. We then measure the changes in risk factors between the two groups and the changes in the coefficients of the risk model. We find that increases in high-leverage lending and risk layering can account for some, but by no means all, of the increase in defaults.

Figure 6. Twelve-Month Default Rates on Mortgages with Risk Layering
[Three panels, each plotting the percent of mortgages defaulting against origination date, 2000–2006. Top, FICO scores: high CLTV ratio and low FICO score vs. all other mortgages. Middle, Loan purpose: high CLTV ratio and for purchase vs. all other mortgages. Bottom, Documentation status: high CLTV ratio and low or no documentation vs. all other mortgages.]
Sources: First American LoanPerformance; authors’ calculations.

Table 4 reports the means of the relevant variables for the two groups

and for the entire sample. The table shows that a much larger fraction of

loans originated in the late group defaulted: 9.28 percent as opposed to

4.60 percent in the early group. The differences between the two groups on

other risk factors are in line with the earlier discussion: FICO scores,

CLTV ratios, the incidence of 2/28s, low-documentation loans, and loans

with nontraditional amortization all rose from the early group to the late

group, while the share of loans for refinancing fell (implying that the share

for home purchase rose).
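Two quick consistency checks on table 4 follow; this is our own arithmetic on the published figures. The first check uses the fact that a 0/1 indicator with mean p has standard deviation √(p(1 − p)). The second check uses the fact that the all-mortgage mean should be the observation-weighted average of the two group means. Because the published means are rounded to two decimals, the recomputed figures agree with the table only to within about 0.01.

```python
import math

N_EARLY, N_LATE = 2_043_354, 1_489_171    # observations, table 4
DEFAULT_EARLY, DEFAULT_LATE = 4.60, 9.28  # percent defaulted within 12 months, table 4


def indicator_sd(mean_pct):
    """Standard deviation (in percent) of a 0/1 variable whose mean is mean_pct percent."""
    p = mean_pct / 100.0
    return 100.0 * math.sqrt(p * (1.0 - p))


pooled = (N_EARLY * DEFAULT_EARLY + N_LATE * DEFAULT_LATE) / (N_EARLY + N_LATE)
print("pooled mean and SD:", round(pooled, 2), round(indicator_sd(pooled), 2))  # compare 6.57, 24.78
print("early and late SDs:", round(indicator_sd(DEFAULT_EARLY), 2),
      round(indicator_sd(DEFAULT_LATE), 2))                                    # compare 20.95, 29.01
```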

Table 5 reports the results of a loan-level probit model of the probability of default, estimated separately on data from the early group and the late group.

The table shows marginal effects and standard errors for a number of loan

and borrower characteristics; the model also includes a set of state fixed

effects (results not reported). The differences in estimated marginal effects

between the early and the late group are striking. Defaults are more sensi-

tive in the late group to a variety of risk factors, such as leverage, credit

score, loan purpose, and type of amortization schedule. The slopes in

table 5 correspond roughly to the returns in a Blinder-Oaxaca decompo-

sition, whereas the sample means in table 4 correspond to the differences

in endowments between the two groups. However, because the underlying

model is nonlinear, we cannot perform the familiar Blinder-Oaxaca

decomposition.
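A two-loan toy example shows why the nonlinearity matters. In a linear model, the average prediction equals the prediction at the average covariates. This property is what lets the Blinder-Oaxaca decomposition split a gap into endowment and coefficient pieces. With a probit link, the two quantities differ. The coefficients and loans below are invented purely for illustration.

```python
import math


def norm_cdf(z):
    """Standard normal CDF, the probit link."""
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))


# Hypothetical probit index: intercept -2.0, slope 0.05 per CLTV point above 80.
BETA0, BETA1 = -2.0, 0.05
cltv_excess = [0.0, 20.0]  # two hypothetical loans: CLTV 80 and CLTV 100

mean_of_predictions = sum(norm_cdf(BETA0 + BETA1 * x) for x in cltv_excess) / len(cltv_excess)
prediction_at_mean = norm_cdf(BETA0 + BETA1 * sum(cltv_excess) / len(cltv_excess))

print(f"mean of predicted probabilities: {mean_of_predictions:.4f}")
print(f"prediction at mean covariates:   {prediction_at_mean:.4f}")
```

The normal CDF is convex over this range. As a result, the average predicted probability (about 0.09) exceeds the probability at the average loan (about 0.07), and shifts in means and shifts in coefficients do not separate additively.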

As a first step toward our decomposition, table 6 reports the predicted default rate in the late group using the model estimated on data from the early group, as well as other combinations. Using early-group coefficients on the early group of loans, the model predicts a 4.60 percent default rate. Using the same coefficients on the late-group data, the model predicts a 4.55 percent default rate. Thus, the early-group model does not predict a significant rise in defaults based on the observable characteristics for the late group. These results are consistent with the view that a factor other than underwriting changes was primarily responsible for the increase in mortgage defaults. However, because these results mix changes in the distribution of risk factors between the two groups with changes in the riskiness of certain characteristics, it will be useful to consider the increase in riskiness of a typical loan after varying a few characteristics in turn. Again, because of the nonlinearity of the underlying model, we have to consider just one set of observable characteristics at a time.

Table 4. Summary Statistics for Variables from the ABS Data
Percent of total except where stated otherwise

                                          All mortgages        Early groupᵃ         Late groupᵇ
Variable                                  Mean     Std. dev.   Mean     Std. dev.   Mean     Std. dev.
Outcome 12 months after origination
  Defaulted                               6.57     24.78       4.60     20.95       9.28     29.01
  Refinanced                              16.22    36.86       15.96    36.63       16.57    37.18
Mortgage characteristics
  Contract interest rate (percent a year) 8.21     1.59        8.38     1.76        7.97     1.27
  Margin over LIBOR (percentage points)   4.45     2.94        4.28     3.11        4.69     2.67
  FICO score                              610      60          607      61          615      58
  CLTV ratio (percent)                    83       14          81       14          85       15
Mortgage type
  Fixed rate                              28.14    44.97       32.30    46.76       22.43    41.71
  2/28ᶜ                                   58.54    49.27       53.40    49.88       65.58    47.51
  3/27                                    13.33    33.99       14.30    35.01       11.99    32.48
Documentation status
  Complete                                68.28    46.54       70.62    45.55       65.07    47.68
  No documentation                        0.31     5.58        0.38     6.12        0.23     4.75
  Low documentation                       30.71    46.13       27.82    44.81       34.68    47.60
Other
  Nontraditional amortizationᵈ            16.04    36.69       6.93     25.40       28.53    45.15
  Non-owner-occupied                      6.57     24.78       6.51     24.68       6.66     24.93
  Refinancing                             67.00    47.02       70.95    45.40       61.58    48.64
  Second lien present                     14.59    35.30       7.50     26.34       24.32    42.90
  Prepayment penalty                      73.55    44.11       74.00    43.87       72.93    44.43
No. of observations                       3,532,525            2,043,354            1,489,171

Sources: First American LoanPerformance; authors’ calculations.
a. Mortgages originated from 1999 to 2004.
b. Mortgages originated in 2005 and 2006.
c. A 30-year mortgage with a low initial (“teaser”) rate in the first two years; a 3/27 is defined analogously.
d. Any mortgage that does not completely amortize or that does not amortize at a constant rate.

Table 5. Probit Regressions Estimating the Effect of Loan and Other Characteristics on Default Probabilityᵃ

                                           Early group (1999–2004       Late group (2005–06
                                           originations)                originations)
Variable                                   Marg. effect  Std. error     Marg. effect  Std. error
Contract interest rate (percent a year)     0.0097       0.0001          0.0328       0.0002
Margin over LIBOR (percentage points)       0.0013       0.0001          0.0016       0.0003
Loan is a 2/28                              0.0036       0.0009          0.0158       0.0016
Loan is a 3/27                              0.0030       0.0010          0.0105       0.0020
CLTV ratio                                  0.0007       0.0001          0.0037       0.0002
CLTV²/100                                  −0.0002       0.0001         −0.0018       0.0002
CLTV ratio = 80 percent                     0.0035       0.0005          0.0225       0.0012
80 percent < CLTV ratio < 90 percent       −0.0017       0.0006          0.0119       0.0014
90 percent ≤ CLTV ratio < 100 percent      −0.0014       0.0008          0.0154       0.0022
CLTV ratio ≥ 100 percent                   −0.0000       0.0015          0.0229       0.0029
Second lien present                         0.0165       0.0008          0.0391       0.0009
FICO score                                 −0.0003       0.0000         −0.0003       0.0000
FICO < 620                                 −0.0015       0.0008          0.0202       0.0015
FICO = 620                                 −0.0012       0.0016          0.0194       0.0031
620 < FICO < 680                           −0.0040       0.0006          0.0110       0.0010
High CLTV ratio and low FICO               −0.0004       0.0006          0.0013       0.0010
High CLTV ratio and purchase                0.0053       0.0006         −0.0143       0.0010
High CLTV ratio and low documentation       0.0059       0.0007          0.0129       0.0010
Loan is a refinancing                      −0.0064       0.0004         −0.0223       0.0009
Non-owner-occupied                          0.0113       0.0006          0.0158       0.0010
Low documentation                           0.0127       0.0004          0.0160       0.0007
No documentation                            0.0107       0.0027          0.0293       0.0059
Prepayment penalty                          0.0012       0.0003          0.0087       0.0006
Payment-to-income ratio 1ᵇ                  0.0003       0.0000          0.0008       0.0000
Payment-to-income ratio 2                   0.0008       0.0008          0.0008       0.0001
Ratio 1 missing                             0.0131       0.0007          0.0330       0.0014
Ratio 2 missing                             0.0240       0.0006          0.0273       0.0017
Loan is from a retail lender                0.0036       0.0005         −0.0204       0.0012
Loan is from a wholesale lender             0.0050       0.0004          0.0044       0.0009
Loan is from a mortgage broker              0.0011       0.0011         −0.0055       0.0019
Nontraditional amortization                 0.0043       0.0005          0.0218       0.0006
No. of observations                         2,043,354                    1,489,171
Pseudo-R²                                   0.0929                       0.0971

Source: Authors’ regressions.
a. The dependent variable is the probability of default after 12 months. All regressions include a complete set of state fixed effects.
b. Ratios 1 and 2 are back- and front-end debt-to-income ratios, respectively.
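The cross-prediction behind table 6 pairs one group's coefficients with another group's loans and averages the predicted probabilities Φ(x′β). The sketch below uses invented coefficients and synthetic loans. Its single covariate (the CLTV ratio in excess of 80) and all numbers are hypothetical stand-ins for the much richer table 5 specification.

```python
import math


def norm_cdf(z):
    """Standard normal CDF, the probit link."""
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))


def mean_default_prob(coefs, loans):
    """Average probit probability, with coefs = (intercept, slope on CLTV excess)."""
    b0, b1 = coefs
    return sum(norm_cdf(b0 + b1 * x) for x in loans) / len(loans)


# Hypothetical estimates and loan samples (CLTV ratio minus 80, in points).
coefs = {"early": (-1.75, 0.010), "late": (-1.45, 0.030)}
loans = {"early": [0.0, 2.0, 5.0, 10.0], "late": [0.0, 5.0, 10.0, 19.0]}

for data_name, sample in loans.items():
    row = {m: round(100 * mean_default_prob(c, sample), 2) for m, c in coefs.items()}
    print(f"{data_name} data: {row}")
```

Reading across a row holds the loans fixed and swaps the coefficients. Reading down a column holds the coefficients fixed and swaps the loans, which is the comparison the text draws between 4.60 and 4.55 percent.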

To this end, we consider a typical 2/28 loan originated in California

with observable characteristics set to their early-period sample means. We

change each risk characteristic in turn to its late-period sample mean or to

a value suggested by the experience in the late period. Table 7 shows that

even for loans with the worst combination of underwriting characteristics,

the predicted default rate is less than half the actual default rate experi-

enced by this group of loans. The greatest increases in default probability

are associated with higher-leverage scenarios. (Note that decreasing the

CLTV ratio to exactly 80 percent increases the default probability, for rea-

sons discussed earlier.)
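The one-at-a-time exercise in table 7 can be sketched as follows. Start from a base-case loan, switch on the listed characteristics only, and evaluate the probit probability. The intercept and coefficients below are hypothetical and are not taken from table 5.

```python
import math


def norm_cdf(z):
    """Standard normal CDF, the probit link."""
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))


# Hypothetical probit coefficients on a few binary risk factors (not the paper's).
INTERCEPT = -2.1
COEFS = {"high_cltv": 0.35, "low_doc": 0.20, "purchase": 0.15, "low_fico": 0.10}
BASE = {name: 0 for name in COEFS}  # base case: none of the risk factors present


def default_prob(loan):
    """Probit default probability for a dict of 0/1 risk factors."""
    return norm_cdf(INTERCEPT + sum(COEFS[k] * v for k, v in loan.items()))


scenarios = {
    "base case": {},
    "high CLTV": {"high_cltv": 1},
    "low documentation": {"low_doc": 1},
    "high CLTV + low documentation": {"high_cltv": 1, "low_doc": 1},
}
for label, changes in scenarios.items():
    loan = {**BASE, **changes}  # change only the listed characteristics
    print(f"{label:32s} {100 * default_prob(loan):5.2f} percent")
```

This toy model has no interaction term. Even so, the probit's convexity in the left tail makes the layered scenario rise by more than the sum of the two single-factor increases.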

What Can We Learn from the 2005 Data?

In this section we focus on whether market participants could reasonably have estimated the sensitivity of foreclosures to home price decreases. We estimate standard competing-risks duration models using data on the performance of loans originated through the end of 2004, presumably the information set available to lenders as they were making decisions about loans originated in 2005 and 2006. We produce out-of-sample forecasts of foreclosures assuming the home price outcomes that the economy actually experienced. Later we address the question of what home price expectations investors had, but here we assume that market participants had perfect foresight about future HPA.

Table 6. Predicted Default Rates
Percent

                              Default probability using model estimated on data from
Loans used for prediction     Early period (1999–2004)     Late period (2005–06)
Early period                  4.60                          9.30
Late period                   4.55                          9.27
Origination year
  1999                        6.66                         15.37
  2000                        8.67                         20.00
  2001                        6.52                         14.34
  2002                        4.83                          9.86
  2003                        3.49                          6.42
  2004                        3.44                          6.05
  2005                        3.96                          7.50
  2006                        5.31                         11.55

Source: Authors’ calculations.

In conducting our forecasts, we use two primary data sources. The first is the ABS data discussed above. These data are national in scope and have been widely used by mortgage analysts to model both prepayment and default behavior in the subprime mortgage market, so it is not unreasonable to use these data as an approximation of market participants’ information set. The second source of data is publicly available, individual-level data on both housing and mortgage transactions in the state of Massachusetts, from county-level registry of deeds offices. Although these data are not national in scope and lack the level of detail on mortgage and borrower characteristics that the ABS data have, their historical coverage is far superior. The deed registry data extend back to the early 1990s, a period in which the Northeast experienced a significant housing downturn. In contrast, the ABS data have very sparse coverage before 2000, as the non-agency, subprime MBS market did not become relevant until the turn of the century. Hence, for the vast majority of the period covered by the ABS data, the economy was in the midst of a significant housing boom. In the next section we discuss the potential implications of this data limitation for predicting mortgage defaults and foreclosures.

Table 7. Effects of Selected Mortgage Characteristics on Default Probability for a Generic 2/28 Mortgage
Percent

Loan characteristics                                     Estimated 12-month default probabilityᵃ
Base caseᵇ                                               1.96
Base case except:
  CLTV ratio = 80 percent                                2.28
  High CLTV ratio (= 99.23 percent, with second lien)    3.76
  Low FICO score (FICO = 573)                            2.47
  Low documentation                                      2.88
  Nontraditional amortization                            1.96
  Home purchase                                          2.41
  High CLTV ratio and low documentation                  6.17
  High CLTV ratio and low FICO score                     3.76
  High CLTV ratio and home purchase                      5.22

Source: Authors’ calculations.
a. Calculated using the model estimated from early-period (1999–2004) data.
b. The base case is a 2/28 mortgage originated in California for the purpose of refinancing and carrying an initial annual interest rate of 8.22 percent (and a margin over LIBOR of 6.22 percent), with a CLTV ratio of 81.3 percent, a FICO score of 600, complete documentation, no second lien, and traditional amortization. Mortgages with these characteristics experienced an actual default probability of 11.36 percent. Each of the remaining cases differs from the base case only with respect to the characteristic(s) indicated. Values chosen for these characteristics are late-period (2005–06) sample means or otherwise suggested by the experience in that period.

The Relationship between Housing Equity and Foreclosure

For a homeowner with positive equity who needs to terminate his or her

mortgage, a strategy of either refinancing the mortgage or selling the home

dominates defaulting and allowing foreclosure to occur. However, for an

“underwater” homeowner (that is, one with negative equity, where the

mortgage balance exceeds the home’s market value), default and foreclosure are sometimes the optimal economic decision.¹³ Thus, the theoretical

relationship between equity and foreclosure is not linear. Rather, the sensi-

tivity of default to equity should be approximately zero for positive values

of equity, but negative for negative values. These observations imply that

the relationship between housing prices and foreclosure is highly sensitive

to the housing cycle. In a home price boom, even borrowers in extreme

financial distress have more appealing options than foreclosure, because

home price gains are expected to result in positive equity. However, when

home prices are falling, highly leveraged borrowers will often find them-

selves in a position of negative equity, which implies fewer options for

those experiencing financial distress.
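The kinked relationship described above can be illustrated with a stylized default rate. In this sketch, the rate is flat for positive equity and rises as equity turns negative. The functional form, the base rate, and the slope are hypothetical choices made only to show the mechanism. They are not estimates from the paper.

```python
def stylized_default_rate(equity_pct, base_rate=0.5, slope=0.2):
    """Hypothetical annual default rate (percent) as a function of home equity.

    equity_pct is equity as a percent of the home's current value (negative when the
    borrower is underwater). The rate is flat at base_rate for non-negative equity and
    rises by `slope` points per point of negative equity: a kink, not a straight line.
    """
    return base_rate + slope * max(-equity_pct, 0.0)


def equity_after_price_change(cltv_at_origination, price_change_pct):
    """Equity share (percent) after a home price change, holding the loan balance fixed."""
    current_cltv = cltv_at_origination / (1.0 + price_change_pct / 100.0)
    return 100.0 - current_cltv


for cltv in (80.0, 100.0):
    for change in (10.0, -10.0):
        equity = equity_after_price_change(cltv, change)
        print(f"CLTV {cltv:.0f}, prices {change:+.0f}%: equity {equity:6.1f}%, "
              f"default rate {stylized_default_rate(equity):.2f}%")
```

In this toy setup, a 10 percent price decline leaves the borrower with an 80 percent CLTV ratio still holding positive equity, so that borrower's default rate is unchanged. The same decline pushes the borrower with a 100 percent CLTV ratio about 11 percent underwater, where the rate jumps from 0.50 to roughly 2.7 percent.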

As a result, estimating the empirical relationship between home prices

and foreclosures requires, in principle, data that span a home price bust as

well as a boom. In addition, analysts using loan-level data must account for

the fact that even as foreclosures rise in a home price bust, prepayments

will also fall.

Given that the ABS data do not contain a home price bust through the

end of 2004, and that, as loan-level data, they could not track the experi-

ence of an individual borrower across many loans, we expect (and find)

that models estimated using the ABS data through 2004 have a harder time

predicting foreclosures in 2007 and 2008.

Forecasts Using the ABS Data

As described earlier, the ABS data are loan-level data that track mort-

gages held in securitized pools marketed as either alt-A or subprime. We

restrict our attention to first-lien, 30-year subprime mortgages originated

from 2000 to 2007.

A key difference between the model we estimate in this section and the

decomposition exercise above is in the definitions of “default” and “pre-


13. See Foote and others (2008a) for a more detailed discussion.


payment.” The data track the performance of these mortgages over time.

Delinquency status (current, 30 days late, 60 days late, 90 days or more late,

or in foreclosure) is recorded monthly for active loans. The data also differ-

entiate between different types of mortgage termination: by foreclosure or

by prepayment without a notice of foreclosure. Here we define a default as

a mortgage that terminates after a notice of foreclosure has been served,

and a prepayment as a mortgage that terminates without such a notice (pre-

sumably through refinancing or sale of the home). Thus, loans can cycle

through various delinquency stages and can even have a notice of default

served, but whether they are classed as happy endings (prepayments) or

unhappy endings (defaults) will depend on their status at termination.
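The labeling rule can be sketched as a small function. This is a minimal sketch under stated assumptions. The status codes ('current', '30', '60', '90+', 'foreclosure') are a hypothetical coding of the delinquency states listed above. We also read "status at termination" as the loan's status in its final observed month.

```python
FORECLOSURE = "foreclosure"  # hypothetical code for "notice of foreclosure served"


def classify_outcome(monthly_status, terminated):
    """Label one loan from its monthly status history (final entry = last month observed).

    Only the status at termination matters; earlier delinquency spells do not.
    """
    if not terminated:
        return "censored"  # still active: neither exit observed yet
    if monthly_status[-1] == FORECLOSURE:
        return "default"   # terminated after a notice of foreclosure
    return "prepayment"    # terminated without one (refinancing or sale)


histories = {
    "cured, then refinanced": (["current", "30", "60", "current"], True),
    "seriously delinquent, then sold": (["30", "60", "90+"], True),
    "foreclosed": (["60", "90+", "foreclosure"], True),
    "still active": (["current", "30"], False),
}
for name, (statuses, done) in histories.items():
    print(f"{name:32s} -> {classify_outcome(statuses, done)}")
```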

To model default and prepayment behavior, we augment the ABS data

with metropolitan-area-level home price data from S&P/Case-Shiller,

where available, and state-level house price data from the Office of Federal

Housing Enterprise Oversight (OFHEO) otherwise. These data are used

to construct mark-to-market CLTV ratios and measures of home price

volatility. Further, we augment the data with state-level unemployment

rates, monthly oil prices, and various interest rates to capture other pres-

sures on household balance sheets. Finally, we include zip code-level data

on average household income, share of minority households, share of

households with a high school education or less, and the child share of the

population, all from the Census Bureau.
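The text does not spell out how the mark-to-market CLTV ratio and the volatility measure are built. A common approach, assumed here rather than taken from the paper, rolls the origination property value forward with the local price index and divides the outstanding lien balance by the result; volatility is taken as the standard deviation of period-over-period HPA over the most recent two years. The function names and the quarterly default frequency are hypothetical.

```python
import statistics
from typing import Sequence


def mark_to_market_cltv(lien_balance: float, orig_value: float,
                        hpi_orig: float, hpi_now: float) -> float:
    """Current CLTV ratio in percent.

    Assumption: the origination property value is rolled forward with the
    local home price index (metro Case-Shiller or state OFHEO), and
    lien_balance is the outstanding balance on all liens.
    """
    current_value = orig_value * hpi_now / hpi_orig
    return 100.0 * lien_balance / current_value


def hpa_volatility(hpi: Sequence[float], periods_per_year: int = 4) -> float:
    """Standard deviation (percent) of period-over-period HPA over the last two years.

    Assumption: quarterly index values by default; needs at least 3 observations.
    """
    window = list(hpi)[-(2 * periods_per_year + 1):]
    hpa = [100.0 * (later / earlier - 1.0) for earlier, later in zip(window, window[1:])]
    return statistics.stdev(hpa)


# With the balance unchanged, a 20 percent price decline turns an 80 percent
# CLTV loan into a 100 percent CLTV loan.
print(mark_to_market_cltv(160_000, 200_000, hpi_orig=100.0, hpi_now=80.0))  # prints 100.0
```

This is the channel the text emphasizes: home prices enter the hazard mainly through the updated CLTV ratio.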

EMPIRICAL MODEL. We now use the ABS data to estimate what an ana-

lyst with perfect foresight about home prices, interest rates, oil prices, and

other variables would have predicted for prepayment and foreclosures in

2005–07, given information on mortgage performance available at the end

of 2004. We estimate a competing-risks model over 2000–04 and simulate

mortgage defaults and prepayments over 2005–07. The baseline hazard

functions for prepayment and default are assumed to follow the Public

Securities Association (PSA) guidelines, which are fairly standard in the

mortgage industry.¹⁴
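To show what PSA-style baseline curves look like, the functions below implement the standard PSA prepayment benchmark and the PSA's Standard Default Assumption (SDA) curve, each scaled by a speed multiple, and convert annual rates to monthly probabilities. These are the textbook benchmark shapes. The specific forms used in the paper are those in Sherlund (2008), which may differ, so this is an illustration under that assumption; the function names are hypothetical.

```python
def psa_cpr(month: int, speed: float = 100.0) -> float:
    """Annual prepayment rate (CPR, as a fraction) under the PSA benchmark.

    100% PSA: 0.2% in month 1, rising 0.2 percentage point a month to 6% in
    month 30, then flat. Other speeds scale the curve proportionally.
    """
    return (speed / 100.0) * 0.06 * min(month, 30) / 30.0


def sda_cdr(month: int, speed: float = 100.0) -> float:
    """Annual default rate (CDR, as a fraction) under the SDA benchmark.

    100% SDA: 0.02% in month 1, rising 0.02 percentage point a month to 0.6%
    in month 30, flat through month 60, falling 0.0095 point a month to 0.03%
    in month 120, then flat.
    """
    if month <= 30:
        cdr = 0.0002 * month
    elif month <= 60:
        cdr = 0.006
    elif month <= 120:
        cdr = 0.006 - 0.000095 * (month - 60)
    else:
        cdr = 0.0003
    return (speed / 100.0) * cdr


def to_monthly(annual_rate: float) -> float:
    """Convert an annual conditional rate (CPR or CDR) to a monthly one (SMM or MDR)."""
    return 1.0 - (1.0 - annual_rate) ** (1.0 / 12.0)


# Baseline monthly prepayment and default probabilities for the first 36 months.
baseline = [(to_monthly(psa_cpr(m)), to_monthly(sda_cdr(m))) for m in range(1, 37)]
```

In a proportional hazards setup, loan and borrower characteristics then shift these age-dependent baselines up or down.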

Factors that can affect prepayment and default include mortgage and

borrower characteristics at loan origination, such as CLTV and payment-

to-income ratios, the contractual mortgage interest rate, the borrower’s

credit score, the completeness of loan documentation, and occupancy

status. We also include whether the loan has any prepayment penalties,

interest-only features, or piggybacking; whether it is a refinancing or a purchase; and the type of property. Further, we include indicator variables to identify loans with risk layering of high leverage and poor documentation, loans to borrowers with credit scores below 600, and an interaction term between occupancy status and cumulative HPA over the life of the mortgage.

14. For the specific forms of the PSA guidelines, see Sherlund (2008).
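The risk-layering and interaction terms map directly onto the Table 8 definitions of loqual, lofico, and invhpa. The following is a minimal sketch, assuming that "no documentation" means the complete-documentation flag (doc) is 0 and that cumulative HPA is already measured in percent. The `Loan` record and its field names are hypothetical.

```python
from dataclasses import dataclass
from typing import Dict


@dataclass
class Loan:  # hypothetical record; field names are illustrative
    cltv_orig: float   # CLTV ratio at origination, percent
    full_doc: bool     # True when documentation is complete (Table 8 "doc")
    fico_orig: int     # FICO score at origination
    non_owner: bool    # True when the home is non-owner-occupied
    cum_hpa: float     # cumulative HPA since origination, percent


def risk_layer_terms(loan: Loan) -> Dict[str, float]:
    """Risk-layering dummies and the occupancy-by-HPA interaction from Table 8."""
    return {
        # Assumption: "no documentation" is read as doc == 0.
        "loqual": float(loan.cltv_orig > 95 and not loan.full_doc),
        "lofico": float(loan.fico_orig < 600),
        # Interaction: cumulative HPA for non-owner-occupied homes, 0 otherwise.
        "invhpa": loan.cum_hpa if loan.non_owner else 0.0,
    }


print(risk_layer_terms(Loan(cltv_orig=100.0, full_doc=False, fico_orig=585,
                            non_owner=True, cum_hpa=12.5)))
```

Because invhpa depends on cumulative HPA, it changes month by month even though occupancy status is fixed at origination.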

Similarly, we include dynamically updated mortgage and borrower

characteristics that vary from month to month after loan origination. The

most important of these is an estimate of the mark-to-market CLTV ratio;

changes in home prices will primarily affect default and prepayment rates

through this variable. In addition, we include the current contract interest

rate, home price volatility, state-level unemployment rates, oil prices, and,

for ARMs, the fully indexed mortgage interest rate (six-month LIBOR

plus the loan margin).
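For ARMs, the fully indexed rate is six-month LIBOR plus the loan margin, and once the rate resets the monthly payment is recomputed on the remaining balance and term. The sketch below uses the standard level-payment amortization formula. It ignores the periodic and lifetime rate caps that can limit actual resets, and the function names and inputs are hypothetical.

```python
def fully_indexed_rate(libor_6m: float, margin: float) -> float:
    """Fully indexed ARM rate, percent a year: six-month LIBOR plus the loan margin."""
    return libor_6m + margin


def level_payment(balance: float, annual_rate_pct: float, months_left: int) -> float:
    """Monthly payment that fully amortizes `balance` over `months_left` months."""
    r = annual_rate_pct / 1200.0  # percent a year -> monthly decimal rate
    if r == 0.0:
        return balance / months_left
    return balance * r / (1.0 - (1.0 + r) ** -months_left)


# Hypothetical 2/28: payment recomputed at the first reset on the remaining 336 months.
# Rate caps are ignored here; real resets may be limited by periodic and lifetime caps.
new_rate = fully_indexed_rate(libor_6m=5.25, margin=6.0)
print(round(level_payment(150_000, new_rate, 336), 2))
```

Comparing this recomputed payment with the original one is what a payment-shock indicator measures.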

Because of the focus on payment changes, we include three indicator

variables to capture the effects of interest rate resets. The first is set to unity

in the three months around (one month before, the month of, and the month

after) the first reset. The second captures whether the loan has passed its

first reset date. The third flags increases of more than 5 percent in the monthly mortgage payment over the original monthly payment, to capture

any large payment shocks. Variable names and definitions for our models

using the ABS data are reported in table 8, and summary statistics in table 9.
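The three reset indicators can be computed month by month from the loan age, the first reset month, and the payment history. The following is a minimal sketch. The function name, the convention that loan age starts at 1, and the choice to turn lngwind on only after (not in) the first reset month are assumptions.

```python
from typing import Dict, List, Sequence


def reset_indicators(first_reset_month: int,
                     payments: Sequence[float]) -> List[Dict[str, int]]:
    """Monthly rstwind, lngwind, and pmt dummies for one ARM.

    payments[0] is the original monthly payment; loan age starts at 1.
    Assumption: lngwind turns on only in months after the first reset month.
    """
    original = payments[0]
    rows = []
    for age, payment in enumerate(payments, start=1):
        rows.append({
            "age": age,
            # One month before, the month of, and the month after the first reset.
            "rstwind": int(abs(age - first_reset_month) <= 1),
            # Loan has passed its first reset date.
            "lngwind": int(age > first_reset_month),
            # Payment shock: more than 5 percent above the original payment.
            "pmt": int(payment > 1.05 * original),
        })
    return rows


# Hypothetical 2/28 whose payment jumps from $1,000 to $1,200 at month 25.
schedule = reset_indicators(25, [1000.0] * 24 + [1200.0] * 3)
```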

ESTIMATION STRATEGY AND RESULTS. We estimate a competing-risks,

proportional hazard model for six subsamples of our data. First, the data

are broken down by subprime product type: hybrid 2/28s, hybrid 3/27s,

and fixed-rate mortgages. Second, for each product type, estimation is

carried out separately for purchase mortgages and refinancings.
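In generic notation (ours, not necessarily the paper's), a competing-risks proportional hazards specification for subsample s, where s ranges over the six product-by-purpose cells, writes the hazard of outcome k (default D or prepayment P) at loan age t as an age-dependent baseline shifted multiplicatively by the covariates. Treating each hazard as a monthly probability, cumulative default then follows from the discrete-time survival recursion, with S_i(0) = 1. The PSA-style form of the baseline is an assumption here.

```latex
\lambda_{k}^{s}(t \mid x_{it}) = \lambda_{0k}(t)\,\exp\!\left(x_{it}'\beta_{k}^{s}\right),
\qquad k \in \{D, P\},\quad
s \in \{2/28,\ 3/27,\ \text{FRM}\} \times \{\text{purchase},\ \text{refinance}\}

S_i(t) = \prod_{u=1}^{t}\Bigl[1 - \lambda_{D}^{s}(u \mid x_{iu}) - \lambda_{P}^{s}(u \mid x_{iu})\Bigr],
\qquad
\Pr\nolimits_i(\text{default by } T) = \sum_{t=1}^{T} S_i(t-1)\,\lambda_{D}^{s}(t \mid x_{it})
```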

Table 10 reports the estimation results for the default hazard functions.¹⁵ These results are similar to those previously reported by Sherlund.¹⁶ As one

would expect, home prices (acting through the mark-to-market CLTV ratio

term) are extremely important. In addition, non-owner-occupiers are, all

else equal, likelier to default. The payment shock and reset window vari-

ables have relatively small effects, possibly because so many subprime

borrowers defaulted in 2006 and 2007 ahead of their resets. Aggregate

variables such as oil prices and unemployment rates do push up defaults,

but by relatively small amounts, once we control for loan-level observables.

SIMULATION RESULTS. With the estimated parameters in hand, we turn to

the question of how well the model performs over the 2005–07 period.
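One way to turn estimated hazards into forecasts is to push each loan's monthly default and prepayment probabilities through the competing-risks survival recursion and sum the expected outcomes. The sketch below does this analytically for a single loan or cohort rather than by random draws. The paper does not describe its simulation procedure at this level of detail, so the function names and the rescaling safeguard are assumptions.

```python
import math
from typing import Sequence, Tuple


def hazard(baseline: float, xb: float) -> float:
    """Proportional-hazards monthly probability: baseline times exp(x'beta)."""
    return baseline * math.exp(xb)


def cumulative_outcomes(h_default: Sequence[float],
                        h_prepay: Sequence[float]) -> Tuple[float, float, float]:
    """Expected shares that default, prepay, or remain active by the end of the horizon."""
    surviving, defaulted, prepaid = 1.0, 0.0, 0.0
    for hd, hp in zip(h_default, h_prepay):
        total = hd + hp
        if total > 1.0:
            # Safeguard (assumption): rescale so the two probabilities sum to one.
            hd, hp = hd / total, hp / total
        defaulted += surviving * hd
        prepaid += surviving * hp
        surviving *= 1.0 - hd - hp
    return defaulted, prepaid, surviving


if __name__ == "__main__":
    # Hypothetical inputs: flat baselines and a covariate index x'beta = 0.5 for default.
    d, p, s = cumulative_outcomes([hazard(0.004, 0.5)] * 36, [hazard(0.03, 0.0)] * 36)
    print(f"default {d:.3f}, prepay {p:.3f}, active {s:.3f}")
```

Because the two outcomes compete, anything that lowers prepayment (such as falling prices) leaves more loans exposed to default, which is the interaction the section emphasizes.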


15. For brevity we do not report the parameter estimates for the prepayment hazard

functions. They are available upon request from the authors.

16. Sherlund (2008).


Table 8. Variable Names and Definitions in the ABS Data

Variable name  Definition
cash           Indicator variable = 1 when mortgage is a refinancing with cash-out
cltvnow        Current mark-to-market CLTV ratio (percent)
cltvorig       CLTV ratio at origination (percent)
doc            Indicator variable = 1 when documentation is complete
educ           Share of population in zip code with high school education or less
ficoorig       FICO score at origination
frmnow         Current market interest rate on 30-year fixed-rate mortgages (percent a year)
frmorig        Market interest rate on 30-year fixed-rate mortgages at origination (percent a year)
hhincome       Average household income in zip code (dollars)
hpvol          Current home price volatility (2-year standard deviation of HPA, in percent)
hpvorig        Home price volatility at origination (2-year standard deviation of HPA, in percent)
indnow         Current fully indexed market interest rate on ARMs (6-month LIBOR plus margin, percent a year)
indorig        Fully indexed market interest rate on ARMs at origination (percent a year)
invhpa         Cumulative HPA if non-owner-occupied (percent)
kids           Share of population in zip code who are children
lngwind        Indicator variable = 1 when mortgage rate has previously reset
lofico         Indicator variable = 1 when FICO < 600
loqual         Indicator variable = 1 when CLTV ratio > 95 and no documentation
mratenow       Current mortgage interest rate (percent a year)
mrateorig      Contract interest rate at origination (percent a year)
nonowner       Indicator variable = 1 when home is non-owner-occupied
oil            Change in oil price since origination (percent)
origamt        Loan amount at origination (dollars)
piggyback      Indicator variable = 1 when a second lien is recorded at origination
pmi            Indicator variable = 1 when there is private mortgage insurance
pmt            Indicator variable = 1 when current monthly payment is more than 5 percent higher than original payment
ppnow          Indicator variable = 1 when prepayment penalty is still in effect
pporig         Indicator variable = 1 when prepayment penalty was in effect at origination
proptype       Indicator variable = 1 when the home is a single-family home
pti            Payment-to-income ratio at origination (percent)
race           Minority share of population in zip code
refi           Indicator variable = 1 when the loan is a refinancing (with or without cash-out)
rstwind        Indicator variable = 1 when the mortgage is in the reset period
unempnow       Change in state-level unemployment rate since origination (percentage points)
unorig         State-level unemployment rate at origination (percent)


Table 9. Sample Averages of Variables in the ABS Dataᵃ

Variable name | 2000–04: At origination | 2000–04: Active mortgages | 2000–04: Mortgages in default | 2000–04: Mortgages prepaid | 2004: At origination | 2005: At origination

cash 0.57 0.57 0.52 0.58 0.58 0.54

cltvnow 81.91 73.59 66.10 0.00 83.76 84.90

cltvorig 81.91 83.15 81.61 79.81 83.76 84.90

doc 0.70 0.69 0.74 0.70 0.66 0.64

educ 0.36 0.37 0.38 0.35 0.37 0.37

ficoorig 610 616 582 605 616 619

frmnow 6.28 5.75 5.75 5.75 5.88 5.85

frmorig 6.28 6.03 6.89 6.62 5.88 5.85

hhincome 43,110 42,421 39,116 44,945 43,007 42,379

hpvol 3.38 4.15 3.20 4.78 3.91 4.57

hpvorig 3.38 3.41 2.52 3.46 3.91 4.57

indnow 8.52 9.06 9.51 9.12 7.90 9.81

indorig 8.52 8.06 10.06 9.05 7.90 9.81

invhpa 1.63 1.14 2.31 2.38 0.55 0.16

kids 0.27 0.27 0.27 0.27 0.27 0.27

lngwind 0.00 0.09 0.20 0.11 0.00 0.00

loqual 0.05 0.07 0.03 0.03 0.09 0.12

mratenow 8.22 7.73 9.95 8.81 7.32 7.56

mrateorig 8.22 7.72 9.95 8.82 7.32 7.56


nonowner 0.08 0.09 0.10 0.07 0.09 0.08

oil 0.00 26.96 54.47 53.35 0.00 0.00

origamt 118,523 119,569 89,096 121,636 136,192 148,320

piggyback 0.08 0.11 0.05 0.04 0.14 0.23

pmi 0.27 0.24 0.35 0.31 0.19 0.23

pmt 0.00 0.04 0.03 0.00 0.00 0.00

ppnow 0.73 0.67 0.36 0.38 0.73 0.72

pporig 0.73 0.74 0.75 0.71 0.73 0.72