CONCEPTS OF

PROGRAMMING LANGUAGES

TENTH EDITION

This page intentionally left blank

CONCEPTS OF

PROGRAMMING LANGUAGES

TENTH EDITION

ROBERT W. SEBESTA

University of Colorado at Colorado Springs

Boston Columbus Indianapolis New York San Francisco Upper Saddle River

Amsterdam Cape Town Dubai London Madrid Milan Munich Paris Montreal Toronto

Delhi Mexico City Sao Paulo Sydney Hong Kong Seoul Singapore Taipei Tokyo

Vice President and Editorial Director, ECS:

Marcia Horton

Editor in Chief: Michael Hirsch

Executive Editor: Matt Goldstein

Editorial Assistant: Chelsea Kharakozova

Vice President Marketing: Patrice Jones

Marketing Manager: Yez Alayan

Marketing Coordinator: Kathryn Ferranti

Marketing Assistant: Emma Snider

Vice President and Director of Production:

Vince O’Brien

Managing Editor: Jeff Holcomb

Senior Production Project Manager: Marilyn Lloyd

Manufacturing Manager: Nick Sklitsis

Operations Specialist: Lisa McDowell

Cover Designer: Anthony Gemmellaro

Text Designer: Gillian Hall

Cover Image: Mountain near Pisac, Peru;

Photo by author

Media Editor: Dan Sandin

Full-Service Vendor: Laserwords

Project Management: Gillian Hall

Printer/Binder: Courier Westford

Cover Printer: Lehigh-Phoenix Color

This book was composed in InDesign. Basal font is Janson Text. Display font is ITC Franklin Gothic.

Copyright © 2012, 2010, 2008, 2006, 2004 by Pearson Education, Inc., publishing as Addison-Wesley.

All rights reserved. Manufactured in the United States of America. This publication is protected by Copy-

right, and permission should be obtained from the publisher prior to any prohibited reproduction, storage

in a retrieval system, or transmission in any form or by any means, electronic, mechanical, photocopying,

recording, or likewise. To obtain permission(s) to use material from this work, please submit a written

request to Pearson Education, Inc., Permissions Department, One Lake Street, Upper Saddle River, New

Jersey 07458, or you may fax your request to 201-236-3290.

Many of the designations by manufacturers and sellers to distinguish their products are claimed as trade-

marks. Where those designations appear in this book, and the publisher was aware of a trademark claim,

the designations have been printed in initial caps or all caps.

Library of Congress Cataloging-in-Publication Data

Sebesta, Robert W.

Concepts of programming languages / Robert W. Sebesta.—10th ed.

p. cm.

Includes bibliographical references and index.

ISBN 978-0-13-139531-2 (alk. paper)

1. Programming languages (Electronic computers) I. Title.

QA76.7.S43 2009

005.13—dc22 2008055702

10 9 8 7 6 5 4 3 2 1

ISBN 10: 0-13-139531-9

ISBN 13: 978-0-13-139531-2

New

to the

Tenth

Ed

iti

on

Chapter

5: a new

section

on the let

construct

in

functional pro-

gramming languages

was

added

Chapter

6: the

section

on

COBOL's record operations

was

removed;

new

sections

on

lists, tuples,

and

unions

in F#

were added

Chapter

8:

discussions

of

Fortran's

Do

statement

and

Ada's

case

statement were removed; descriptions

of the

control statements

in

functional

programming languages were moved

to

this chapter

from

Chapter

15

Chapter

9: a new

section

on

closures,

a new

section

on

calling sub-

programs indirectly,

and a new

section

on

generic

functions

in F#

were

added;

the

description

of

Ada's

generic subprograms

was

removed

Chapter

11:

a new

section

on

Objective-C

was

added,

the

chapter

was

substantially revised

Chapter

12: a new

section

on

Objective-C

was

added,

five new fig-

ures were added

Chapter

13: a

section

on

concurrency

in

functional programming

languages

was

added;

the

discussion

of

Ada's asynchronous message

passing

was

removed

Chapter

14: a

section

on C#

event handling

was

added

Chapter

15: a new

section

on F# and a new

section

on

support

for

functional

programming

in

primarily imperative languages were added;

discussions

of

several

different

constructs

in

functional programming

languages

were moved

from

Chapter

15

to

earlier chapters

vi

Preface

Changes for the Tenth Edition

T

he goals, overall structure, and approach of this tenth edition of Concepts

of Programming Languages remain the same as those of the nine ear-

lier editions. The principal goals are to introduce the main constructs

of contemporary programming languages and to provide the reader with the

tools necessary for the critical evaluation of existing and future programming

languages. A secondary goal is to prepare the reader for the study of com-

piler design, by providing an in-depth discussion of programming language

structures, presenting a formal method of describing syntax and introducing

approaches to lexical and syntatic analysis.

The tenth edition evolved from the ninth through several different kinds

of changes. To maintain the currency of the material, some of the discussion

of older programming languages has been removed. For example, the descrip-

tion of COBOL’s record operations was removed from Chapter 6 and that of

Fortran’s Do statement was removed from Chapter 8. Likewise, the description

of Ada’s generic subprograms was removed from Chapter 9 and the discussion

of Ada’s asynchronous message passing was removed from Chapter 13.

On the other hand, a section on closures, a section on calling subprograms

indirectly, and a section on generic functions in F# were added to Chapter 9;

sections on Objective-C were added to Chapters 11 and 12; a section on con-

currency in functional programming languages was added to Chapter 13; a

section on C# event handling was added to Chapter 14; a section on F# and

a section on support for functional programming in primarily imperative lan-

guages were added to Chapter 15.

In some cases, material has been moved. For example, several different

discussions of constructs in functional programming languages were moved

from Chapter 15 to earlier chapters. Among these were the descriptions of the

control statements in functional programming languages to Chapter 8 and the

lists and list operations of Scheme and ML to Chapter 6. These moves indicate

a significant shift in the philosophy of the book—in a sense, the mainstreaming

of some of the constructs of functional programming languages. In previous

editions, all discussions of functional programming language constructs were

segregated in Chapter 15.

Chapters 11, 12, and 15 were substantially revised, with five figures being

added to Chapter 12.

Finally, numerous minor changes were made to a large number of sections

of the book, primarily to improve clarity.

The Vision

This book describes the fundamental concepts of programming languages by

discussing the design issues of the various language constructs, examining the

design choices for these constructs in some of the most common languages,

and critically comparing design alternatives.

Any serious study of programming languages requires an examination of

some related topics, among which are formal methods of describing the syntax

and semantics of programming languages, which are covered in Chapter 3.

Also, implementation techniques for various language constructs must be con-

sidered: Lexical and syntax analysis are discussed in Chapter 4, and implemen-

tation of subprogram linkage is covered in Chapter 10. Implementation of

some other language constructs is discussed in various other parts of the book.

The following paragraphs outline the contents of the tenth edition.

Chapter Outlines

Chapter 1 begins with a rationale for studying programming languages. It then

discusses the criteria used for evaluating programming languages and language

constructs. The primary influences on language design, common design trade-

offs, and the basic approaches to implementation are also examined.

Chapter 2 outlines the evolution of most of the important languages dis-

cussed in this book. Although no language is described completely, the origins,

purposes, and contributions of each are discussed. This historical overview is

valuable, because it provides the background necessary to understanding the

practical and theoretical basis for contemporary language design. It also moti-

vates further study of language design and evaluation. In addition, because none

of the remainder of the book depends on Chapter 2, it can be read on its own,

independent of the other chapters.

Chapter 3 describes the primary formal method for describing the syntax

of programming language—BNF. This is followed by a description of attribute

grammars, which describe both the syntax and static semantics of languages.

The difficult task of semantic description is then explored, including brief

introductions to the three most common methods: operational, denotational,

and axiomatic semantics.

Chapter 4 introduces lexical and syntax analysis. This chapter is targeted to

those colleges that no longer require a compiler design course in their curricula.

Like Chapter 2, this chapter stands alone and can be read independently of the

rest of the book.

Chapters 5 through 14 describe in detail the design issues for the primary

constructs of programming languages. In each case, the design choices for several

example languages are presented and evaluated. Specifically, Chapter 5 covers

the many characteristics of variables, Chapter 6 covers data types, and Chapter 7

explains expressions and assignment statements. Chapter 8 describes control

Preface vii

viii Preface

statements, and Chapters 9 and 10 discuss subprograms and their implementa-

tion. Chapter 11 examines data abstraction facilities. Chapter 12 provides an in-

depth discussion of language features that support object-oriented programming

(inheritance and dynamic method binding), Chapter 13 discusses concurrent

program units, and Chapter 14 is about exception handling, along with a brief

discussion of event handling.

The last two chapters (15 and 16) describe two of the most important alterna-

tive programming paradigms: functional programming and logic programming.

However, some of the data structures and control constructs of functional pro-

gramming languages are discussed in Chapters 6 and 8. Chapter 15 presents an

introduction to Scheme, including descriptions of some of its primitive functions,

special forms, and functional forms, as well as some examples of simple func-

tions written in Scheme. Brief introductions to ML, Haskell, and F# are given

to illustrate some different directions in functional language design. Chapter 16

introduces logic programming and the logic programming language, Prolog.

To the Instructor

In the junior-level programming language course at the University of Colorado

at Colorado Springs, the book is used as follows: We typically cover Chapters 1

and 3 in detail, and though students find it interesting and beneficial reading,

Chapter 2 receives little lecture time due to its lack of hard technical content.

Because no material in subsequent chapters depends on Chapter 2, as noted

earlier, it can be skipped entirely, and because we require a course in compiler

design, Chapter 4 is not covered.

Chapters 5 through 9 should be relatively easy for students with extensive

programming experience in C++, Java, or C#. Chapters 10 through 14 are more

challenging and require more detailed lectures.

Chapters 15 and 16 are entirely new to most students at the junior level.

Ideally, language processors for Scheme and Prolog should be available for

students required to learn the material in these chapters. Sufficient material is

included to allow students to dabble with some simple programs.

Undergraduate courses will probably not be able to cover all of the mate-

rial in the last two chapters. Graduate courses, however, should be able to

completely discuss the material in those chapters by skipping over parts of the

early chapters on imperative languages.

Supplemental Materials

The following supplements are available to all readers of this book at www

.pearsonhighered.com/cssupport.

• A set of lecture note slides. PowerPoint slides are available for each chapter

in the book.

• PowerPoint slides containing all the figures in the book.

A companion Website to the book is available at www.pearsonhighered.com/sebe-

sta. This site contains mini-manuals (approximately 100-page tutorials) on a

handful of languages. These proceed on the assumption that the student knows

how to program in some other language, giving the student enough informa-

tion to complete the chapter materials in each language. Currently the site

includes manuals for C++, C, Java, and Smalltalk.

Solutions to many of the problem sets are available to qualified instruc-

tors in our Instructor Resource Center at www.pearsonhighered.com/irc.

Please contact your school’s Pearson Education representative or visit

www.pearsonhighered.com/irc to register.

Language Processor Availability

Processors for and information about some of the programming languages

discussed in this book can be found at the following Websites:

C, C++, Fortran, and Ada gcc.gnu.org

C# and F# microsoft.com

Java java.sun.com

Haskell haskell.org

Lua www.lua.org

Scheme www.plt-scheme.org/software/drscheme

Perl www.perl.com

Python www.python.org

Ruby www.ruby-lang.org

JavaScript is included in virtually all browsers; PHP is included in virtually all

Web servers.

All this information is also included on the companion Website.

Acknowledgments

The suggestions from outstanding reviewers contributed greatly to this

book’s present form. In alphabetical order, they are:

Matthew Michael Burke

I-ping Chu DePaul University

Teresa Cole Boise State University

Pamela Cutter Kalamazoo College

Amer Diwan University of Colorado

Stephen Edwards Virginia Tech

David E. Goldschmidt

Nigel Gwee Southern University–Baton Rouge

Preface ix

x Preface

Timothy Henry University of Rhode Island

Paul M. Jackowitz University of Scranton

Duane J. Jarc University of Maryland, University College

K. N. King Georgia State University

Donald Kraft Louisiana State University

Simon H. Lin California State University–Northridge

Mark Llewellyn University of Central Florida

Bruce R. Maxim University of Michigan–Dearborn

Robert McCloskey University of Scranton

Curtis Meadow University of Maine

Gloria Melara California State University–Northridge

Frank J. Mitropoulos Nova Southeastern University

Euripides Montagne University of Central Florida

Serita Nelesen Calvin College

Bob Neufeld Wichita State University

Charles Nicholas University of Maryland-Baltimore County

Tim R. Norton University of Colorado-Colorado Springs

Richard M. Osborne University of Colorado-Denver

Saverio Perugini University of Dayton

Walter Pharr College of Charleston

Michael Prentice SUNY Buffalo

Amar Raheja California State Polytechnic University–Pomona

Hossein Saiedian University of Kansas

Stuart C. Shapiro SUNY Buffalo

Neelam Soundarajan Ohio State University

Ryan Stansifer Florida Institute of Technology

Nancy Tinkham Rowan University

Paul Tymann Rochester Institute of Technology

Cristian Videira Lopes University of California–Irvine

Sumanth Yenduri University of Southern Mississippi

Salih Yurttas Texas A&M University

Numerous other people provided input for the previous editions of

Concepts of Programming Languages at various stages of its development. All

of their comments were useful and greatly appreciated. In alphabetical order,

they are: Vicki Allan, Henry Bauer, Carter Bays, Manuel E. Bermudez, Peter

Brouwer, Margaret Burnett, Paosheng Chang, Liang Cheng, John Crenshaw,

Charles Dana, Barbara Ann Griem, Mary Lou Haag, John V. Harrison, Eileen

Head, Ralph C. Hilzer, Eric Joanis, Leon Jololian, Hikyoo Koh, Jiang B. Liu,

Meiliu Lu, Jon Mauney, Robert McCoard, Dennis L. Mumaugh, Michael G.

Murphy, Andrew Oldroyd, Young Park, Rebecca Parsons, Steve J. Phelps,

Jeffery Popyack, Raghvinder Sangwan, Steven Rapkin, Hamilton Richard,

Tom Sager, Joseph Schell, Sibylle Schupp, Mary Louise Soffa, Neelam

Soundarajan, Ryan Stansifer, Steve Stevenson, Virginia Teller, Yang Wang,

John M. Weiss, Franck Xia, and Salih Yurnas.

Matt Goldstein, editor; Chelsea Kharakozova, editorial assistant; and,

Marilyn Lloyd, senior production manager of Addison-Wesley, and Gillian

Hall of The Aardvark Group Publishing Services, all deserve my gratitude for

their efforts to produce the tenth edition both quickly and carefully.

About the Author

Robert Sebesta is an Associate Professor Emeritus in the Computer Science

Department at the University of Colorado–Colorado Springs. Professor Sebesta

received a BS in applied mathematics from the University of Colorado in Boulder

and MS and PhD degrees in computer science from Pennsylvania State University.

He has taught computer science for more than 38 years. His professional interests

are the design and evaluation of programming languages.

Preface xi

xii

Contents

Chapter 1 Preliminaries 1

1.1

Reasons for Studying Concepts of Programming Languages ............... 2

1.2 Programming Domains ..................................................................... 5

1.3 Language Evaluation Criteria ........................................................... 7

1.4 Influences on Language Design ....................................................... 18

1.5 Language Categories ...................................................................... 21

1.6 Language Design Trade-Offs ........................................................... 23

1.7 Implementation Methods ................................................................ 23

1.8 Programming Environments ........................................................... 31

Summary • Review Questions • Problem Set .............................................. 31

Chapter 2 Evolution of the Major Programming Languages 35

2.1

Zuse’s Plankalkül .......................................................................... 38

2.2 Pseudocodes .................................................................................. 39

2.3 The IBM 704 and Fortran .............................................................. 42

2.4 Functional Programming: LISP ...................................................... 47

2.5 The First Step Toward Sophistication: ALGOL 60 ........................... 52

2.6 Computerizing Business Records: COBOL ........................................ 58

2.7 The Beginnings of Timesharing: BASIC ........................................... 63

Interview: ALAN COOPER—User Design and Language Design ................. 66

2.8 Everything for Everybody: PL/I ...................................................... 68

2.9 Two Early Dynamic Languages: APL and SNOBOL ......................... 71

2.10 The Beginnings of Data Abstraction: SIMULA 67 ........................... 72

2.11 Orthogonal Design: ALGOL 68 ....................................................... 73

2.12 Some Early Descendants of the ALGOLs ......................................... 75

Contents xiii

2.13 Programming Based on Logic: Prolog ............................................. 79

2.14 History’s Largest Design Effort: Ada .............................................. 81

2.15 Object-Oriented Programming: Smalltalk ........................................ 85

2.16 Combining Imperative and Object-Oriented Features: C++................ 88

2.17 An Imperative-Based Object-Oriented Language: Java ..................... 91

2.18 Scripting Languages ....................................................................... 95

2.19 The Flagship .NET Language: C# ................................................. 101

2.20 Markup/Programming Hybrid Languages ...................................... 104

Summary • Bibliographic Notes • Review Questions • Problem Set •

Programming Exercises ........................................................................... 106

Chapter 3 Describing Syntax and Semantics 113

3.1

Introduction ................................................................................. 114

3.2 The General Problem of Describing Syntax .................................... 115

3.3 Formal Methods of Describing Syntax ........................................... 117

3.4 Attribute Grammars ..................................................................... 132

History Note ..................................................................................... 133

3.5 Describing the Meanings of Programs: Dynamic Semantics ............ 139

History Note ..................................................................................... 154

Summary • Bibliographic Notes • Review Questions • Problem Set ........... 161

Chapter 4 Lexical and Syntax Analysis 167

4.1

Introduction ................................................................................. 168

4.2 Lexical Analysis ........................................................................... 169

4.3 The Parsing Problem .................................................................... 177

4.4 Recursive-Descent Parsing ............................................................ 181

4.5 Bottom-Up Parsing ...................................................................... 190

Summary • Review Questions • Problem Set • Programming Exercises ..... 197

Chapter 5 Names, Bindings, and Scopes 203

5.1

Introduction ................................................................................. 204

5.2 Names ......................................................................................... 205

History Note ..................................................................................... 205

xiv Contents

5.3 Variables ..................................................................................... 207

5.4 The Concept of Binding ................................................................ 209

5.5 Scope .......................................................................................... 218

5.6 Scope and Lifetime ...................................................................... 229

5.7 Referencing Environments ............................................................ 230

5.8 Named Constants ......................................................................... 232

Summary • Review Questions • Problem Set • Programming Exercises ..... 234

Chapter 6 Data Types 243

6.1

Introduction ................................................................................. 244

6.2 Primitive Data Types .................................................................... 246

6.3 Character String Types ................................................................. 250

History Note ..................................................................................... 251

6.4 User-Defined Ordinal Types ........................................................... 255

6.5 Array Types .................................................................................. 259

History Note ..................................................................................... 260

History Note ..................................................................................... 261

6.6 Associative Arrays ........................................................................ 272

Interview: ROBERTO IERUSALIMSCHY—Lua ........................... 274

6.7 Record Types ................................................................................ 276

6.8 Tuple Types .................................................................................. 280

6.9 List Types .................................................................................... 281

6.10 Union Types ................................................................................. 284

6.11 Pointer and Reference Types ......................................................... 289

History Note ..................................................................................... 293

6.12 Type Checking .............................................................................. 302

6.13 Strong Typing ............................................................................... 303

6.14 Type Equivalence.......................................................................... 304

6.15 Theory and Data Types ................................................................. 308

Summary • Bibliographic Notes • Review Questions • Problem Set •

Programming Exercises ........................................................................... 310

Contents xv

Chapter 7 Expressions and Assignment Statements 317

7.1

Introduction ................................................................................. 318

7.2 Arithmetic Expressions ................................................................ 318

7.3 Overloaded Operators ................................................................... 328

7.4 Type Conversions .......................................................................... 329

History Note ..................................................................................... 332

7.5 Relational and Boolean Expressions .............................................. 332

History Note ..................................................................................... 333

7.6 Short-Circuit Evaluation .............................................................. 335

7.7 Assignment Statements ................................................................ 336

History Note ..................................................................................... 340

7.8 Mixed-Mode Assignment .............................................................. 341

Summary • Review Questions • Problem Set • Programming Exercises ..... 341

Chapter 8 Statement-Level Control Structures 347

8.1

Introduction ................................................................................. 348

8.2 Selection Statements .................................................................... 350

8.3 Iterative Statements ..................................................................... 362

8.4 Unconditional Branching .............................................................. 375

History Note ..................................................................................... 376

8.5 Guarded Commands ..................................................................... 376

8.6 Conclusions .................................................................................. 379

Summary • Review Questions • Problem Set • Programming Exercises ..... 380

Chapter 9 Subprograms 387

9.1

Introduction ................................................................................. 388

9.2 Fundamentals of Subprograms ..................................................... 388

9.3 Design Issues for Subprograms ..................................................... 396

9.4 Local Referencing Environments ................................................... 397

9.5 Parameter-Passing Methods ......................................................... 399

History Note ..................................................................................... 407

History Note ..................................................................................... 407

xvi Contents

9.6 Parameters That Are Subprograms ............................................... 417

9.7 Calling Subprograms Indirectly ..................................................... 419

History Note ..................................................................................... 419

9.8 Overloaded Subprograms .............................................................. 421

9.9 Generic Subprograms ................................................................... 422

9.10 Design Issues for Functions .......................................................... 428

9.11 User-Defined Overloaded Operators ............................................... 430

9.12 Closures ...................................................................................... 430

9.13 Coroutines ................................................................................... 432

Summary • Review Questions • Problem Set • Programming Exercises ..... 435

Chapter 10 Implementing Subprograms 441

10.1

The General Semantics of Calls and Returns.................................. 442

10.2 Implementing “Simple” Subprograms ........................................... 443

10.3 Implementing Subprograms with Stack-Dynamic Local Variables ... 445

10.4 Nested Subprograms .................................................................... 454

10.5 Blocks ......................................................................................... 460

10.6 Implementing Dynamic Scoping .................................................... 462

Summary • Review Questions • Problem Set • Programming Exercises ..... 466

Chapter 11 Abstract Data Types and Encapsulation Constructs 473

11.1

The Concept of Abstraction .......................................................... 474

11.2 Introduction to Data Abstraction .................................................. 475

11.3 Design Issues for Abstract Data Types ........................................... 478

11.4 Language Examples ..................................................................... 479

Interview: BJARNE STROUSTRUP—C++: Its Birth,

Its Ubiquitousness, and Common Criticisms ............................................. 480

11.5 Parameterized Abstract Data Types ............................................... 503

11.6 Encapsulation Constructs ............................................................. 509

11.7 Naming Encapsulations ................................................................ 513

Summary • Review Questions • Problem Set • Programming Exercises ..... 517

Contents xvii

Chapter 12 Support for Object-Oriented Programming 523

12.1

Introduction ................................................................................. 524

12.2 Object-Oriented Programming ...................................................... 525

12.3 Design Issues for Object-Oriented Languages ................................. 529

12.4 Support for Object-Oriented Programming in Smalltalk ................. 534

Interview: BJARNE STROUSTRUP—On Paradigms and Better

Programming ......................................................................................... 536

12.5 Support for Object-Oriented Programming in C++ ......................... 538

12.6 Support for Object-Oriented Programming in Objective-C .............. 549

12.7 Support for Object-Oriented Programming in Java ......................... 552

12.8 Support for Object-Oriented Programming in C# ........................... 556

12.9 Support for Object-Oriented Programming in Ada 95 .................... 558

12.10 Support for Object-Oriented Programming in Ruby ........................ 563

12.11 Implementation of Object-Oriented Constructs ............................... 566

Summary • Review Questions • Problem Set • Programming Exercises .... 569

Chapter 13 Concurrency 575

13.1

Introduction ................................................................................. 576

13.2 Introduction to Subprogram-Level Concurrency ............................. 581

13.3 Semaphores ................................................................................. 586

13.4 Monitors ...................................................................................... 591

13.5 Message Passing .......................................................................... 593

13.6 Ada Support for Concurrency ....................................................... 594

13.7 Java Threads ................................................................................ 603

13.8 C# Threads .................................................................................. 613

13.9 Concurrency in Functional Languages ........................................... 618

13.10 Statement-Level Concurrency ....................................................... 621

Summary • Bibliographic Notes • Review Questions • Problem Set •

Programming Exercises ........................................................................... 623

xviii Contents

Chapter 14 Exception Handling and Event Handling 629

14.1

Introduction to Exception Handling .............................................. 630

History Note ..................................................................................... 634

14.2 Exception Handling in Ada ........................................................... 636

14.3 Exception Handling in C++ ........................................................... 643

14.4 Exception Handling in Java .......................................................... 647

14.5 Introduction to Event Handling ..................................................... 655

14.6 Event Handling with Java ............................................................. 656

14.7 Event Handling in C# ................................................................... 661

Summary • Bibliographic Notes • Review Questions • Problem Set •

Programming Exercises ........................................................................... 664

Chapter 15 Functional Programming Languages 671

15.1

Introduction ................................................................................. 672

15.2 Mathematical Functions ............................................................... 673

15.3 Fundamentals of Functional Programming Languages ................... 676

15.4 The First Functional Programming Language: LISP ..................... 677

15.5 An Introduction to Scheme ........................................................... 681

15.6 Common LISP ............................................................................. 699

15.7 ML .............................................................................................. 701

15.8 Haskell ........................................................................................ 707

15.9 F# ............................................................................................... 712

15.10 Support for Functional Programming in Primarily

Imperative Languages .................................................................. 715

15.11 A Comparison of Functional and Imperative Languages ................. 717

Summary • Bibliographic Notes • Review Questions • Problem Set •

Programming Exercises ........................................................................... 720

Chapter 16 Logic Programming Languages 727

16.1

Introduction ................................................................................. 728

16.2 A Brief Introduction to Predicate Calculus .................................... 728

16.3 Predicate Calculus and Proving Theorems ..................................... 732

Contents xix

16.4 An Overview of Logic Programming .............................................. 734

16.5 The Origins of Prolog ................................................................... 736

16.6 The Basic Elements of Prolog ....................................................... 736

16.7 Deficiencies of Prolog .................................................................. 751

16.8 Applications of Logic Programming .............................................. 757

Summary • Bibliographic Notes • Review Questions • Problem Set •

Programming Exercises ........................................................................... 758

Bibliography ................................................................................ 763

Index ........................................................................................... 773

This page intentionally left blank

2 Chapter 1 Preliminaries

B

efore we begin discussing the concepts of programming languages, we must

consider a few preliminaries. First, we explain some reasons why computer

science students and professional software developers should study general

concepts of language design and evaluation. This discussion is especially valu-

able for those who believe that a working knowledge of one or two programming

languages is sufficient for computer scientists. Then, we briefly describe the major

programming domains. Next, because the book evaluates language constructs and

features, we present a list of criteria that can serve as a basis for such judgments.

Then, we discuss the two major influences on language design: machine architecture

and program design methodologies. After that, we introduce the various categories

of programming languages. Next, we describe a few of the major trade-offs that

must be considered during language design.

Because this book is also about the implementation of programming languages,

this chapter includes an overview of the most common general approaches to imple-

mentation. Finally, we briefly describe a few examples of programming environments

an

d discuss their impact on software production.

1.1 Reasons for Studying Concepts of Programming Languages

It is natural for students to wonder how they will benefit from the study of pro-

gramming language concepts. After all, many other topics in computer science

are worthy of serious study. The following is what we believe to be a compel-

ling list of potential benefits of studying concepts of programming languages:

• Increased capacity to express ideas. It is widely believed that the depth at

which people can think is influenced by the expressive power of the lan-

guage in which they communicate their thoughts. Those with only a weak

understanding of natural language are limited in the complexity of their

thoughts, particularly in depth of abstraction. In other words, it is difficult

for people to conceptualize structures they cannot describe, verbally or in

writing.

Programmers, in the process of developing software, are similarly con-

strained. The language in which they develop software places limits on

the kinds of control structures, data structures, and abstractions they can

use; thus, the forms of algorithms they can construct are likewise limited.

Awareness of a wider variety of programming language features can reduce

such limitations in software development. Programmers can increase the

range of their software development thought processes by learning new

language constructs.

It might be argued that learning the capabilities of other languages does

not help a programmer who is forced to use a language that lacks those

capabilities. That argument does not hold up, however, because often, lan-

guage constructs can be simulated in other languages that do not support

those constructs directly. For example, a C programmer who had learned

the structure and uses of associative arrays in Perl (Wall et al., 2000) might

design structures that simulate associative arrays in that language. In other

1.1 Reasons for Studying Concepts of Programming Languages 3

words, the study of programming language concepts builds an appreciation

for valuable language features and constructs and encourages programmers

to use them, even when the language they are using does not directly sup-

port such features and constructs.

• Improved background for choosing appropriate languages. Many professional

programmers have had little formal education in computer science; rather,

they have developed their programming skills independently or through in-

house training programs. Such training programs often limit instruction to

one or two languages that are directly relevant to the current projects of the

organization. Many other programmers received their formal training years

ago. The languages they learned then are no longer used, and many features

now available in programming languages were not widely known at the time.

The result is that many programmers, when given a choice of languages for a

new project, use the language with which they are most familiar, even if it is

poorly suited for the project at hand. If these programmers were familiar with

a wider range of languages and language constructs, they would be better able

to choose the language with the features that best address the problem.

Some of the features of one language often can be simulated in another

language. However, it is preferable to use a feature whose design has been

integrated into a language than to use a simulation of that feature, which is

often less elegant, more cumbersome, and less safe.

• Increased ability to learn new languages. Computer programming is still a rela-

tively young discipline, and design methodologies, software development

tools, and programming languages are still in a state of continuous evolu-

tion. This makes software development an exciting profession, but it also

means that continuous learning is essential. The process of learning a new

programming language can be lengthy and difficult, especially for someone

who is comfortable with only one or two languages and has never examined

programming language concepts in general. Once a thorough understanding

of the fundamental concepts of languages is acquired, it becomes far easier

to see how these concepts are incorporated into the design of the language

being learned. For example, programmers who understand the concepts of

object-oriented programming will have a much easier time learning Java

(Arnold et al., 2006) than those who have never used those concepts.

The same phenomenon occurs in natural languages. The better you

know the grammar of your native language, the easier it is to learn a sec-

ond language. Furthermore, learning a second language has the benefit of

teaching you more about your first language.

The TIOBE Programming Community issues an index (http://www

.tiobe.com/tiobe_index/index.htm) that is an indicator of the

relative popularity of programming languages. For example, according to

the index, Java, C, and C++ were the three most popular languages in use

in August 2011.

1

However, dozens of other languages were widely used at

1. Note that this index is only one measure of the popularity of programming languages, and

its accuracy is not universally accepted.

4 Chapter 1 Preliminaries

the time. The index data also show that the distribution of usage of pro-

gramming languages is always changing. The number of languages in use

and the dynamic nature of the statistics imply that every software developer

must be prepared to learn different languages.

Finally, it is essential that practicing programmers know the vocabulary

and fundamental concepts of programming languages so they can read and

understand programming language descriptions and evaluations, as well as

promotional literature for languages and compilers. These are the sources

of information needed in order to choose and learn a language.

• Better understanding of the significance of implementation. In learning the con-

cepts of programming languages, it is both interesting and necessary to touch

on the implementation issues that affect those concepts. In some cases, an

understanding of implementation issues leads to an understanding of why

languages are designed the way they are. In turn, this knowledge leads to

the ability to use a language more intelligently, as it was designed to be used.

We can become better programmers by understanding the choices among

programming language constructs and the consequences of those choices.

Certain kinds of program bugs can be found and fixed only by a pro-

grammer who knows some related implementation details. Another ben-

efit of understanding implementation issues is that it allows us to visualize

how a computer executes various language constructs. In some cases, some

knowledge of implementation issues provides hints about the relative effi-

ciency of alternative constructs that may be chosen for a program. For

example, programmers who know little about the complexity of the imple-

mentation of subprogram calls often do not realize that a small subprogram

that is frequently called can be a highly inefficient design choice.

Because this book touches on only a few of the issues of implementa-

tion, the previous two paragraphs also serve well as rationale for studying

compiler design.

• Better use of languages that are already known. Many contemporary program-

ming languages are large and complex. Accordingly, it is uncommon for

a programmer to be familiar with and use all of the features of a language

he or she uses. By studying the concepts of programming languages, pro-

grammers can learn about previously unknown and unused parts of the

languages they already use and begin to use those features.

• Overall advancement of computing. Finally, there is a global view of comput-

ing that can justify the study of programming language concepts. Although

it is usually possible to determine why a particular programming language

became popular, many believe, at least in retrospect, that the most popu-

lar languages are not always the best available. In some cases, it might be

concluded that a language became widely used, at least in part, because

those in positions to choose languages were not sufficiently familiar with

programming language concepts.

For example, many people believe it would have been better if ALGOL

60 (Backus et al., 1963) had displaced Fortran (Metcalf et al., 2004) in the

1.2 Programming Domains 5

early 1960s, because it was more elegant and had much better control state-

ments, among other reasons. That it did not, is due partly to the program-

mers and software development managers of that time, many of whom did

not clearly understand the conceptual design of ALGOL 60. They found its

description difficult to read (which it was) and even more difficult to under-

stand. They did not appreciate the benefits of block structure, recursion,

and well-structured control statements, so they failed to see the benefits of

ALGOL 60 over Fortran.

Of course, many other factors contributed to the lack of acceptance of

ALGOL 60, as we will see in Chapter 2. However, the fact that computer

users were generally unaware of the benefits of the language played a sig-

nificant role.

In general, if those who choose languages were well informed, perhaps

better languages would eventually squeeze out poorer ones.

1.2 Programming Domains

Computers have been applied to a myriad of different areas, from controlling

nuclear power plants to providing video games in mobile phones. Because of

this great diversity in computer use, programming languages with very different

goals have been developed. In this section, we briefly discuss a few of the areas

of computer applications and their associated languages.

1.2.1 Scientific Applications

The first digital computers, which appeared in the late 1940s and early 1950s,

were invented and used for scientific applications. Typically, the scientific appli-

cations of that time used relatively simple data structures, but required large

numbers of floating-point arithmetic computations. The most common data

structures were arrays and matrices; the most common control structures were

counting loops and selections. The early high-level programming languages

invented for scientific applications were designed to provide for those needs.

Their competition was assembly language, so efficiency was a primary concern.

The first language for scientific applications was Fortran. ALGOL 60 and most

of its descendants were also intended to be used in this area, although they were

designed to be used in related areas as well. For some scientific applications

where efficiency is the primary concern, such as those that were common in the

1950s and 1960s, no subsequent language is significantly better than Fortran,

which explains why Fortran is still used.

1.2.2 Business Applications

The use of computers for business applications began in the 1950s. Special

computers were developed for this purpose, along with special languages. The

first successful high-level language for business was COBOL (ISO/IEC, 2002),

6 Chapter 1 Preliminaries

the initial version of which appeared in 1960. It is still the most commonly

used language for these applications. Business languages are characterized by

facilities for producing elaborate reports, precise ways of describing and stor-

ing decimal numbers and character data, and the ability to specify decimal

arithmetic operations.

There have been few developments in business application languages out-

side the development and evolution of COBOL. Therefore, this book includes

only limited discussions of the structures in COBOL.

1.2.3 Artificial Intelligence

Artificial intelligence (AI) is a broad area of computer applications charac-

terized by the use of symbolic rather than numeric computations. Symbolic

computation means that symbols, consisting of names rather than numbers,

are manipulated. Also, symbolic computation is more conveniently done with

linked lists of data rather than arrays. This kind of programming sometimes

requires more flexibility than other programming domains. For example, in

some AI applications the ability to create and execute code segments during

execution is convenient.

The first widely used programming language developed for AI applications

was the functional language LISP (McCarthy et al., 1965), which appeared

in 1959. Most AI applications developed prior to 1990 were written in LISP

or one of its close relatives. During the early 1970s, however, an alternative

approach to some of these applications appeared—logic programming using

the Prolog (Clocksin and Mellish, 2003) language. More recently, some

AI applications have been written in systems languages such as C. Scheme

(Dybvig, 2003), a dialect of LISP, and Prolog are introduced in Chapters 15

and 16, respectively.

1.2.4 Systems Programming

The operating system and the programming support tools of a computer sys-

tem are collectively known as its systems software. Systems software is used

almost continuously and so it must be efficient. Furthermore, it must have low-

level features that allow the software interfaces to external devices to be written.

In the 1960s and 1970s, some computer manufacturers, such as IBM,

Digital, and Burroughs (now UNISYS), developed special machine-oriented

high-level languages for systems software on their machines. For IBM main-

frame computers, the language was PL/S, a dialect of PL/I; for Digital, it was

BLISS, a language at a level just above assembly language; for Burroughs, it

was Extended ALGOL. However, most system software is now written in more

general programming languages, such as C and C++.

The UNIX operating system is written almost entirely in C (ISO, 1999),

which has made it relatively easy to port, or move, to different machines. Some

of the characteristics of C make it a good choice for systems programming.

It is low level, execution efficient, and does not burden the user with many

1.3 Language Evaluation Criteria 7

safety restrictions. Systems programmers are often excellent programmers

who believe they do not need such restrictions. Some nonsystems program-

mers, however, find C to be too dangerous to use on large, important software

systems.

1.2.5 Web Software

The World Wide Web is supported by an eclectic collection of languages,

ranging from markup languages, such as HTML, which is not a programming

language, to general-purpose programming languages, such as Java. Because

of the pervasive need for dynamic Web content, some computation capability

is often included in the technology of content presentation. This functionality

can be provided by embedding programming code in an HTML document.

Such code is often in the form of a scripting language, such as JavaScript or

PHP. There are also some markup-like languages that have been extended to

include constructs that control document processing, which are discussed in

Section 1.5 and in Chapter 2.

1.3 Language Evaluation Criteria

As noted previously, the purpose of this book is to examine carefully the under-

lying concepts of the various constructs and capabilities of programming lan-

guages. We will also evaluate these features, focusing on their impact on the

software development process, including maintenance. To do this, we need a set

of evaluation criteria. Such a list of criteria is necessarily controversial, because

it is difficult to get even two computer scientists to agree on the value of some

given language characteristic relative to others. In spite of these differences,

most would agree that the criteria discussed in the following subsections are

important.

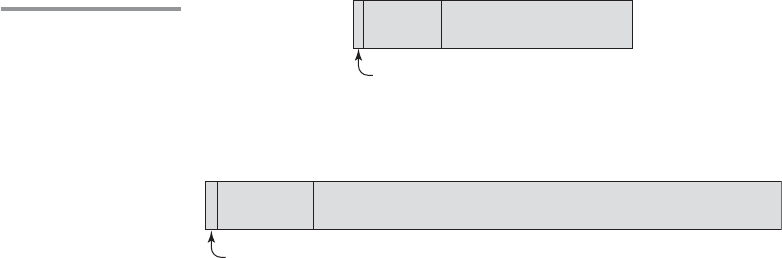

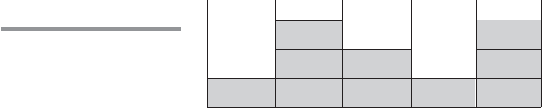

Some of the characteristics that influence three of the four most impor-

tant of these criteria are shown in Table 1.1, and the criteria themselves

are discussed in the following sections.

2

Note that only the most impor-

tant characteristics are included in the table, mirroring the discussion in

the following subsections. One could probably make the case that if one

considered less important characteristics, virtually all table positions could

include “bullets.”

Note that some of these characteristics are broad and somewhat vague,

such as writability, whereas others are specific language constructs, such as

exception handling. Furthermore, although the discussion might seem to imply

that the criteria have equal importance, that implication is not intended, and

it is clearly not the case.

2. The fourth primary criterion is cost, which is not included in the table because it is only

slightly related to the other criteria and the characteristics that influence them.

8 Chapter 1 Preliminaries

1.3.1 Readability

One of the most important criteria for judging a programming language is the

ease with which programs can be read and understood. Before 1970, software

development was largely thought of in terms of writing code. The primary

positive characteristic of programming languages was efficiency. Language

constructs were designed more from the point of view of the computer than

of the computer users. In the 1970s, however, the software life-cycle concept

(Booch, 1987) was developed; coding was relegated to a much smaller role, and

maintenance was recognized as a major part of the cycle, particularly in terms

of cost. Because ease of maintenance is determined in large part by the read-

ability of programs, readability became an important measure of the quality of

programs and programming languages. This was an important juncture in the

evolution of programming languages. There was a distinct crossover from a

focus on machine orientation to a focus on human orientation.

Readability must be considered in the context of the problem domain. For

example, if a program that describes a computation is written in a language not

designed for such use, the program may be unnatural and convoluted, making

it unusually difficult to read.

The following subsections describe characteristics that contribute to the

readability of a programming language.

1.3.1.1 Overall Simplicity

The overall simplicity of a programming language strongly affects its readabil-

ity. A language with a large number of basic constructs is more difficult to learn

than one with a smaller number. Programmers who must use a large language

often learn a subset of the language and ignore its other features. This learning

pattern is sometimes used to excuse the large number of language constructs,

Table 1.1

Language evaluation criteria and the characteristics that affect them

CRITERIA

Characteristic

READABILITY WRITABILITY RELIABILITY

Simplicity • • •

Orthogonality • • •

Data types • • •

Syntax design • • •

Support for abstraction • •

Expressivity • •

Type checking •

Exception handling •

Restricted aliasing •

1.3 Language Evaluation Criteria 9

but that argument is not valid. Readability problems occur whenever the pro-

gram’s author has learned a different subset from that subset with which the

reader is familiar.

A second complicating characteristic of a programming language is feature

multiplicity—that is, having more than one way to accomplish a particular

operation. For example, in Java, a user can increment a simple integer variable

in four different ways:

count = count + 1

count += 1

count++

++count

Although the last two statements have slightly different meanings from each

other and from the others in some contexts, all of them have the same mean-

ing when used as stand-alone expressions. These variations are discussed in

Chapter 7.

A third potential problem is operator overloading, in which a single oper-

ator symbol has more than one meaning. Although this is often useful, it can

lead to reduced readability if users are allowed to create their own overloading

and do not do it sensibly. For example, it is clearly acceptable to overload +

to use it for both integer and floating-point addition. In fact, this overloading

simplifies a language by reducing the number of operators. However, suppose

the programmer defined + used between single-dimensioned array operands

to mean the sum of all elements of both arrays. Because the usual meaning of

vector addition is quite different from this, it would make the program more

confusing for both the author and the program’s readers. An even more extreme

example of program confusion would be a user defining + between two vector

operands to mean the difference between their respective first elements. Opera-

tor overloading is further discussed in Chapter 7.

Simplicity in languages can, of course, be carried too far. For example,

the form and meaning of most assembly language statements are models of

simplicity, as you can see when you consider the statements that appear in the

next section. This very simplicity, however, makes assembly language programs

less readable. Because they lack more complex control statements, program

structure is less obvious; because the statements are simple, far more of them

are required than in equivalent programs in a high-level language. These same

arguments apply to the less extreme case of high-level languages with inad-

equate control and data-structuring constructs.

1.3.1.2 Orthogonality

Orthogonality in a programming language means that a relatively small set of

primitive constructs can be combined in a relatively small number of ways to

build the control and data structures of the language. Furthermore, every pos-

sible combination of primitives is legal and meaningful. For example, consider

10 Chapter 1 Preliminaries

data types. Suppose a language has four primitive data types (integer, float,

double, and character) and two type operators (array and pointer). If the two

type operators can be applied to themselves and the four primitive data types,

a large number of data structures can be defined.

The meaning of an orthogonal language feature is independent of the

context of its appearance in a program. (the word orthogonal comes from the

mathematical concept of orthogonal vectors, which are independent of each

other.) Orthogonality follows from a symmetry of relationships among primi-

tives. A lack of orthogonality leads to exceptions to the rules of the language.

For example, in a programming language that supports pointers, it should be

possible to define a pointer to point to any specific type defined in the language.

However, if pointers are not allowed to point to arrays, many potentially useful

user-defined data structures cannot be defined.

We can illustrate the use of orthogonality as a design concept by compar-

ing one aspect of the assembly languages of the IBM mainframe computers

and the VAX series of minicomputers. We consider a single simple situation:

adding two 32-bit integer values that reside in either memory or registers and

replacing one of the two values with the sum. The IBM mainframes have two

instructions for this purpose, which have the forms

A Reg1, memory_cell

AR Reg1, Reg2

where Reg1 and Reg2 represent registers. The semantics of these are

Reg1 ← contents(Reg1) + contents(memory_cell)

Reg1 ← contents(Reg1) + contents(Reg2)

The VAX addition instruction for 32-bit integer values is

ADDL operand_1, operand_2

whose semantics is

operand_2 ← contents(operand_1) + contents(operand_2)

In this case, either operand can be a register or a memory cell.

The VAX instruction design is orthogonal in that a single instruction can

use either registers or memory cells as the operands. There are two ways to

specify operands, which can be combined in all possible ways. The IBM design

is not orthogonal. Only two out of four operand combinations possibilities are

legal, and the two require different instructions, A and AR. The IBM design

is more restricted and therefore less writable. For example, you cannot add

two values and store the sum in a memory location. Furthermore, the IBM

design is more difficult to learn because of the restrictions and the additional

instruction.

1.3 Language Evaluation Criteria 11

Orthogonality is closely related to simplicity: The more orthogonal the

design of a language, the fewer exceptions the language rules require. Fewer

exceptions mean a higher degree of regularity in the design, which makes the

language easier to learn, read, and understand. Anyone who has learned a sig-

nificant part of the English language can testify to the difficulty of learning its

many rule exceptions (for example, i before e except after c).

As examples of the lack of orthogonality in a high-level language, consider

the following rules and exceptions in C. Although C has two kinds of struc-

tured data types, arrays and records (structs), records can be returned from

functions but arrays cannot. A member of a structure can be any data type

except void or a structure of the same type. An array element can be any data

type except void or a function. Parameters are passed by value, unless they

are arrays, in which case they are, in effect, passed by reference (because the

appearance of an array name without a subscript in a C program is interpreted

to be the address of the array’s first element).

As an example of context dependence, consider the C expression

a + b

This expression often means that the values of a and b are fetched and added

together. However, if a happens to be a pointer, it affects the value of b. For

example, if a points to a float value that occupies four bytes, then the value of b

must be scaled—in this case multiplied by 4—before it is added to a. Therefore,

the type of a affects the treatment of the value of b. The context of b affects

its meaning.

Too much orthogonality can also cause problems. Perhaps the most

orthogonal programming language is ALGOL 68 (van Wijngaarden et al.,

1969). Every language construct in ALGOL 68 has a type, and there are no

restrictions on those types. In addition, most constructs produce values. This

combinational freedom allows extremely complex constructs. For example, a

conditional can appear as the left side of an assignment, along with declarations

and other assorted statements, as long as the result is an address. This extreme

form of orthogonality leads to unnecessary complexity. Furthermore, because

languages require a large number of primitives, a high degree of orthogonality

results in an explosion of combinations. So, even if the combinations are simple,

their sheer numbers lead to complexity.

Simplicity in a language, therefore, is at least in part the result of a com-

bination of a relatively small number of primitive constructs and a limited use

of the concept of orthogonality.

Some believe that functional languages offer a good combination of sim-

plicity and orthogonality. A functional language, such as LISP, is one in which

computations are made primarily by applying functions to given parameters.

In contrast, in imperative languages such as C, C++, and Java, computations

are usually specified with variables and assignment statements. Functional

languages offer potentially the greatest overall simplicity, because they can

accomplish everything with a single construct, the function call, which can be

12 Chapter 1 Preliminaries

combined simply with other function calls. This simple elegance is the reason

why some language researchers are attracted to functional languages as the

primary alternative to complex nonfunctional languages such as C++. Other

factors, such as efficiency, however, have prevented functional languages from

becoming more widely used.

1.3.1.3 Data Types

The presence of adequate facilities for defining data types and data structures

in a language is another significant aid to readability. For example, suppose a

numeric type is used for an indicator flag because there is no Boolean type in the

language. In such a language, we might have an assignment such as the following:

timeOut = 1

The meaning of this statement is unclear, whereas in a language that includes

Boolean types, we would have the following:

timeOut = true

The meaning of this statement is perfectly clear.

1.3.1.4 Syntax Design

The syntax, or form, of the elements of a language has a significant effect on

the readability of programs. Following are some examples of syntactic design

choices that affect readability:

• Special words. Program appearance and thus program readability are strongly

influenced by the forms of a language’s special words (for example, while,

class, and for). Especially important is the method of forming compound

statements, or statement groups, primarily in control constructs. Some lan-

guages have used matching pairs of special words or symbols to form groups.

C and its descendants use braces to specify compound statements. All of

these languages suffer because statement groups are always terminated in the

same way, which makes it difficult to determine which group is being ended

when an

end or a right brace appears. Fortran 95 and Ada make this clearer

by using a distinct closing syntax for each type of statement group. For

example, Ada uses end if to terminate a selection construct and end loop

to terminate a loop construct. This is an example of the conflict between

simplicity that results in fewer reserved words, as in C++, and the greater

readability that can result from using more reserved words, as in Ada.

Another important issue is whether the special words of a language can

be used as names for program variables. If so, the resulting programs can

be very confusing. For example, in Fortran 95, special words, such as

Do

and End, are legal variable names, so the appearance of these words in a

program may or may not connote something special.

1.3 Language Evaluation Criteria 13

• Form and meaning. Designing statements so that their appearance at least

partially indicates their purpose is an obvious aid to readability. Semantics,

or meaning, should follow directly from syntax, or form. In some cases, this

principle is violated by two language constructs that are identical or similar

in appearance but have different meanings, depending perhaps on context. In

C, for example, the meaning of the reserved word static depends on the

context of its appearance. If used on the definition of a variable inside a func-

tion, it means the variable is created at compile time. If used on the definition

of a variable that is outside all functions, it means the variable is visible only in

the file in which its definition appears; that is, it is not exported from that file.

One of the primary complaints about the shell commands of UNIX

(Raymond, 2004) is that their appearance does not always suggest their

function. For example, the meaning of the UNIX command

grep can be

deciphered only through prior knowledge, or perhaps cleverness and famil-

iarity with the UNIX editor, ed. The appearance of grep connotes nothing

to UNIX beginners. (In ed, the command /regular_expression/ searches for a

substring that matches the regular expression. Preceding this with g makes

it a global command, specifying that the scope of the search is the whole

file being edited. Following the command with p specifies that lines with

the matching substring are to be printed. So g/regular_expression/p, which

can obviously be abbreviated as grep, prints all lines in a file that contain

substrings that match the regular expression.)

1.3.2 Writability

Writability is a measure of how easily a language can be used to create programs

for a chosen problem domain. Most of the language characteristics that affect

readability also affect writability. This follows directly from the fact that the

process of writing a program requires the programmer frequently to reread the

part of the program that is already written.

As is the case with readability, writability must be considered in the con-

text of the target problem domain of a language. It is simply not reasonable to

compare the writability of two languages in the realm of a particular application

when one was designed for that application and the other was not. For example,

the writabilities of Visual BASIC (VB) and C are dramatically different for

creating a program that has a graphical user interface, for which VB is ideal.

Their writabilities are also quite different for writing systems programs, such

as an operation system, for which C was designed.

The following subsections describe the most important characteristics

influencing the writability of a language.

1.3.2.1 Simplicity and Orthogonality

If a language has a large number of different constructs, some programmers

might not be familiar with all of them. This situation can lead to a misuse of

some features and a disuse of others that may be either more elegant or more

14 Chapter 1 Preliminaries

efficient, or both, than those that are used. It may even be possible, as noted

by Hoare (1973), to use unknown features accidentally, with bizarre results.

Therefore, a smaller number of primitive constructs and a consistent set of

rules for combining them (that is, orthogonality) is much better than simply

having a large number of primitives. A programmer can design a solution to a

complex problem after learning only a simple set of primitive constructs.

On the other hand, too much orthogonality can be a detriment to writ-

ability. Errors in programs can go undetected when nearly any combination of

primitives is legal. This can lead to code absurdities that cannot be discovered

by the compiler.

1.3.2.2 Support for Abstraction

Briefly, abstraction means the ability to define and then use complicated

structures or operations in ways that allow many of the details to be ignored.

Abstraction is a key concept in contemporary programming language design.

This is a reflection of the central role that abstraction plays in modern pro-

gram design methodologies. The degree of abstraction allowed by a program-

ming language and the naturalness of its expression are therefore important to

its writability. Programming languages can support two distinct categories of

abstraction, process and data.

A simple example of process abstraction is the use of a subprogram to

implement a sort algorithm that is required several times in a program. With-

out the subprogram, the sort code would need to be replicated in all places

where it was needed, which would make the program much longer and more

tedious to write. Perhaps more important, if the subprogram were not used, the

code that used the sort subprogram would be cluttered with the sort algorithm

details, greatly obscuring the flow and overall intent of that code.

As an example of data abstraction, consider a binary tree that stores integer

data in its nodes. Such a binary tree would usually be implemented in a language

that does not support pointers and dynamic storage management with a heap,

such as Fortran 77, as three parallel integer arrays, where two of the integers are

used as subscripts to specify offspring nodes. In C++ and Java, these trees can be

implemented by using an abstraction of a tree node in the form of a simple class

with two pointers (or references) and an integer. The naturalness of the latter

representation makes it much easier to write a program that uses binary trees

in these languages than to write one in Fortran 77. It is a simple matter of the

problem solution domain of the language being closer to the problem domain.

The overall support for abstraction is clearly an important factor in the

writability of a language.

1.3.2.3 Expressivity

Expressivity in a language can refer to several different characteristics. In a

language such as APL (Gilman and Rose, 1976), it means that there are very

powerful operators that allow a great deal of computation to be accomplished

1.3 Language Evaluation Criteria 15

with a very small program. More commonly, it means that a language has

relatively convenient, rather than cumbersome, ways of specifying computa-

tions. For example, in C, the notation count++ is more convenient and shorter