Use of Khan Academy Official SAT Practice and SAT Achievement:

An Observational Study

Technical Report

First released: 17 August 2020

For questions related to the study, please contact efficacy@khanacademy.org.

For press related questions, please contact [email protected].



Acknowledgments

We want to thank our methodological reviewers, Derek Briggs and Laura O’Dwyer, for their detailed

reviews and feedback on this report, and to thank our external panel of reviewers, Richard Bowman, Judie

Cherenfant, Christopher Dupuis, Erica Ramirez Horvath, Mercedes Pour, Simone Rahotep, and Ferdinand

Wipachit, for their guidance and feedback on this report.

Disclaimer

All statements and conclusions, unless specifically attributed to another source, are those of the authors

and do not necessarily reflect the policies or positions of the other organizations or references noted in

this report. For questions or comments about this study, please contact efficacy@khanacademy.org.

The Khan Academy Efficacy & Research team releases our technical reports as working papers to quickly

share the results of our latest studies. As such, these working papers have not undergone blind peer

review, but they were reviewed externally by experts before their release. We release our results early as a

way to obtain feedback and discussion from the research community before submitting them for

publication in a peer-reviewed journal.

Authors

Kodi Weatherholtz, Phillip Grimaldi,* Catherine Hicks,* Kelli Millwood Hill

Khan Academy

* these authors contributed equally to this work

Cassie Freeman, Bercem Akbayin-Sahin, Crystal Coker, Jennifer Ma, Lara Henneman

College Board

Suggested Citation

Weatherholtz, K., Grimaldi, P., Hicks, C., Hill, K.M., Freeman, C., Akbayin-Sahin, B., Coker, C., Ma, J.,

& Henneman, L. (2020). Use of Khan Academy Official SAT Practice and SAT Achievement: An

Observational Study. Mountain View, CA: Khan Academy.


Contents

Executive Summary

Introduction

Background

Prior Research on Test Preparation Effectiveness

Official SAT Practice: Product Features and Functionality

Defining ‘Best Practice Behaviors’ on OSP

Methods and Results

Sample

1. Descriptive Findings of OSP Usage Patterns

1a. What does student usage on OSP look like?

1b. When are students using OSP?

1c. How are students engaging in best practices?

2. Associations Between OSP and SAT Performance

2a. Does time spent using OSP relate to SAT achievement?

2b. Do all students benefit equally from their time spent on OSP?

2c. Are best practice behaviors associated with improved SAT performance?

2d. Are certain students more likely to engage in best practices?

Discussion

Limitations and Future Work

Conclusion

References

Appendix A. Table of Variables

Appendix B. Modeling the Relationship Between Time Using OSP and SAT Achievement

Appendix C. Modeling the Relationship Between Time Using OSP and SAT Achievement as a Function of Student Characteristics

Appendix D. Modeling the Relationship Between Best Practice Behaviors on OSP and SAT Performance

Appendix E. Modeling the Relationship Between Best Practice Behaviors on OSP and SAT Performance as a Function of Student Characteristics

Appendix F. Sensitivity Analysis

Appendix G. Modeling the Relationships Between Student Characteristics and the Likelihood of Engaging in Best Practice Behaviors


Executive Summary

At a time when more education is taking place online than ever before, students and educators need

targeted guidance to make the most of their instructional time. SAT® preparation and the journey to

college are no exception. Since 2015, Official SAT Practice (OSP) on Khan Academy® has provided free,

personalized practice in an online format to help all students build their skills and prepare for the SAT.

More than 10 million students have used OSP since the launch.

In this report, Khan Academy and College Board jointly analyze OSP usage by more than half a million

students in the class of 2019 between their PSAT/NMSQT® and their first SAT in order to associate the

use of OSP with their SAT performance. This builds on our prior work that showed a positive association

between OSP use and higher scores. While our previous work focused on practice between the

PSAT/NMSQT and the last SAT, we are now focusing our interval to the first SAT in order to better

isolate the impact of OSP, particularly from the intervening SAT assessments a student may have taken.

In our 2017 study with approximately 250,000 “early adopter” students, we observed a 90-point score

increase overall (from PSAT/NMSQT to the last SAT) for students using OSP. After removing the typical

growth between PSAT/NMSQT and the last SAT to examine the impact of OSP directly, the specific

added growth from spending six to eight hours practicing on OSP was 30 additional points on their last

SAT compared to students who did not use OSP. In this current study, with wider-spread adoption of

OSP, we found similar results for the PSAT/NMSQT to the first SAT interval. Specifically, we find that

students who spent six or more hours of practice on OSP scored an additional 21 points higher on their

first SAT than students who did not use OSP, but 39 points higher when students used at least one of the

best practice behaviors (described below). These findings hold true across student demographics,

including gender, race/ethnicity, and level of parental education.

This report looks at how OSP works for a broader population of students and outlines new insights on

three best practices of meaningful OSP use, which can optimize student time on the platform. OSP best

practice behaviors are actions any student can take during their practice that are associated with greater

scores on the SAT. These behaviors were operationalized based on how the product is designed, the data

elements available, and prior research concerning the effectiveness of test preparation strategies. We also

find that the best practice behaviors are correlated, but students show selective strategies of how they

engage with OSP. The three best practice behaviors include:

● Leveling up skills: As students progress through OSP material, they can achieve new levels in

the skills practiced. Overall, leveling up provides a signal that students are consistently advancing

in the content tested on the SAT, and is a marker for learning progress on OSP. This best practice

behavior also helps students learn how to monitor their progress.

● Taking a full-length practice test: Taking a full-length practice test simulates the real test

experience and helps students see what they do know and don’t know. Eight full-length online

practice tests, which can be taken in one sitting or over time, are available on OSP.

● Following personalized skill recommendations: OSP provides personalized skill

recommendations based on a student’s previous scores and performance on any PSAT-related test

or SAT assessment or through mini-diagnostic quizzes. Following the personalized skill

recommendations helps a student learn how to stay focused when they study and work on areas

where they need the most help.


More time spent on OSP is associated with higher scores on the SAT. However, time spent is not enough.

Best practice behaviors can help guide students and ensure that the time spent on practice is productive.

Although engaging in best practice behaviors was associated with improved performance, students varied

considerably in their adoption. Indeed, the majority of students in our sample unfortunately did not

engage in any of the best practice behaviors (roughly 8% of students in our sample spent six or more

hours and completed a best practice on OSP). Differences in student background, household, and

demographics were associated with the likelihood to engage in a best practice behavior. Although these

characteristics are associated with student behavior, they are likely rough indicators of other factors,

including different educational environments, that may impact how students practice. It is also

important to note that these between-group differences were small and did not result in meaningful

differences between groups in terms of their benefits from using OSP. These results signal that more work

is needed to point students to best practice behaviors and to motivate their usage across the platform. In

coming years, College Board and Khan Academy will work diligently with our partners across the

country through programmatic supports and platform refinements to ensure all students can follow these

best practices.

Although the data associating best practices with score increases are promising, we need more research on

implementation to ensure that when best practices are used more broadly, the associations remain as

strong. Further research will help to build our understanding of student progress; any differences in

adoption of best practice behaviors; and how supports such as school-day implementation and educator

tools can help keep all students engaged and on track. In the ever-evolving educational landscape, it is our

hope that sharing and continuing this research on the evidence for the use of best practice behaviors can

make a difference for the millions of students who use the platform on their path to college, and that

students can make the most effective use of their time on OSP and, ultimately, be successful in their

efforts.


Introduction

The mission of Khan Academy is to provide a free, world-class online education to anyone, anywhere. It

is available in 40 different languages and 18 million people use Khan Academy each month. In 2019, the

Khan Academy website included 429 courses, 4,347 articles, 74,507 problems, and 13,327 videos for

learners worldwide. These resources span K–16 subjects, including grade-specific K–12 courses in math,

science and engineering, computing, arts and humanities, economics and finance, test prep, and college

and careers.

In spring 2014, Khan Academy entered a partnership with College Board, the administrator of the SAT®,

to provide free SAT practice. By summer 2015, Khan Academy released Official SAT Practice (OSP).

OSP creates a personalized plan that will help each student prepare for the SAT. Included are thousands

of interactive questions with instant feedback, video lessons, eight full-length practice tests, and more. To

receive a personalized practice plan, students can either take a series of diagnostic quizzes or link their

College Board and Khan Academy accounts. Additional details on the OSP features are discussed later in

the Product Features section.

The purpose of this report is to describe findings from a large-scale analysis—conducted jointly by

researchers at Khan Academy and College Board—concerning the relationship between students’ use of

Khan Academy OSP and their SAT achievement. We investigate the following research questions:

Descriptive findings of OSP usage patterns

1a. What does student usage on OSP look like?

1b. When are students using OSP?

1c. How are students engaging in best practices?

Associations between OSP and SAT performance

2a. Does time spent using OSP relate to SAT achievement?

2b. Do all students benefit equally from their time spent on OSP?

2c. Are best practice behaviors associated with improved SAT performance?

2d. Are certain students more likely to engage in best practices?

In addressing these questions, we have the following goals: (i) to study the association between OSP

usage and SAT scores; (ii) to illuminate particular types of behaviors on OSP that vary in their association

with SAT performance and to identify whether certain groups of students are more/less likely to benefit

from OSP.

Background

Each year, millions of students take the SAT for college admissions and scholarship opportunities

(College Board, 2019a). To prepare for the SAT, students face a wide array of products and services that

vary in cost, personalization, and quality. Free options include released exams, library books, courses

offered by nonprofits, courses offered at school, promotional sessions by test prep companies, and OSP

on Khan Academy. In addition to free offerings, some families can afford paid access to online resources,

test prep books, courses, and individual tutors. With an ever-growing industry, the cost of courses and

tutoring can be substantial (Buchmann et al., 2010; U.S. News and World Report, 2020). Previous studies

on test prep have focused on products (e.g., courses) and services (e.g., tutoring), as well as dosage (e.g.,

number of sessions, hours). Generally, these studies found that test prep had a positive impact on

students’ test scores, although the estimated impact varied in magnitude and was typically within the

margin of error of the assessment (Appelrouth et al., 2018; Briggs, 2009; Buchmann et al., 2019; Moore

et al., 2019).


Prior Research on Test Preparation Effectiveness

Previous research on test preparation effectiveness has focused on the attributes of students who have

access to preparation as well as the impact of that preparation on their ACT and SAT outcomes.

Differences in who has access to various types of ACT and SAT preparation have been associated with

family income, race of the student, parental education, and high school environment. Students from

higher income families are consistently more likely to enroll in paid in-person coaching classes and

private tutoring. However, the role of race and parental education is less clear. Recent studies found

that East Asian and Black students were more likely than their White peers to take paid coaching classes

and students with higher parental education levels were more likely to take paid coaching classes, but not

private tutoring (Buchmann et al., 2010; NACAC, 2008; Briggs, 2001; Byun & Park, 2012; Park & Beck,

2015). Students also generally engage in more than one type of test preparation, which makes it difficult

to estimate the impact of any one particular preparation type. In a survey of spring 2018 SAT test takers,

71% of respondents said they used at least two approaches to practice, with the combination of Official

SAT Practice and test prep books being the most frequent; 18% of all respondents used this combination

(College Board, 2018a). In a survey of recent ACT retesters, 34% reported engaging in four or more test

preparation activities (ranging from free online programs to paid tutoring), with low-income and minority

students engaging in fewer test preparation activities (Moore et al., 2019).

The impacts of preparation vary across interventions and studies, with coaching associated with about 18

to 33 additional points on the math portion of the SAT and about 8 to 24 additional points on the verbal

portion (Briggs, 2009; Montgomery & Lilly, 2012). Coaching is broadly defined as systematic test

preparation involving content review, question drills, specific test-taking strategies (e.g., eliminating

answers, active reading, plugging in numbers), and general knowledge about the structure of the exam, all

with the aim of increasing test scores (Briggs, 2002). These impacts of coaching, generally within .25

standard deviations, are modest when compared to claims made by test prep companies (Briggs, 2009;

U.S. News and World Report, 2020). Impacts associated with preparation may matter practically,

especially when colleges use score cut-points in admissions decisions (Briggs, 2009).

Across preparation modalities, the dosage of preparation has been an area of interest. One analysis found

that each additional hour of tutoring was associated with an increase of 2.34 SAT points (Appelrouth et

al., 2015) and another analysis found that six to eight hours of OSP usage was associated with an

additional 30-point score increase from the PSAT/NMSQT® to students' last SAT (College Board,

2018b). More recently, Moore and colleagues reported that 11 hours or more of tutoring between a first

and second ACT was associated with a 0.60-point increase, on a scale of 1 to 36, compared to students

who did not have tutoring (Moore et al., 2019).

With the exception of retesting, few studies have closely examined the impact of particular preparation

activities (practice problems vs. lessons, mini-sections, timed tests) and cadence on SAT outcomes

(Moore et al., 2019; Appelrouth et al., 2018). Evidence from the Appelrouth et al. (2015, 2018) studies of

students in tutoring suggests that starting spaced preparation early (before June of a student's junior year),

timed practice tests, multiple official SAT tests, and sufficient instructional time all have positive

associations with SAT outcomes, ranging from 20 additional points for an official SAT practice test to 42

additional points for completing all recommended homework.

Although the reports referenced above provide some evidence of the impact of test preparation on

outcomes, there are important limitations in the literature. These limitations include the quality of study

designs, possible heterogeneity in coaching and coaching impacts, and changes in the tests themselves

(Briggs, 2009). While many test-prep studies have focused predominantly on paid coaching and private

tutoring (e.g., Briggs, 2009; Appelrouth et al., 2018), the increase of free online test preparation requires


greater attention as online features such as adaptive learning environments and scaled instructional

content have the potential to democratize access to an array of educational opportunities, including test

preparation (e.g., Means et al., 2010). Finally, there is a paucity of research on the impact of preparation

on SAT scores for the revised SAT that launched in 2016, with one analysis demonstrating score gains

from PSAT/NMSQT to SAT associated with the use of OSP (College Board, 2018b). The current analysis

aims to address important gaps in the literature by providing a detailed description of the practice

behaviors of students using OSP and the association of specific practice behaviors with outcomes on the

revised SAT.

Official SAT Practice: Product Features and Functionality

In this section, we explain how the skill levels of students are initialized in order to personalize their

practice on OSP. The OSP features and functionality described are based on what was available at the

time of this study. The numbers in the annotated screengrab of OSP below (see Figure 1) correspond to

the subsections below where we describe the core features and functionality of OSP.


Figure 1. Overview of Khan Academy Official SAT Practice features and functionality (number

annotations correspond to product features).

1. Skill levels. Content skills and skill levels are a central part of the OSP experience. The content

areas assessed on the SAT are discretized into 69 skills on OSP: 41 math skills, 7 passage-based

reading and writing skills, and 21 grammar skills. For

each skill, there are four difficulty levels. Level 1 is foundational (below SAT-level difficulty),

and levels 2, 3, and 4 correspond to easy, medium, and hard levels on the SAT, respectively. Skill

levels are fundamental to OSP because a student’s current level of each skill determines the

difficulty of the content that is presented to them on practice exercises. Skill levels are initialized

in one of three ways: (1) by completing a series of eight short, 10-question diagnostic quizzes on OSP

(four in math and four in reading and writing), each of which assesses a subset of skills in the

corresponding domain; (2) by completing a full-length practice exam on OSP, where item-level

performance for each tested skill is used to determine the student’s current level on that skill; or

(3) by linking Khan Academy and College Board accounts. When students link these

accounts, they consent to data sharing between Khan Academy and College Board, which enables

Khan Academy to access the students’ latest SAT Suite of Assessments score data (if any).

Specifically, Khan Academy imports item-level data, similar to what College Board reports to

students on the Question-Level Feedback portion of their SAT Suite score summary report. The

Question-Level Feedback report indicates the difficulty level of each item (easy, medium, or

hard) and the student’s performance (correct or incorrect). Khan Academy imports the item-level

data, coupled with information about the content skill assessed by each item, and uses this data to

initialize (or update) the student’s corresponding skill levels on Official SAT Practice. To protect

student privacy, Khan Academy does not save or store students’ SAT Suite data; the Khan

Academy API that imports College Board data automatically deletes the imported data once the

student’s skill levels are set.

2. Practice exercises. The practice library contains practice exercises for each of the skills tested on

the SAT. Students have the option to direct their study by choosing which skills to practice.

Within each practice exercise, if students get stuck or need a refresher, they can access step-by-step

hints for each math question (at this time ERW questions do not contain hints, but they will

in the future), as well as instructional videos that include worked examples. When a practice

exercise is completed, the student’s performance on that exercise is used to adjust their level on

the corresponding skill.

3. Personalized task recommendation. Although students have the option to practice any skill in

the practice library, the OSP recommendation engine is designed to help them focus on the skills

they would benefit most from practicing. Specifically, the recommendation engine is designed to

maximize utility by identifying and prioritizing the skills that a user currently has a low

performance on and that are the most likely to occur on the SAT. The recommendation queue for

math and for reading and writing always contains four items: three practice exercises and a timed

mini-section (explained in the next section). Once those four tasks are completed, the queue is

reset with four new tasks: three new practice exercises and a new timed mini-section.

4. Timed mini-sections. As the name suggests, timed mini-sections are short mixed-skill practice

tasks with a time limit. Timed mini-sections are meant to help students build stamina and practice

time management and pacing skills. Timed mini-sections are always part of the recommendation

queue but they are initially “locked.” In order to unlock a timed mini-section, the student must

first complete the three recommended practice exercises in the recommendation queue.

5. Full-length practice exam. There are 8 full-length practice exams available on OSP. Six of these

practice tests were previously live operational SAT tests; two are never-before-released tests.

Students can complete the full practice exams online and pause between sections as needed. Thus,

it is not necessary to complete the full 3-hour practice exam in one sitting. Taking a practice exam

in one continuous sitting more closely simulates the real test experience. However, the ability to

pause between sections makes the full functionality of the practice exams accessible to students

who want to take them but who might not have three consecutive hours of access to OSP.

6. Task review. Students can review their previously completed practice exercises, timed

mini-sections, and full-length exam answers and scores. Once a previous task is selected, a student can

view all of the problems along with rationales for each answer choice. Math problems also

include a fully worked solution to the problem.


7. Instructional content. In addition to the in-depth solution steps, hints, and explanations for each

question, OSP features worked example videos and lessons and strategy articles. The videos are

short, narrated segments showing worked examples of SAT-type problems. Each math and

grammar skill in the OSP library has two associated “worked example” videos—one focusing on

a more basic problem and one focusing on a more advanced problem. The passage-based ELA

skills also have worked example videos. The “Tips and Strategies” tab features a range of articles

and videos covering topics such as SAT format, content, question types, and scoring, as well as

strategies for time management, active reading, and how to avoid careless errors.

8. Practice schedule and goal setting. Students can optionally create a practice schedule based on

when they are planning to take the SAT, how many full-length practice tests they want to take

before the SAT, and when/how long they want to practice (i.e., which days and how long per

day). Khan Academy sends email reminders to students (if they opt in) about their practice

schedule, and the schedule is available on their Official SAT Practice dashboard.
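The queue mechanics described in items 3 and 4 can be sketched in a few lines of Python. This is an illustrative toy model, not Khan Academy's implementation: the `Skill` fields, the `sat_frequency` values, and in particular the priority formula in `pick_exercises` are assumptions chosen only to reflect the stated goal of prioritizing skills with low current performance that occur often on the SAT. The three-exercise queue, the locked timed mini-section, and the reset after all four tasks come from the description above.

```python
from dataclasses import dataclass

MAX_LEVEL = 4            # from the text: each skill has four difficulty levels
EXERCISES_PER_QUEUE = 3  # from the text: three practice exercises per queue


@dataclass
class Skill:
    name: str
    level: int            # current level, 1 (foundational) to 4 (hard)
    sat_frequency: float  # hypothetical share of SAT questions testing this skill


def pick_exercises(skills: list[Skill]) -> list[str]:
    """Rank skills by an ASSUMED priority: low level and high SAT frequency first."""
    ranked = sorted(
        skills,
        key=lambda s: (MAX_LEVEL - s.level) * s.sat_frequency,
        reverse=True,
    )
    return [s.name for s in ranked[:EXERCISES_PER_QUEUE]]


@dataclass
class RecommendationQueue:
    """Toy model of one subject's queue: three exercises plus a timed mini-section."""
    exercises_done: int = 0
    resets: int = 0

    @property
    def mini_section_unlocked(self) -> bool:
        # The mini-section stays locked until all three exercises are completed.
        return self.exercises_done >= EXERCISES_PER_QUEUE

    def complete_exercise(self) -> None:
        if self.exercises_done < EXERCISES_PER_QUEUE:
            self.exercises_done += 1

    def complete_mini_section(self) -> bool:
        """Return False if still locked; otherwise finish the queue and reset it."""
        if not self.mini_section_unlocked:
            return False
        self.exercises_done = 0  # all four tasks done: queue resets with new tasks
        self.resets += 1
        return True
```

In this sketch a student who attempts the mini-section before finishing the three exercises is simply refused, which mirrors the "locked" state described in item 4.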

Defining ‘Best Practice Behaviors’ on OSP

As outlined above, OSP offers a rich array of features and functionality to help students prepare for the

SAT. Given this functionality and the varied needs of individual students, there is no single “best” or

ideal way to use OSP. However, we believe there are several OSP behaviors that are broadly applicable

based on how the product is designed, the data elements available, and prior research concerning the

effectiveness of test preparation strategies. These behaviors constitute a working operational definition of

best practices, not a comprehensive definition. There are several notable behaviors that are not included,

such as whether a student set and followed a practice schedule and whether a student spent time

reviewing their incorrect responses. These omissions are due to logistical issues; specifically, due to the

nature and granularity of data instrumentation for OSP, some usage behaviors are challenging to reliably

isolate and quantify. In this study, we operationalize three best practice behaviors on OSP:

● Leveling up skills. Students with linked accounts begin their path through OSP content with a

skill level initialized from their previous PSAT/NMSQT performance; as such, students are placed

at their learning edge when they begin practice on OSP. As students progress through OSP

material, they can achieve new levels in the skills practiced, up to a level 4. There are 69 different

skills, and not all students will level up on skills in the same way. For example, students may

practice very broadly across many skills, without spending enough time on one skill to “level up.”

However, overall leveling up in at least some skills provides a general signal that students are

consistently advancing in a domain tested on the SAT and is a marker for learning progress on

OSP. We operationalize this best practice as students leveling up 15 or more skills (out of 69

total skills) on OSP.

● Following skill recommendations. The OSP task recommender aims to identify the skills a

student would benefit the most from practicing by considering the student’s past performance and

the frequency with which each skill occurs on the SAT. While there are plenty of reasons why a

student might also need or want to practice specific skills that are not in their personalized

recommendation queue, progressing in recommended skills is a best practice because it guides a

student toward efficient use of their OSP time by focusing on skills that are highly relevant to

SAT questions and are in the greatest need of attention for an individual learner. We

operationalize this best practice as students completing 10 (or more) practice tasks with the

majority of tasks recommended to them.


● Completing a full-length practice exam. Previous research has repeatedly shown that practice

tests are an effective strategy for improving test performance and are often more effective than

other non-testing learning conditions, such as restudying or exclusive practice (see Adesope et al.,

2017 for a recent meta-analysis). The effectiveness of practice tests lies in a combination of

cognitive, metacognitive, and noncognitive benefits that occur when simulating the real test, as

discussed above. We operationalize this best practice as students completing at least one full-

length practice exam on OSP.
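The three operational definitions above translate directly into threshold checks. The following Python sketch is illustrative only: the `StudentUsage` field names are hypothetical, and "the majority of tasks recommended" is read here as strictly more than half of completed tasks, which is an assumption about the exact cutoff.

```python
from dataclasses import dataclass


@dataclass
class StudentUsage:
    # Hypothetical per-student summary counts; names are not from the report.
    skills_leveled_up: int
    tasks_completed: int
    recommended_tasks_completed: int
    full_length_exams_completed: int


def best_practice_flags(u: StudentUsage) -> dict[str, bool]:
    """Flag each of the three best practice behaviors for one student."""
    return {
        # Leveled up 15 or more of the 69 skills.
        "leveling_up": u.skills_leveled_up >= 15,
        # Completed 10+ tasks, with a majority (assumed: > 50%) recommended.
        "following_recommendations": (
            u.tasks_completed >= 10
            and 2 * u.recommended_tasks_completed > u.tasks_completed
        ),
        # Completed at least one full-length practice exam.
        "full_length_exam": u.full_length_exams_completed >= 1,
    }


example = best_practice_flags(
    StudentUsage(
        skills_leveled_up=16,
        tasks_completed=12,
        recommended_tasks_completed=6,
        full_length_exams_completed=0,
    )
)
```

In the example, the student meets the leveling-up threshold but not the other two: exactly half of their tasks were recommended, which is not a majority under the assumed cutoff.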

Methods and Results

Sample

Participants in this study were students in the 2019 U.S. high school graduating cohort who met the

following three criteria:

1. Took the PSAT/NMSQT (National Merit Scholarship Qualifying Test) in October of their junior year

2. Took a subsequent SAT prior to graduating (either during their junior or senior year)

3. Linked their Khan Academy and College Board accounts

The first two criteria enable an analysis of SAT performance while adjusting for prior achievement.

Students in the 2019 graduating cohort took the 11th-grade PSAT/NMSQT during October 2017. The

majority (71%) of these students then took the SAT for the first time the following spring, during the

March, April, May, or June test administration dates. On average, the duration of the interval between the

students’ 11th-grade PSAT/NMSQT and their first SAT was about 26 weeks.
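As a rough illustration, the three inclusion criteria could be applied to a student record as follows. This is a sketch under stated assumptions, not the study's actual data pipeline: the record layout and field names are hypothetical, and the "prior to graduating" condition is not checked because the report does not give a graduation date.

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional


@dataclass
class StudentRecord:
    # All field names are hypothetical; the report does not describe its data schema.
    psat_nmsqt_date: Optional[date]  # 11th-grade PSAT/NMSQT date, if taken
    first_sat_date: Optional[date]   # first SAT date, if taken
    accounts_linked: bool            # Khan Academy and College Board accounts linked


def in_analytic_sample(rec: StudentRecord) -> bool:
    """Apply the three inclusion criteria to one student record."""
    psat = rec.psat_nmsqt_date
    # Criterion 1: PSAT/NMSQT in October of junior year (October 2017 for this cohort).
    took_psat = psat is not None and (psat.year, psat.month) == (2017, 10)
    # Criterion 2: an SAT taken after that PSAT/NMSQT.
    took_later_sat = (
        took_psat and rec.first_sat_date is not None and rec.first_sat_date > psat
    )
    # Criterion 3: linked accounts, which also serves as consent to data sharing.
    return took_psat and took_later_sat and rec.accounts_linked
```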

The third criterion functions as a consenting mechanism. When using OSP, a student has the option to

link their Khan Academy account to their College Board account. As part of the account-linking process,

a student is asked whether they consent to Khan Academy sharing their usage data with College Board.

The main user-facing benefit of linking accounts is that doing so is an efficient way to personalize

practice on OSP. When a student links accounts, Khan Academy Official SAT Practice is able to access

the student’s latest PSAT™ or SAT data from College Board. Item-level PSAT and SAT data are then

used to make personalized practice recommendations and to present content at the appropriate difficulty

level for each skill. Deciding not to link accounts is nonpunitive. If a student chooses not to link

accounts, the same degree of personalization within OSP is possible once the student initializes skill

levels through one of the alternate means outlined above (e.g., completing diagnostic quizzes or a full-

length practice exam on OSP).

Table 1 shows descriptive statistics of the analytic sample for this study, compared to the full population

of SAT test takers from the 2019 U.S. high school graduating cohort. About 2.2 million students in the

2019 graduating cohort took the SAT at least once, and 1.3 million took the PSAT/NMSQT in October of

their junior year, prior to taking the SAT. Of those 1.3 million students, 545,640 linked their College

Board and Khan Academy accounts. Therefore, the linked sample reflects 25% of the full population of

SAT test takers and 42% of the population of students who could have used OSP with personalized

practice based on their PSAT/NMSQT data. The linked sample is very similar to the full population of

PSAT/NMSQT+SAT test takers in terms of race/ethnicity, parental education, and PSAT/NMSQT

performance (with the exception that the linked sample has a higher percentage of females and lower

percentage of 11th-grade students scoring in the first quartile of the PSAT/NMSQT). All following

analyses were conducted with the analytic sample.
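The two coverage percentages quoted above follow from the totals reported in Table 1. A short Python check, using only those three counts (the variable names are ours):

```python
# Totals from Table 1 (2019 U.S. high school graduating cohort).
sat_test_takers = 2_220_087          # took the SAT at least once
psat_then_sat_takers = 1_291_916     # also took the 11th-grade PSAT/NMSQT
analytic_sample = 545_640            # additionally linked their accounts

share_of_all_sat = analytic_sample / sat_test_takers
share_of_psat_then_sat = analytic_sample / psat_then_sat_takers

print(f"Share of all SAT test takers: {share_of_all_sat:.0%}")
print(f"Share of PSAT/NMSQT+SAT test takers: {share_of_psat_then_sat:.0%}")
```

Rounded to whole percentages, these ratios give the 25% and 42% figures cited in the text.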


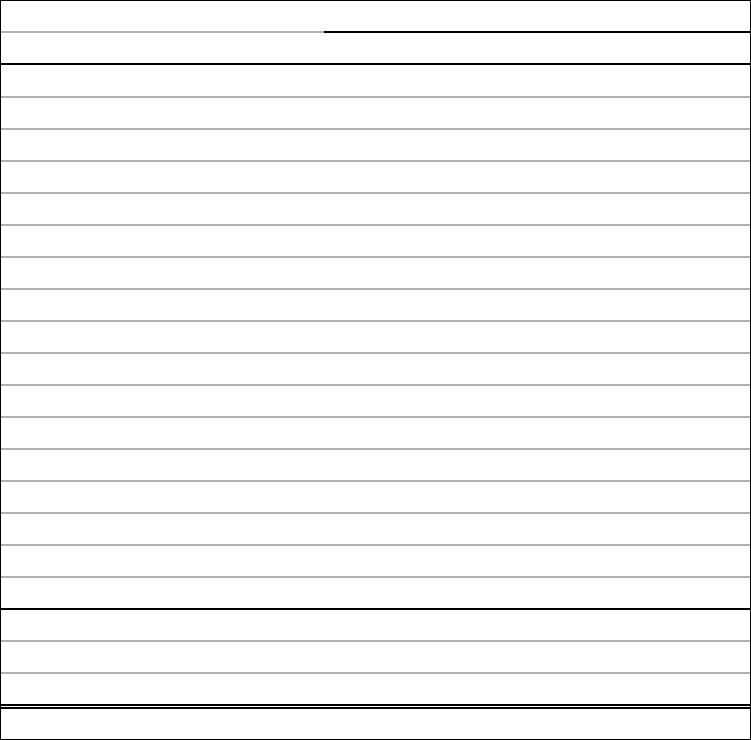

Table 1. Demographics of SAT Test Takers from 2019 High School Graduating Cohort

| Category | Subgroup | SAT Test Takers | SAT test takers who took 11th-grade PSAT/NMSQT | Analytic Sample: SAT test takers who took 11th-grade PSAT/NMSQT and linked Khan Academy and College Board accounts |
|---|---|---|---|---|
| Total | | 2,220,087 | 1,291,916 | 545,640 |
| Gender | Female | 52% | 53% | 58% |
| | Male | 48% | 47% | 42% |
| Race/Ethnicity | American Indian / Alaska Native | 1% | <1% | <1% |
| | Asian | 10% | 10% | 11% |
| | Black / African American | 12% | 11% | 11% |
| | Hispanic / Latino | 25% | 27% | 27% |
| | Native Hawaiian / Other Pacific Islander | <1% | <1% | <1% |
| | White | 43% | 46% | 44% |
| | Two or More Races | 4% | 4% | 4% |
| | No Response | 5% | 2% | 2% |
| Parental Education | No High School Diploma | 9% | 9% | 9% |
| | High School Diploma | 27% | 27% | 27% |
| | Associate Degree | 7% | 7% | 7% |
| | Bachelor’s Degree | 28% | 31% | 31% |
| | Graduate Degree | 21% | 24% | 24% |
| | No Response | 8% | 3% | 2% |
| 11th Grade PSAT Quartile | Q1 [320–910] | --- | 29% | 24% |
| | Q2 [920–1050] | --- | 25% | 26% |
| | Q3 [1060–1180] | --- | 22% | 25% |
| | Q4 [1190–1520] | --- | 24% | 26% |


1. Descriptive Findings of OSP Usage Patterns

In this section, we explore the overall usage patterns observed

on OSP to understand how students utilized OSP features and

to obtain insight into potential group differences in OSP usage.

For definitions of variables, see Appendix A. We explore the

depth of overall usage by examining the frequency with which

students use different features of the tool, how students’ usage

differs across different best practices, and if certain subgroups

of students are more likely to engage in best practices.

1a. What does student usage on OSP look like?

Roughly 10% of students spend six or more hours or complete a best practice on OSP.

Figure 2, the Depth of Usage chart, shows the percentage of

students engaging in various best practice behaviors on OSP

along with the percentage spending significant time on the platform. We show this usage both for all linkers and for linkers who attempted at least one problem on OSP, because a sizable subgroup of students take the initial step of linking their accounts but never practice on OSP.

Figure 2. OSP depth of usage.

Table 2 shows an overall summary of how students interacted with OSP, broken down by demographic groups and PSAT performance groups. This table provides a reference for how the overall usage varies across subgroups within our sample. We provide this table as a descriptive reference; in Section 2b we present analyses to determine if there are meaningful differences in usage across subgroups.

Key Findings

Roughly 10% of students spend 6+ hours or complete a best practice on OSP.

Students use OSP primarily within the two months before they take the SAT.

Best practice behaviors are correlated, but students show selective strategies for how they engage with OSP.


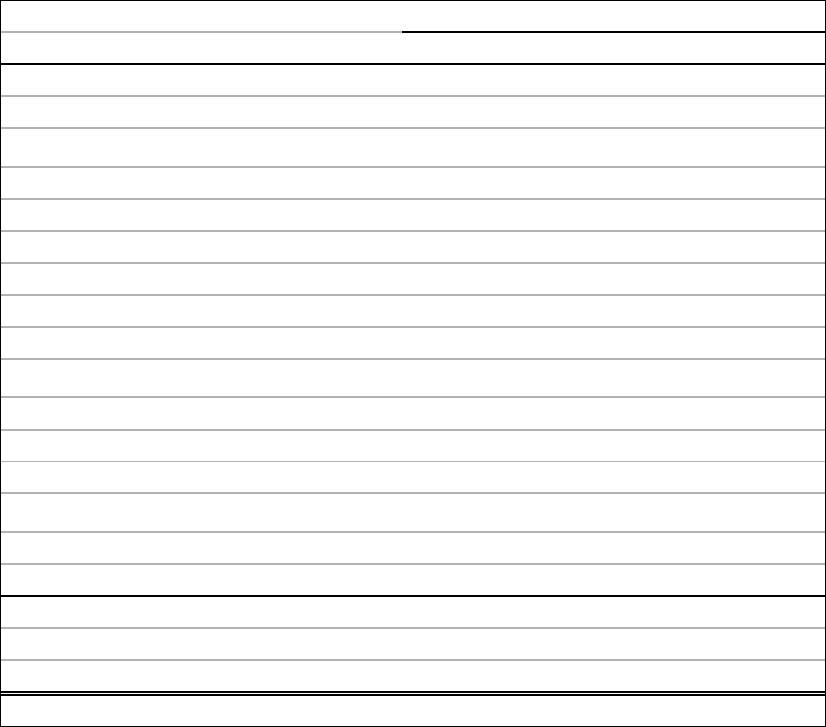

Table 2. OSP Usage by Subgroups for Linkers who Completed at least One Problem.

The last four columns are the target OSP usage behaviors.

| Group | Subgroup | n | % of sample | Median hours | 6+ hours | Leveled Up Skills | Completed Practice Exam | Followed Skill Recommendations |
|---|---|---|---|---|---|---|---|---|
| Total (Linkers with at least 1 problem) | | 299,315 | 100% | 1.8 | 18% | 14% | 13% | 18% |
| Gender | Male | 121,910 | 41% | 1.9 | 18% | 14% | 13% | 18% |
| | Female | 177,405 | 59% | 1.8 | 17% | 14% | 13% | 18% |
| Race/Ethnicity | White | 132,388 | 44% | 1.7 | 16% | 15% | 14% | 19% |
| | American Indian or Alaska Native | 1,100 | 0% | 1.5 | 13% | 6% | 10% | 14% |
| | Asian | 33,722 | 11% | 2.7 | 28% | 20% | 17% | 24% |
| | Black or African American | 34,383 | 11% | 2.0 | 20% | 10% | 11% | 15% |
| | Hispanic or Latino | 78,724 | 26% | 1.7 | 16% | 10% | 10% | 15% |
| | Native Hawaiian or Other Pacific Islander | 524 | 0% | 1.5 | 15% | 8% | 9% | 17% |
| | No response | 4,939 | 2% | 2.0 | 21% | 15% | 13% | 21% |
| | Two or more races | 13,535 | 5% | 1.8 | 18% | 15% | 14% | 20% |
| Parental education | No High School Diploma | 25,451 | 9% | 1.7 | 16% | 9% | 10% | 14% |
| | High School Diploma | 40,963 | 14% | 1.6 | 15% | 10% | 10% | 15% |
| | Associate Degree | 60,856 | 20% | 1.7 | 15% | 11% | 11% | 15% |
| | Bachelor's Degree | 93,576 | 31% | 1.8 | 18% | 15% | 14% | 19% |
| | Graduate Degree | 72,316 | 24% | 2.1 | 22% | 19% | 16% | 22% |
| | No response | 6,153 | 2% | 1.6 | 16% | 10% | 10% | 16% |
| PSAT quartile | Quartile 1 [320, 920) | 66,641 | 22% | 1.6 | 14% | 5% | 8% | 13% |
| | Quartile 2 [920, 1060) | 76,932 | 26% | 1.6 | 15% | 9% | 10% | 15% |
| | Quartile 3 [1060, 1190) | 75,069 | 25% | 1.8 | 18% | 15% | 13% | 18% |
| | Quartile 4 [1190, 1520] | 80,673 | 27% | 2.2 | 23% | 24% | 19% | 25% |

Commensurate with the low rates of completion and high rates of dropoff that research has documented

across many free online learning platforms with large usage (Gütl, Rizzardini, Chang & Morales, 2014;

Kizilcec & Halawa, 2015), a relatively small percentage of students in our sample spent six or more hours


on OSP (10% of all linkers). However, for students who engaged with the platform beyond linking and

completed at least one problem, this six or more hours usage group rises to 18%. Considering best

practice behaviors other than time spent on OSP, we see that as the time required to complete them

increases, smaller percentages of students engage in these more time-consuming behaviors (e.g.,

completing a full-length practice exam vs. completing 10 recommended tasks).
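The 10% and 18% figures describe the same students measured against two different denominators. The sketch below reconciles them using the sample sizes from Tables 1 and 2; the variable names are ours, and because the 18% rate is itself rounded, the derived count is only approximate.

```python
all_linkers = 545_640         # analytic sample (Table 1)
active_linkers = 299_315      # linkers with at least one problem (Table 2)
six_plus_rate_active = 0.18   # rounded rate from Table 2

# Linkers who never attempted a problem on OSP.
inactive_linkers = all_linkers - active_linkers

# Approximate number of students with six or more hours (the rate is rounded).
six_plus_students = six_plus_rate_active * active_linkers

# The same students expressed as a share of all linkers.
six_plus_rate_all = six_plus_students / all_linkers
print(inactive_linkers, round(100 * six_plus_rate_all))
```

The difference of 246,325 linkers with no practice is the same count reported for the no-practice reference group in Section 2c.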

1b. When are students using OSP?

Primarily within the two months before they take the SAT.

As testing dates and OSP usage are subject to student and school choices, there are variable amounts of

time between the students’ PSAT/NMSQT and first SAT test dates. One concern when evaluating OSP

usage as a contributor to SAT performance is that it is possible that some students’ time on OSP occurs

far in advance of their SAT testing date. In this case, we might question whether OSP truly serves as an

effective intervention for SAT performance.

Figure 3 shows how students’ practice minutes on OSP are distributed across the weeks leading up to the SAT. Crucially, this figure illustrates that most student activity on OSP is concentrated in the weeks immediately leading up to the SAT.

1

Among students who used OSP for six or more hours,

the average student spent 80% of their total OSP time within 8 weeks of the SAT and nearly all (98%) of

their time within 12 weeks.
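One way to compute a per-student concentration measure like the one summarized above is to take the share of a student's total practice minutes that falls within a window before the test date. This is a minimal sketch under assumed inputs: the session layout, function name, and example dates are hypothetical, and the report's exact computation may differ.

```python
from datetime import date


def share_within_weeks(sessions, sat_date, weeks):
    """Fraction of total practice minutes logged within `weeks` weeks before the SAT.

    sessions: list of (session_date, minutes) pairs (hypothetical layout).
    """
    total = sum(minutes for _, minutes in sessions)
    if total == 0:
        return 0.0
    window_days = 7 * weeks
    recent = sum(
        minutes
        for day, minutes in sessions
        if 0 <= (sat_date - day).days <= window_days
    )
    return recent / total


# Invented example: one student's sessions before a March 9 test date.
sat_day = date(2019, 3, 9)
example_sessions = [
    (date(2018, 11, 10), 30),   # 17 weeks before
    (date(2019, 1, 5), 60),     # 9 weeks before
    (date(2019, 2, 9), 150),    # 4 weeks before
    (date(2019, 3, 2), 160),    # 1 week before
]
share_8_weeks = share_within_weeks(example_sessions, sat_day, 8)
share_12_weeks = share_within_weeks(example_sessions, sat_day, 12)
print(share_8_weeks, share_12_weeks)
```

Averaging this share across students would give a summary comparable in form to the 80% and 98% figures.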

1

It is still important to note the variance in students’ time between PSAT/NMSQT and SAT across this sample, which may drive

differences in students’ performance between their PSAT/NMSQT and SAT scores (e.g., students will necessarily have different

amounts of time to study between tests). We consider this further by including the number of weeks between test dates as a

covariate in our predictive models.


Figure 3. Concentration of OSP practice minutes between PSAT/NMSQT and SAT, among linkers who

attempted at least 1 problem.

1c. How are students engaging in best practices?

Best practice behaviors are correlated, but students show selective strategies for how they engage with OSP.

In this subsection, we explore how students engaged with the above described best practice behaviors within overall usage. Figure 4 shows the strength of co-occurrence between any two of the OSP usage

variables for linkers who completed at least one problem on OSP. There is a positive correlation between

spending six or more hours and each of the other best practice behaviors (completing a full-length

practice exam; consistently following practice task recommendations; and leveling up).

2

On the surface,

this is not surprising since those practice behaviors require time. Notably, however, the magnitude of

these correlations is moderate, indicating that some students who spend six or more hours do not do any of the best practice behaviors and/or that some students do the best practice behaviors without spending six or more hours.

2

In order to show the best practice behaviors as defined above, Figure 4 presents the bivariate correlations between several dichotomized variables: i.e., the best practice measures are calculated from the raw platform usage data and assigned as categorical measures to students. It is possible that correlating dichotomized variables may dampen the strength of the relationship between measures. However, in this case, when we examined correlations between the raw variables that make up the best practice behaviors, we found closely similar results.
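For two yes/no usage variables, the bivariate (Pearson) correlation reduces to the phi coefficient, which can be computed from the four cells of a 2x2 table. The sketch below illustrates that calculation; the function name and the counts are invented for illustration and are not taken from the study data.

```python
from math import sqrt


def phi_coefficient(both, only_a, only_b, neither):
    """Pearson correlation between two 0/1 variables, from 2x2 cell counts."""
    a_yes = both + only_a
    a_no = only_b + neither
    b_yes = both + only_b
    b_no = only_a + neither
    denominator = sqrt(a_yes * a_no * b_yes * b_no)
    if denominator == 0:
        raise ValueError("each variable must take both values at least once")
    return (both * neither - only_a * only_b) / denominator


# Invented counts: A = spent 6+ hours, B = completed a practice exam.
example_phi = phi_coefficient(both=30, only_a=20, only_b=20, neither=30)
print(example_phi)
```

A moderate value arises whenever the two off-diagonal cells (one behavior without the other) are well populated, which is the pattern the text describes.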

Figure 4. Bivariate correlation between OSP usage variables for linkers who completed at least 1 problem.

Next, we examined the frequency of all possible combinations of the best practice behaviors occurring.

As OSP provides a wide range of possible uses, students with different needs may choose to emphasize

different features. Thus, it is important to understand the different ways students engage in best practice

behaviors.

Best practice behaviors are not expected to be distinct and independent user patterns on OSP, as many of

these behaviors overlap, and working toward one best practice may impact another. Increased time spent

on the platform is also necessarily associated with certain best practices: for example, completing a full-length practice exam requires students to spend three hours on OSP, and leveling up in initial skills

requires a minimum amount of time spent practicing those skills. However, students who engaged in one

best practice behavior would not necessarily engage in the others equally, nor would best practice

behaviors increase equally with increased OSP usage.

Figure 5 provides a more granular view of the frequency with which each behavior co-occurs with the

others across the entire sample. In this figure, we divide usage into several groups that will be repeated in Section 2c as an exploration of the best practices.


The vertical bars in this figure map to our overall sample and display a funnel of usage, with dark grey

representing students with no OSP usage and lighter grey representing students who spent less than six

hours on OSP and did not complete at least one best practice behavior. The colorful bars show students

who engaged more with OSP: the red bar represents learners who spent six or more hours on OSP but

who completed no best practices, gold bars are learners who similarly spent six or more hours but did

show at least one best practice, and blue bars are learners who showed at least one best practice but within

an overall usage of less than six hours.

Within these usage groups, we can also see the frequency of all possible combinations of behaviors, and

the connected closed circles indicate the combinations of the best practice behaviors co-occurring. The horizontal bars summarize the frequency of each best practice behavior in the overall data. This intersection plot thus shows, for each best practice behavior that we have operationalized as a useful signal in this study, both its overall frequency and the number of students who demonstrated each combination of behaviors.

Figure 5. Intersection of best practice behaviors for linkers.
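The two kinds of counts shown in an intersection plot (one count per exact combination of behaviors, and one overall count per behavior) can be tallied as in the sketch below. The behavior labels and the example students are hypothetical; this illustrates the bookkeeping only, not the code used to produce Figure 5.

```python
from collections import Counter


def combination_counts(students):
    """Count students by the exact set of best practice behaviors they showed."""
    return Counter(frozenset(behaviors) for behaviors in students)


def behavior_totals(students):
    """Count students showing each behavior, regardless of the other behaviors."""
    return Counter(b for behaviors in students for b in set(behaviors))


# Invented example: each student is the set of behaviors they met.
example_students = [
    {"practice_exam"},
    {"practice_exam"},
    {"leveled_up", "followed_recs"},
    {"leveled_up", "practice_exam", "followed_recs"},
    set(),  # no best practice behavior
]
combos = combination_counts(example_students)   # the vertical bars
totals = behavior_totals(example_students)      # the horizontal bars
print(combos[frozenset({"practice_exam"})], totals["practice_exam"])
```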

A few relevant patterns emerge from these descriptives: a sizable subgroup of students completed at least

one practice exam, but did not spend six or more hours on OSP, suggesting that they were primarily using

the tool to access practice exams. Another sizable subgroup spent six or more hours on OSP without

engaging in any of the other best practice behaviors, suggesting that they did not focus on recommended

tasks, complete practice exams, or level up skills. Another group of students leveled up in more than 15

skills but did not spend six or more hours on OSP, suggesting that they may be leveling up quickly in many skills but not pursuing the content that is most challenging for them.

Overall, best practices are positively associated with each other, as expected (see Figure 4). Yet, there was

some suggestive evidence that students could fall into distinct groups that focus on different best

practices. It is likely that these different usage patterns emerge because students prioritize OSP features

differently depending on their needs and goals. It is possible, for instance, that some students were

encouraged or even required to complete a practice exam on OSP, while other students may have had

access to practice exams outside of the platform. Without a deeper understanding of learner contexts, it is

out of the scope of this report to fully investigate what determined this pattern of OSP usage. But this

pattern suggests that many students will engage differently with a feature-rich platform, and that

explicitly encouraging best practice behaviors may be a useful strategy to help learners.

2. Associations Between OSP and SAT Performance

In this section, we broadly examine how use of OSP is

associated with SAT performance. We explore this

association by examining the relationships between

SAT scores and the amount of time that students spent

on OSP, as well as the types of behaviors performed by

students on OSP during that time. We further break

down the relative contribution of best practice

behaviors. Throughout, these analyses include

important student characteristics such as parental

education and gender, along with administrative

characteristics of the tests themselves (e.g., the amount

of time between a student’s PSAT/NMSQT and SAT).

Finally, this section explores how student

characteristics interact with OSP, allowing us to

examine if these interactions work together to

strengthen or weaken the relationship to SAT

performance.

As we examine questions in this section, we present

observational analyses of the data designed to leverage

the natural fluctuations in student usage of OSP to

make inferences about the effectiveness of OSP. We

implement various statistical controls to account for the

influence of confounding factors. While a true

experiment with random assignment to conditions

would provide the highest standard of evidence, it was

not practically possible to implement such a design in this setting. Nevertheless, this analysis is an

important first step in establishing the effectiveness of OSP.

Key Findings

Spending time on OSP is associated with greater scores on the SAT; 6 hours is associated with 21 points (.11 effect size) more than students who did not use OSP. These findings hold true regardless of student demographic characteristics.

How students spent their time on OSP matters: Students who used OSP for 6+ hours and demonstrated at least 1 best practice behavior scored 39 points (.20 effect size) more than students who did not use OSP. This holds true regardless of student demographic characteristics.

Not all subgroups of students are equally likely to use best practice behaviors on OSP.

2a. Does time spent using OSP relate to SAT achievement?

Time spent using OSP was associated with improvements in SAT performance.

We hypothesize that use of OSP improves SAT performance; as such, it is reasonable to predict that there should be a positive relationship between the amount of time that students use OSP and their SAT scores. At a high level, we examined this relationship using a series of multiple linear regression models. We examined composite SAT scores, as well as the math and ERW (evidence-based reading and writing) subscores. The goal of this analysis was to estimate the difference in SAT score for students who spent more time using OSP while controlling for as many confounding factors as possible. The most important factor we accounted for was PSAT/NMSQT performance, which represents students’ prior achievement before their practice on OSP. We used the composite, math, and ERW PSAT/NMSQT scores when predicting the composite, math, and ERW SAT scores, respectively. We controlled for several demographic factors, specifically gender, race/ethnicity, and highest level of parental education (e.g., high school diploma, some college, etc.). We also controlled for test-taking conditions, such as whether students took the exam during a school day administration or weekend, and the time interval between the PSAT/NMSQT and the SAT.
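To make the idea of estimating the usage effect "while controlling for" prior achievement concrete, the sketch below fits an ordinary least squares model to invented, noise-free data. Everything here is an assumption made for illustration: the students, the coefficients (the hours slope is chosen so that six hours corresponds to 21 points), the strictly linear hours term, and the reduced covariate set. The report's actual specification is described in Appendix B.

```python
def solve(a, b):
    """Solve the linear system a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for i in reversed(range(n)):
        tail = sum(m[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (m[i][n] - tail) / m[i][i]
    return x


def fit_ols(rows, y):
    """Ordinary least squares via the normal equations (X'X) b = X'y."""
    k = len(rows[0])
    xtx = [[sum(r[i] * r[j] for r in rows) for j in range(k)] for i in range(k)]
    xty = [sum(r[i] * yi for r, yi in zip(rows, y)) for i in range(k)]
    return solve(xtx, xty)


def design_row(psat, hours, school_day):
    """Intercept, PSAT (centered at 1000, in hundreds of points), hours, school-day flag."""
    return [1.0, (psat - 1000) / 100, float(hours), float(school_day)]


# Invented students: (PSAT composite, hours on OSP, school-day administration 0/1).
students = [(900, 0, 0), (1000, 2, 1), (1100, 6, 0), (1200, 1, 1),
            (950, 8, 1), (1050, 4, 0), (1150, 0, 1), (1000, 10, 0)]

# Invented coefficients used only to generate noise-free SAT scores.
true_coefficients = [1020.0, 95.0, 3.5, -10.0]

rows = [design_row(*s) for s in students]
sat_scores = [sum(c * x for c, x in zip(true_coefficients, row)) for row in rows]

coefficients = fit_ols(rows, sat_scores)
hours_effect_at_six = 6 * coefficients[2]  # adjusted difference at six hours
print(round(hours_effect_at_six, 2))
```

Because the data are generated without noise, the fit recovers the hours slope exactly; the point is only that the hours coefficient is read off after the PSAT and test-condition terms have absorbed their share of the variation.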

For the purposes of this report, we focus our discussion on the estimated impact of time using OSP on

SAT performance. For a full breakdown of our statistical modeling procedures and complete results from

our regression analysis, see Appendix B. The model estimates for hours spent using OSP are represented graphically in Figure 6. This figure shows the estimated improvement to SAT performance for composite

(Panel A), math (Panel B), and ERW (Panel C) scales, as a function of the number of hours spent using

OSP, controlling for the confounding variables in the model. In general, we see that the amount of time

spent using OSP was positively associated with higher SAT performance. This positive relationship was

found at the composite level, as well as each of the math and ERW subscales. However, we do note that

the overall impact of OSP usage was slightly larger for math than for ERW, which is consistent with past

studies evaluating the effects of test prep (Briggs, 2009). Moreover, the benefits of OSP use taper off

more quickly for ERW than math, suggesting that the ceiling of benefits from using OSP is reached more

quickly for ERW than for math. It is not clear whether this represents a limit of the OSP platform, or a

general limit on the ability to improve ERW performance. It is also worth noting that the top of each

panel in Figure 6 represents the frequencies with which students use OSP at given time intervals. The vast

majority of students (approximately 80%) use OSP for less than 3 hours. Thus, while increased usage of

OSP was associated with increased SAT performance, the majority of students are not using OSP enough

to obtain meaningful benefits to their performance.


Figure 6. The estimated change in SAT scores as a function of hours using OSP, after controlling for

students’ PSAT score and demographic characteristics. The effects on composite, math, and evidence-based reading and writing (ERW) are shown in panels A, B, and C, respectively. The plots show the

increase in SAT points achieved, relative to students who use OSP for 0 hours. The effect size is the

change in SAT divided by the overall standard deviation.

In order to estimate the magnitude of the effect of OSP usage, effect sizes are included on the right side of

the Y axis in each panel in Figure 6. The effect size is the estimated change in SAT points divided by the standard deviation of SAT scores. In the context of educational interventions, less than .05 is considered small, .05 to less than .20 is considered medium, and .20 or greater is considered large (Kraft, 2018). For the composite, math, and ERW scales, a large effect size of .20 was achieved at 12.3, 11.1, and 13.3 hours of OSP usage, respectively. At the six-hour point, the effect sizes were 0.11, 0.12, and 0.11 for composite, math, and

ERW scales, respectively. These results suggest that OSP usage has slightly greater benefits for math than

for ERW, which is consistent with past research on the benefits of test prep. It is important to note that

limitations in our data set prevent us from making strong claims on this matter. In order to properly

evaluate the differential effects of usage on the SAT subscales, we would need to be able to match the

topic domain of the OSP practice data to that of the outcome. Unfortunately, our OSP usage data does not

specify the domain in which a student was using OSP, though we do plan on conducting such analysis


when the data become available. For this reason, we will only focus on the effects of OSP at the

composite level for the remainder of this report.
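The effect size calculation described above is a single division followed by a comparison against the benchmarks. The sketch below shows it under one loud assumption: this section does not state the SAT standard deviation (the calculation is in Appendix B), so the value of 195 is back-solved from rounded pairs quoted in the report (for example, 21 points with an effect size of .11) and is not a reported figure. The function names are ours, and the benchmark function treats .20 itself as large, as the text does when it calls .20 a large effect size.

```python
# Assumption: not reported in this section; back-solved from rounded figures.
ASSUMED_SAT_SD = 195.0


def effect_size(point_gain, sd=ASSUMED_SAT_SD):
    """Estimated change in SAT points divided by the standard deviation."""
    return point_gain / sd


def magnitude(es):
    """Benchmarks quoted in the text (Kraft, 2018), treating .20 itself as large."""
    if es < 0.05:
        return "small"
    if es < 0.20:
        return "medium"
    return "large"


six_hour_es = round(effect_size(21), 2)
print(six_hour_es, magnitude(six_hour_es))
```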

3

2b. Do all students benefit equally from their time spent on OSP?

Students who use OSP appear to show positive benefits, regardless of gender, ethnicity, parental education, or PSAT level.

As an organization, the mission of Khan Academy is to provide a free, world-class education to anyone,

anywhere. For this reason, it is critically important to evaluate whether all students benefit equally from

using OSP. To this end, we conducted a series of analyses designed to examine whether demographic and

prior achievement factors interacted with use of OSP. More specifically, we wanted to know whether

students from different groups who spent approximately the same amount of time on OSP achieved

different outcomes. Similar to the analysis in Section 2a, we estimated the impact of OSP over time,

except we explored whether the impact changed as a function of various student characteristics. As in

previous analyses, we controlled for the same confounding variables (PSAT, gender, ethnicity, parental

education, test day, weeks since PSAT). For brevity, we focus only on the key findings here, specifically those for the most frequently observed subgroups in each category (e.g., Asian, Black, Latinx, and White for race/ethnicity). Estimates for under-observed categories had too much uncertainty to draw reasonable conclusions. The full details of our analysis and model results are in Appendix C.

The impact of OSP across levels of gender, ethnicity, and parental education is shown in panels A, B, and C of Figure 7, respectively. In each of the panels, we can observe several performance differences at the group level. These differences are commonly observed in the education literature and are theorized to result from the “opportunity gaps” that are also documented for these groups. Therefore, it is not surprising to replicate these differences in our sample. However, it is still important to examine whether

there is evidence that OSP usage is associated with different outcomes when comparing between these

groups.

For evaluating the impact of OSP specifically, the critical information is contained in the slope and shape

of the curves representing the rate of increase in SAT performance over time. When examining the

overall slope of these curves, we see that all subgroups derive a positive benefit from increased usage of

OSP regardless of gender, ethnicity, parental education, or PSAT score. Any differences in the benefits

are only slight. For example, in Figure 7a, the increasing benefits of usage taper off more rapidly for females than for males. However, it can be difficult to gauge how meaningful this difference actually is. To aid in this regard, we included point estimates of the benefit for each group at the six-hour point. Because six hours is the recommended minimum amount of time to spend using OSP, it serves as a useful checkpoint for comparing the relative effects observed across the groups. For example, the biggest difference between ethnicity groups at the six-hour mark is only an estimated 7 points. Through this lens, none of the observed between-group differences are practically significant.

3

One possible concern with the composite SAT measure is whether using a composite score will mask divergent subscores in

math and ERW: i.e., a student with a high ERW score and a low math score might have the same composite score as a student

who scored near the mean for both subsections. However, math and ERW scores in our sample were strongly positively

associated (.78), indicating that overall students’ subscores were not systematically divergent.


Figure 7. The estimated impact of using OSP as a function of student characteristics; gender (Panel A),

ethnicity (Panel B), parental education (Panel C) and PSAT level (Panel D). Estimated marginal means

are shown on the Y axes, which correct for other confounding variables. Effect sizes (ES) for each group

are shown at the six-hour point. Effect size is the change in SAT from 0 hours of use to 6 hours, divided

by the overall standard deviation (see Appendix B for calculation). Shaded regions are 95% confidence

intervals.

Next, we consider the impact of OSP across levels of prior achievement, as measured by PSAT/NMSQT

scores. Given the wide range of possible PSAT/NMSQT values, plotting this interactive effect is not as

straightforward as the demographic variables. To aid in this regard, we plot the effects of OSP usage at

three levels of composite PSAT/NMSQT scores; one standard deviation below the mean (872), the mean

(1059), and one standard deviation above the mean (1246). The effects across each of these three groups

are shown on Figure 7d. Again, we see that all groups showed positive effects of using OSP. There were

small differences on the overall slopes for each of these levels. In general, students scoring 1 standard

deviation above the mean PSAT/NMSQT scores showed the steepest increases in SAT achievement, followed by those scoring at average PSAT/NMSQT, then those scoring 1 standard deviation below the

mean PSAT/NMSQT. Comparing performance at the six hour mark for reference, the 1 standard

deviation above the mean students gained roughly 26 points, whereas students with 1 standard deviation

below the mean PSAT/NMSQT scores gained a more modest 18 points. However, these differences are

very small, and probably do not reach a threshold of being practically meaningful since the

PSAT/NMSQT is scored in increments of 10. The important thing to note is that students at all levels

demonstrated an ability to improve their performance by using OSP.
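The three plotted PSAT/NMSQT levels and the size of the gap between groups can be checked with simple arithmetic. In this sketch the numbers are those quoted in the text, and the variable names are ours.

```python
# PSAT/NMSQT composite levels quoted in the text.
psat_mean = 1059
psat_low = 872     # one standard deviation below the mean
psat_high = 1246   # one standard deviation above the mean

implied_sd = psat_mean - psat_low

# Estimated gains at the six-hour mark for the high and low groups.
gain_high, gain_low = 26, 18
gain_gap = gain_high - gain_low

score_increment = 10  # scores are reported in steps of 10
print(implied_sd, gain_gap, gain_gap < score_increment)
```

The gap between the highest and lowest groups is 8 points, which is less than one reportable score step; this is the basis for treating the difference as not practically meaningful.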

2c. Are best practice behaviors associated with improved SAT performance?

Students who used at least one best practice behavior outperformed students who spent a similar amount of time on OSP.

In the previous section, we demonstrated that the overall amount of time using OSP was related to higher

SAT performance. Of course, time alone does not cause a person to learn. We hypothesize that how

people spend their time on OSP has differential associations with learning. To test this, we examine if

students who spend their time engaging with best practice behaviors achieve better outcomes on the SAT

than students who do not. In the above section, we outlined three specific best practice behaviors: (1)

leveling up skills, (2) completing a full-length practice exam, and (3) following skill practice

recommendations. In this section, we will examine how these best practice behaviors contribute to overall

achievement on the SAT.

To examine the overall impact of the best practice learning behaviors, we used a linear regression model similar to those used in Section 2a. The goal of this analysis was to determine if students with varying

hours of usage on OSP and at least one of the three best practice behaviors perform better on the SAT

than students who do not. Specifically, we classified students into one of five groups:

1. Linked, but no OSP Practice

2. Less than six hours OSP, no best practice behaviors

3. Less than six hours OSP, at least one best practice behavior

4. Six or more hours of OSP, no best practice behaviors

5. Six or more hours of OSP, at least one best practice behavior

At least one best practice behavior meant that the student met the minimum threshold required for any

of the three best practice behaviors. In our analysis, we used the same control variables as those used in

the previous analysis (i.e., PSAT/NMSQT score, demographics, test conditions). Note that due to

limitations in our ability to tie the specific learning behaviors to a topic domain, we only focused on

composite SAT achievement in this analysis.
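The five-group classification above can be expressed as a small function. This is a sketch under assumptions: the input fields are hypothetical, "no OSP practice" is taken to mean zero attempted problems, and the per-behavior thresholds are treated as already evaluated upstream (their definitions are in Appendix A).

```python
def usage_group(problems_attempted, hours, best_practices_met):
    """Return the usage group number (1-5) from the list above.

    problems_attempted: number of OSP problems the student attempted.
    hours: total hours spent on OSP.
    best_practices_met: how many of the three best practice thresholds were met.
    """
    if problems_attempted == 0:
        return 1  # linked, but no OSP practice
    six_plus = hours >= 6
    any_best_practice = best_practices_met >= 1
    if not six_plus:
        return 3 if any_best_practice else 2
    return 5 if any_best_practice else 4


# Invented students covering each group.
example_groups = [
    usage_group(0, 0.0, 0),
    usage_group(40, 2.5, 0),
    usage_group(150, 4.0, 1),
    usage_group(300, 7.5, 0),
    usage_group(500, 9.0, 2),
]
print(example_groups)
```

In a regression, group 1 would serve as the reference level and the other four groups would enter as indicator variables alongside the control variables.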

For the purposes of this report, we focus our discussion on the estimated additional SAT score increase seen in the respective OSP practice groups. For a full breakdown of our statistical modeling procedures and complete results from our regression analysis, see Appendix D. Model estimates for the practice groups, relative to the “No OSP Practice” group, are shown in Figure 8. The Y axis on the left shows the additional SAT points predicted from the OSP usage groups defined above, relative to the no OSP practice group. Focusing first on students who used no best practice behaviors (gray bars), we see the same relationship between time spent on OSP and achievement that we presented in Section 2a: more time using OSP was associated with higher SAT scores. However, when we also examine students who

used at least one best practice behavior (green bars), we see that how time is spent using OSP matters

greatly. Students who spent fewer than six hours on OSP but used at least one best practice behavior

achieved roughly the same benefit as students who spent six or more hours but did not use a best practice behavior. Moreover, for students who spent six or more hours on OSP, the benefit to their SAT score of that time spent nearly doubled when they performed at least one best practice behavior. These students were estimated to have gained approximately 39.2 additional SAT points relative to the No OSP Practice group, an effect size of .20. We conducted a follow-up analysis to determine whether this benefit was observed for all students, similar to the analysis in Section 2b. The details of this analysis are in Appendix E. To summarize, we did not observe substantive differences in the effects of meeting the six-hour and one best practice behavior thresholds for students of various background characteristics.

Figure 8. The estimated change in composite SAT scores as a function of OSP usage, after controlling for

students’ PSAT score and demographic characteristics. The plot shows the increase in SAT points

achieved, relative to students who use OSP for 0 hours. Effect size is the change in SAT divided by the

overall standard deviation.

It is worth briefly discussing the sample sizes of each of the best practice groups. The reference level, the “No OSP Practice” group (not shown in Figure 8), was the largest group in this sample (n = 246,325). For students who used OSP for less than six hours, the majority of students did not engage in one of the best practice behaviors. The situation was reversed for students with six or more hours using OSP, where the majority of students did engage with one of the best practice behaviors. The relationship between time and engaging in a best practice behavior is complicated and was previously discussed in Section 1c.

In this analysis, we attempted to control for the effects of confounding variables using linear regression to fully partial out the effects of these covariates. To scrutinize these results with additional rigor, we repeated this analysis using several propensity score based approaches, which are in Appendix F. The

results from these covariate-balanced models are consistent with the effect reported in Figure 8. Thus, we

are reasonably confident in the reliability of this estimate.

Relative contribution of the best practice behaviors

While the above analysis makes a strong case that the overall amount of time spent on OSP matters less

than the way in which that time is spent, it does not speak to the relative benefits of the specific best

practice behaviors. For example, does taking a practice exam produce the same level of benefits as

leveling up skills? To this end, we also examined the relative contribution of each by using linear

regression to estimate the effects of these behaviors on overall SAT performance, while controlling for


the overall time using OSP and other confounding variables. The full details and results of this analysis

are in Appendix D. The key results are shown in Figure 9.

Figure 9. The estimated effect of specific practice behaviors on composite SAT scores, after controlling

for confounding variables. The bars show 95% confidence intervals.

Figure 9 shows the estimated marginal effects of each best practice behavior while holding the other

behaviors and confounding variables constant. We see in the figure that leveling up skills produced the

biggest benefit to SAT performance, an estimated 19.5 additional composite SAT points (ES = 0.10).

Comparatively, following practice task recommendations resulted in the smallest benefit for SAT

performance (+4.56 points; ES = .02). Recall that following practice recommendations meant that

students were specifically practicing skills that were recommended to them by OSP, while leveling up

skills was more general, in that it applied to any of the skills they leveled up. Thus, the relatively small

effect of following recommended practice makes sense given that it is essentially a modification of an

already beneficial practice. Moreover, it is important to note that the estimates of these effects take into

account the effects of the other variables, and there is certainly a degree of redundancy there. For

example, following best practice recommendations may also lead to more leveling up, thus making it

difficult to completely tease apart the relative contributions of each. Recall that, overall, best practice

behaviors are moderately correlated. Nevertheless, the important takeaway is that while more time spent

using OSP may be related to SAT performance, the manner in which that time is spent matters

considerably. Engaging in practice, via leveling up skills or completing a full-length practice exam, was

associated with the largest effects, whereas following practice recommendations provided small but

positive benefits.
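
To make the idea of a marginal effect "holding the other behaviors and confounding variables constant" concrete, the sketch below fits an ordinary least squares regression to simulated data. Everything in it is an assumption for illustration: the data are synthetic, the coefficients (20 points for leveling up, 5 for following recommendations) are invented, and the function names are hypothetical. It is not the model specified in Appendix D.

```python
import math
import random


def simulate_usage(n, seed=0):
    """Generate synthetic students; every number here is made up for illustration."""
    rng = random.Random(seed)
    rows, scores = [], []
    for _ in range(n):
        psat = rng.gauss(0.0, 1.0)                           # standardized prior score
        hours = max(0.0, 2.0 + psat + rng.gauss(0.0, 1.5))   # time on the platform
        p_level = 1.0 / (1.0 + math.exp(-(hours - 3.0)))     # more time, more leveling up
        level_up = 1.0 if rng.random() < p_level else 0.0
        # Following recommendations is more common among students who level up,
        # so the two behaviors are correlated.
        follow_rec = 1.0 if rng.random() < 0.2 + 0.4 * level_up else 0.0
        score = (1000.0 + 150.0 * psat + 2.0 * hours
                 + 20.0 * level_up + 5.0 * follow_rec + rng.gauss(0.0, 60.0))
        rows.append([1.0, psat, hours, level_up, follow_rec])  # 1.0 is the intercept
        scores.append(score)
    return rows, scores


def fit_ols(rows, y):
    """Ordinary least squares via the normal equations (X'X) b = X'y."""
    k = len(rows[0])
    # Build the augmented matrix [X'X | X'y].
    a = [[sum(r[i] * r[j] for r in rows) for j in range(k)]
         + [sum(r[i] * yi for r, yi in zip(rows, y))] for i in range(k)]
    # Gauss-Jordan elimination with partial pivoting.
    for col in range(k):
        pivot = max(range(col, k), key=lambda idx: abs(a[idx][col]))
        a[col], a[pivot] = a[pivot], a[col]
        for idx in range(k):
            if idx != col:
                factor = a[idx][col] / a[col][col]
                a[idx] = [x - factor * p for x, p in zip(a[idx], a[col])]
    return [a[i][k] / a[i][i] for i in range(k)]


def raw_gap(rows, y, col):
    """Unadjusted difference in mean score for students with and without a behavior."""
    yes = [yi for r, yi in zip(rows, y) if r[col] == 1.0]
    no = [yi for r, yi in zip(rows, y) if r[col] == 0.0]
    return sum(yes) / len(yes) - sum(no) / len(no)


rows, scores = simulate_usage(20000)
beta = fit_ols(rows, scores)
print("adjusted leveling-up effect:", round(beta[3], 1))
print("adjusted follow-recommendations effect:", round(beta[4], 1))
print("unadjusted leveling-up gap:", round(raw_gap(rows, scores, 3), 1))
```

In this simulation the unadjusted gap for leveling up is far larger than the adjusted coefficient, because students who level up also tend to have higher prior scores and more hours. The adjusted coefficients recover values near the assumed 20 and 5 points, although the correlation between the two behaviors widens their uncertainty, which mirrors the redundancy noted above.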

Figure 10 illustrates the above points. It shows the estimated marginal mean score gains across our

usage groups. Figure 10 repeats the information found in Figure 5 above, where we outline the

relative frequency of best practice behaviors and their combinations within the five usage groups of our

sample (including no OSP practice). However, Figure 10 includes the additional SAT points that we

estimate for these combinations of both time on the platform and completion of one or more best

practices. Visually, this figure shows the increasing benefit of effectively spent time on OSP.

Figure 10. Intersection plot of both sample representation and estimated marginal mean score gain across

usage groups showing different best practice combinations.

Leveling up skills deeper dive

In the first analysis in this subsection, we showed that students who engaged in at least one of the best

practice behaviors showed better outcomes on the SAT than students who did not engage in one of these

behaviors. Additionally, in the previous subsection, we discussed the interconnectedness of the best

practices. One criticism of the best practice behavior, leveling up, is that it may stack the deck in favor of

the best practice group. We used leveling up skills as an indication that students are engaging in the

meaningful study and targeted practice necessary to improve performance. However, the measure itself is

intrinsically a measure of performance—in order to level up, students need to answer problems correctly.

In this sense the variable may simply be picking up on a latent measure of general ability, and therefore it

is not inherently surprising that students in the “best practice” group performed at higher levels.

The critique against leveling up skills is certainly valid. However, there are multiple reasons to suspect it

is not unfairly biasing the best practice groups. Recall that in OSP, the difficulty of problems is scaled to

the level of the learner based on PSAT item performance. Thus, leveling up should theoretically be just as

viable for low-performing students as for high-performing ones. This can be observed in Figure 11, which

shows in detail the descriptive data on leveling up skills across the four usage groups. The arches in the

figure illustrate the movement of a skill from the initial level given to a student—the thicker the line the

more skills were advanced from that level. The major takeaway in this figure is that leveling up happens

in all of the groups. Even learners who spend a small amount of time on OSP and do not use a best

practice are still able to level up in skills. Not surprisingly, the groups with one best practice behavior

tend to level up more skills than the groups with no best practice behaviors. However, when we examine

the table at the bottom of Figure 11, these students are also far more likely to attempt leveling up more

skills. For example, looking only at students with six or more hours of OSP, students with one best

practice behavior attempted a median 32 skills, whereas students with no best practice behaviors

attempted a median 16 skills. Students in the best practice groups do appear to answer problems with

greater accuracy, which would lead to more leveling up. However, it is difficult to determine whether this

is a systematic bias or simply the product of learning via greater overall activity.

Figure 11. Skill level changes on OSP by each usage group. Lines denote the average (mean) number of

skills per user.

2d. Are certain students more likely to engage in best practices?

Some student subgroups spend more time on OSP but are slightly less likely to engage

in best practices.

In this subsection, we examine whether there are group differences in students who are more or less likely

to engage in best practice behaviors. A full breakdown of the models is in Appendix G. As with the

above analyses (Figure 7), we focus on the most highly observed subgroups in each category. Figure 12

breaks down the likelihood of engaging in best practice behaviors and spending six plus hours by

different student characteristics: gender, race, and parental education. It also shows the likelihood of best

practice behavior against the number of hours on OSP and PSAT/NMSQT scores. We see that greater

time on OSP is related to more best practice behaviors and that students who score higher on the PSAT

are more likely to engage in best practice behaviors. However, one important pattern demonstrated in this

figure is that while some groups (e.g., Asian and Black or African American students) are more likely to

spend at least six hours on OSP, that time is not necessarily spent engaging in best practices, which they

are less likely to complete.

Figure 12. Odds of engaging in best practices by subgroups.

This pattern is an important potential difference in how these groups have engaged with OSP and

suggests that further work is needed to understand why some groups are less likely to engage with these

OSP features. However, it is also important to note that the empirical differences in these groups’ best

practice behaviors are small. In Table 2 from section 1a, we show the percentages for target usage broken

down by ethnicity. Although the visualization of the odds ratio above does show a statistically meaningful

difference while holding time constant, the practical difference represents a very small percentage point

gap between these groups.
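
The relationship between an odds ratio and a percentage point gap can be sketched with simple arithmetic. The baseline rate and odds ratio below are hypothetical values chosen for illustration. They are not estimates from this report, and the function names are likewise assumptions.

```python
def odds(p):
    """Convert a probability to odds."""
    return p / (1.0 - p)


def apply_odds_ratio(p_base, odds_ratio):
    """Probability in a comparison group given a baseline probability and an odds ratio."""
    o = odds(p_base) * odds_ratio
    return o / (1.0 + o)


# Hypothetical inputs: 10% of a reference group engages in a best practice,
# and a comparison group has an odds ratio of 0.85 relative to that group.
p_reference = 0.10
p_comparison = apply_odds_ratio(p_reference, 0.85)
gap_points = 100.0 * (p_reference - p_comparison)

print(f"comparison group rate: {100.0 * p_comparison:.1f}%")
print(f"percentage point gap: {gap_points:.1f}")
```

With these hypothetical inputs, an odds ratio that looks clearly different from 1 corresponds to a gap of under two percentage points. When the baseline rate is low, odds ratios tend to look larger than the underlying difference in rates.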

Nevertheless, evaluating the impact of OSP for these student groups, particularly groups that are

traditionally underserved in education, is an important focus of this report. While this signal is small, it

does suggest that some students may not be as likely to utilize the best practice behaviors, which future

work will continue to examine.

Discussion

In accordance with the call for more rigorous research on the effects of test preparation for college

admission exams (Briggs, 2009), this report provides a comprehensive description of students who

prepared for the revised SAT using a free online practice tool: OSP. Nearly 25% of the class of 2019 SAT

test takers linked their Khan Academy and College Board accounts, and were demographically similar to

the population of students who took a PSAT/NMSQT and the subsequent SAT. Students spent relatively

little time on the platform, with 38% of students spending at least 1 hour or doing 50+ problems and only

10% practicing for six or more hours, consistent with other studies finding low rates of completion and

high rates of dropoff for large, free online learning platforms (Gütl, Rizzardini, Chang & Morales, 2014;

Kizilcec & Halawa, 2015). When students did practice, they did most of their practice in the two months

leading up to taking the SAT.

Previous studies have associated the amount of time spent preparing with impacts on scores. One analysis

found that each additional hour of tutoring was associated with an increase of 2.34 SAT points

(Appelrouth et al., 2015) and another analysis found that 6 to 8 hours of OSP usage was associated with

an additional 30-point score increase from PSAT/NMSQT to students' last SAT (College Board, 2018b).

The current analysis also shows a positive association between hours on OSP and SAT performance for

composite SAT as well as math and ERW sections, although the majority of students spent 3 hours or less

on OSP. Because time spent on OSP is only a high-level description of use, we also examined particular

behaviors that were associated with better SAT outcomes.

This analysis demonstrates that practicing on OSP for at least six hours, along with one of the best

practices, is associated with 39 additional points on the SAT composite score, an effect size of 0.20. This

positive effect of test preparation is similar in magnitude to effects reported in studies of coaching classes and

tutoring, including other analyses of coaching associated with the SAT

(Briggs, 2009; Montgomery & Lilly, 2012). This difference may have practical significance

for colleges that use cut scores to make admissions decisions, and other researchers have found that even

small differences in SAT scores can have an impact on college options, particularly for lower-scoring

students (Briggs, 2009; Goodman et al., 2017).
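
As a rough consistency check on how the point estimates relate to the reported effect sizes, the sketch below assumes that each effect size is the point difference divided by a standard deviation of composite SAT scores, and that the effect sizes are rounded to two decimal places. Both are assumptions made for illustration, since this section does not state the standard deviation or the rounding rule, and the function name is hypothetical.

```python
def implied_sd_range(points, effect_size, decimals=2):
    """Range of SDs consistent with a point estimate and a rounded effect size.

    Assumes effect_size = points / SD, with effect_size rounded to `decimals` places.
    """
    half = 0.5 * 10 ** (-decimals)
    return points / (effect_size + half), points / (effect_size - half)


# (points, reported effect size) pairs quoted in this report.
reported = [(39.0, 0.20), (19.5, 0.10), (4.56, 0.02)]

ranges = [implied_sd_range(p, es) for p, es in reported]
# An SD is consistent with all three pairs if it lies inside every range.
common_low = max(low for low, _ in ranges)
common_high = min(high for _, high in ranges)

for (p, es), (low, high) in zip(reported, ranges):
    print(f"{p} points, ES {es}: SD between {low:.1f} and {high:.1f}")
print(f"SD consistent with all three: {common_low:.1f} to {common_high:.1f}")
```

Under these assumptions, the three reported pairs are mutually consistent with a single standard deviation of roughly 190 to 200 composite points.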

While there were no substantive differences by race or parental education in who used OSP, there were

differences in the likelihood to use best practices. Asian students and Black students were more likely to

practice for at least six hours, but somewhat less likely to do any of the best practices. This finding has

direct product improvement implications. We are refreshing OSP to create an updated experience. As part