The Little Book of Semaphores

Allen B. Downey


First Edition

Copyright (C) 2003 Allen B. Downey

Permission is granted to copy, distribute and/or modify this document under the terms of the GNU Free Documentation License, Version 1.1 or any later version published by the Free Software Foundation; this book contains no Invariant Sections, no Front-Cover Texts, and no Back-Cover Texts.

You can obtain a copy of the GNU Free Documentation License from

www.gnu.org or by writing to the Free Software Foundation, Inc., 59 Temple

Place - Suite 330, Boston, MA 02111-1307, USA.

The original form of this book is LaTeX source code. Compiling this LaTeX

source has the effect of generating a device-independent representation of a

book, which can be converted to other formats and printed.

The LaTeX source for this book, and more information about the Open

Source Textbook project, is available from allendowney.com/semaphores.

This book was typeset by the author using latex, dvips and ps2pdf, among

other free, open-source programs.

Contents

1 Introduction 1

1.1 Synchronization . . . . . . . . . . . . . . . . . . . . . . . . . . . . 1

1.2 Execution model . . . . . . . . . . . . . . . . . . . . . . . . . . . 1

1.3 Serialization with messages . . . . . . . . . . . . . . . . . . . . . 3

1.4 Non-determinism . . . . . . . . . . . . . . . . . . . . . . . . . . . 3

1.5 Shared variables . . . . . . . . . . . . . . . . . . . . . . . . . . . 4

1.5.1 Concurrent writes . . . . . . . . . . . . . . . . . . . . . . 4

1.5.2 Concurrent updates . . . . . . . . . . . . . . . . . . . . . 5

1.5.3 Mutual exclusion with messages . . . . . . . . . . . . . . 6

2 Semaphores 7

2.1 Definition . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 7

2.2 Syntax . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 8

2.3 Why semaphores? . . . . . . . . . . . . . . . . . . . . . . . . . . 9

3 Basic synchronization patterns 11

3.1 Signaling . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 11

3.2 Rendezvous . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 12

3.2.1 Rendezvous hint . . . . . . . . . . . . . . . . . . . . . . . 13

3.2.2 Rendezvous solution . . . . . . . . . . . . . . . . . . . . . 15

3.2.3 Deadlock #1 . . . . . . . . . . . . . . . . . . . . . . . . . 15

3.3 Mutex . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 16

3.3.1 Mutual exclusion hint . . . . . . . . . . . . . . . . . . . . 17

3.3.2 Mutual exclusion solution . . . . . . . . . . . . . . . . . . 19

3.4 Multiplex . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 19

3.4.1 Multiplex solution . . . . . . . . . . . . . . . . . . . . . . 21

3.5 Barrier . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 21

3.5.1 Barrier hint . . . . . . . . . . . . . . . . . . . . . . . . . . 23

3.5.2 Barrier non-solution . . . . . . . . . . . . . . . . . . . . . 25

3.5.3 Deadlock #2 . . . . . . . . . . . . . . . . . . . . . . . . . 27

3.5.4 Barrier solution . . . . . . . . . . . . . . . . . . . . . . . . 29

3.5.5 Deadlock #3 . . . . . . . . . . . . . . . . . . . . . . . . . 31

3.6 Reusable barrier . . . . . . . . . . . . . . . . . . . . . . . . . . . 31

3.6.1 Reusable barrier hint . . . . . . . . . . . . . . . . . . . . . 33

ii CONTENTS

3.6.2 Reusable barrier non-solution #1 . . . . . . . . . . . . . . 35

3.6.3 Reusable barrier problem #1 . . . . . . . . . . . . . . . . 37

3.6.4 Reusable barrier non-solution #2 . . . . . . . . . . . . . . 39

3.6.5 Reusable barrier hint . . . . . . . . . . . . . . . . . . . . . 41

3.6.6 Reusable barrier solution . . . . . . . . . . . . . . . . . . 43

3.6.7 Barrier objects . . . . . . . . . . . . . . . . . . . . . . . . 44

3.6.8 Kingmaker pattern . . . . . . . . . . . . . . . . . . . . . . 44

3.7 FIFO queue . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 47

3.7.1 FIFO queue hint . . . . . . . . . . . . . . . . . . . . . . . 49

3.7.2 FIFO queue solution . . . . . . . . . . . . . . . . . . . . . 51

4 Classical synchronization problems 53

4.1 Producer-consumer problem . . . . . . . . . . . . . . . . . . . . . 53

4.1.1 Producer-consumer hint . . . . . . . . . . . . . . . . . . . 55

4.1.2 Producer-consumer solution . . . . . . . . . . . . . . . . . 57

4.1.3 Deadlock #4 . . . . . . . . . . . . . . . . . . . . . . . . . 59

4.1.4 Producer-consumer with a finite buffer . . . . . . . . . . . 59

4.1.5 Finite buffer producer-consumer hint . . . . . . . . . . . . 61

4.1.6 Finite buffer producer-consumer solution . . . . . . . . . 63

4.2 Readers-writers problem . . . . . . . . . . . . . . . . . . . . . . . 63

4.2.1 Readers-writers hint . . . . . . . . . . . . . . . . . . . . . 65

4.2.2 Readers-writers solution . . . . . . . . . . . . . . . . . . . 67

4.2.3 Starvation . . . . . . . . . . . . . . . . . . . . . . . . . . . 69

4.2.4 No-starve readers-writers hint . . . . . . . . . . . . . . . . 71

4.2.5 No-starve readers-writers solution . . . . . . . . . . . . . 73

4.2.6 Writer-priority readers-writers hint . . . . . . . . . . . . . 75

4.2.7 Writer-priority readers-writers solution . . . . . . . . . . . 77

4.3 No-starve mutex . . . . . . . . . . . . . . . . . . . . . . . . . . . 79

4.3.1 No-starve mutex hint . . . . . . . . . . . . . . . . . . . . 81

4.3.2 No-starve mutex solution . . . . . . . . . . . . . . . . . . 83

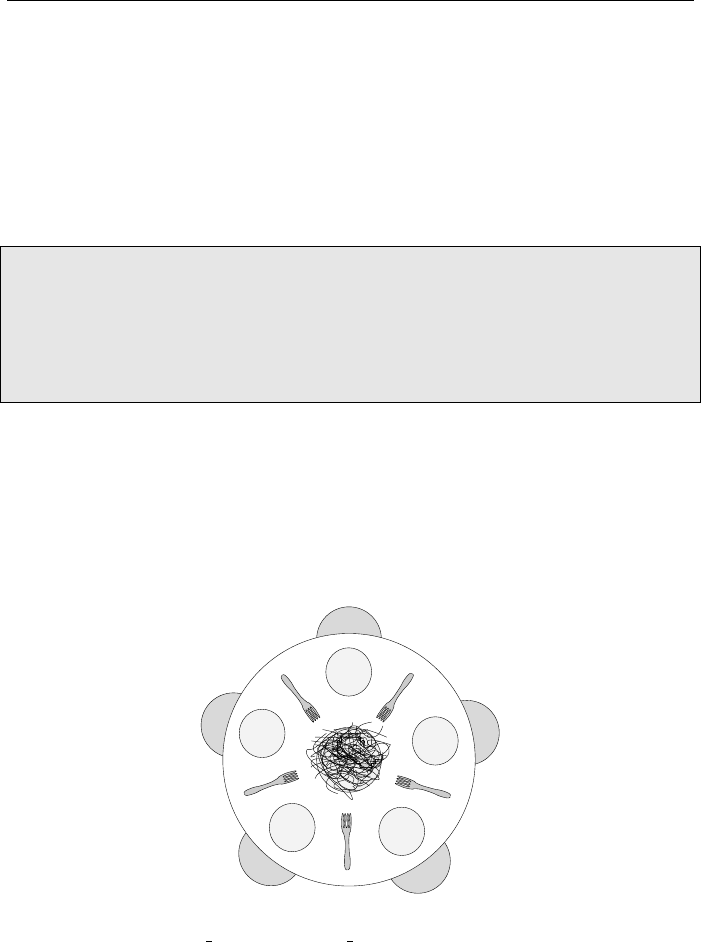

4.4 Dining philosophers . . . . . . . . . . . . . . . . . . . . . . . . . 85

4.4.1 Deadlock #5 . . . . . . . . . . . . . . . . . . . . . . . . . 87

4.4.2 Dining philosophers hint #1 . . . . . . . . . . . . . . . . . 89

4.4.3 Dining philosophers solution #1 . . . . . . . . . . . . . . 91

4.4.4 Dining philosopher’s solution #2 . . . . . . . . . . . . . . 93

4.4.5 Tanenbaum’s solution . . . . . . . . . . . . . . . . . . . . 95

4.4.6 Starving Tanenbaums . . . . . . . . . . . . . . . . . . . . 97

4.5 Cigarette smokers’ problem . . . . . . . . . . . . . . . . . . . . . 99

4.5.1 Deadlock #6 . . . . . . . . . . . . . . . . . . . . . . . . . 103

4.5.2 Smoker problem hint . . . . . . . . . . . . . . . . . . . . . 105

4.5.3 Smoker problem solution . . . . . . . . . . . . . . . . . . 107

4.5.4 Generalized Smokers’ Problem . . . . . . . . . . . . . . . 107

CONTENTS iii

5 Not-so-classical synchronization problems 109

5.1 Building H

2

O . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 109

5.1.1 H

2

O hint . . . . . . . . . . . . . . . . . . . . . . . . . . . 111

5.1.2 H

2

O solution . . . . . . . . . . . . . . . . . . . . . . . . . 113

5.2 River crossing problem . . . . . . . . . . . . . . . . . . . . . . . . 114

5.2.1 River crossing hint . . . . . . . . . . . . . . . . . . . . . . 115

5.2.2 River crossing solution . . . . . . . . . . . . . . . . . . . . 117

5.3 The unisex bathroom problem . . . . . . . . . . . . . . . . . . . . 118

5.3.1 Unisex bathroom hint . . . . . . . . . . . . . . . . . . . . 119

5.3.2 Unisex bathroom solution . . . . . . . . . . . . . . . . . . 121

5.3.3 No-starve unisex bathroom problem . . . . . . . . . . . . 123

5.3.4 No-starve unisex bathroom solution . . . . . . . . . . . . 125

5.3.5 Efficient no-starve unisex bathroom problem . . . . . . . 125

5.3.6 Efficient no-starve unisex bathroom hint . . . . . . . . . 127

5.3.7 Efficient unisex bathroom solution . . . . . . . . . . . . . 129

5.4 Baboon crossing problem . . . . . . . . . . . . . . . . . . . . . . 130

5.5 The search-insert-delete problem . . . . . . . . . . . . . . . . . . 131

5.5.1 Search-Insert-Delete hint . . . . . . . . . . . . . . . . . . 133

5.5.2 Search-Insert-Delete solution . . . . . . . . . . . . . . . . 135

5.6 The dining savages problem . . . . . . . . . . . . . . . . . . . . . 137

5.6.1 Dining Savages hint . . . . . . . . . . . . . . . . . . . . . 139

5.6.2 Dining Savages solution . . . . . . . . . . . . . . . . . . . 141

5.7 The barbershop problem . . . . . . . . . . . . . . . . . . . . . . . 143

5.7.1 Barbershop hint . . . . . . . . . . . . . . . . . . . . . . . 145

5.7.2 Barbershop solution . . . . . . . . . . . . . . . . . . . . . 147

5.8 Hilzer’s Barbershop problem . . . . . . . . . . . . . . . . . . . . . 149

5.8.1 Hilzer’s barbershop hint . . . . . . . . . . . . . . . . . . . 150

5.8.2 Hilzer’s barbershop solution . . . . . . . . . . . . . . . . . 150

5.9 The room party problem . . . . . . . . . . . . . . . . . . . . . . . 152

5.9.1 Room party hint . . . . . . . . . . . . . . . . . . . . . . . 153

5.9.2 Room party solution . . . . . . . . . . . . . . . . . . . . . 155

5.10 The Santa Claus problem . . . . . . . . . . . . . . . . . . . . . . 157

5.10.1 Santa problem hint . . . . . . . . . . . . . . . . . . . . . . 159

5.10.2 Santa problem solution . . . . . . . . . . . . . . . . . . . 161

5.11 The roller coaster problem . . . . . . . . . . . . . . . . . . . . . . 163

5.11.1 Roller Coaster hint . . . . . . . . . . . . . . . . . . . . . . 165

5.11.2 Roller Coaster solution . . . . . . . . . . . . . . . . . . . . 167

5.11.3 Multi-car Roller Coaster hint . . . . . . . . . . . . . . . . 169

5.11.4 Multi-car Roller Coaster solution . . . . . . . . . . . . . . 171

5.12 The Bus problem . . . . . . . . . . . . . . . . . . . . . . . . . . . 173

5.12.1 Bus problem hint . . . . . . . . . . . . . . . . . . . . . . . 175

5.12.2 Bus problem solution . . . . . . . . . . . . . . . . . . . . 177

iv CONTENTS

Chapter 1

Introduction

1.1 Synchronization

In common use, “synchronization” means making two things happen at the

same time. In computer systems, synchronization is a little more general; it

refers to relationships among events—any number of events, and any kind of

relationship (before, during, after).

Computer programmers are often concerned with synchronization constraints, which are requirements pertaining to the order of events. Examples include:

Serialization: Event A must happen before Event B.

Mutual exclusion: Events A and B must not happen at the same time.

In real life we often check and enforce synchronization constraints using a

clock. How do we know if A happened before B? If we know what time both

events occurred, we can just compare the times.

In computer systems, we often need to satisfy synchronization constraints without the benefit of a clock, either because there is no universal clock, or because we don’t know with fine enough resolution when events occur.

That’s what this book is about: software techniques for enforcing synchronization constraints.

1.2 Execution model

In order to understand software synchronization, you have to have a model of

how computer programs run. In the simplest model, computers execute one

instruction after another in sequence. In this model, synchronization is trivial;

we can tell the order of events by looking at the program. If Statement A comes

before Statement B, it will be executed first.


There are two ways things get more complicated. One possibility is that

the computer is parallel, meaning that it has multiple processors running at the

same time. In that case it is not easy to know if a statement on one processor

is executed before a statement on another.

Another possibility is that a single processor is running multiple threads of

execution. A thread is a sequence of instructions that execute sequentially. If

there are multiple threads, then the processor can work on one for a while, then

switch to another, and so on.

In general the programmer has no control over when each thread runs; the

operating system (specifically, the scheduler) makes those decisions. As a result,

again, the programmer can’t tell when statements in different threads will be

executed.

For purposes of synchronization, there is no difference between the parallel

model and the multithread model. The issue is the same—within one processor

(or one thread) we know the order of execution, but between processors (or

threads) it is impossible to tell.

A real world example might make this clearer. Imagine that you and your

friend Bob live in different cities, and one day, around dinner time, you start to

wonder who ate lunch first that day, you or Bob. How would you find out?

Obviously you could call him and ask what time he ate lunch. But what if

you started lunch at 11:59 by your clock and Bob started lunch at 12:01 by his

clock? Can you be sure who started first? Unless you are both very careful to

keep accurate clocks, you can’t.

Computer systems face the same problem because, even though their clocks

are usually accurate, there is always a limit to their precision. In addition,

most of the time the computer does not keep track of what time things happen.

There are just too many things happening, too fast, to record the exact time of

everything.

Puzzle: Assuming that Bob is willing to follow simple instructions, is there

any way you can guarantee that tomorrow you will eat lunch before Bob?


1.3 Serialization with messages

One solution is to instruct Bob not to eat lunch until you call. Then, make

sure you don’t call until after lunch. This approach may seem trivial, but the

underlying idea, message passing, is a real solution for many synchronization

problems.

At the risk of belaboring the obvious, consider this timeline.

You                      Bob
a1  Eat breakfast        b1  Eat breakfast
a2  Work                 b2  Wait for a call
a3  Eat lunch            b3  Eat lunch
a4  Call Bob

The first column is a list of actions you perform; in other words, your thread of execution. The second column is Bob’s thread of execution. Within a thread, we can always tell in what order things happen. We can denote the order of events

a1 < a2 < a3 < a4

b1 < b2 < b3

where the relation a1 < a2 means that a1 happened before a2.

In general, though, there is no way to compare events from different threads;

for example, we have no idea who ate breakfast first (is a1 < b1?).

But with message passing (the phone call) we can tell who ate lunch first (a3 < b3). Assuming that Bob has no other friends, he won’t get a call until you call, so b2 > a4. Combining all the relations, we get

b3 > b2 > a4 > a3

which proves that you had lunch before Bob.
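The phone-call protocol can be sketched in Python, assuming each person is a thread and the phone call is a message placed on a `queue.Queue`. The function names and the `log` list are illustrative, not part of the original example. Bob's `get()` blocks until your message arrives, so b3 cannot happen before a4 however the scheduler interleaves the two threads.

```python
import queue
import threading

phone = queue.Queue()      # the phone line: Bob's get() blocks until you call
log = []                   # events in the order they actually occur
log_lock = threading.Lock()

def record(event):
    with log_lock:
        log.append(event)

def you():
    record("a1 eat breakfast")
    record("a2 work")
    record("a3 eat lunch")
    record("a4 call Bob")  # logged before the call, so it precedes b3 in the log
    phone.put("call")

def bob():
    record("b1 eat breakfast")
    phone.get()            # b2: wait for a call
    record("b3 eat lunch")

threads = [threading.Thread(target=bob), threading.Thread(target=you)]
for t in threads:
    t.start()
for t in threads:
    t.join()

print(log)
# Breakfast order may vary from run to run; lunch order never does.
assert log.index("a3 eat lunch") < log.index("b3 eat lunch")
```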

In this case, we would say that you and Bob ate lunch sequentially, because

we know the order of events, and you ate breakfast concurrently, because we

don’t.

When we talk about concurrent events, it is tempting to say that they happen

at the same time, or simultaneously. As a shorthand, that’s fine, as long as you

remember the strict definition:

Two events are concurrent if we cannot tell by looking at the program

which will happen first.

Sometimes we can tell, after the program runs, which happened first, but

often not, and even if we can, there is no guarantee that we will get the same

result the next time.

1.4 Non-determinism

Concurrent programs are often non-deterministic, which means it is not possible to tell, by looking at the program, what will happen when it executes.


Here is a very simple example of a non-deterministic program:

Thread A

a1 print "yes"

Thread B

b1 print "no"

Because the two threads run concurrently, the order of execution depends

on the scheduler. During any given run of this program, the output might be

“yes no” or “no yes”.
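The same program as a Python sketch, assuming standard `threading` threads stand in for Threads A and B and that appending to a shared list stands in for printing. On CPython you will probably see "yes no" almost every time, because Thread A usually finishes before Thread B starts; that is exactly the kind of regularity that hides bugs, since nothing in the program guarantees it.

```python
import threading

output = []                 # shared; list.append stands in for print

def thread_a():
    output.append("yes")    # a1

def thread_b():
    output.append("no")     # b1

a = threading.Thread(target=thread_a)
b = threading.Thread(target=thread_b)
a.start()
b.start()
a.join()
b.join()

# Either "yes no" or "no yes"; the program does not say which.
print(" ".join(output))
```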

Non-determinism is one of the things that makes concurrent programs hard

to debug. A program might work correctly 1000 times in a row, and then crash

on the 1001st run, depending on the particular decisions of the scheduler.

These kinds of bugs are almost impossible to find by testing; they can only

be avoided by careful programming.

1.5 Shared variables

Most of the time, most variables in most threads are local, meaning that they

belong to a single thread and no other threads can access them. As long as

that’s true, there tend to be few synchronization problems, because threads

just don’t interact.

But usually some variables are shared among two or more threads; this

is one of the ways threads interact with each other. For example, one way

to communicate information between threads is for one thread to read a value

written by another thread.

If the threads are unsynchronized, then we cannot tell by looking at the program whether the reader will see the value the writer writes or an old value that was already there. Thus many applications enforce the constraint that the reader should not read until after the writer writes. This is exactly the serialization problem in Section 1.3.

Other ways that threads interact are concurrent writes (two or more writers) and concurrent updates (two or more threads performing a read followed by a write). The next two sections deal with these interactions. The other possible use of a shared variable, concurrent reads, does not generally create a synchronization problem.

1.5.1 Concurrent writes

In the following example, x is a shared variable accessed by two writers.

Thread A

a1 x = 5

a2 print x

Thread B

b1 x = 7

What value of x gets printed? What is the final value of x when all these

statements have executed? It depends on the order in which the statements are

executed, called the execution path. One possible path is a1 < a2 < b1, in

which case the output of the program is 5, but the final value is 7.


Puzzle: What path yields output 5 and final value 5?

Puzzle: What path yields output 7 and final value 7?

Puzzle: Is there a path that yields output 7 and final value 5? Can you

prove it?

Answering questions like these is an important part of concurrent programming: What paths are possible and what are the possible effects? Can we prove that a given (desirable) effect is necessary or that an (undesirable) effect is impossible?
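For programs this small, one way to answer such questions is to enumerate every execution path mechanically. The Python sketch below does that for the concurrent-writes example, assuming each statement executes atomically (the next section shows why that assumption is not safe in general). The helpers `interleavings` and `run` are hypothetical names for illustration; the printout can be used to check your answers to the puzzles above.

```python
def interleavings(xs, ys):
    """Yield every merge of xs and ys that preserves each thread's own order."""
    if not xs or not ys:
        yield list(xs) + list(ys)
        return
    for rest in interleavings(xs[1:], ys):
        yield [xs[0]] + rest
    for rest in interleavings(xs, ys[1:]):
        yield [ys[0]] + rest

def run(path):
    """Execute one path; return (value printed by a2, final value of x)."""
    x = None
    printed = None
    for step in path:
        if step == "a1":
            x = 5
        elif step == "a2":
            printed = x
        elif step == "b1":
            x = 7
    return printed, x

results = {" < ".join(p): run(p) for p in interleavings(["a1", "a2"], ["b1"])}
for path, (printed, final) in results.items():
    print(f"{path}: output {printed}, final value {final}")
```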

1.5.2 Concurrent updates

An update is an operation that reads the value of a variable, computes a new

value based on the old value, and writes the new value. The most common kind

of update is an increment, in which the new value is the old value plus one. The

following example shows a shared variable, count, being updated concurrently

by two threads.

Thread A

a1 count = count + 1

Thread B

b1 count = count + 1

At first glance, it is not obvious that there is a synchronization problem here.

There are only two execution paths, and they yield the same result.

The problem is that these operations are translated into machine language before execution, and in machine language the update takes two steps, a read and a write. The problem is more obvious if we rewrite the code with a temporary variable, temp.

Thread A

a1 temp = count

a2 count = temp + 1

Thread B

b1 temp = count

b2 count = temp + 1

Now consider the following execution path

a1 < b1 < b2 < a2

Assuming that the initial value of count is 0, what is its final value? Because both threads read the same initial value, they write the same value (1). The variable is only incremented once, which is probably not what the programmer had in mind.
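The lost update can be replayed step by step. This Python sketch, built around a hypothetical `run` helper, executes a given path through the two-step version of the code, giving each thread its own `temp`, and assumes each individual read or write is atomic.

```python
def run(path):
    """Replay a path of steps a1, a2, b1, b2; return the final count."""
    count = 0
    temp = {"a": None, "b": None}   # each thread has its own temp
    for step in path:
        thread, line = step[0], step[1]
        if line == "1":             # a1 / b1: temp = count
            temp[thread] = count
        else:                       # a2 / b2: count = temp + 1
            count = temp[thread] + 1
    return count

print(run(["a1", "a2", "b1", "b2"]))  # one thread after the other: 2
print(run(["a1", "b1", "b2", "a2"]))  # the path above: 1, an update is lost
```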

This kind of problem is subtle because it is not always possible to tell, looking at a high-level program, which operations are performed in a single step and which can be interrupted. In fact, some computers provide an increment instruction that is implemented in hardware and cannot be interrupted. An operation that cannot be interrupted is said to be atomic.
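As an illustration in one concrete environment: in CPython, a statement like `count = count + 1` on a global variable compiles to separate load and store bytecode instructions, and the interpreter can switch threads between instructions. The sketch uses the standard `dis` module; exact opcode names differ across Python versions (for example, the addition is `BINARY_ADD` before 3.11 and `BINARY_OP` afterward), so it only checks that the read and the write are separate instructions, with the read first.

```python
import dis

count = 0

def increment():
    global count
    count = count + 1          # one line of source...

ops = [ins.opname for ins in dis.get_instructions(increment)]
print(ops)                     # ...but several bytecode instructions

read = ops.index("LOAD_GLOBAL")
write = ops.index("STORE_GLOBAL")
print(f"read at instruction {read}, write at instruction {write}")
```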

So how can we write concurrent programs if we don’t know which operations are atomic? One possibility is to collect specific information about each operation on each hardware platform. The drawbacks of this approach are obvious.


The most common alternative is to make the conservative assumption that no update or write is atomic, and to use synchronization constraints to control concurrent access to shared variables.

The most common constraint is mutual exclusion, or mutex, which I mentioned in Section 1.1. Mutual exclusion guarantees that only one thread accesses a shared variable at a time, eliminating the kinds of synchronization errors in this section.

1.5.3 Mutual exclusion with messages

Like serialization, mutual exclusion can be implemented using message passing.

For example, imagine that you and Bob operate a nuclear reactor that you

monitor from remote stations. Most of the time, both of you are watching for

warning lights, but you are both allowed to take a break for lunch. It doesn’t

matter who eats lunch first, but it is very important that you don’t eat lunch

at the same time, leaving the reactor unwatched!

Puzzle: Figure out a system of message passing (phone calls) that enforces these constraints. Assume there are no clocks, and you cannot predict when lunch will start or how long it will last. What is the minimum number of messages required?

Chapter 2

Semaphores

In real life a semaphore is a system of signals used to communicate visually,

usually with flags, lights, or some other mechanism. In software, a semaphore is

a data structure that is useful for solving a variety of synchronization problems.

Semaphores were invented by Edsger Dijkstra, a famously eccentric computer scientist. Some of the details have changed since the original design, but the basic idea is the same.

2.1 Definition

A semaphore is like an integer, with three differences:

1. When you create the semaphore, you can initialize its value to any integer,

but after that the only operations you are allowed to perform are increment

(increase by one) and decrement (decrease by one). You cannot read the

current value of the semaphore.

2. When a thread decrements the semaphore, if the result is negative, the

thread blocks itself and cannot continue until another thread increments

the semaphore.

3. If the value of the semaphore is negative and a thread increments it, one

of the threads that is waiting gets woken up.

To say that a thread blocks itself (or simply “blocks”) is to say that it

notifies the scheduler that it cannot proceed. The scheduler will prevent the

thread from running until it is notified otherwise. Since the blocked thread

cannot run, some other thread has to unblock it. In the tradition of mixed

computer science metaphors, unblocking is often called “waking”.
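To make the definition concrete, here is a minimal Python sketch of a semaphore whose value may go negative, built on `threading.Condition`. The class and its `_wakeups` counter are illustrative assumptions, not the book's implementation, and Python's own `threading.Semaphore` behaves differently (its value never goes below zero). As the definition allows, the sketch does not promise which waiting thread is woken.

```python
import threading

class Semaphore:
    """Sketch of the semaphore defined above; its value may go negative."""

    def __init__(self, value=0):
        self._value = value        # if negative, its magnitude counts waiters
        self._wakeups = 0          # wakeups granted by signal(), not yet used
        self._cond = threading.Condition()

    def wait(self):
        with self._cond:
            self._value -= 1
            if self._value < 0:
                # Block until some signal() grants a wakeup.
                while self._wakeups == 0:
                    self._cond.wait()
                self._wakeups -= 1

    def signal(self):
        with self._cond:
            self._value += 1
            if self._value <= 0:
                # The value was negative, so a thread is waiting: wake one.
                self._wakeups += 1
                self._cond.notify()

sem = Semaphore(0)
woken = []

def waiter(name):
    sem.wait()                 # blocks if the decrement makes the value negative
    woken.append(name)

threads = [threading.Thread(target=waiter, args=(n,)) for n in ("t1", "t2")]
for t in threads:
    t.start()
sem.signal()
sem.signal()
for t in threads:
    t.join()
print(sorted(woken))           # ['t1', 't2']
```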

That’s all there is to the definition, but there are some consequences of the

definition you might want to think about.


• In general, there is no way to know before a thread decrements a

semaphore whether it will block or not (in specific cases you might be

able to prove that it will or will not).

• After a thread increments a semaphore and another thread gets woken

up, both threads continue running concurrently. There is no way to know

which thread, if either, will continue immediately.

Finally, you might want to think about what the value of the semaphore

means. If the value is positive, then it represents the number of threads that

can decrement without blocking. If it is negative, then it represents the number

of threads that have blocked and are waiting. If the value is zero, it means there

are no threads waiting, but if a thread tries to decrement, it will block.

2.2 Syntax

In most programming environments, an implementation of semaphores is available as part of the programming language or the operating system. Different implementations sometimes offer slightly different capabilities, and usually require different syntax.

In this book I will use a simple pseudo-language to demonstrate how

semaphores work. The syntax for creating a new semaphore and initializing

it is

Listing 2.1: Semaphore initialization syntax

Semaphore fred = 1

When Semaphore appears with a capital letter it indicates a type of variable.

In this case, fred is the name of the new semaphore and 1 is its initial value.

The semaphore operations go by different names in different environments.

The most common alternatives are

Listing 2.2: Semaphore operations

fred.increment()
fred.decrement()

and

Listing 2.3: Semaphore operations

fred.signal()
fred.wait()

and

Listing 2.4: Semaphore operations

fred.V()
fred.P()


It may be surprising that there are so many names, but there is a reason for the plurality. increment and decrement describe what the operations do. signal and wait describe what they are often used for. And V and P were the original names proposed by Dijkstra, who wisely realized that a meaningless name is better than a misleading name.[1]

I consider the other pairs misleading because increment and decrement

neglect to mention the possibility of blocking and waking, and semaphores are

often used in ways that have nothing to do with signal and wait.

If you insist on meaningful names, then I would suggest these:

Listing 2.5: Semaphore operations

fred.increment_and_wake_a_waiting_process_if_any()
fred.decrement_and_block_if_the_result_is_negative()

I don’t think the world is likely to embrace either of these names soon. In

the meantime, I choose (more or less arbitrarily) to use signal and wait.
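For comparison, Python's standard library spells these operations differently again: `threading.Semaphore` uses `acquire()` for wait (P) and `release()` for signal (V). It also differs from the definition in Section 2.1 in that its internal counter never goes below zero, and a negative initial value raises `ValueError`. A minimal sketch of the correspondence:

```python
import threading

fred = threading.Semaphore(1)      # Semaphore fred = 1

fred.acquire()                     # fred.wait()   (P)
fred.release()                     # fred.signal() (V)

# acquire(blocking=False) returns False instead of blocking.
first = fred.acquire(blocking=False)    # True: the value was 1
second = fred.acquire(blocking=False)   # False: waiting would have blocked
fred.release()

try:
    threading.Semaphore(-1)
    negative_allowed = True
except ValueError:
    negative_allowed = False
print(first, second, negative_allowed)  # True False False
```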

2.3 Why semaphores?

Looking at the definition of semaphores, it is not at all obvious why they are useful. It’s true that we don’t need semaphores to solve synchronization problems, but there are some advantages to using them:

• Semaphores impose deliberate constraints that help programmers avoid

errors.

• Solutions using semaphores are often clean and organized, making it easy

to demonstrate their correctness.

• Semaphores can be implemented efficiently on many systems, so solutions

that use semaphores are portable and usually efficient.

[1] Actually, V and P aren’t completely meaningless to people who speak Dutch.


Chapter 3

Basic synchronization patterns

This chapter presents a series of basic synchronization problems and shows ways

of using semaphores to solve them. These problems include serialization and

mutual exclusion, which we have already seen, along with others.

3.1 Signaling

Possibly the simplest use for a semaphore is signaling, which means that one

thread sends a signal to another thread to indicate that something has happened.

Signaling makes it possible to guarantee that a section of code in one thread

will run before a section of code in another thread; in other words, it solves the

serialization problem.

Assume that we have a semaphore named sem with initial value 0, and that

Threads A and B have shared access to it.

Thread A
statement a1
sem.signal()

Thread B
sem.wait()
statement b1

The word statement represents an arbitrary program statement. To make

the example concrete, imagine that a1 reads a line from a file, and b1 displays

the line on the screen. The semaphore in this program guarantees that Thread

A has completed a1 before Thread B begins b1.

Here’s how it works: if Thread B gets to the wait statement first, it will find the initial value, zero, and it will block. Then when Thread A signals, Thread B proceeds.

Similarly, if Thread A gets to the signal first, then the value of the semaphore will be incremented, and when Thread B gets to the wait, it will proceed immediately. Either way, the order of a1 and b1 is guaranteed.
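A Python sketch of the signaling pattern, assuming `threading.Semaphore(0)` plays the role of sem (`release()` is signal, `acquire()` is wait). The short sleep in Thread A is an arbitrary choice that makes it likely Thread B reaches its wait first; the order of a1 and b1 is the same either way.

```python
import threading
import time

sem = threading.Semaphore(0)   # initial value 0
events = []

def thread_a():
    time.sleep(0.01)           # make it likely that B reaches the wait first
    events.append("a1")        # statement a1
    sem.release()              # sem.signal()

def thread_b():
    sem.acquire()              # sem.wait(): blocks until A signals
    events.append("b1")        # statement b1

tb = threading.Thread(target=thread_b)
ta = threading.Thread(target=thread_a)
tb.start()
ta.start()
ta.join()
tb.join()
print(events)                  # ['a1', 'b1']
```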


This use of semaphores is the basis of the names signal and wait, and

in this case the names are conveniently mnemonic. Unfortunately, we will see

other cases where the names are less helpful.

Speaking of meaningful names, sem isn’t one. When possible, it is a good

idea to give a semaphore a name that indicates what it represents. In this case

a name like a1Done might be good, where a1Done = 0 means that a1 has not

executed and a1Done = 1 means it has.

3.2 Rendezvous

Puzzle: Generalize the signal pattern so that it works both ways. Thread A has

to wait for Thread B and vice versa. In other words, given this code

Thread A
statement a1
statement a2

Thread B
statement b1
statement b2

we want to guarantee that a1 happens before b2 and b1 happens before a2. In

writing your solution, be sure to specify the names and initial values of your

semaphores (little hint there).

Your solution should not enforce too many constraints. For example, we

don’t care about the order of a1 and b1. In your solution, either order should

be possible.

This synchronization problem has a name; it’s a rendezvous. The idea is

that two threads rendezvous at a point of execution, and neither is allowed to

proceed until both have arrived.


3.2.1 Rendezvous hint

The chances are good that you were able to figure out a solution, but if not,

here is a hint. Create two semaphores, named aArrived and bArrived, and

initialize them both to zero.

As the names suggest, aArrived indicates whether Thread A has arrived at

the rendezvous, and bArrived likewise.


3.2.2 Rendezvous solution

Here is my solution, based on the previous hint:

Thread A
statement a1
aArrived.signal()
bArrived.wait()
statement a2

Thread B
statement b1
bArrived.signal()
aArrived.wait()
statement b2
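The rendezvous solution translates directly into Python, assuming `threading.Semaphore(0)` for aArrived and bArrived. The random delays (an arbitrary choice) vary the interleaving from run to run; the assertions check only the two constraints from the puzzle, since the order of a1 and b1 is deliberately left open.

```python
import random
import threading
import time

a_arrived = threading.Semaphore(0)
b_arrived = threading.Semaphore(0)
log = []
log_lock = threading.Lock()

def step(name):
    time.sleep(random.random() / 100)   # random delay to vary the interleaving
    with log_lock:
        log.append(name)

def thread_a():
    step("a1")
    a_arrived.release()   # aArrived.signal()
    b_arrived.acquire()   # bArrived.wait()
    step("a2")

def thread_b():
    step("b1")
    b_arrived.release()   # bArrived.signal()
    a_arrived.acquire()   # aArrived.wait()
    step("b2")

threads = [threading.Thread(target=f) for f in (thread_a, thread_b)]
for t in threads:
    t.start()
for t in threads:
    t.join()

print(log)
assert log.index("a1") < log.index("b2")
assert log.index("b1") < log.index("a2")
```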

While working on the previous problem, you might have tried something like

this:

Thread A
statement a1
bArrived.wait()
aArrived.signal()
statement a2

Thread B
statement b1
bArrived.signal()
aArrived.wait()
statement b2

This solution also works, although it is probably less efficient, since it might

have to switch between A and B one time more than necessary.

If A arrives first, it waits for B. When B arrives, it wakes A and might proceed immediately to its wait, in which case it blocks, allowing A to reach its signal, after which both threads can proceed.

Think about the other possible paths through this code and convince yourself

that in all cases neither thread can proceed until both have arrived.

3.2.3 Deadlock #1

Again, while working on the previous problem, you might have tried something

like this:

Thread A
statement a1
bArrived.wait()
aArrived.signal()
statement a2

Thread B
statement b1
aArrived.wait()
bArrived.signal()
statement b2

If so, I hope you rejected it quickly, because it has a serious problem. Assuming that A arrives first, it will block at its wait. When B arrives, it will also block, since A wasn’t able to signal aArrived. At this point, neither thread can proceed, and never will.

This situation is called a deadlock and, obviously, it is not a successful

solution of the synchronization problem. In this case, the error is obvious, but

often the possibility of deadlock is more subtle. We will see more examples later.
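A Python sketch of this deadlock, assuming `threading.Semaphore` for the semaphores. It passes `timeout=0.5` to `acquire()` (a real parameter since Python 3.2) only so the demo terminates; with a plain `acquire()`, which corresponds to `wait()`, both threads would block forever. Because each thread waits before it signals, neither wait can ever succeed.

```python
import threading

a_arrived = threading.Semaphore(0)
b_arrived = threading.Semaphore(0)
got = {}

def thread_a():
    got["A"] = b_arrived.acquire(timeout=0.5)  # bArrived.wait(), bounded
    if got["A"]:
        a_arrived.release()                    # aArrived.signal()

def thread_b():
    got["B"] = a_arrived.acquire(timeout=0.5)  # aArrived.wait(), bounded
    if got["B"]:
        b_arrived.release()                    # bArrived.signal()

threads = [threading.Thread(target=f) for f in (thread_a, thread_b)]
for t in threads:
    t.start()
for t in threads:
    t.join()
print(got)   # both False: each thread gave up waiting for the other
```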


3.3 Mutex

A second common use for semaphores is to enforce mutual exclusion. We have already seen one use for mutual exclusion, controlling concurrent access to shared variables. The mutex guarantees that only one thread accesses the shared variable at a time.

A mutex is like a token that passes from one thread to another, allowing one thread at a time to proceed. For example, in Lord of the Flies a group of children use a conch as a mutex. In order to speak, you have to hold the conch. As long as only one child holds the conch, only one can speak.

Similarly, in order for a thread to access a shared variable, it has to “get”

the mutex; when it is done, it “releases” the mutex. Only one thread can hold

the mutex at a time.

Puzzle: Add semaphores to the following example to enforce mutual exclusion to the shared variable count.

Thread A

count = count + 1

Thread B

count = count + 1


3.3.1 Mutual exclusion hint

Create a semaphore named mutex that is initialized to 1. A value of one means

that a thread may proceed and access the shared variable; a value of zero means

that it has to wait for another thread to release the mutex.


3.3.2 Mutual exclusion solution

Here is a solution:

Thread A

mutex.wait ()
    // critical section
    count = count + 1
mutex.signal ()

Thread B

mutex.wait ()
    // critical section
    count = count + 1
mutex.signal ()

Since mutex is initially 1, whichever thread gets to the wait first will be able to proceed immediately. Of course, the act of waiting on the semaphore has the effect of decrementing it, so the second thread to arrive will have to wait until the first signals.

I have indented the update operation to show that it is contained within the mutex.

In this example, both threads are running the same code. This is sometimes called a symmetric solution. If the threads have to run different code, the solution is asymmetric. Symmetric solutions are often easier to generalize. In this case, the mutex solution can handle any number of concurrent threads without modification. As long as every thread waits before performing an update and signals after, no two threads will access count concurrently.
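As a minimal sketch of the symmetric solution, assuming Python's `threading.Semaphore` as the semaphore (its `acquire` plays the role of wait and `release` the role of signal), every thread below runs the same `worker` function. Because each update is bracketed by wait and signal, no increment is lost. The function name `run_counter` and its parameters are illustrative.

```python
import threading


def run_counter(num_threads=4, increments=5_000):
    count = 0
    mutex = threading.Semaphore(1)  # 1 means "a thread may enter"

    def worker():
        nonlocal count
        for _ in range(increments):
            mutex.acquire()          # mutex.wait ()
            count = count + 1        # critical section
            mutex.release()          # mutex.signal ()

    threads = [threading.Thread(target=worker) for _ in range(num_threads)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return count


print(run_counter())  # 20000: four threads times 5,000 increments each
```

Changing `num_threads` requires no change to `worker`, which is the practical payoff of a symmetric solution.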

Often the code that needs to be protected is called the critical section, I suppose because it is critically important to prevent concurrent access.

In the tradition of computer science and mixed metaphors, there are several other ways people sometimes talk about mutexes. In the metaphor we have been using so far, the mutex is a token that is passed from one thread to another.

In an alternative metaphor, we think of the critical section as a room, and only one thread is allowed to be in the room at a time. In this metaphor, mutexes are called locks, and a thread is said to lock the mutex before entering and unlock it while exiting. Occasionally, though, people mix the metaphors and talk about “getting” or “releasing” a lock, which doesn’t make much sense.

Both metaphors are potentially useful and potentially misleading. As you work on the next problem, try out both ways of thinking and see which one leads you to a solution.

3.4 Multiplex

Puzzle: Generalize the previous solution so that it allows multiple threads to run in the critical section at the same time, but it enforces an upper limit on the number of concurrent threads. In other words, no more than n threads can run in the critical section at the same time.

This pattern is called a multiplex. In real life, the multiplex problem occurs at busy nightclubs where there is a maximum number of people allowed in the building at a time, either to maintain fire safety or to create the illusion of exclusivity.


At such places a bouncer usually enforces the synchronization constraint by keeping track of the number of people inside and barring arrivals when the room is at capacity. Then, whenever one person leaves, another is allowed to enter.

Enforcing this constraint with semaphores may sound difficult, but it is almost trivial.


3.4.1 Multiplex solution

To allow multiple threads to run in the critical section, just initialize the semaphore (called multiplex in Listing 3.1) to n, which is the maximum number of threads that should be allowed.

At any time, the value of the semaphore represents the number of additional threads that may enter. If the value is zero, then the next thread will block until one of the threads inside exits and signals. When all threads have exited, the value of the semaphore is restored to n.

Since the solution is symmetric, it’s conventional to show only one copy of the code, but you should imagine multiple copies of the code running concurrently in multiple threads.

Listing 3.1: Multiplex solution

multiplex.wait ()
    critical section
multiplex.signal ()

What happens if the critical section is occupied and more than one thread arrives? Of course, what we want is for all the arrivals to wait. This solution does exactly that. Each time an arrival joins the queue, the semaphore is decremented, so that the value of the semaphore (negated) represents the number of threads in queue.

When a thread leaves, it signals the semaphore, incrementing its value and allowing one of the waiting threads to proceed.

Thinking again of metaphors, in this case I find it useful to think of the semaphore as a set of tokens (rather than a lock). As each thread invokes wait, it picks up one of the tokens; when it invokes signal it releases one. Only a thread that holds a token can enter the room. If no tokens are available when a thread arrives, it waits until another thread releases one.

In real life, ticket windows sometimes use a system like this. They hand out tokens (sometimes poker chips) to customers in line. Each token allows the holder to buy a ticket.
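Here is a Python sketch of the multiplex, again assuming `threading.Semaphore`, which hands out n tokens when constructed with value n. The `inside` counter and its `threading.Lock` are bookkeeping added only to measure how many threads occupy the critical section at once; they are not part of the pattern. The function name and the short `time.sleep` standing in for real work are assumptions.

```python
import threading
import time


def run_multiplex(n=3, num_threads=10):
    multiplex = threading.Semaphore(n)  # n tokens: at most n threads inside
    stats_lock = threading.Lock()       # protects the measurement below only
    inside = 0
    max_inside = 0

    def worker():
        nonlocal inside, max_inside
        multiplex.acquire()             # take a token (may block)
        with stats_lock:
            inside += 1
            max_inside = max(max_inside, inside)
        time.sleep(0.01)                # stand-in for the critical section
        with stats_lock:
            inside -= 1
        multiplex.release()             # give the token back

    threads = [threading.Thread(target=worker) for _ in range(num_threads)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return max_inside


print(run_multiplex())  # never more than 3
```

With `n=1` the same code degenerates into the mutex from the previous section.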

3.5 Barrier

Consider again the Rendezvous problem from Section 3.2. A limitation of the solution we presented is that it does not work with more than two threads.

Puzzle: Generalize the rendezvous solution. Every thread should run the following code:

Listing 3.2: Barrier code

rendezvous
critical point

The synchronization requirement is that no thread executes critical point until after all threads have executed rendezvous.

You can assume that there are n threads and that this value is stored in a variable, n, that is accessible from all threads.

When the first n − 1 threads arrive they should block until the nth thread arrives, at which point all the threads may proceed.


3.5.1 Barrier hint

For many of the problems in this book I will provide hints by presenting the variables I used in my solution and explaining their roles.

Listing 3.3: Barrier hint

int n                  // the number of threads
int count = 0
Semaphore mutex = 1
Semaphore barrier = 0

count keeps track of how many threads have arrived. mutex provides exclusive access to count so that threads can increment it safely.

barrier is locked (zero or negative) until all threads arrive; then it should be unlocked (1 or more).


3.5.2 Barrier non-solution

First I will present a solution that is not quite right, because it is useful to examine incorrect solutions and figure out what is wrong.

Listing 3.4: Barrier non-solution

rendezvous

mutex.wait ()
    count = count + 1
mutex.signal ()

if (count == n) barrier.signal ()

barrier.wait ()

critical point

Since count is protected by a mutex, it counts the number of threads that pass. The first n − 1 threads wait when they get to the barrier, which is initially locked. When the nth thread arrives, it unlocks the barrier.

Puzzle: What is wrong with this solution?


3.5.3 Deadlock #2

The problem is a deadlock.

As an example, imagine that n = 5 and that 4 threads are waiting at the barrier. The value of the semaphore is the number of threads in queue, negated, which is -4.

When the 5th thread signals the barrier, one of the waiting threads is allowed to proceed, and the semaphore is incremented to -3.

But then no one signals the semaphore again and none of the other threads can pass the barrier. This is a second example of a deadlock.

Puzzle: Fix this problem.


3.5.4 Barrier solution

Finally, here is a working barrier:

Listing 3.5: Barrier solution

rendezvous

mutex.wait ()
    count = count + 1
mutex.signal ()

if (count == n) barrier.signal ()

barrier.wait ()
barrier.signal ()

critical point

The only change is another signal after waiting at the barrier. Now as each thread passes, it signals the semaphore so that the next thread can pass.

This pattern, a wait and a signal in rapid succession, occurs often enough that it has a name; it’s called a turnstile, because it allows one thread to pass at a time, and it can be locked to bar all threads.

In its initial state (zero), the turnstile is locked. The nth thread unlocks it and then all n threads go through.

After the nth thread, what state is the turnstile in? Is there any way the barrier might be signalled more than once?
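The working barrier translates into Python roughly as follows. This is a sketch that assumes `threading.Semaphore` and runs every thread through rendezvous and critical point once. The `log` list, guarded by its own lock, is added only so we can check that no thread reached its critical point before all threads had finished the rendezvous. Following Listing 3.5, `count` is checked after the mutex has been released.

```python
import threading


def run_barrier(n=5):
    count = 0
    mutex = threading.Semaphore(1)
    barrier = threading.Semaphore(0)   # the turnstile starts locked
    log = []
    log_lock = threading.Lock()        # bookkeeping only

    def worker(i):
        nonlocal count
        with log_lock:
            log.append(("rendezvous", i))

        mutex.acquire()
        count = count + 1
        mutex.release()

        if count == n:                 # checked outside the mutex, as in Listing 3.5
            barrier.release()

        barrier.acquire()              # turnstile: wait...
        barrier.release()              # ...then signal so the next thread can pass

        with log_lock:
            log.append(("critical point", i))

    threads = [threading.Thread(target=worker, args=(i,)) for i in range(n)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return log


events = [kind for kind, _ in run_barrier()]
print(events)  # every "rendezvous" entry precedes every "critical point" entry
```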


3.5.5 Deadlock #3

Since only one thread at a time can pass through the mutex, and only one thread at a time can pass through the turnstile, it might seem reasonable to put the turnstile inside the mutex, like this:

Listing 3.6: Bad barrier solution

rendezvous

mutex.wait ()
    count = count + 1
    if (count == n) barrier.signal ()

    barrier.wait ()
    barrier.signal ()
mutex.signal ()

critical point

This turns out to be a bad idea because it can cause a deadlock.

Imagine that the first thread enters the mutex and then blocks when it reaches the turnstile. Since the mutex is locked, no other threads can enter, so the condition, count==n, will never be true and no one will ever unlock the turnstile.

In this case the deadlock is fairly obvious, but it demonstrates a common source of deadlocks: blocking on a semaphore while holding a mutex.

Does this code always create a deadlock? Can you find an execution path through this code that does not cause a deadlock?

3.6 Reusable barrier

Often a set of cooperating threads will perform a series of steps in a loop and synchronize at a barrier after each step. For this application we need a reusable barrier that locks itself after all the threads have passed through.

Puzzle: Rewrite the barrier solution so that after all the threads have passed through, the turnstile is locked again.


3.6.1 Reusable barrier hint

Here’s a hint about how to do it. Just as the nth thread unlocked the turnstile on the way in, it should be the nth thread that locks it on the way out.

Here are the variables I used:

Listing 3.7: Reusable barrier hint

int count = 0
Semaphore mutex = 1
Semaphore turnstile = 0


3.6.2 Reusable barrier non-solution #1

Once again, we will start with a simple attempt at a solution and gradually improve it:

Listing 3.8: Reusable barrier non-solution

1  rendezvous
2
3  mutex.wait ()
4      count++
5  mutex.signal ()
6
7  if (count == n) turnstile.signal ()
8
9  turnstile.wait ()
10 turnstile.signal ()
11
12 critical point
13
14 mutex.wait ()
15     count--
16 mutex.signal ()
17
18 if (count == 0) turnstile.wait ()

count++ is shorthand for count = count + 1 and count-- for count = count - 1.

Notice that the code after the turnstile is pretty much the same as the code before it. Again, we have to use the mutex to protect access to the shared variable count.

Tragically, though, this code is not quite correct. Puzzle: What is the problem?


3.6.3 Reusable barrier problem #1

There is a problem spot at Line 6 of the previous code.

If the n − 1th thread is interrupted at this point, and then the nth thread comes through the mutex, both threads will find that count==n and both threads will signal the turnstile. In fact, it is even possible that all the threads will signal the turnstile.

If this barrier isn’t reused, then multiple signals are not a problem. For a reusable barrier, they are.

Puzzle: Fix the problem.


3.6.4 Reusable barrier non-solution #2

This attempt fixes the previous error, but a subtle problem remains.

Listing 3.9: Reusable barrier non-solution

rendezvous

mutex.wait ()
    count++
    if (count == n) turnstile.signal ()
mutex.signal ()

turnstile.wait ()
turnstile.signal ()

critical point

mutex.wait ()
    count--
    if (count == 0) turnstile.wait ()
mutex.signal ()

In both cases the check is inside the mutex so that a thread cannot be interrupted after changing the counter and before checking it.

Tragically, this code is still not correct. Remember that this barrier will be inside a loop. So, after executing the last line, each thread will go back to the rendezvous.

Puzzle: Identify and fix the problem.


3.6.5 Reusable barrier hint

As it is currently written, this code allows a precocious thread to pass through the second mutex, then loop around and pass through the first mutex and the turnstile, effectively getting ahead of the other threads by a lap.

To solve this problem we can use two turnstiles.

Listing 3.10: Reusable barrier hint

Semaphore turnstile = 0;
Semaphore turnstile2 = 1;
Semaphore mutex = 1;

Initially the first is locked and the second is open. When all the threads arrive at the first, we lock the second and unlock the first. When all the threads arrive at the second we relock the first, which makes it safe for the threads to loop around to the beginning, and then open the second.


3.6.6 Reusable barrier solution

Listing 3.11: Reusable barrier solution

rendezvous

mutex.wait ()
    count++
    if (count == n)
        turnstile2.wait ()     // lock the second
        turnstile.signal ()    // unlock the first
mutex.signal ()

turnstile.wait ()              // first turnstile
turnstile.signal ()

critical point

mutex.wait ()
    count--
    if (count == 0)
        turnstile.wait ()      // lock the first
        turnstile2.signal ()   // unlock the second
mutex.signal ()

turnstile2.wait ()             // second turnstile
turnstile2.signal ()

This example is, unfortunately, typical of many synchronization problems: it is difficult to be sure that a solution is correct. Often there is a subtle way that a particular path through the program can cause an error.

To make matters worse, testing an implementation of a solution is not much help. The error might occur very rarely because the particular path that causes it might require a spectacularly unlucky combination of circumstances. Such errors are almost impossible to reproduce and debug by conventional means.

The only alternative is to examine the code carefully and “prove” that it is correct. I put “prove” in quotation marks because I don’t mean, necessarily, that you have to write a formal proof (although there are zealots who encourage such lunacy).

The kind of proof I have in mind is more informal. We can take advantage of the structure of the code, and the idioms we have developed, to assert, and then demonstrate, a number of intermediate-level claims about the program. For example:

1. Only the nth thread can lock or unlock the turnstiles.

2. Before a thread can unlock the first turnstile, it has to close the second, and vice versa; therefore it is impossible for one thread to get ahead of the others by more than one turnstile.

By finding the right kinds of statements to assert and prove, you can sometimes find a concise way to convince yourself (or a skeptical colleague) that your code is bulletproof.

3.6.7 Barrier objects

It is natural to encapsulate a barrier in an object. The instance variables are n, count, mutex, turnstile and turnstile2.

Here is the code to initialize and use a Barrier object:

Listing 3.12: Barrier interface

Barrier barrier = 12    // initialize a new barrier
barrier.wait ()         // wait at a barrier

In this case the first 11 threads will block when they invoke wait. When the 12th thread arrives, all threads unblock. If the barrier object is implemented using the code in Section 3.6.6, the barrier object will be reusable; that is, after the first batch of 12 threads pass, the next batch has to wait until the 24th thread arrives.
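Here is one way such an object could look in Python. It is a sketch of the two-turnstile solution from Section 3.6.6 wrapped in a class whose `wait` method contains both halves, so the caller's critical point is simply whatever follows `barrier.wait ()`. The class name mirrors the text, but the code, the use of `threading.Semaphore`, and the `run_rounds` driver are assumptions made for illustration. (Python's standard library also provides `threading.Barrier`; the point here is only to show the semaphore construction.)

```python
import threading


class Barrier:
    """Reusable barrier built from two turnstiles (sketch of Listing 3.11)."""

    def __init__(self, n):
        self.n = n
        self.count = 0
        self.mutex = threading.Semaphore(1)
        self.turnstile = threading.Semaphore(0)   # first turnstile, initially locked
        self.turnstile2 = threading.Semaphore(1)  # second turnstile, initially open

    def wait(self):
        self.mutex.acquire()
        self.count += 1
        if self.count == self.n:
            self.turnstile2.acquire()   # lock the second
            self.turnstile.release()    # unlock the first
        self.mutex.release()

        self.turnstile.acquire()        # first turnstile
        self.turnstile.release()

        self.mutex.acquire()
        self.count -= 1
        if self.count == 0:
            self.turnstile.acquire()    # lock the first
            self.turnstile2.release()   # unlock the second
        self.mutex.release()

        self.turnstile2.acquire()       # second turnstile
        self.turnstile2.release()


def run_rounds(n=4, rounds=5):
    """Each thread logs its round number, then waits at the barrier."""
    barrier = Barrier(n)
    log = []
    log_lock = threading.Lock()

    def worker():
        for r in range(rounds):
            with log_lock:
                log.append(r)
            barrier.wait()

    threads = [threading.Thread(target=worker) for _ in range(n)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return log


log = run_rounds()
print(log == sorted(log))  # True: no thread starts round r+1 before all finish round r
```

The sorted-log check is a direct test of the second claim above: no thread ever gets a lap ahead of the others.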

3.6.8 Kingmaker pattern

As threads pass a barrier, it is sometimes useful to assign identifiers to the threads or to choose one of them to perform a special function. The counter inside the barrier provides an obvious way to solve this problem.

All we need is the ability to store a value in each thread. For example, each thread might have a variable named threadNum that records a different number for each thread. This kind of variable is called a thread variable or a local variable to distinguish it from the shared variables we have been using.

The following example assigns a different integer to each thread that passes. Here are the variable declarations:

Listing 3.13: Kingmaker initialization

Semaphore mutex = 1
int count = 0
local int threadNum


And here is the code:

Listing 3.14: Kingmaker code

mutex.wait ()
    count++
    threadNum = count
mutex.signal ()

Because count is a shared variable, any thread can increment it and all threads see the changed value. On the other hand, when a thread assigns a value to threadNum, only the local version of threadNum changes. The other threads are unaffected.
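In Python, a sketch of the kingmaker might use `threading.local()` to play the role of the `local int threadNum` declaration: each thread that stores an attribute on it sees only its own value, while `count` stays shared. The `results` list and its lock exist only so the caller can inspect the numbers afterward, and the function name `assign_numbers` is hypothetical. A thread that finds, say, `threadNum == 1` could then take on the special role.

```python
import threading


def assign_numbers(num_threads=6):
    mutex = threading.Semaphore(1)
    count = 0                      # shared: every thread sees the same variable
    local = threading.local()      # per-thread storage standing in for threadNum
    results = []
    results_lock = threading.Lock()

    def worker():
        nonlocal count
        mutex.acquire()
        count += 1
        local.threadNum = count    # only this thread's copy changes
        mutex.release()
        with results_lock:
            results.append(local.threadNum)

    threads = [threading.Thread(target=worker) for _ in range(num_threads)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return sorted(results)


print(assign_numbers())  # [1, 2, 3, 4, 5, 6]
```

Reading `count` into `threadNum` inside the mutex matters: if the copy happened after `mutex.release()`, two threads could read the same value.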


3.7 FIFO queue

If there is more than one thread waiting in queue when a semaphore is signalled, there is usually no way to tell which thread will be woken. Some implementations wake threads up in a particular order, like first-in-first-out, but the semantics of semaphores don’t require any particular order. As an alternative, it might be useful to assign priorities to threads and wake them in order of priority.

But even if your environment doesn’t provide first-in-first-out queueing, you can build it yourself.

Puzzle: Use an array of semaphores to build a first-in-first-out queue that can hold up to n threads. Each time the semaphore sem is signalled, the thread at the head of the queue should proceed. You should not make any assumption about the implementation of your semaphores.


3.7.1 FIFO queue hint

Here are the variables I used.

Listing 3.15: FIFO queue hint

local int i
Semaphore array[n+1] = { 0, 1, 1, ..., 1 }
Semaphore sem = 0

The local variable i is used by each thread to keep track of where it is in queue. The array of semaphores controls the flow of threads through the queue. Assume that the elements of the array are indexed from 1 to n+1. Initially, the first semaphore is locked and all the rest are open. sem is the semaphore that is signalled to waken the thread at the head of the queue.


3.7.2 FIFO queue solution

Here is my solution:

Listing 3.16: FIFO queue solution

i = n
array[i+1].wait ()
while (i > 0) {
    array[i].wait ()
    array[i+1].signal ()
    i--
}

When a thread arrives, it gets the i+1 semaphore and then enters the loop. To advance in the queue, the thread has to get the next semaphore, i. If it succeeds, then it releases the previous semaphore, which allows a subsequent thread to proceed. If it fails, it waits while holding the previous semaphore, which blocks a subsequent thread.

The first thread to arrive will cascade down to array[1], which is initially locked. When it blocks, it will hold array[2]. The next thread will block on array[2] while holding array[3], and so on.

Eventually, some external thread will signal sem to allow a thread to exit the queue. To make that work, we can use a helper thread running this loop:

Listing 3.17: FIFO queue solution (helper)

while (1) {
    sem.wait ()
    array[1].signal ()
}

So, each time sem is signalled, the helper thread signals array[1]. That wakes up the first thread in queue, which will signal array[2] and then proceed. The second thread in queue will advance one position, which allows each of the following threads, in turn, to advance one position. At each step in the cascade, we know that there is only one thread waiting on each semaphore, and that each thread obtains the next semaphore before releasing the one it has. Therefore, no thread can overtake another and the threads exit the queue in FIFO order.

That is, unless the queue is full. In that case, more than one thread can be queued on array[n+1], and we have no control over which one enters the queue next. So, to be precise, we can say that threads will leave the queue in the order they pass array[n+1].

Naturally, we can encapsulate this solution in an object, called a Fifo, that has the following syntax:

Listing 3.18: Fifo interface

Fifo fifo(n)
fifo.wait ()
fifo.signal ()

The initializer creates a Fifo with n places in queue. wait and signal have the same semantics as for semaphores, except that threads proceed in the order they arrive, provided that there are no more than n in queue at a time.
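Putting the pieces together, here is a Python sketch of the Fifo object, assuming `threading.Semaphore` and a daemon helper thread. Index 0 of the list is left unused so that indices 1 to n+1 match the text. The driver `demo_fifo` and its `time.sleep` calls are illustrative assumptions: the sleeps give each arriving thread time to cascade to its place, and space out the signals so the recorded exit order is not blurred by threads racing to append to the list after they leave the queue.

```python
import threading
import time


class Fifo:
    """First-in-first-out queue for up to n waiting threads (sketch)."""

    def __init__(self, n):
        self.n = n
        # array[1] starts locked, array[2] .. array[n+1] start open; array[0] is unused.
        self.array = [None, threading.Semaphore(0)] + [
            threading.Semaphore(1) for _ in range(n)
        ]
        self.sem = threading.Semaphore(0)
        threading.Thread(target=self._helper, daemon=True).start()

    def _helper(self):
        while True:
            self.sem.acquire()
            self.array[1].release()      # let the thread at the head leave

    def wait(self):
        i = self.n
        self.array[i + 1].acquire()
        while i > 0:
            self.array[i].acquire()      # get the next position...
            self.array[i + 1].release()  # ...before releasing the current one
            i -= 1

    def signal(self):
        self.sem.release()


def demo_fifo(n=4):
    fifo = Fifo(n)
    order = []
    order_lock = threading.Lock()

    def worker(k):
        fifo.wait()
        with order_lock:
            order.append(k)

    threads = []
    for k in range(n):
        t = threading.Thread(target=worker, args=(k,))
        t.start()
        threads.append(t)
        time.sleep(0.1)                  # let thread k reach its place in line
    for _ in range(n):
        fifo.signal()
        time.sleep(0.1)                  # one departure at a time
    for t in threads:
        t.join()
    return order


print(demo_fifo())  # [0, 1, 2, 3]: threads leave in the order they arrived
```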

Chapter 4

Classical synchronization problems

In this chapter we examine the classical problems that appear in nearly every operating systems class. They are usually presented in terms of real-world problems, so that the statement of the problem is clear and so that students can bring their intuition to bear.

For the most part, though, these problems do not happen in the real world, or if they do, the real-world solutions are not much like the kind of synchronization code we are working with.

The reason we are interested in these problems is that they are analogous to common problems that operating systems (and some applications) need to solve. For each classical problem I will present the classical formulation, and also explain the analogy to the corresponding OS problem.

4.1 Producer-consumer problem

In multithreaded programs there is often a division of labor between threads. In one common pattern, some threads are producers and some are consumers. Producers create items of some kind and add them to a data structure; consumers remove the items and process them.

Event-driven programs are a good example. An “event” is something that happens that requires the program to respond: the user presses a key or moves the mouse, a block of data arrives from the disk, a packet arrives from the network, a pending operation completes.

Whenever an event occurs, a producer thread creates an event object and adds it to the event buffer. Concurrently, consumer threads take events out of the buffer and process them. In this case, the consumers are called “event handlers.”

There are several synchronization constraints that we need to enforce to make this system work correctly:


• While an item is being added to or removed from the buffer, the buffer is in an inconsistent state. Therefore, threads must have exclusive access to the buffer.

• If a consumer thread arrives while the buffer is empty, it blocks until a producer adds a new item.

Assume that producers perform the following operations over and over:

Listing 4.1: Basic producer code

event = waitForEvent ()
addEventToBuffer (event)

Also, assume that consumers perform the following operations:

Listing 4.2: Basic consumer code

event = getEventFromBuffer ()
processEvent (event)

As specified above, access to the buffer has to be exclusive, but waitForEvent and processEvent can run concurrently.

Puzzle: Add synchronization statements to the producer and consumer code to enforce the synchronization constraints.


4.1.1 Producer-consumer hint

Here are the variables you might want to use:

Listing 4.3: Producer-consumer initialization

Semaphore mutex = 1
Semaphore length = 0
local Event event

Not surprisingly, mutex provides exclusive access to the buffer. When length is positive, it indicates the number of items in the buffer. When it is negative, it indicates the number of consumer threads in queue.

event is a local variable (each thread has its own variable with this name). Let’s assume that capital-E Event is some type of object.


4.1.2 Producer-consumer solution

Here is the producer code from my solution.

Listing 4.4: Producer solution

event = waitForEvent ()
mutex.wait ()
    addEventToBuffer (event)
    length.signal ()
mutex.signal ()

The producer doesn’t have to get exclusive access to the buffer until it gets an event. Several threads can run waitForEvent concurrently.

The length semaphore keeps track of the number of items in the buffer. Each time the producer adds an item, it signals length, incrementing it by one.

The consumer code is similar.

Listing 4.5: Consumer solution

length.wait ()
mutex.wait ()
    event = getEventFromBuffer ()
mutex.signal ()
processEvent (event)

Again, the buffer operation is protected by a mutex, but before the consumer gets to it, it has to decrement length. If length is zero or negative, the consumer blocks until a producer signals.

Although this solution is correct, there is an opportunity to make one small improvement to its performance. Imagine that there is at least one consumer in queue when a producer signals length. If the scheduler allows the consumer to run, what happens next? It immediately blocks on the mutex that is (still) held by the producer.

Blocking and waking up are moderately expensive operations; performing them unnecessarily can impair the performance of a program. So it would probably be better to rearrange the producer like this:

Listing 4.6: Improved producer solution

event = waitForEvent ()
mutex.wait ()
    addEventToBuffer (event)
mutex.signal ()
length.signal ()

Now we don’t bother unblocking a consumer until we know it can proceed (except in the rare case that another producer beats it to the mutex).
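A Python sketch of the improved producer together with the consumer might look like this. It assumes `collections.deque` as the buffer and `threading.Semaphore` for `mutex` and `length`; the tuple `(pid, k)` stands in for `waitForEvent`, and appending to `consumed` stands in for `processEvent`. Those stand-ins, the function name, and the thread counts are hypothetical.

```python
import threading
from collections import deque


def run_producer_consumer(num_producers=2, items_each=60, num_consumers=3):
    buffer = deque()
    mutex = threading.Semaphore(1)    # exclusive access to the buffer
    length = threading.Semaphore(0)   # number of items in the buffer
    consumed = []
    consumed_lock = threading.Lock()  # bookkeeping for the caller only
    total = num_producers * items_each
    if total % num_consumers:
        raise ValueError("this demo splits the work evenly among consumers")

    def producer(pid):
        for k in range(items_each):
            event = (pid, k)          # stand-in for waitForEvent ()
            mutex.acquire()
            buffer.append(event)      # addEventToBuffer (event)
            mutex.release()
            length.release()          # signal only after releasing the mutex

    def consumer(how_many):
        for _ in range(how_many):
            length.acquire()          # blocks while the buffer is empty
            mutex.acquire()
            event = buffer.popleft()  # getEventFromBuffer ()
            mutex.release()
            with consumed_lock:       # stand-in for processEvent (event)
                consumed.append(event)

    threads = [threading.Thread(target=producer, args=(p,)) for p in range(num_producers)]
    threads += [
        threading.Thread(target=consumer, args=(total // num_consumers,))
        for _ in range(num_consumers)
    ]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return consumed


events = run_producer_consumer()
print(len(events))  # 120: every produced event was consumed exactly once
```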

There’s one other thing about this solution that might bother a stickler. In the hint section I claimed that the length semaphore keeps track of the number of items in the buffer. But looking at the consumer code, we see the possibility that several consumers could decrement length before any of them gets the mutex and removes an item from the buffer. At least for a little while, length would be inaccurate.

We might try to address that by checking the buffer inside the mutex:

Listing 4.7: Broken consumer solution

mutex.wait ()
    length.wait ()
    event = getEventFromBuffer ()
mutex.signal ()
processEvent (event)

This is a bad idea.

Puzzle: Why?


4.1.3 Deadlock #4

If the consumer is running this code

Listing 4.8: Broken consumer solution

mutex.wait ()
    length.wait ()
    event = getEventFromBuffer ()
mutex.signal ()

processEvent (event)

it can cause a deadlock. Imagine that the buffer is empty. A consumer arrives, gets the mutex, and then blocks on length. When the producer arrives, it blocks on mutex and the system comes to a grinding halt.

This is a common error in synchronization code: any time you wait for a semaphore while holding a mutex, there is a danger of deadlock. When you are checking a solution to a synchronization problem, you should check for this kind of deadlock.

4.1.4 Producer-consumer with a finite buffer

In the example I described above, with event-handling threads, the shared buffer is usually infinite (more accurately, it is bounded by system resources like physical memory and swap space).

In the kernel of the operating system, though, there are limits on available space. Buffers for things like disk requests and network packets are usually of fixed size. In situations like these, we have an additional synchronization constraint:

• If a producer arrives when the buffer is full, it blocks until a consumer removes an item.

Assume that we know the size of the buffer. Call it bufferSize. Since we have a semaphore that is keeping track of the number of items, it is tempting to write something like

Listing 4.9: Broken finite buffer solution

if (length >= bufferSize)
    block ()

But we can’t. Remember that we can’t check the current value of a semaphore; the only operations are wait and signal.

Puzzle: Write producer-consumer code that handles the finite-buffer constraint.


4.1.5 Finite buffer producer-consumer hint

Add a second semaphore to keep track of the number of available spaces in the buffer.

Listing 4.10: Finite-buffer producer-consumer initialization

Semaphore mutex = 1
Semaphore length = 0
Semaphore spaces = bufferSize

When a consumer removes an item it should signal spaces. When a producer arrives it should decrement spaces, at which point it might block until the next consumer signals.


4.1.6 Finite buffer producer-consumer solution

Here is a solution.

Listing 4.11: Finite buffer consumer solution

length.wait ()
mutex.wait ()
    event = getEventFromBuffer ()
mutex.signal ()
spaces.signal ()

processEvent (event)

The producer code is symmetric, in a way:

Listing 4.12: Finite buffer producer solution

event = waitForEvent ()

spaces.wait ()
mutex.wait ()
    addEventToBuffer (event)
mutex.signal ()
length.signal ()

In order to avoid deadlock, producers and consumers check availability before getting the mutex. For best performance, they release the mutex before signalling.
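Here is a Python sketch of the finite-buffer version, under the same assumptions as before (`threading.Semaphore`, a `deque` buffer, and integers standing in for events). The only new piece is the `spaces` semaphore, initialized to `bufferSize`; the `max_len` measurement is bookkeeping added so we can confirm the buffer never holds more than `bufferSize` items. The function name and parameter names are illustrative.

```python
import threading
from collections import deque


def run_bounded(buffer_size=3, num_items=200):
    buffer = deque()
    mutex = threading.Semaphore(1)
    length = threading.Semaphore(0)            # items available
    spaces = threading.Semaphore(buffer_size)  # free slots available
    consumed = []
    max_len = 0                                # largest buffer size observed

    def producer():
        nonlocal max_len
        for event in range(num_items):
            spaces.acquire()                   # block while the buffer is full
            mutex.acquire()
            buffer.append(event)
            max_len = max(max_len, len(buffer))
            mutex.release()
            length.release()

    def consumer():
        for _ in range(num_items):
            length.acquire()                   # block while the buffer is empty
            mutex.acquire()
            event = buffer.popleft()
            mutex.release()
            spaces.release()
            consumed.append(event)             # stand-in for processEvent (event)

    threads = [threading.Thread(target=producer), threading.Thread(target=consumer)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return consumed, max_len


consumed, max_len = run_bounded()
print(max_len <= 3, consumed == list(range(200)))  # True True
```

Note that both `spaces.acquire()` and `length.acquire()` happen before the mutex is taken, which is exactly what keeps this version free of Deadlock #4.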

4.2 Readers-writers problem

The next classical problem, called the Reader-Writer Problem, pertains to any situation where a data structure, database, or file system is read and modified by concurrent threads. While the data structure is being written or modified it is often necessary to bar other threads from reading, in order to prevent a reader from interrupting a modification in progress and reading inconsistent or invalid data.

As in the producer-consumer problem, the solution is asymmetric. Readers and writers execute different code before entering the critical section. The basic synchronization constraints are:

1. Any number of readers can be in the critical section simultaneously.

2. Writers must have exclusive access to the critical section.

In other words, a writer cannot enter the critical section while any other thread (reader or writer) is there, and while the writer is there, no other thread may enter.

Puzzle: Use semaphores to enforce these constraints, while allowing readers and writers to access the data structure, and avoiding the possibility of deadlock.


4.2.1 Readers-writers hint

Here is a set of variables that is sufficient to solve the problem.

Listing 4.13: Readers-writers initialization

int readers = 0;
Semaphore mutex = 1;
Semaphore roomEmpty = 1;

The counter readers keeps track of how many readers are in the room. mutex protects the shared counter. roomEmpty is 1 if there are no threads (readers or writers) in the critical section, and 0 otherwise.


4.2.2 Readers-writers solution

The code for writers is simple. If the critical section is empty, a writer may

enter, but entering has the effect of excluding all other threads:

Listing 4.14: Writers solution

1 roomEmpty.wait ()

2 critical section for writers

3 roomEmpty.signal ()

When the writer exits, can it be sure that the room is now empty? Yes,

because it knows that no other thread can have entered while it was there.

The code for readers is similar to the barrier code we saw in the previous section. We keep track of the number of readers in the room so that we can give a special assignment to the first to arrive and the last to leave.

The first reader that arrives has to wait for roomEmpty. If the room is empty, then the reader proceeds and, at the same time, bars writers. Subsequent readers can still enter because none of them will try to wait on roomEmpty.

If a reader arrives while there is a writer in the room, it waits on roomEmpty. Since it holds the mutex, any subsequent readers queue on mutex.

Listing 4.15: Readers solution

mutex.wait ()
readers++
if (readers == 1)
    roomEmpty.wait ()    // first in locks
mutex.signal ()

critical section for readers

mutex.wait ()
readers--
if (readers == 0)
    roomEmpty.signal ()  // last out unlocks
mutex.signal ()

The code after the critical section is similar. The last reader to leave the room turns out the lights: it signals roomEmpty, possibly allowing a waiting writer to enter.
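For readers who want to experiment, here is a minimal Python sketch of Listings 4.13 to 4.15. It assumes Python's threading.Semaphore, whose acquire and release play the roles of wait and signal. The snake_case names, the enter/leave helpers, and the inside/violations bookkeeping are hypothetical additions used only to check that a writer is never in the room with another thread; the sleep calls stand in for the critical sections.

```python
import threading
import time

# Shared state, mirroring Listing 4.13.
readers = 0                           # number of readers in the room
mutex = threading.Semaphore(1)        # protects readers
room_empty = threading.Semaphore(1)   # 1 when no thread is in the critical section

# Hypothetical instrumentation, not part of the solution: who is inside now?
stats_lock = threading.Lock()
inside = {"readers": 0, "writers": 0}
violations = []


def enter(kind):
    with stats_lock:
        inside[kind] += 1
        # A writer must be alone; readers may share only with other readers.
        if inside["writers"] > 1 or (inside["writers"] == 1 and inside["readers"] > 0):
            violations.append(dict(inside))


def leave(kind):
    with stats_lock:
        inside[kind] -= 1


def writer():
    room_empty.acquire()
    enter("writers")
    time.sleep(0.001)                 # critical section for writers
    leave("writers")
    room_empty.release()


def reader():
    global readers
    mutex.acquire()
    readers += 1
    if readers == 1:
        room_empty.acquire()          # first in locks
    mutex.release()

    enter("readers")
    time.sleep(0.001)                 # critical section for readers
    leave("readers")

    mutex.acquire()
    readers -= 1
    if readers == 0:
        room_empty.release()          # last out unlocks
    mutex.release()


threads = [threading.Thread(target=reader if i % 3 else writer) for i in range(30)]
for t in threads:
    t.start()
for t in threads:
    t.join()
print("violations:", len(violations))
```

Because the interleaving varies from run to run, a clean run is evidence rather than proof; the claims about the program still have to be argued by hand.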

Again, to demonstrate that this code is correct, it is useful to assert and demonstrate a number of claims about how the program must behave. Can you convince yourself that the following are true?

• Only one reader can queue waiting for roomEmpty, but several writers might be queued.

• When a reader signals roomEmpty, the room must be empty.


Patterns similar to this reader code are common: the first thread into a section locks a semaphore (or queues) and the last one out unlocks it. In fact, it is so common we should give it a name and wrap it up in an object.

The name of the pattern is “Film Louie,” which stands for “first in locks mutex, last out unlocks it, eh?” For convenience, we’ll call the pattern a Louie and encapsulate it in an object, Louie, with the following instance variables:

Listing 4.16: Louie definition

int counter = 0
Semaphore mutex = 1

The methods that operate on Louies are lock and unlock.

Listing 4.17: Louie lock definition

function lock (semaphore)
    wait (mutex)
    counter++
    if (counter == 1)
        wait (semaphore)
    signal (mutex)

lock takes one parameter, a semaphore that it will check and possibly hold. It has the following behavior:

• If the semaphore is locked, then the calling thread and all subsequent callers will block.

• When the semaphore is signalled, all waiting threads and all subsequent callers can proceed.

• While the Louie is locked (that is, while its counter is greater than zero), the semaphore is locked.

Inside the definition of lock, the references to mutex and counter refer to the instance variables of the current object, the Louie the method was invoked on.

Here is the code for unlock:

Listing 4.18: Louie unlock definition

function unlock (semaphore)
    wait (mutex)
    counter--
    if (counter == 0)
        signal (semaphore)
    signal (mutex)

unlock has the following behavior:


• Each call decrements the counter, but unlock does nothing else until every thread that called lock has also called unlock.

• When the last thread calls unlock, it unlocks the semaphore.
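The Louie can be sketched as a small Python class, again assuming threading.Semaphore for wait and signal (used here as a context manager for the mutex). For brevity, the demonstration calls lock twice from a single thread, standing in for two readers; this works because lock releases mutex before returning.

```python
import threading


class Louie:
    """First in locks mutex, last out unlocks it (Listings 4.16 to 4.18)."""

    def __init__(self):
        self.counter = 0                      # threads currently holding the Louie
        self.mutex = threading.Semaphore(1)   # protects counter

    def lock(self, semaphore):
        with self.mutex:
            self.counter += 1
            if self.counter == 1:
                semaphore.acquire()           # first in locks the semaphore

    def unlock(self, semaphore):
        with self.mutex:
            self.counter -= 1
            if self.counter == 0:
                semaphore.release()           # last out unlocks it


room_empty = threading.Semaphore(1)
louie = Louie()
louie.lock(room_empty)                        # first caller acquires room_empty
louie.lock(room_empty)                        # second caller only bumps the counter
print(room_empty.acquire(blocking=False))     # False: the Louie holds room_empty
louie.unlock(room_empty)
print(room_empty.acquire(blocking=False))     # False: one holder remains
louie.unlock(room_empty)
print(room_empty.acquire(blocking=False))     # True: the last unlock released it
```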

Using these functions, we can rewrite the reader code more simply:

Listing 4.19: Concise reader solution

readLouie.lock (roomEmpty)
critical section for readers
readLouie.unlock (roomEmpty)

readLouie is a shared Louie object whose counter is initially zero.

4.2.3 Starvation

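In the previous solution, is there any danger of deadlock? In order for a deadlock to occur, it must be possible for a thread to wait on a semaphore while holding another, and thereby prevent itself from being signalled.

In this example, deadlock is not possible. A reader waits on roomEmpty while holding mutex only if it is the first reader in, and in that case the thread holding roomEmpty must be a writer, which never needs mutex in order to leave. There is, however, a related problem that is almost as bad: it is possible for a writer to starve.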

If a writer arrives while there are readers in the critical section, it might wait in queue forever while readers come and go. As long as a new reader arrives before the last of the current readers exits, there will always be at least one reader in the room.

This situation is not a deadlock, because some threads are making progress, but it is not exactly desirable. A program like this might work as long as the load on the system is low, because then there are plenty of opportunities for the writers. But as the load increases, the behavior of the system would deteriorate quickly (at least from the point of view of writers).
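The starvation argument can be made concrete with a simplified, discrete-time model; this is an illustration, not a semaphore implementation. Each reader occupies the room during a half-open interval [arrive, leave), and a writer that arrives at time t can enter only at the first instant when no reader is present. The function name first_empty_time is hypothetical.

```python
def first_empty_time(intervals, t):
    """Earliest time >= t at which no reader is in the room.

    intervals: list of (arrive, leave) pairs; a reader occupies [arrive, leave).
    Assumes a finite list of readers, so the room eventually empties.
    """
    clock = t
    while True:
        # Departure times of the readers present at `clock`.
        present = [leave for arrive, leave in intervals if arrive <= clock < leave]
        if not present:
            return clock          # room is empty: the writer can enter now
        clock = max(present)      # skip ahead until the current readers have left


# Overlapping readers: each arrives before the previous one leaves.
print(first_empty_time([(0, 3), (2, 5), (4, 7), (6, 9)], 1))          # 9
# A gap between readers lets the writer in early.
print(first_empty_time([(0, 3), (4, 7)], 1))                           # 3
# 100 readers, each overlapping the next: the writer waits for all of them.
print(first_empty_time([(2 * i, 2 * i + 3) for i in range(100)], 1))  # 201
```

In the first call, a writer arriving at time 1 cannot enter until time 9; each additional overlapping reader pushes that moment further out, and with an unbounded stream of overlapping readers it never comes.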

Puzzle: Extend this solution so that when a writer arrives, the existing readers can finish, but no additional readers may enter.


4.2.4 No-starve readers-writers hint

Here’s a hint. You can add a turnstile for the readers and allow writers to lock it. The writers have to pass through the same turnstile, but they should check the roomEmpty semaphore while they are inside the turnstile. If a writer gets stuck in the turnstile, it has the effect of forcing the readers to queue at the turnstile. Then when the last reader leaves the critical section, we are guaranteed that at least one writer enters next (before any of the queued readers can proceed).

Listing 4.20: No-starve readers-writers initialization

Louie readers = 0
Semaphore roomEmpty = 1
Semaphore turnstile = 1

readers keeps track of how many readers are in the room; the Louie locks roomEmpty when the first reader enters and unlocks it when the last reader leaves.

turnstile is a turnstile for readers and a mutex for writers.


4.2.5 No-starve readers-writers solution

Here is the writer code:

Listing 4.21: No-starve writer solution

1 turnstile.wait ()

2

3 roomEmpty.wait ()

4

5 critical section for writers

6

7 turnstile.signal ()

8

9 roomEmpty.signal ()

If a writer arrives while there are readers in the room, it will block at Line 3, which means that the turnstile will be locked. This will bar readers from entering while a writer is queued. Here is the reader code:

Listing 4.22: No-starve reader solution

turnstile.wait ()
turnstile.signal ()

readers.lock (roomEmpty)
critical section for readers
readers.unlock (roomEmpty)

When the last reader leaves, it signals roomEmpty, unblocking the waiting writer. The writer immediately enters its critical section, since none of the waiting readers can pass the turnstile.
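A minimal Python sketch of the no-starve solution (Listings 4.20 to 4.22), under the same assumptions as before: threading.Semaphore stands in for Semaphore, the Louie class mirrors Listings 4.17 and 4.18, and the inside/violations bookkeeping is a hypothetical check that a writer never shares the room with another thread.

```python
import threading
import time


class Louie:
    """First in locks, last out unlocks (Listings 4.17 and 4.18)."""

    def __init__(self):
        self.counter = 0
        self.mutex = threading.Semaphore(1)

    def lock(self, semaphore):
        with self.mutex:
            self.counter += 1
            if self.counter == 1:
                semaphore.acquire()

    def unlock(self, semaphore):
        with self.mutex:
            self.counter -= 1
            if self.counter == 0:
                semaphore.release()


readers = Louie()                     # counter starts at 0
room_empty = threading.Semaphore(1)
turnstile = threading.Semaphore(1)    # turnstile for readers, mutex for writers

# Hypothetical instrumentation, not part of the solution.
stats_lock = threading.Lock()
inside = {"readers": 0, "writers": 0}
violations = []


def enter(kind):
    with stats_lock:
        inside[kind] += 1
        if inside["writers"] > 1 or (inside["writers"] == 1 and inside["readers"] > 0):
            violations.append(dict(inside))


def leave(kind):
    with stats_lock:
        inside[kind] -= 1


def writer():
    turnstile.acquire()
    room_empty.acquire()        # waits here, holding the turnstile, until the room empties
    enter("writers")
    time.sleep(0.001)           # critical section for writers
    leave("writers")
    turnstile.release()
    room_empty.release()


def reader():
    turnstile.acquire()         # blocks while a writer holds the turnstile
    turnstile.release()
    readers.lock(room_empty)
    enter("readers")
    time.sleep(0.001)           # critical section for readers
    leave("readers")
    readers.unlock(room_empty)


threads = [threading.Thread(target=writer if i % 4 == 0 else reader) for i in range(40)]
for t in threads:
    t.start()
for t in threads:
    t.join()
print("violations:", len(violations))
```

Readers already in the room never touch the turnstile on the way out, so a writer holding the turnstile cannot prevent them from leaving and signalling roomEmpty.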

When the writer exits, it signals turnstile, which unblocks a waiting thread, which could be a reader or a writer. Thus, this solution guarantees that at least one writer gets to proceed, but it is still possible for a reader to enter while there are writers queued.

Depending on the application, it might be a good idea to give more priority to writers. For example, if writers are making time-critical updates to a data structure, it is best to minimize the number of readers that see the old data before the writer has a chance to proceed.

In general, though, it is up to the scheduler, not the programmer, to choose which waiting thread to unblock. Some schedulers use a first-in-first-out queue, which means that threads are unblocked in the same order they queued. Other schedulers choose at random, or according to a priority scheme based on the properties of the waiting threads.

If your programming environment makes it possible to give some threads priority over others, then that is a simple way to address this issue. If not, you will have to find another way.


Puzzle: Write a solution to the readers-writers problem that gives priority to writers. That is, once a writer arrives, no readers should be allowed to enter until all writers have left the system.


4.2.6 Writer-priority readers-writers hint

As usual, the hint is in the form of variables used in the solution.

Listing 4.23: Writer-priority readers-writers initialization

Louie readLouie, writeLouie
Semaphore mutex
Semaphore noReaders = 1
Semaphore noWriters = 1


4.2.7 Writer-priority readers-writers solution

Here is the reader code:

Listing 4.24: Writer-priority reader solution

noReaders.wait ()
readLouie.lock (noWriters)
noReaders.signal ()

critical section for readers
readLouie.unlock (noWriters)

If a reader is in the critical section, it holds noWriters, but it doesn’t hold noReaders. Thus if a writer arrives it can lock noReaders, which will cause subsequent readers to queue.

When the last reader exits, it signals noWriters, allowing any queued writers to proceed.
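The reader side can be exercised on its own with a short Python sketch, assuming threading.Semaphore for Semaphore. The writer is only simulated by the main thread: acquiring no_readers directly stands in for the first writer's writeLouie.lock (noReaders), and a non-blocking acquire of no_writers stands in for its noWriters.wait (). The Event parameters and the demo function are hypothetical hooks that hold a reader inside the critical section so the demo can observe who is blocked.

```python
import threading


class Louie:
    """First in locks, last out unlocks (Listings 4.17 and 4.18)."""

    def __init__(self):
        self.counter = 0
        self.mutex = threading.Semaphore(1)

    def lock(self, semaphore):
        with self.mutex:
            self.counter += 1
            if self.counter == 1:
                semaphore.acquire()

    def unlock(self, semaphore):
        with self.mutex:
            self.counter -= 1
            if self.counter == 0:
                semaphore.release()


read_louie = Louie()
no_readers = threading.Semaphore(1)
no_writers = threading.Semaphore(1)


def reader(in_room, may_leave):
    no_readers.acquire()
    read_louie.lock(no_writers)
    no_readers.release()
    in_room.set()               # hypothetical hook: now in the critical section
    may_leave.wait()            # critical section for readers, held open by the demo
    read_louie.unlock(no_writers)


def demo():
    a_in, a_out = threading.Event(), threading.Event()
    b_in, b_out = threading.Event(), threading.Event()

    a = threading.Thread(target=reader, args=(a_in, a_out))
    a.start()
    a_in.wait()
    writer_blocked = not no_writers.acquire(blocking=False)  # reader A holds noWriters

    no_readers.acquire()        # simulated writer arrives and locks noReaders
    b = threading.Thread(target=reader, args=(b_in, b_out))
    b.start()
    b.join(timeout=0.2)
    reader_queued = b.is_alive() and not b_in.is_set()  # B is stuck on noReaders

    a_out.set()
    a.join()                    # last reader out signals noWriters
    writer_enters = no_writers.acquire(blocking=False)

    no_writers.release()        # simulated writer leaves the critical section
    no_readers.release()        # ... and stops holding noReaders
    b_in.wait()
    b_out.set()
    b.join()
    return writer_blocked, reader_queued, writer_enters


print(demo())  # (True, True, True)
```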

The writer code:

Listing 4.25: Writer-priority writer solution

1 writeLouie.lock (noReaders)

2 noWriters.wait ()

3 critical section for writers