DOCUMENT RESUME

ED 429 110

TM 029 652

AUTHOR

Thompson, Bruce

TITLE

Common Methodology Mistakes in Educational Research,

Revisited, along with a Primer on Both Effect Sizes and the

Bootstrap.

PUB DATE

1999-04-00

NOTE

126p.; Paper presented at the Annual Meeting of the American

Educational Research Association (Montreal, Quebec, Canada,

April 19-23, 1999).

PUB TYPE

Numerical/Quantitative Data (110)

Reports - Descriptive (141)

Speeches/Meeting Papers (150)

EDRS PRICE

MF01/PC06 Plus Postage.

DESCRIPTORS

*Analysis of Variance; *Educational Research; *Effect Size;

*Research Methodology; Research Problems; *Statistical

Significance; Tables (Data)

IDENTIFIERS

*Bootstrap Methods

ABSTRACT

As an extension of B. Thompson's 1998 invited address to the

American Educational Research Association, this paper cites two additional

common faux pas in research methodology and explores some research issues for

the future. These two errors in methodology are the use of univariate

analyses in the presence of multiple outcome variables (with the converse use

of univariate analyses in post hoc explorations of detected multivariate

effects) and the conversion of intervally scaled predictor variables into

nominally scaled data in the service of the "of variance" (OVA) analyses.

Among the research issues to receive further attention in the future is the

appropriate use of statistical significance tests. The use of the descriptive

bootstrap and the various types of effect size from which the researcher

should select when characterizing quantitative results are also discussed.

The paper concludes with an exploration of the conditions necessary and

sufficient for the realization of improved practices in educational research.

Three appendixes contain the Statistical Package for the Social Sciences for

Windows syntax used to analyze data for three tables. (Contains 16 tables, 15

figures, and 173 references.)

(SLD)


aeraad99.wp1 3/29/99

Common Methodology Mistakes in Educational Research, Revisited,

Along with a Primer on both Effect Sizes and the Bootstrap

Bruce Thompson

Texas A&M University 77843-4225

and

Baylor College of Medicine


Invited address presented at the annual meeting of the American Educational Research Association (session #44.25), Montreal, April 22, 1999. Justin Levitov first introduced me to the bootstrap, for which I remain most grateful. I also appreciate the thoughtful comments of Cliff Lunneborg and Russell Thompson on a previous draft of this paper. The author and related reprints may be accessed through Internet URL: "http://acs.tamu.edu/-bbt6147/".

Common Methodology Mistakes -2-


Abstract

The present AERA invited address was solicited to address the theme for the 1999 annual meeting, "On the Threshold of the Millennium: Challenges and Opportunities." The paper represents an extension of my 1998 invited address, and cites two additional common methodology faux pas to complement those enumerated in the previous address. The remainder of these remarks are forward-looking. The paper then considers (a) the proper role of statistical significance tests in contemporary behavioral research, (b) the utility of the descriptive bootstrap, especially as regards the use of "modern" statistics, and (c) the various types of effect sizes from which researchers should be expected to select in characterizing quantitative results. The paper concludes with an exploration of the conditions necessary and sufficient for the realization of improved practices in educational research.


Introduction

In 1993, Carl Kaestle, prior to his term as President of the National Academy of Education, published in the Educational Researcher an article titled, "The Awful Reputation of Education Research." It is noteworthy that the article took as a given the conclusion that educational research suffers an awful reputation, and rather than justifying this conclusion, Kaestle focused instead on exploring the etiology of this reality. For example, Kaestle (1993) noted that the education R&D community is seemingly in perpetual disarray, and that there is a

...lack of consensus--lack of consensus on goals, lack of consensus on research results, and lack of a united front on funding priorities and procedures.... [T]he lack of consensus on goals is more than political; it is the result of a weak field that cannot make tough decisions to do some things and not others, so it does a little of everything... (p. 29)

Although Kaestle (1993) did not find it necessary to provide a warrant for his conclusion that educational research has an awful reputation, others have directly addressed this concern.

The National Academy of Science evaluated educational research generically, and found "methodologically weak research, trivial studies, an infatuation with jargon, and a tendency toward fads with a consequent fragmentation of effort" (Atkinson & Jackson, 1992, p. 20). Others also have argued that "too much of what we see in print is seriously flawed" as regards research methods, and that "much of the work in print ought not to be there" (Tuckman, 1990, p. 22). Gall, Borg and Gall (1996) concurred, noting that "the quality of published studies in education and related disciplines is, unfortunately, not high" (p. 151).

Indeed, empirical studies of published research involving methodology experts as judges corroborate these impressions. For example, Hall, Ward and Comer (1988) and Ward, Hall and Schramm (1975) found that over 40% and over 60%, respectively, of published research was seriously or completely flawed. Wandt (1967) and Vockell and Asher (1974) reported similar results from their empirical studies of the quality of published research. Dissertations, too, have been examined, and have been found methodologically wanting (cf. Thompson, 1988a, 1994a).

Researchers have also questioned the ecological validity of both quantitative and qualitative educational studies. For example, Elliot Eisner studied two volumes of the flagship journal of the American Educational Research Association, the American Educational Research Journal (AERJ). He reported that,

The median experimental treatment time for seven of the 15 experimental studies that reported experimental treatment time in Volume 18 of the AERJ is 1 hour and 15 minutes. I suppose that we should take some comfort in the fact that this represents a 66 percent increase over a 3-year period. In 1978 the median experimental treatment time per subject was 45 minutes. (Eisner, 1983, p. 14)

Similarly, Fetterman (1982) studied major qualitative projects, and reported that, "In one study, labeled 'An ethnographic study of...,' observers were on site at only one point in time for five days. In a[nother] national study purporting to be ethnographic, once-a-week, on-site observations were made for 4 months" (p. 17).

None of this is to deny that educational research, whatever its methodological and other limits, has influenced and informed educational practice (cf. Gage, 1985; Travers, 1983). Even a methodologically flawed study may still contribute something to our understanding of educational phenomena. As Glass (1979) noted, "Our research literature in education is not of the highest quality, but I suspect that it is good enough on most topics" (p. 12).

However, as I pointed out in a 1998 AERA invited address, the

problem with methodologically flawed educational studies is that

these flaws are entirely gratuitous. I argued that

incorrect analyses arise from doctoral methodology instruction that teaches research methods as series of rotely-followed routines, as against thoughtful elements of a reflective enterprise; from doctoral curricula that seemingly have less and less room for quantitative statistics and measurement content, even while our knowledge base in these areas is burgeoning (Aiken, West, Sechrest, Reno, with Roediger, Scarr, Kazdin & Sherman, 1990; Pedhazur & Schmelkin, 1991, pp. 2-3); and, in some cases, from an unfortunate atavistic impulse to somehow escape responsibility for analytic decisions by justifying choices, sans rationale, solely on the basis that the choices are common or traditional. (Thompson, 1998a, p. 4)

Such concerns have certainly been voiced by others. For example, following the 1998 annual AERA meeting, one conference attendee wrote AERA President Alan Schoenfeld to complain that

At [the 1998 annual meeting] we had a hard time finding rigorous research that reported actual conclusions. Perhaps we should rename the association the American Educational Discussion Association.... This is a serious problem. By encouraging anything that passes for inquiry to be a valid way of discovering answers to complex questions, we support a culture of intuition and artistry rather than building reliable research bases and robust theories. Incidentally, theory was even harder to find than good research. (Anonymous, 1998, p. 41)

Subsequently, Schoenfeld appointed a new AERA committee, the Research Advisory Committee, which currently is chaired by Edmund Gordon. The current members of the Committee are: Ann Brown, Gary Fenstermacher, Eugene Garcia, Robert Glaser, James Greeno, Margaret LeCompte, Richard Shavelson, Vanessa Siddle Walker, and Alan Schoenfeld, ex officio, Lorrie Shepard, ex officio, and William Russell, ex officio. The Committee is charged to strengthen the research-related capacity of AERA and its members, coordinate its activities with appropriate AERA programs, and be entrepreneurial in nature. [In some respects, the AERA Research Advisory Committee has a mission similar to that of the APA Task Force on Statistical Inference, which was appointed in 1996 (Azar, 1997; Shea, 1996).]

AERA President Alan Schoenfeld also appointed Geoffrey Saxe the 1999 annual meeting program chair. Together, they then described the theme for the AERA annual meeting in Montreal:

As we thought about possible themes for the upcoming annual meeting, we were pressed by a sense of timeliness and urgency. With regard to timeliness, ...the calendar year for the next annual meeting is 1999, the year that heralds the new millennium.... It's a propitious time to think about what we know, what we need to know, and where we should be heading. Thus, our overarching theme [for the 1999 annual meeting] is "On the Threshold of the Millennium: Challenges and Opportunities."

There is also a sense of urgency. Like many others, we see the field of education at a point of critical choices--in some arenas, one might say crises. (Saxe & Schoenfeld, 1998, p. 41)

The present paper was among those invited by various divisions to address this theme, and is an extension of my 1998 AERA address (Thompson, 1998a).

Purpose of the Present Paper

In my 1998 AERA invited address I advocated the improvement of educational research via the eradication of five identified faux pas:

(1) the use of stepwise methods;

(2) the failure to consider in result interpretation the context specificity of analytic weights (e.g., regression beta weights, factor pattern coefficients, discriminant function coefficients, canonical function coefficients) that are part of all parametric quantitative analyses;

(3) the failure to interpret both weights and structure coefficients as part of result interpretation;

(4) the failure to recognize that reliability is a characteristic of scores, and not of tests; and

(5) the incorrect interpretation of statistical significance and the related failure to report and interpret the effect sizes present in all quantitative analyses.

Two Additional Methodology Faux Pas

The present didactic essay elaborates two additional common

methodology errors to delineate a constellation of seven cardinal

sins of analytic research practice:

(6) the use of univariate analyses in the presence of multiple outcome variables, and the converse use of univariate analyses in post hoc explorations of detected multivariate effects; and

(7) the conversion of intervally-scaled predictor variables into nominally-scaled data in service of OVA (i.e., ANOVA, ANCOVA, MANOVA, MANCOVA) analyses.

However, the present paper is more than a further elaboration

of bad behaviors. Here the discussion of these two errors focuses

on driving home two important realizations that should undergird

best methodological practice:

1. All statistical analyses of scores on measured/observed variables actually focus on correlational analyses of scores on synthetic/latent variables derived by applying weights to the observed variables; and

2. The researcher's fundamental task in deriving defensible results is to employ an analytic model that matches the researcher's (too often implicit) model of reality.

These two realizations will provide a conceptual foundation for the treatment in the remainder of the paper.

Focus on the Future: Improving Educational Research

Although the focus on common methodological faux pas has some merit, in keeping with the theme of this 1999 annual meeting of AERA, the present invited address then turns toward the constructive portrayal of a brighter research future. Three issues are addressed. First, the proper role of statistical significance testing in future practice is explored. Second, the use of so-called "internal replicability" analyses in the form of the bootstrap is described. As part of this discussion some "modern" statistics are briefly discussed. Third, the computation and interpretation of effect sizes are described.

Other methods faux pas and other methods improvements might both have been elaborated. However, the proposed changes would result in considerable improvement in future educational research. In my view, (a) informed use of statistical tests, (b) the more frequent use of external and internal replicability analyses, and especially (c) required reporting and interpretation of effect sizes in all quantitative research are both necessary and sufficient conditions for realizing improvements.

Essentials for Realizing Improvements

The essay ends by considering how fields move and what must be

done to realize these potential improvements. In my view, AERA must

exercise visible and coherent academic leadership if change is to

occur. To date, such leadership has not often been within the

organization's traditions.

Faux Pas #6: Univariate as Against Multivariate Analyses

Too often, educational researchers invoke a series of univariate analyses (e.g., ANOVA, regression) to analyze multiple dependent variable scores from a single sample of participants. Conversely, too often researchers who correctly select a multivariate analysis invoke univariate analyses post hoc in their investigation of the origins of multivariate effects. Here it will be demonstrated once again, using heuristic data to make the discussion completely concrete, that in both cases these choices may lead to serious interpretation errors.

The fundamental conceptual emphasis of this discussion, as

previously noted, is on making the point that:

1. All statistical analyses of scores on measured/observed variables actually focus on correlational analyses of scores on synthetic/latent variables derived by applying weights to the observed variables.

Two small heuristic data sets are employed to illustrate the relevant dynamics, respectively, for the univariate (i.e., single dependent/outcome variable) and multivariate (i.e., multiple outcome variables) cases.


Univariate Case

Table 1 presents a heuristic data set involving scores on three measured/observed variables: Y, X1, and X2. These variables are called "measured" (or "observed") because they are directly measured, without any application of additive or multiplicative weights, via rulers, scales, or psychometric tools.

INSERT TABLE 1 ABOUT HERE.

However, ALL parametric analyses apply weights to the measured/observed variables to estimate scores for each person on synthetic or latent variables. This is true notwithstanding the fact that for some statistical analyses (e.g., ANOVA) the weights are not printed by some statistical packages. As I have noted elsewhere, the weights in different analyses

...are all analogous, but are given different names in different analyses (e.g., beta weights in regression, pattern coefficients in factor analysis, discriminant function coefficients in discriminant analysis, and canonical function coefficients in canonical correlation analysis), mainly to obfuscate the commonalities of [all] parametric methods, and to confuse graduate students. (Thompson, 1992a, pp. 906-907)

The synthetic variables derived by applying weights to the measured variables then become the focus of the statistical analyses.

The fact that all analyses are part of one single General Linear Model (GLM) family is a fundamental foundational understanding essential (in my view) to the informed selection of analytic methods. The seminal readings have been provided by Cohen (1968) viz. the univariate case, by Knapp (1978) viz. the multivariate case, and by Bagozzi, Fornell and Larcker (1981) regarding the most general case of the GLM: structural equation modeling. Related heuristic demonstrations of General Linear Model dynamics have been offered by Fan (1996, 1997) and Thompson (1984, 1991, 1998a, in press-a).

In the multiple regression case, a given person's score on the measured/observed variable $Y_i$ is estimated as the synthetic/latent variable $\hat{Y}_i$. The predicted outcome score for a given person equals $\hat{Y}_i = a + b_1(X_{1i}) + b_2(X_{2i})$, which for these data, as reported in Figure 1, equals $-581.735382 + [1.301899 \times X_{1i}] + [0.862072 \times X_{2i}]$. For example, for person 1, $\hat{Y}_1 = -581.735382 + [1.301899 \times 392] + [0.862072 \times 573] = 422.58$.
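The arithmetic for person 1 can be reproduced with a few lines of Python. This is only a sketch: the function name `predict_y` is a hypothetical label introduced here, while the intercept, the two weights, and person 1's scores (392 and 573) are the values quoted in the text.

```python
# Weights quoted in the text from the Figure 1 regression output.
INTERCEPT = -581.735382   # a
B1 = 1.301899             # weight applied to X1
B2 = 0.862072             # weight applied to X2


def predict_y(x1: float, x2: float) -> float:
    """Return the synthetic (predicted) score: a + b1*X1 + b2*X2."""
    return INTERCEPT + B1 * x1 + B2 * x2


# Person 1's measured scores as quoted in the text.
y_hat_person1 = predict_y(392, 573)
print(f"Predicted Y for person 1: {y_hat_person1:.2f}")
```

The additive constant must be included to arrive at 422.58; the two weighted terms alone sum to roughly 1004.31.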

INSERT FIGURE 1 ABOUT HERE.

Some Noteworthy Revelations. The "ordinary least squares" (OLS) estimation used in classical regression analysis optimizes the fit in the sample of each $\hat{Y}_i$ to each $Y_i$ score. Consequently, as noted by Thompson (1992b), even if all the predictors are useless, the means of $\hat{Y}$ and Y will always be equal (here 500.25), and the mean of the e scores ($e_i = Y_i - \hat{Y}_i$) will always be zero. These expectations are confirmed in the Table 1 results.

It is also worth noting that the sum of squares (i.e., the sum of the squared deviations of each person's score from the mean) of the $\hat{Y}$ scores (i.e., 167,218.50) computed in Table 1 matches the "regression" sum of squares (variously synonymously called "explained," "model," "between," so as to confuse the graduate students) reported in the Figure 1 SPSS output. Furthermore, the sum of squares of the e scores reported in Table 1 (i.e., 32,821.26) exactly matches the "residual" sum of squares (variously called "error," "unexplained," and "residual") value reported in the Figure 1 SPSS output.

It is especially noteworthy that the sum of squares explained (i.e., 167,218.50) divided by the sum of squares of the Y scores (i.e., the sum of squares "total" = 167,218.50 + 32,821.26 = 200,039.75) tells us the proportion of the variance in the Y scores that we can predict given knowledge of the X1 and the X2 scores. For these data the proportion is 167,218.50 / 200,039.75 = .83593. This formula is one of several formulas with which to compute the uncorrected regression effect size, the multiple $R^2$.
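The effect-size computation just described can be checked directly from the two sums of squares quoted in the text. A minimal sketch follows; the variable names are illustrative choices, and the only inputs are the reported "regression" and "residual" sums of squares.

```python
from math import sqrt

# Sums of squares quoted in the text (Table 1 / Figure 1).
ss_regression = 167218.50   # sum of squares of the predicted (Y-hat) scores
ss_residual = 32821.26      # sum of squares of the e scores

# The total sum of squares is the sum of the two partitions.
ss_total = ss_regression + ss_residual

# Uncorrected effect size: proportion of Y variance that is predictable.
r_squared = ss_regression / ss_total
multiple_r = sqrt(r_squared)

print(f"R^2 = {r_squared:.5f}")
print(f"R   = {multiple_r:.5f}")
```

The same ratio of "explained" to "total" sum of squares applies whatever the analysis is called, which is the point the text goes on to make about ANOVA.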

Indeed, for the univariate case, because ALL analyses are correlational, an $r^2$ analog of this effect size can always be computed, using this formula across analyses. However, in ANOVA, for example, when we compute this effect size using this generic formula, we call the result eta$^2$ ($\eta^2$; or synonymously the correlation ratio [not the correlation coefficient!]), primarily to confuse the graduate students.

Even More Important Revelations. Figure 2 presents the correlation coefficients involving all possible pairs of the five (three measured, two synthetic) variables. Several additional revelations become obvious.


INSERT FIGURE 2 ABOUT HERE.

First, note that the $\hat{Y}$ scores and the e scores are perfectly uncorrelated. This will ALWAYS be the case, by definition, since the $\hat{Y}$ scores are the aspects of the Y scores that the predictors can explain or predict, and the e scores are the aspects of the Y scores that the predictors cannot explain or predict (i.e., because $e_i$ is defined as $Y_i - \hat{Y}_i$, therefore $r_{\hat{Y} \times e} = 0$). Similarly, the measured predictor variables (here X1 and X2) always have correlations of zero with the e scores, again because the e scores by definition are the parts of the Y scores that the predictors cannot explain.
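These OLS properties can be verified numerically. The sketch below fits a two-predictor regression by solving the centered normal equations in plain Python and then checks the claims made above. The data are hypothetical and were invented for illustration (they are not the Table 1 data), and the helper names (`mean`, `pearson_r`, `ols_two_predictors`) are likewise assumptions of this sketch.

```python
from math import sqrt


def mean(values):
    return sum(values) / len(values)


def pearson_r(a, b):
    """Pearson product-moment correlation of two equal-length lists."""
    ma, mb = mean(a), mean(b)
    sab = sum((u - ma) * (v - mb) for u, v in zip(a, b))
    saa = sum((u - ma) ** 2 for u in a)
    sbb = sum((v - mb) ** 2 for v in b)
    return sab / sqrt(saa * sbb)


def ols_two_predictors(y, x1, x2):
    """Return (a, b1, b2) from the centered normal equations."""
    my, m1, m2 = mean(y), mean(x1), mean(x2)
    s11 = sum((u - m1) ** 2 for u in x1)
    s22 = sum((v - m2) ** 2 for v in x2)
    s12 = sum((u - m1) * (v - m2) for u, v in zip(x1, x2))
    s1y = sum((u - m1) * (w - my) for u, w in zip(x1, y))
    s2y = sum((v - m2) * (w - my) for v, w in zip(x2, y))
    det = s11 * s22 - s12 ** 2
    b1 = (s22 * s1y - s12 * s2y) / det
    b2 = (s11 * s2y - s12 * s1y) / det
    return my - b1 * m1 - b2 * m2, b1, b2


# Hypothetical scores for eight people (NOT the Table 1 data).
y = [3.0, 5.0, 4.0, 8.0, 9.0, 7.0, 12.0, 10.0]
x1 = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0, 7.0, 8.0]
x2 = [2.0, 1.0, 4.0, 3.0, 6.0, 5.0, 8.0, 9.0]

a, b1, b2 = ols_two_predictors(y, x1, x2)
y_hat = [a + b1 * u + b2 * v for u, v in zip(x1, x2)]   # synthetic scores
e = [obs - pred for obs, pred in zip(y, y_hat)]          # error scores

ss_total = sum((obs - mean(y)) ** 2 for obs in y)
ss_regression = sum((pred - mean(y_hat)) ** 2 for pred in y_hat)
r_squared = ss_regression / ss_total

print(f"mean(Y) = {mean(y):.4f}, mean(Y-hat) = {mean(y_hat):.4f}")
print(f"mean(e) = {mean(e):.2e}")
print(f"r(Y-hat, e) = {pearson_r(y_hat, e):.2e}")
print(f"r(X1, e) = {pearson_r(x1, e):.2e}, r(X2, e) = {pearson_r(x2, e):.2e}")
print(f"R^2 = {r_squared:.4f}, r(Y, Y-hat)^2 = {pearson_r(y, y_hat) ** 2:.4f}")
```

Up to floating-point error, the printed correlations with the e scores are zero, and the squared correlation between Y and the synthetic scores equals the ratio of the regression to the total sum of squares.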

Second, note that the $r_{Y \times \hat{Y}}$ reported in Figure 2 (i.e., .9143) matches the multiple R reported in Figure 1 (i.e., .91429), except for the arbitrary decision by different computer programs to present these statistics to different numbers of decimal places. The equality makes sense conceptually, if we think of the $\hat{Y}$ scores as being the part of the predictors useful in predicting/explaining the Y scores, discarding all the parts of the measured predictors that are not useful (about which we are completely uninterested, because the focus of the analysis is solely on the outcome variable).

This last revelation is extremely important to a conceptual understanding of statistical analyses. The fact that $R_{Y \text{ with } X1, X2} = r_{Y \times \hat{Y}}$ means that the synthetic variable, $\hat{Y}$, is actually the focus of the analysis. Indeed, synthetic variables are ALWAYS the real focus of statistical analyses!


This makes sense, when we realize that our measures are only indicators of our psychological constructs, and that what we really care about in educational research is not the observed scores on our measurement tools per se, but instead the underlying construct. For example, if I wish to improve the self-concepts of third-grade elementary students, what I really care about is improving their unobservable self-concepts, and not the scores on an imperfect measure of this construct, which I only use as a vehicle to estimate the latent construct of interest, because the construct cannot be directly observed.

Third, the correlations of the measured predictor variables with the synthetic variable (i.e., .7512 and -.0741) are called "structure" coefficients. These can also be derived by computation (cf. Thompson & Borrello, 1985) as $r_S = r_{Y \text{ with } X} / R$ (e.g., .6868 / .91429 = .7512). [Due to a strategic error on the part of methodology professors, who convene annually in a secret coven to generate more statistical terminology with which to confuse the graduate students, for some reason the mathematically analogous structure coefficients across all analyses are uniformly called by the same name--an oversight that will doubtless soon be corrected.]
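The structure-coefficient formula above is a one-line division. The sketch below applies it to the correlations quoted in this paper (.6868 for X1, -.0677 for X2, and R = .91429); the function name `structure_coefficient` is a hypothetical label. Because the inputs are rounded, the X2 result comes out near -.0740 rather than exactly the reported -.0741.

```python
def structure_coefficient(r_y_with_x: float, multiple_r: float) -> float:
    """Correlation of a predictor with Y-hat: r(Y, X) divided by the multiple R."""
    if multiple_r == 0:
        raise ValueError("multiple R must be nonzero")
    return r_y_with_x / multiple_r


MULTIPLE_R = 0.91429   # multiple R quoted in the text

rs_x1 = structure_coefficient(0.6868, MULTIPLE_R)    # r between Y and X1
rs_x2 = structure_coefficient(-0.0677, MULTIPLE_R)   # r between Y and X2 (rounded input)

print(f"structure coefficient for X1: {rs_x1:.4f}")
print(f"structure coefficient for X2: {rs_x2:.3f}")
```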

The reason structure coefficients are called "structure" coefficients is that these coefficients provide insight regarding what is the nature or the structure of the underlying synthetic variables of the actual research focus. Although space precludes further detail here, I regard the interpretation of structure coefficients as being essential in most research applications (Thompson, 1997b, 1998a; Thompson & Borrello, 1985). Some educational researchers erroneously believe that these coefficients are unimportant insofar as they are not reported for all analyses by some computer packages; these researchers incorrectly believe that SPSS and other computer packages were written in a sole authorship venture by a benevolent God who has elected judiciously to report on printouts (a) all results of interest and (b) only the results of genuine interest.

The Critical, Essential Revelation. Figure 2 also provides the basis for delineating a paradox which, once resolved, leads to a fundamentally important insight regarding statistical analyses. Notice for these data the $r^2$ between Y and X1 is $.6868^2$ = 47.17% and the $r^2$ between Y and X2 is $(-.0677)^2$ = 0.46%. The sum of these two values is .4763.

Yet, as reported in Figures 1 and 2, the $R^2$ value for these data is $.91429^2$ = 83.593%, a value approaching the mathematical limen for $R^2$. How can the multiple $R^2$ value (83.593%) be not only larger, but nearly twice as large as the sum of the $r^2$ values of the two predictor variables with Y?

These data illustrate a "suppressor" effect. These effects were first noted in World War II when psychologists used paper-and-pencil measures of spatial and mechanical ability to predict ability to pilot planes. Counterintuitively, it was discovered that verbal ability, which is essentially unrelated to pilot ability, nevertheless substantially improved the $R^2$ when used as a predictor in conjunction with spatial and mechanical ability scores. As Horst (1966, p. 355) explained, "To include the verbal score with a negative weight served to suppress or subtract irrelevant [measurement artifact] ability [in the spatial and mechanical ability scores], and to discount the scores of those who did well on the test simply because of their verbal ability rather than because of abilities required for success in pilot training."
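A suppressor effect can be illustrated with the standard two-predictor identity $R^2 = (r_{y1}^2 + r_{y2}^2 - 2 r_{y1} r_{y2} r_{12}) / (1 - r_{12}^2)$. The sketch below is a hedged illustration only: the three correlations are hypothetical values chosen for the example (this section does not report the correlation between X1 and X2), and the function name is an assumption.

```python
def r_squared_two_predictors(r_y1: float, r_y2: float, r_12: float) -> float:
    """Multiple R^2 for two predictors, computed from the three bivariate r's."""
    if abs(r_12) >= 1:
        raise ValueError("predictors must not be perfectly correlated")
    return (r_y1 ** 2 + r_y2 ** 2 - 2 * r_y1 * r_y2 * r_12) / (1 - r_12 ** 2)


# Hypothetical correlations (NOT taken from Figure 2):
r_y1 = 0.5    # outcome with predictor 1
r_y2 = 0.0    # outcome with predictor 2: a "useless" predictor on its own
r_12 = 0.7    # predictor 1 with predictor 2

sum_of_r_squares = r_y1 ** 2 + r_y2 ** 2
multiple_r_squared = r_squared_two_predictors(r_y1, r_y2, r_12)

print(f"sum of bivariate r^2 values: {sum_of_r_squares:.4f}")
print(f"multiple R^2:                {multiple_r_squared:.4f}")
```

In this hypothetical case the second predictor has no correlation with the outcome, yet including it nearly doubles the variance explained, because it removes outcome-irrelevant variance from the first predictor.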

Thus, suppressor effects are desirable, notwithstanding what some may deem a pejorative name, because suppressor effects actually increase effect sizes. Henard (1998) and Lancaster (in press) provide readable elaborations. All this discussion leads to the extremely important point that

The latent or synthetic variables analyzed in all parametric methods are always more than the sum of their constituent parts. If we only look at observed variables, such as by only examining a series of bivariate r's, we can easily under or overestimate the actual effects that are embedded within our data. We must use analytic methods that honor the complexities of the reality that we purportedly wish to study--a reality in which variables can interact in all sorts of complex and counterintuitive ways. (Thompson, 1992b, pp. 13-14, emphasis in original)

Multivariate Case

Table 2 presents heuristic data for 10 people in each of two groups on two measured/observed outcome/response variables, X and Y. These data are somewhat similar to those reported by Fish (1988), who argued that multivariate analyses are usually vital. The Table 2 data are used here to illustrate that (a) when you have more than one outcome variable, multivariate analyses may be essential, and (b) when you do a multivariate analysis, you must not use a univariate method post hoc to explore the detected multivariate effects.

INSERT TABLE 2 ABOUT HERE.

For these heuristic data, the outcome scores of X and Y have exactly the same variance in both groups 1 and 2, as reported in the bottom of Table 2. This exactly equal SD (and variance and sum of squares) means that the ANOVA "homogeneity of variance" assumption (called this because this characterization sounds fancier than simply saying "the outcome variable scores were equally 'spread out' in all groups") was perfectly met, and therefore the calculated ANOVA F test results are exactly accurate for these data. Furthermore, the analogous multivariate "homogeneity of dispersion matrices" assumption (meaning simply that the variance/covariance matrices in the two groups were equal) was also perfectly met, and therefore the MANOVA F tests are exactly accurate as well. In short, the demonstrations here are not contaminated by the failure to meet statistical assumptions!

Figure 3 presents ANOVA results for separate analyses of the X and Y scores presented in Table 2. For both X and Y, the two means do not differ to a statistically significant degree. In fact, for both variables the $p_{CALCULATED}$ values were .774. Furthermore, the eta$^2$ effect sizes were both computed to be 0.469% (e.g., 5.0 / [5.0 + 1061.0] = 5.0 / 1065.0 = .00469). Thus, the two sets of ANOVA results are not statistically significant and they both involve extremely small effect sizes.


INSERT FIGURE 3 ABOUT HERE.

However, as also reported in the Figure 3 results, a MANOVA/Descriptive Discriminant Analysis (DDA; for a one-way MANOVA, MANOVA and DDA yield the same results, but the DDA provides more detailed analysis--see Huberty, 1994; Huberty & Barton, 1989; Thompson, 1995b) of the same data yields a $p_{CALCULATED}$ value of .000239, and an eta$^2$ of 62.5%. Clearly, the resulting interpretation of the same data would be night-and-day different for these two sets of analyses. Again, the synthetic variables in some senses can become more than the sum of their parts, as was also the case in the previous heuristic demonstration.

Table 2 reports these latent variable scores for the 20 participants, derived by applying the weights (-1.225 and 1.225) reported in Figure 3 to the two measured outcome variables. For heuristic purposes only, the scores on the synthetic variable labelled "DSCORE" were then subjected to the ANOVA reported in Figure 4. As reported in Figure 4, this analysis of the multivariate synthetic variable, a weighted aggregation of the outcome variables X and Y, yields the same eta$^2$ effect size (i.e., 62.5%) reported in Figure 3 for the DDA/MANOVA results. Again, all statistical analyses actually focus on the synthetic/latent variables actually derived in the analyses, quod erat demonstrandum.
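The logic of analyzing the weighted synthetic variable can be sketched in a few lines. The weights (-1.225 and 1.225) are those quoted above from Figure 3, but the scores below are hypothetical (the Table 2 data are not reproduced in this section), so the resulting effect sizes will not match the 0.469% and 62.5% reported in the paper. The helper names `eta_squared` and `dscore` are illustrative assumptions.

```python
def eta_squared(groups):
    """One-way ANOVA effect size: SS between groups / SS total."""
    scores = [s for g in groups for s in g]
    grand_mean = sum(scores) / len(scores)
    ss_between = sum(len(g) * (sum(g) / len(g) - grand_mean) ** 2 for g in groups)
    ss_total = sum((s - grand_mean) ** 2 for s in scores)
    return ss_between / ss_total


# Hypothetical data: 10 people in each of two groups (NOT the Table 2 data).
noise = [0.3, -0.3] * 5
x_g1 = [float(i) for i in range(1, 11)]
y_g1 = [x + n for x, n in zip(x_g1, noise)]
x_g2 = [x + 0.5 for x in x_g1]   # group 2 is slightly higher on X
y_g2 = [y - 0.5 for y in y_g1]   # and slightly lower on Y

# Weights quoted in the text from the Figure 3 output.
W_X, W_Y = -1.225, 1.225


def dscore(xs, ys):
    """Synthetic variable: weighted aggregate of the two measured outcomes."""
    return [W_X * x + W_Y * y for x, y in zip(xs, ys)]


eta_x = eta_squared([x_g1, x_g2])
eta_y = eta_squared([y_g1, y_g2])
eta_d = eta_squared([dscore(x_g1, y_g1), dscore(x_g2, y_g2)])

print(f"eta^2 for X alone:  {eta_x:.4f}")
print(f"eta^2 for Y alone:  {eta_y:.4f}")
print(f"eta^2 for DSCORE:   {eta_d:.4f}")
```

With these invented scores each outcome analyzed alone shows a negligible group difference, while the weighted combination separates the groups strongly, which is the pattern the heuristic example is designed to show.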

INSERT FIGURE 4 ABOUT HERE.
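The logic of analyzing a weighted synthetic variable can be sketched as follows. The scores below are hypothetical and are NOT the Table 2 data; only the weights (-1.225 and 1.225) are taken from the text, and the helper `eta_squared` is an illustrative name. The sketch assumes a two-group design in which X and Y are strongly related within groups but the groups differ in opposite directions on the two outcomes.

```python
from statistics import fmean


def eta_squared(groups):
    """SS_between / SS_total for a list of score lists, one list per group."""
    all_scores = [s for g in groups for s in g]
    grand = fmean(all_scores)
    ss_total = sum((s - grand) ** 2 for s in all_scores)
    ss_between = sum(len(g) * (fmean(g) - grand) ** 2 for g in groups)
    return ss_between / ss_total


# Hypothetical scores, 10 participants per group (not the Table 2 data).
noise = [0.3, -0.3] * 5
x1 = [float(i) for i in range(1, 11)]
y1 = [x + e for x, e in zip(x1, noise)]
x2 = [i + 0.5 for i in range(1, 11)]
y2 = [i - 0.5 + e for i, e in zip(range(1, 11), noise)]

# Weights quoted in the text; applied here to the hypothetical scores.
W_X, W_Y = -1.225, 1.225
d1 = [W_X * x + W_Y * y for x, y in zip(x1, y1)]
d2 = [W_X * x + W_Y * y for x, y in zip(x2, y2)]

eta2_x = eta_squared([x1, x2])  # univariate effect for X alone
eta2_y = eta_squared([y1, y2])  # univariate effect for Y alone
eta2_d = eta_squared([d1, d2])  # effect for the synthetic variable
print(f"X: {eta2_x:.3f}  Y: {eta2_y:.3f}  synthetic: {eta2_d:.3f}")
```

In this contrived case each measured variable shows a trivial effect, while the weighted composite shows a large one, which is the pattern the heuristic example is meant to convey.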

The present heuristic example can be framed in either of two


ways,

both of which highlight common errors

in

contemporary

analytic practice. The first error involves conducting multiple

univariate analyses to evaluate multivariate data; the second

error

involves using univariate analyses

(e.g., ANOVAs)

in post hoc

analyses of detected multivariate effects.

Using Several Univariate Analyses to Analyze Multivariate

Data. The present example might be framed as an illustration of

a

researcher conducting only two ANOVAs to analyze the two sets of

dependent variable scores.

The researcher here would find no statistically significant (both
pCALCULATED values = .774) nor (probably, depending upon the context
of the study and researcher personal values) any noteworthy effect
(both eta2 values = 0.469%). This researcher would remain oblivious
to the statistically significant effect (pCALCULATED = .000239) and
huge (as regards typicality; see Cohen, 1988) effect size
(multivariate eta2 = 62.5%).

One potentially noteworthy argument in favor of employing
multivariate methods with data involving more than one outcome
variable involves the inflation of "experimentwise" Type I error
rates ($\alpha_{EW}$; i.e., the probability of making one or more Type I
errors in a set of hypothesis tests--see Thompson, 1994d). At the
extreme, when the outcome variables or the hypotheses (as in a
balanced ANOVA design) are perfectly uncorrelated, $\alpha_{EW}$ is a
function of the "testwise" alpha level ($\alpha_{TW}$) and the number of
outcome variables or hypotheses tested (k), and equals

$$\alpha_{EW} = 1 - (1 - \alpha_{TW})^{k}.$$

Because this function is exponential, experimentwise error rates


can

inflate quite rapidly!

[Imagine my consternation when

I

detected a local dissertation invoking

more than 1,000 univariate

statistical significance tests (Thompson, 1994a).]
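The inflation described above can be tabulated directly from the formula for perfectly uncorrelated tests. This is a minimal sketch; the function name `experimentwise_alpha` and the particular values of k are illustrative assumptions.

```python
def experimentwise_alpha(testwise_alpha: float, k: int) -> float:
    """Probability of one or more Type I errors across k uncorrelated tests."""
    return 1.0 - (1.0 - testwise_alpha) ** k


# Testwise alpha of .05 across an increasing number of tests.
for k in (1, 2, 5, 10, 50, 1000):
    print(f"k = {k:>4}: experimentwise alpha = {experimentwise_alpha(0.05, k):.4f}")
```

With 1,000 tests at a testwise alpha of .05, the experimentwise rate is for practical purposes 1, which gives some sense of the consternation noted above.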

One way to control the inflation of experimentwise error is to
use a "Bonferroni correction" which adjusts the $\alpha_{TW}$ downward so as
to minimize the final $\alpha_{EW}$. Of course, one consequence of this
strategy is lessened statistical power against Type II error.

However, the primary argument against using a series of univariate

analyses to evaluate data involving multiple outcome variables

does

not invoke statistical significance testing concepts.
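The Bonferroni adjustment mentioned above can be sketched as follows, assuming the usual form in which the desired experimentwise alpha is divided by the number of tests. Both function names are hypothetical, and the second function again assumes perfectly uncorrelated tests.

```python
def bonferroni_testwise_alpha(experimentwise_alpha: float, k: int) -> float:
    """Testwise alpha after dividing the desired experimentwise alpha by k."""
    return experimentwise_alpha / k


def resulting_experimentwise_alpha(testwise_alpha: float, k: int) -> float:
    """Experimentwise alpha implied by a testwise alpha for k uncorrelated tests."""
    return 1.0 - (1.0 - testwise_alpha) ** k


k = 10
testwise = bonferroni_testwise_alpha(0.05, k)
achieved = resulting_experimentwise_alpha(testwise, k)
print(f"testwise alpha = {testwise:.4f}, experimentwise alpha = {achieved:.4f}")
```

The much smaller testwise alpha is what produces the loss of power against Type II error noted in the text.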

Multivariate methods are often vital in behavioral

research

simply because multivariate methods best honor the

reality to which

the researcher is purportedly trying to generalize. Implicit

within

every analysis is an analytic model.

Each researcher also has a

presumptive model of what reality is believed to

be like.

It is

critical that our analytic models and

our models of reality match,

otherwise our conclusions will be invalid.

It is generally best to

consciously reflect on the fit of these two models

whenever we do

research.

Of course, researchers with different models of reality

may make different analytic choices, but this is not disturbing

because analytic choices are philosophically driven

anyway (Cliff,

1987, p. 349).

My personal model of reality is one "in which the

researcher

cares about multiple outcomes, in which most outcomes have

multiple

causes, and in which most causes have multiple effects"

(Thompson,

1986b, p. 9). Given such a model of reality, it is

critical that

the full network of all possible relationships

be considered


simultaneously within the analysis. Otherwise, the Figure 3
multivariate effects, presumptively real given my model of reality,
would go undetected. Thus, Tatsuoka's (1973b) previous remarks
remain telling:

The often-heard argument, "I'm more interested in

seeing how each variable, in its

own right, affects

the outcome" overlooks the fact that

any variable

taken in

isolation

may

affect

the

criterion

differently from the way it will act in the

company

of other variables. It also overlooks the fact that

multivariate analysis--precisely by considering

all

the variables simultaneously--can throw light

on how

each one contributes to the relation.

(p. 273)

For these various reasons empirical studies (Emmons, Stallings &
Layne, 1990) show that, "In the last 20 years, the use of
multivariate statistics has become commonplace" (Grimm & Yarnold,
1995, p. vii).

Using Univariate Analyses post hoc to Investigate Detected
Multivariate Effects. In ANOVA and ANCOVA, post hoc (also called "a
posteriori," "unplanned," and "unfocused") contrasts (also called
"comparisons") are necessary to explore the origins of detected
omnibus effects iff ("if and only if") (a) an omnibus effect is
statistically significant (but see Barnette & McLean, 1998) and (b)
the way (also called an OVA "factor", but this alternative name
tends to become confused with a factor analysis "factor") has more
than two levels.

However, in MANOVA and MANCOVA post hoc tests are necessary to


evaluate (a) which groups differ (b) as regards which one or more

outcome variables. Even in a two-level way (or "factor"), if the

effect is statistically significant, further analyses are necessary

to determine on which one or more outcome/response variables the

two groups differ. An alarming number of researchers employ ANOVA
as a post hoc analysis to explore detected MANOVA effects
(Thompson, 1999b).

Unfortunately, as the previous example made clear, because the

two post hoc ANOVAs would fail to explain where the incredibly

large and statistically significant MANOVA effect originated, ANOVA

is not a suitable MANOVA post hoc analysis. As Borgen and Seling

(1978) argued, "When data truly are multivariate, as implied by the

application of MANOVA, a multivariate follow-up technique seems

necessary to 'discover' the complexity of the data" (p. 696). It is

simply illogical to first declare interest in a multivariate

omnibus system of variables, and to then explore detected effects

in this multivariate world by conducting non-multivariate tests!

Faux Pas #7: Discarding Variance in Intervally-Scaled Variables

Historically, OVA methods (i.e., ANOVA, ANCOVA, MANOVA, MANCOVA)
dominated the social scientist's analytic landscape
(Edgington, 1964, 1974). However, more recently the proportion of
uses of OVA methods has declined (cf. Elmore & Woehlke, 1988;
Goodwin & Goodwin, 1985; Willson, 1980). Planned contrasts
(Thompson, 1985, 1986a, 1994c) have been increasingly favored over

omnibus tests. And regression and related techniques within the GLM

family have been increasingly employed.

Improved analytic choices have partially been a function of


growing researcher awareness that:

2. The researcher's fundamental

task in deriving defensible

results is to employ an analytic model that matches

the

researcher's (too often implicit) model of reality.

This growing awareness can largely be traced to a seminal article
written by Jacob Cohen (1968, p. 426).

Theory

Cohen (1968) noted that ANOVA and ANCOVA

are special cases of

multiple regression analysis, and argued that in

this realization

"lie possibilities for more relevant and

therefore more powerful

exploitation of research data." Since that time researchers have
increasingly recognized that conventional multiple regression
analysis of data as they were initially collected (no conversion of
intervally scaled independent variables into dichotomies or
trichotomies) does not discard information or distort reality, and
that the "general linear model"

...can be used equally well in experimental or non-experimental
research. It can handle continuous and categorical variables. It
can handle two, three, four, or more independent variables...
Finally, as we will abundantly show, multiple regression
analysis can do anything the analysis of variance
does--sums of squares, mean squares, F ratios--and
more. (Kerlinger & Pedhazur, 1973, p. 3)

Discarding variance is generally not good research

practice.

As Kerlinger (1986) explained,

...partitioning a continuous variable into a


dichotomy or trichotomy throws information

away...

To reduce a set of values with a relatively wide

range to a dichotomy is to reduce its variance and

thus its possible correlation with other variables.

A good rule of research data analysis, therefore, is: Do not
reduce continuous variables to partitioned variables (dichotomies,
trichotomies, etc.) unless compelled to do so by circumstances or
the nature of the data (seriously skewed, bimodal, etc.).
(p. 558, emphasis in original)

Kerlinger (1986, p. 558) noted that variance is the "stuff" on
which all analysis is based. Discarding variance by categorizing
intervally-scaled variables amounts to the "squandering of
information" (Cohen, 1968, p. 441). As Pedhazur (1982, pp. 452-453)
emphasized,

Categorization of attribute variables is

all too

frequently resorted to in the social sciences....

It

is possible that some of the conflicting evidence

in

the research literature of a given

area may be

attributed to the practice of categorization

of

continuous variables.... Categorization leads

to a

loss of information,

and consequently to a

less

sensitive analysis.

Some researchers may be prone to categorizing

continuous

variables and overuse of ANOVA because they

unconsciously and

erroneously associate ANOVA with the power of experimental

designs.

As I have noted previously,


Even most experimental studies invoke intervally scaled "aptitude"
variables (e.g., IQ scores in a study with academic achievement as
a dependent variable), to conduct the aptitude-treatment
interaction (ATI) analyses recommended so persuasively by Cronbach
(1957, 1975) in his 1957 APA Presidential address. (Thompson,
1993a, pp. 7-8)

Thus, many researchers employ interval predictor

variables, even in

experimental designs, but these same researchers

too often convert

their interval predictor variables to nominal

scale merely to

conduct OVA analyses.

It is true that experimental designs allow

causal inferences

and that ANOVA is appropriate for

many experimental designs.

However, it is not therefore true that doing

an ANOVA makes the

design experimental and thus allows causal

inferences.

Humphreys (1978, p. 873, emphasis added)

noted that:

The basic fact is that a measure of individual differences is not
an independent variable [in an experimental design], and it does
not become one by categorizing the scores and treating the
categories as if they defined a variable under experimental
control in a factorially designed analysis of variance.

Similarly, Humphreys and Fleishman (1974, p. 468) noted that
categorizing variables in a nonexperimental design using an ANOVA
analysis "not infrequently produces in both the investigator and
his audience the illusion that he has experimental control over the


independent variable. Nothing could be

more wrong." Because within

the general linear model all analyses are correlational,

and it is

the design and not the analysis that yields the capacity

to make

causal inferences, the practice of converting intervally-scaled

predictor variables to nominal scale so that ANOVA

and other OVAs

(i.e., ANCOVA, MANOVA, MANCOVA) can be conducted is inexcusable,

at

least in most cases.

As Cliff (1987, p. 130, emphasis added) noted, the practice

of

discarding variance on intervally-scaled predictor variables

to

perform OVA analyses creates problems in almost all

cases:

Such divisions are not infallible; think of

the

persons near the borders. Some who should be highs

are actually classified as lows, and vice versa. In

addition, the "barely highs" are classified the

same

as the "very highs," even though they are different.

Therefore, reducing a reliable variable to a dichotomy [or a
trichotomy] makes the variable more unreliable, not less.

In such cases,

it is the reliability of the dichotomy that

we

actually analyze, and not the reliability of the highly-reliable,

intervally-scaled data that we originally collected, which

impact

the analysis we are actually conducting.

Heuristic Examples for Three Possible Cases

When we convert an intervally-scaled independent variable into
a nominally-scaled way in service of performing an OVA analysis, we
are implicitly invoking a model of reality with two strict
assumptions:


1. all the participants assigned to a given level

of the way (or

"factor") are the same, and

2. all the participants assigned to different levels

of the way

are different.

For example, if we have a normal distribution of IQ

scores, and we

use scores of 90 and 110 to trichotomize our interval data,

we are

saying that:

1. the 2 people in the High IQ group with IQs of

111 and 145 are

the same, and

2. the 2 people in the Low and Middle IQ

groups with IQs of 89

and 91, respectively, are different.
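The two implicit claims can be made concrete with a small sketch. The function name `iq_group` and the treatment of scores falling exactly on a cutoff (assigned to the Middle group) are assumptions introduced for illustration; the text specifies only the cutoffs of 90 and 110.

```python
def iq_group(iq: float, low_cut: float = 90, high_cut: float = 110) -> str:
    """Trichotomize an IQ score; scores exactly on a cutoff go to 'Middle' (assumed)."""
    if iq < low_cut:
        return "Low"
    if iq > high_cut:
        return "High"
    return "Middle"


# The four hypothetical people named in the text.
for iq in (111, 145, 89, 91):
    print(iq, iq_group(iq))

# A 34-point difference is erased, while a 2-point difference is preserved.
print(iq_group(111) == iq_group(145), iq_group(89) == iq_group(91))
```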

Whether our decision to convert our intervally-scaled

data to

nominal scale is appropriate depends entirely

on the research

situation. There are three possible situations.

Table 3

presents heuristic data

illustrating the three

possibilities. The measured/observed outcome variable

in all three

cases is Y.

INSERT TABLE 3 ABOUT HERE.

Case #1: No harm, no foul. In case #1 the intervally-scaled

variable X1 is re-expressed as a trichotomy in

the form of variable

X1'.

Assuming that the standard

error of the measurement

is

something like 3 or 6, the conversion in this

instance does not

seem problematic, because it appears reasonable to

assume that:

1. all the participants assigned to

a given level of the way are

the same, and

2. all the participants assigned to different

levels of the way


are different.

Case #2: Creating variance where there is none. Case #2 again

assumes that the standard error of the measurement is something

like 3

to 6 for the hypothetical scores. Here

none of the 21

participants appear to be different as regards their

scores on

Table 3 variable X2, so assigning the participants to

three groups

via variable X2' seems to create differences where

there are none.

This will generate analytic results in which the

analytic model

does not honor our model of reality, which in turn compromises

the

integrity of our results.

Some may protest that no real researcher would

ever, ever

assign people to groups where there

are, in fact, no meaningful

differences among the participants as regards their

scores on an

independent variable. But consider a recent local dissertation

that

involved administration of a depression

measure to children; based

on scores on this measure the children were assigned to

one of

three depression groups.

Regrettably,

these children were all

apparently happy and well-adjusted.

It is especially interesting that the highest

score

on this [depression] variable... was apparently 3.43

(p. 57).

As... [the student] acknowledged, the PNID

authors themselves recommend a cutoff

score of 4 for

classifying subjects as being severely depressed.

Thus, the highest score in...

[the] entire sample

appeared to be less than the minimum cutoff

score

suggested by the test's own authors!

(Thompson,

1994a, p. 24)


Case #3: Discarding variance, distorting distribution

shape.

Alternatively, presume that the intervally-scaled independent
variable (e.g., an aptitude way in an ATI design) is somewhat
normally distributed. Variable X3 in Table 3 can be used to
illustrate the potential consequences of re-expressing this
information in the form of a nominally-scaled variable such as X3'.

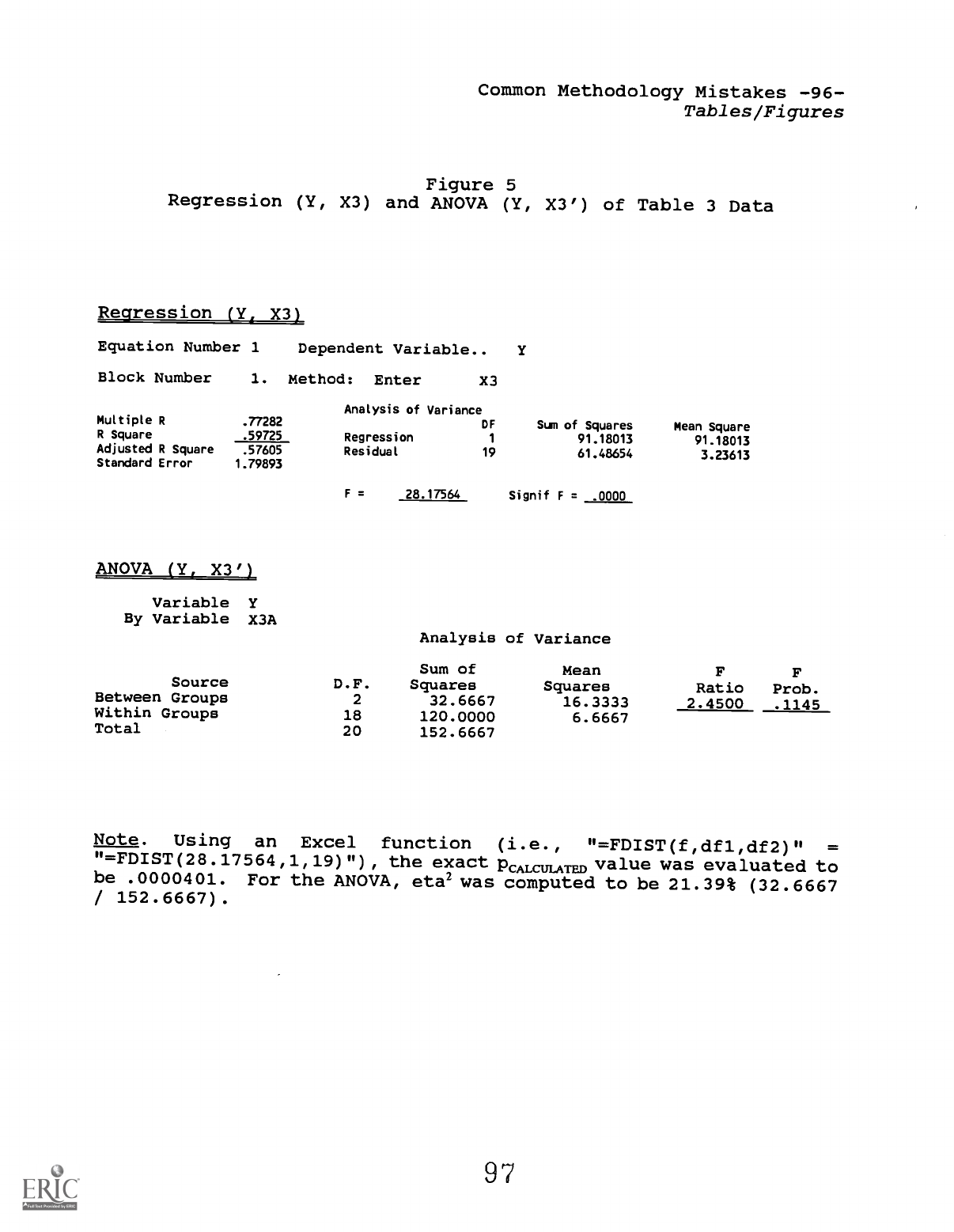

Figure 5 presents the SPSS output from analyzing the data in
both unmutilated (i.e., X3) and mutilated (i.e., X3') form. In
unmutilated form, the results are statistically significant
(pCALCULATED = .00004) and the R2 effect size is 59.7%. For the
mutilated data, the results are not statistically significant at a
conventional alpha level (pCALCULATED = .1145) and the eta2 effect
size is 21.4%, roughly a third of the effect for the regression
analysis.

INSERT FIGURE 5 ABOUT HERE.
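The loss of effect that accompanies categorization can be sketched with a toy comparison. The nine scores below are hypothetical and are NOT the Table 3 data, so the resulting values will not match Figure 5; the cutoffs used by `trichotomize` are likewise arbitrary assumptions. The sketch simply computes r2 for the predictor as collected and eta2 for the same predictor after it has been cut into three groups.

```python
from statistics import fmean


def r_squared(x, y):
    """Squared Pearson correlation between two equal-length score lists."""
    mx, my = fmean(x), fmean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy ** 2 / (sxx * syy)


def eta_squared(groups):
    """SS_between / SS_total for a list of score lists, one list per group."""
    all_scores = [s for g in groups for s in g]
    grand = fmean(all_scores)
    ss_total = sum((s - grand) ** 2 for s in all_scores)
    ss_between = sum(len(g) * (fmean(g) - grand) ** 2 for g in groups)
    return ss_between / ss_total


def trichotomize(score):
    """Arbitrary illustrative cutoffs: 1-3 -> 0, 4-6 -> 1, 7-9 -> 2."""
    return 0 if score <= 3 else (1 if score <= 6 else 2)


# Hypothetical predictor and outcome scores (not the Table 3 data).
x = [1, 2, 3, 4, 5, 6, 7, 8, 9]
y = [2, 1, 3, 3, 5, 7, 7, 9, 8]

groups = {0: [], 1: [], 2: []}
for xi, yi in zip(x, y):
    groups[trichotomize(xi)].append(yi)

r2 = r_squared(x, y)                      # predictor analyzed as collected
eta2 = eta_squared(list(groups.values()))  # predictor analyzed as a trichotomy
print(f"r2 = {r2:.3f}, eta2 after trichotomizing = {eta2:.3f}")
```

Even in this nearly linear toy case the trichotomized analysis recovers less of the outcome variance than the regression on the original scores.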

Criticisms of Statistical Significance Tests

Tenor of Past Criticism

The last several decades have delineated

an exponential growth

curve in the decade-by-decade criticisms

across disciplines of

statistical testing practices (Anderson,

Burnham & Thompson, 1999).

In their historical summary dating back to the

origins of these

tests, Huberty and Pike (in press) provide

a thoughtful review of

how we got to where we're at. Among the

recent commentaries on

statistical testing practices, I prefer Cohen

(1994), Kirk (1996),

Rosnow and Rosenthal (1989), Schmidt (1996),

and Thompson (1996).


Among the classical criticisms, my favorites are Carver (1978),

Meehl (1978), and Rozeboom (1960).

Among the more thoughtful works advocating statistical
testing, I would cite Cortina and Dunlap (1997), Frick (1996), and

especially Abelson (1997). The most balanced and comprehensive

treatment is provided by Harlow, Mulaik and Steiger (1997) (for

reviews of this book, see Levin, 1998 and Thompson, 1998c).

My purpose here is not to further articulate the various
criticisms of statistical significance tests. My own recent
thinking is elaborated in the several reports enumerated in Table 4.

The focus here is on what should be the future. Therefore,

criticisms of statistical tests are only briefly summarized in the

present treatment.

INSERT TABLE 4 ABOUT HERE.

But two quotations may convey the tenor of some of these

commentaries. Rozeboom (1997) recently argued that

Null-hypothesis significance testing is surely the

most bone-headedly

misguided procedure ever

institutionalized in the rote training of science

students...

[I]t

is a sociology-of-science

wonderment

that this statistical

practice has

remained so unresponsive to criticism...

(p. 335)

And Tryon (1998) recently lamented,

[T]he fact that statistical experts and
investigators publishing in the best journals cannot
consistently interpret the results of these analyses


is extremely disturbing. Seventy-two years of education have
resulted in minuscule, if any,
progress toward correcting this situation.

It is

difficult to estimate the handicap that widespread,

incorrect, and intractable use of a primary data

analytic method has on a scientific discipline, but

the deleterious effects are doubtless substantial...

(p. 796)

Indeed, empirical studies confirm that many researchers do

not

fully understand the logic of their statistical tests (cf. Mittag,

1999; Nelson, Rosenthal & Rosnow, 1986; Oakes, 1986; Rosenthal

&

Gaito,

1963; Zuckerman, Hodgins, Zuckerman & Rosenthal,

1993).

Misconceptions are taught even in widely-used statistics

textbooks

(Carver, 1978).

Brief Summary of Four Criticisms of Common Practice

Statistical significance tests evaluate the probability

of

obtaining sample statistics

(e.g.,

means, medians,

correlation

coefficients) that diverge as far from the null hypothesis

as the

sample statistics, or further, assuming that the null hypothesis

is

true in the population, and given the sample size (Cohen,

1994;

Thompson, 1996). The utility of these estimates has been questioned

on various grounds, four of which are briefly summarized here.

Conventionally, Statistical Tests Assume "Nil" Null
Hypotheses. Cohen (1994) defined a "nil" null hypothesis as a null
specifying no differences (e.g., H0: SD1 - SD2 = 0) or zero
correlations (e.g., R2 = 0).

Researchers must specify some null

hypothesis, or otherwise the probability of the

sample statistics


is completely indeterminate (Thompson,

1996)--infinitely many

values become equally plausible. But "nil" nulls

are not required.

Nevertheless, "as almost universally used, the null in

H0 is taken

to mean nil, zero" (Cohen, 1994, p. 1000).

Some researchers employ nil nulls because statistical

theory

does not easily accommodate the testing of

some non-nil nulls. But

probably most researchers employ nil nulls because

these nulls have

been unconsciously accepted as traditional, because

these nulls can

be mindlessly formulated without consulting previous

literature, or

because most computer software defaults to tests

of nil nulls

(Thompson, 1998c, 1999a). As Boring (1919) argued

80 years ago, in

his critique of the mindless

use of statistical tests titled,

"Mathematical vs. scientific significance,"

The case is one of many where statistical ability,

divorced

from a

scientific

intimacy

with

the

fundamental observations, leads nowhere.

(p. 338)

I believe that when researchers presume

a nil null is true in

the population, an untruth is posited. As

Meehl (1978, p.

822)

noted, "As I believe is generally recognized by

statisticians today

and by thoughtful social scientists, the [nil]

null hypothesis,

taken literally, is always false."

Similarly, Hays (1981, p. 293)

pointed out that "[t]here is surely nothing

on earth that is

completely independent of anything else [in the population].

The

strength of association may approach

zero, but it should seldom or

never be exactly zero." Roger Kirk (1996) concurred, noting

that:

It is ironic that a ritualistic adherence to

null

hypothesis significance testing has led

researchers


to focus on controlling the Type I error that cannot
occur because all null hypotheses are false.
(p. 747, emphasis added)

A pCALCULATED value computed on the foundation of a false premise
is inherently of somewhat limited utility. As I have noted
previously, "in many contexts the use of a 'nil' hypothesis as the
hypothesis we assume can render me largely disinterested in whether
a result is 'nonchance'" (Thompson, 1997a, p. 30).

Particularly egregious is the use of "nil" nulls

to test

measurement hypotheses,

where wildly non-nil results are both

anticipated and demanded. As Abelson (1997) explained,

And when a reliability coefficient is declared to be
nonzero, that is the ultimate in stupefyingly
vacuous information. What we really want to know is
whether an estimated reliability is .50'ish or .80'ish.
(p. 121)

Statistical Tests Can be a Tautological Evaluation

of Sample

Size. When "nil" nulls are used, the null will always

be rejected

at some sample size. There are infinitely

many possible sample

effects. Given this, the probability of realizing

an exactly zero

sample effect is infinitely small. Therefore, given

a "nil" null,

and a non-zero sample effect, the null hypothesis will

always be

rejected at some sample size!

Consequently, as Hays (1981) emphasized, "virtually

any study

can be made to show significant results

if

one uses enough

subjects" (p. 293).

This means that

Statistical significance testing can involve a


tautological logic in which tired researchers,

having collected data from hundreds of subjects,

then conduct a statistical test to evaluate whether

there were a lot of subjects, which the researchers

already know, because they collected the data and

know they're tired. (Thompson, 1992c, p. 436)
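The dependence of pCALCULATED on sample size can be sketched for one simple case. The sketch assumes a two-group z test with equal group sizes and a known common standard deviation, so that the test statistic is d * sqrt(n / 2); the function name `two_group_p_value` and the fixed standardized difference of 0.05 are illustrative assumptions, not values from any study cited here.

```python
from math import sqrt
from statistics import NormalDist


def two_group_p_value(d: float, n_per_group: int) -> float:
    """Two-tailed p for a standardized mean difference d (z test, equal n)."""
    z = abs(d) * sqrt(n_per_group / 2.0)
    return 2.0 * (1.0 - NormalDist().cdf(z))


# The same tiny, non-zero effect evaluated at ever larger sample sizes.
sample_sizes = (10, 100, 1_000, 10_000, 100_000)
p_values = [two_group_p_value(0.05, n) for n in sample_sizes]
for n, p in zip(sample_sizes, p_values):
    print(f"n per group = {n:>7}: p = {p:.6f}")
```

The effect never changes, yet the "nil" null is eventually rejected once enough participants have been sampled.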

Certainly this dynamic is well known, if it is just

as widely

ignored. More than 60 years ago, Berkson (1938)

wrote an article

titled, "Some difficulties of interpretation encountered

in the

application of the chi-square test." He noted that

when working

with data from roughly 200,000 people,

an observant statistician who has had any

considerable experience with applying the chi-square

test repeatedly will agree with my statement that,

as a matter of observation, when the numbers in the

data are quite large,

the P's tend to come out

small... [W]e know in advance the P that will result

from an application of a chi-square test to

a large

sample... But since the result of the former is

known, it is no test at all!

(pp. 526-527)

Some 30 years ago, Bakan (1966) reported that, "The author

had

occasion to run a number of tests of significance

on a battery of

tests collected on about 60,000 subjects from all

over the United

States.

Every

test came out significant"

(p.

425).

Shortly

thereafter, Kaiser (1976) reported not being surprised

when many

substantively trivial

factors were found to be

statistically

significant when data were available from 40,000

participants.


Because Statistical Tests Assume Rather than Test the
Population, Statistical Tests Do Not Evaluate Result Replicability.
Too many researchers incorrectly assume, consciously or
unconsciously, that the p values calculated in statistical
significance tests evaluate the probability that results will
replicate (Carver, 1978, 1993).

But statistical tests do not

evaluate the probability that the sample statistics

occur in the

population as parameters (Cohen, 1994).

Obviously, knowing the probability of the sample is less

interesting than knowing the probability of the population. Knowing

the probability of population parameters would bear upon result

replicability, because we would then know something about the

population from which future researchers would also draw their

samples. But as Shaver (1993) argued so emphatically:

[A]

test of

statistical significance

is not an

indication of the probability that a result would be

obtained upon replication of the study.... Carver's

(1978) treatment should have dealt a death blow to

this fallacy....

(p. 304)

And so Cohen (1994) concluded that the statistical significance

test "does not tell us what we want to know, and

we so much want to

know what we want to know that, out of desperation,

we nevertheless

believe that it does!" (p. 997).

Statistical Significance Tests Do Not Solely Evaluate Effect
Magnitude. Because various study features (including score
reliability) impact calculated p values, pCALCULATED cannot be used as
a satisfactory index of study effect size. As I have noted


elsewhere,

The calculated p values in a given study are a function of
several study features, but are particularly influenced by the
confounded, joint influence of study sample size and study effect
sizes. Because p values are confounded indices, in theory 100
studies with varying sample sizes and 100 different effect sizes
could each have the same single pCALCULATED, and 100 studies with
the same single effect size could each have 100 different values
for pCALCULATED. (Thompson, 1999a, pp. 169-170)

The recent fourth edition of the American Psychological
Association style manual (APA, 1994) explicitly acknowledged that
p values are not acceptable indices of effect:

Neither of the two types of probability values [statistical
significance tests] reflects the importance or magnitude of an
effect because both depend on sample size... You are [therefore]
encouraged to provide effect-size information. (APA, 1994, p. 18,
emphasis added)

In short, effect sizes should be reported in

every quantitative

study.
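The confounding of sample size and effect size within pCALCULATED can be sketched as follows. As a simplifying assumption, the sketch again uses a two-group z test with equal group sizes and a known common standard deviation; the function names, the target z of 1.96, and the fixed effect of 0.2 are all hypothetical choices made for illustration.

```python
from math import sqrt
from statistics import NormalDist


def two_group_p_value(d: float, n_per_group: int) -> float:
    """Two-tailed p for a standardized mean difference d (z test, equal n)."""
    z = abs(d) * sqrt(n_per_group / 2.0)
    return 2.0 * (1.0 - NormalDist().cdf(z))


def effect_for_z(z: float, n_per_group: int) -> float:
    """Standardized difference that yields test statistic z at this sample size."""
    return z * sqrt(2.0 / n_per_group)


# Different sample sizes and different effects, one and the same p value.
same_p = []
for n in (10, 100, 1_000, 10_000):
    d = effect_for_z(1.96, n)
    same_p.append((n, d, two_group_p_value(d, n)))
    print(f"n = {n:>6}, d = {d:.4f}, p = {same_p[-1][2]:.4f}")

# One and the same effect, different sample sizes, different p values.
fixed_effect = [(n, two_group_p_value(0.2, n)) for n in (10, 100, 1_000)]
for n, p in fixed_effect:
    print(f"n = {n:>6}, d = 0.2000, p = {p:.6f}")
```

Because the p value alone cannot distinguish these situations, an effect size must be reported alongside it.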

The "Bootstrap"

Explanation of the "bootstrap" will provide

a concrete basis

for facilitating genuine understanding

of what statistical tests do

(and do not) do. The "bootstrap" has

been so named because this

statistical procedure represents

an attempt to "pull oneself up"

on


one's own, using one's sample data, without external

assistance

from a theoretically-derived sampling distribution.
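The resampling idea can be sketched in a few lines, under the common assumption that the bootstrap draws resamples of size n with replacement from the observed sample. The ten scores, the function name `bootstrap_means`, the number of resamples, and the seed are all hypothetical choices for illustration.

```python
import random
from statistics import fmean, stdev


def bootstrap_means(sample, n_resamples=2000, seed=0):
    """Means of resamples drawn with replacement, each the size of the sample."""
    rng = random.Random(seed)  # fixed seed so the sketch is reproducible
    n = len(sample)
    return [fmean(rng.choices(sample, k=n)) for _ in range(n_resamples)]


# Hypothetical sample of ten scores.
sample = [12, 15, 9, 20, 14, 11, 17, 13, 16, 10]
boot = bootstrap_means(sample)

# The spread of the resampled means serves as an empirical standard error.
boot_se = stdev(boot)
print(f"sample mean = {fmean(sample):.2f}, bootstrap SE of the mean = {boot_se:.2f}")
```

No theoretical sampling distribution is consulted here; the sample itself supplies the estimate of how much the mean fluctuates from sample to sample.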

Related books have been offered by Davison and Hinkley

(1997),

Efron and Tibshirani

(1993), Manly

(1994),

and Sprent

(1998).

Accessible shorter conceptual treatments have been

presented by

Diaconis and Efron (1983) and Thompson (1993b).

I especially and

particularly recommend the remarkable book by

Lunneborg (1999).

Software to invoke the bootstrap is available in most
structural equation modeling software (e.g., EQS, AMOS).

Specialized bootstrap software for microcomputers

(e.g., S Plus,

SC, and Resampling Stats) is also readily available.

The Sampling Distribution

Key to understanding statistical significance tests is

understanding the sample distribution and distinguishing

the (a)

sampling distribution from (b) the population

distribution and (c)

the score distribution. Among the better book

treatments is one

offered by Hinkle, Wiersma and Jurs (1998,

pp. 176-178). Shorter

treatments include those by Breunig (1995), Mittag

(1992), and

Rennie (1997).

The population distribution consists of the

scores of the N

entities

(e.g.,

people,

laboratory mice)

of

interest to the

researcher, regarding whom the researcher wishes to generalize.

In

the social sciences, many researchers deem the population

to be

infinite.

For example,

an educational researcher may hope to

generalize about the effects of

a teaching method on all human

beings across time.

Researchers typically describe the population by

computing or


estimating characterizations of the population

scores (e.g., means,

interquartile ranges), so that the population can be

more readily

comprehended. These characterizations of the population are called

"parameters," and are conventionally symbolized using Greek letters

(e.g., μ for the population score mean, σ for the population score

standard deviation).

The sample distribution also consists of scores, but only

a

subsample of n scores from the population. The characterizations of

the sample scores are called "statistics," and are conventionally

represented by Roman letters (e.g., M, SD, r). Strictly speaking,

statistical significance tests evaluate the probability of

a given

set of statistics occurring, assuming that the sample

came from a

population exactly described by the null hypothesis, given the

sample size.

Because each sample is only a subset of the population scores,

the sample does not exactly reproduce the population distribution.

Thus, each set of sample scores contains some idiosyncratic
variance, called "sampling error" variance, much like each person
has idiosyncratic personality features. [Of course, sampling error
variance should not be confused with either "measurement error"
variance or "model specification" error variance (sometimes modeled
as the "within" or "residual" sum of squares in univariate
analyses) (Thompson, 1998a).]

Of course, like people, sampling

distributions may differ in how much idiosyncratic "flukiness" they

each contain.
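
The distinction between parameters and statistics, and the idea of sampling error, can be illustrated with a small simulation. The following Python sketch is only an illustration under stated assumptions: the finite population of 1,000 simulated scores, the sample size of 25, and the function name draw_sample_means are all hypothetical choices made for this example, not part of any analysis reported here.

```python
import random
import statistics


def draw_sample_means(population, n, n_samples, seed=0):
    """Draw n_samples random samples of size n (without replacement)
    and return the mean of each sample."""
    rng = random.Random(seed)
    return [statistics.mean(rng.sample(population, n)) for _ in range(n_samples)]


# Hypothetical finite population of N = 1,000 scores (illustrative only).
pop_rng = random.Random(42)
population = [pop_rng.gauss(100, 15) for _ in range(1000)]

mu = statistics.mean(population)       # parameter: population mean
sigma = statistics.pstdev(population)  # parameter: population standard deviation

sample_means = draw_sample_means(population, n=25, n_samples=5)
for m in sample_means:
    # Each deviation M - mu reflects sampling error only, because these
    # simulated scores contain no measurement error.
    print(f"M = {m:6.2f}   M - mu = {m - mu:+5.2f}")
```

Each sample mean M differs somewhat from the parameter μ, and the size of the discrepancy varies from sample to sample; that variation is what the text calls sampling error.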

Statistical tests evaluate the probability that the deviation of the sample statistics from the assumed population parameters is due to sampling error. That is, statistical tests evaluate whether random sampling from the population may explain the deviations of the sample statistics from the hypothesized population parameters.

However, very few researchers employ random samples from the population. Rokeach (1973) was an exception; being a different person living in a different era, he was able to hire the Gallup polling organization to provide a representative national sample for his inquiry. But in the social sciences fewer than 5% of studies are based on random samples (Ludbrook & Dudley, 1998). On the basis that most researchers do not have random samples from the population, some (cf. Shaver, 1993) have argued that statistical significance tests should almost never be used. However, most researchers presume that statistical tests may be reasonable if there are grounds to believe that the score sample of convenience is expected to be reasonably representative of a population.

In order to evaluate the probability that the sample scores came from a population of scores described exactly by the null hypothesis, given the sample size, researchers typically invoke the sampling distribution. The sampling distribution does not consist of scores (except when the sample size is one). Rather, the sampling distribution consists of estimated parameters, each computed for samples of exactly size n, so as to model the influences of random sampling error on the statistics estimating the population parameters, given the sample size.
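
For a very small finite population, the sampling distribution can be enumerated exhaustively. The Python sketch below is a minimal illustration, assuming a hypothetical population of six scores and sampling without replacement; the function name sampling_distribution is likewise invented for this example.

```python
from itertools import combinations
import statistics


def sampling_distribution(population, n, statistic=statistics.mean):
    """Return the statistic for every possible sample of size n,
    drawn without replacement from the population."""
    return [statistic(sample) for sample in combinations(population, n)]


# Hypothetical population of N = 6 scores (illustrative only).
population = [2, 4, 6, 8, 10, 12]

dist_n1 = sampling_distribution(population, n=1)
dist_n2 = sampling_distribution(population, n=2)

# With n = 1, each "sample mean" is simply one population score.
print(sorted(dist_n1))

# With n = 2, the entries are statistics (means), not scores.
print(len(dist_n2), sorted(dist_n2))

# The sample means spread less widely than the scores themselves.
print(statistics.pstdev(population), statistics.pstdev(dist_n2))
```

With n = 1 the sampling distribution reproduces the population scores, which is the exception noted in the text. With n = 2 the entries are sample means, and they vary less than the scores do.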

This sampling distribution is then used to estimate the probability of the observed sample statistic(s) occurring due to sampling error. For example, we might take the population to be infinitely many IQ scores normally distributed with a mean, median, and mode of 100 and a standard deviation of 15. Perhaps we have drawn a sample of 10 people and computed the sample median (not all hypotheses have to be about means!) to be 110. We wish to know whether our statistic or one higher is unlikely, assuming the sample came from the posited population.
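
Because an infinite population cannot be sampled exhaustively, one way to approximate this probability is by Monte Carlo simulation. The Python sketch below is offered only as an illustration: the number of simulated samples (20,000), the random seed, and the function name simulate_medians are assumptions made for this example, and the resulting proportion is an estimate that will vary slightly with those choices.

```python
import random
import statistics


def simulate_medians(n, n_samples, mu=100.0, sigma=15.0, seed=1999):
    """Approximate the sampling distribution of the median by drawing
    n_samples random samples of size n from a normal population."""
    rng = random.Random(seed)
    return [
        statistics.median(rng.gauss(mu, sigma) for _ in range(n))
        for _ in range(n_samples)
    ]


# Posited population: normal, mean 100, SD 15; sample size n = 10.
medians = simulate_medians(n=10, n_samples=20000)

observed = 110
# Proportion of simulated medians at least as large as the observed median.
p_upper = sum(m >= observed for m in medians) / len(medians)
print(f"Estimated P(median >= {observed}) = {p_upper:.3f}")
```

The proportion p_upper estimates how often a sample median of 110 or higher would arise from sampling error alone, if the sample truly came from the posited population.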

We can make this determination by drawing all possible samples of size 10 from the population, computing the median of each sample, and then creating the distribution of these statistics (i.e., the sampling distribution). We then examine the sampling